Readtime: 55 mints

Table of Contents

Introduction

Compressed Model Architectures

Quantization

Pruning and Sparsification

Knowledge Distillation

On-Device Inference Techniques

Frameworks and Runtimes (Open-Source vs Proprietary)

Hardware Acceleration

Real-Time Deployment Strategies

Latency Optimizations

Edge-Cloud Collaboration

Industry Applications

Healthcare

Manufacturing & Industrial IoT

Automotive

Finance

Mobile and IoT Case Studies

Budget Considerations

Introduction

Edge deployment of machine learning models – especially large language models (LLMs) – has gained significant momentum in 2024–2025. “Edge” here refers to running models on local devices (smartphones, embedded/IoT devices, on-premise servers, etc.) rather than in the cloud. Key motivations include lower latency, since data doesn’t traverse a network, and data privacy, as sensitive data can be processed locally. However, deploying LLMs on-device is challenging due to their enormous size and computation needs – often hundreds of times larger than traditional mobile models (Large Language Models On-Device with MediaPipe and TensorFlow Lite).

Recent research and engineering efforts have tackled these challenges via model compression, specialized on-device inference frameworks, and novel real-time strategies. This report reviews the state-of-the-art (2024–2025) in edge ML deployment, covering techniques like quantization, pruning, and distillation, as well as hardware/software solutions for different industries and budgets.

Compressed Model Architectures

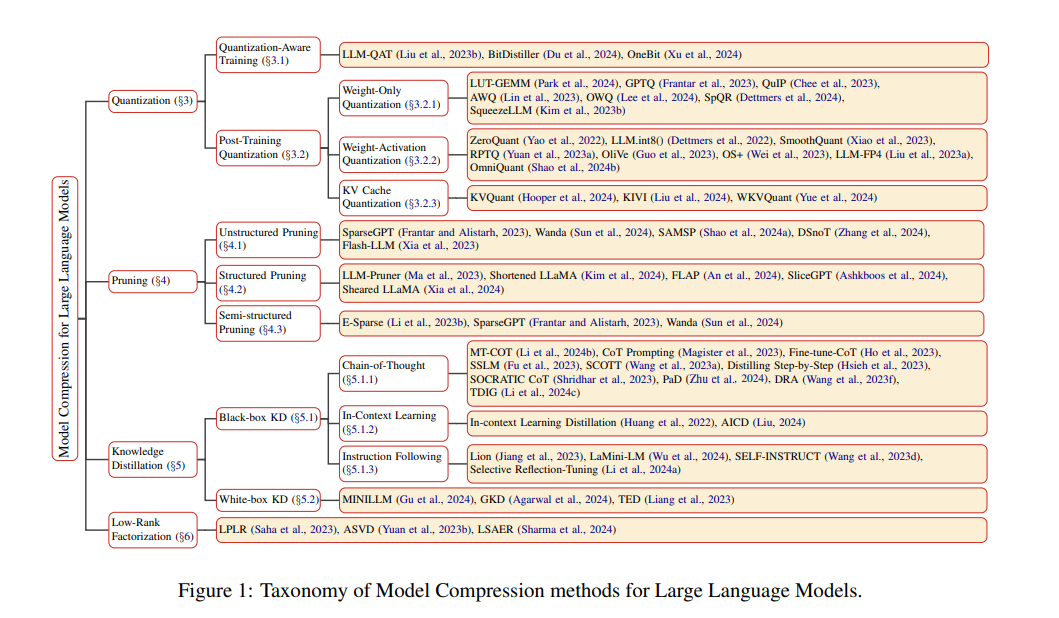

One major avenue for enabling edge deployment is compressing the model architecture so it uses less memory and compute. In 2024, research has advanced techniques such as quantization (reducing numerical precision of model weights/activations), pruning (removing redundant weights or neurons), and knowledge distillation (training a smaller “student” model to mimic a large model). These methods can often be combined for maximum effect. The goal is to shrink model size and inference cost while preserving as much accuracy as possible.

Quantization

Quantization reduces model size by representing parameters with lower-bit precision (e.g. 8-bit or 4-bit integers instead of 16- or 32-bit floats). This can cut memory usage and increase speed drastically at the cost of a small accuracy drop. For example, a recent deployment study quantized a 16-bit, 8-billion parameter LLM down to 4-bit, shrinking the model from ~16 GB to ~4 GB and significantly speeding up inference (Optimize LLMs for Efficiency & Sustainability | PyTorch). The authors note this was the “simplest and quickest” optimization, trading a bit of accuracy for a 4× model size reduction and faster generation.

Current research pushes quantization to extreme low bits without losing too much accuracy. Sub-4-bit quantization has been demonstrated with methods like BitDistiller (Du et al. 2024), which merges quantization-aware training with self-distillation to maintain performance even at 3–4 bit weights (A Survey on Model Compression for Large Language Models). BitDistiller uses asymmetric quantization and a special distillation loss to achieve “superior results” at ultra-low precision. Other advanced schemes like Activation-Aware Weight Quantization (AWQ) adapt the quantization based on activation distribution to minimize errors (AWQ won a best paper award at MLSys 2024) (AWQ: Activation-aware Weight Quantization for On-Device LLM ...) . These approaches find that not all weights are equally sensitive; by carefully handling outlier features, they can quantize many LLMs to 4-bit or even 3-bit with minimal perplexity increase.

An important point is that quantization can target not just the model weights but also the activations and transient states. Large LLMs use key–value (KV) caches to store past token embeddings for context, which can grow huge for long prompts. Research in 2024 showed that quantizing these caches can massively save memory. For instance, KIVI (Liu et al. 2024) found an optimal way to quantize keys per-channel and values per-token, allowing the KV cache to be compressed to just 2-bit precision with negligible loss and no fine-tuning needed. Similarly, WKVQuant introduced a two-dimensional quantization for KV caches (along with weight quantization), yielding memory savings comparable to full weight+activation quantization while nearly matching the accuracy of weight-only quantization. These innovations enable long-context LLMs on devices by keeping memory footprint in check.

Quantization is also being built into deployment frameworks. For example, NVIDIA’s TensorRT-LLM library in 2024 added support for FP8 and INT4 weight quantization (including the AWQ method) as built-in options, alongside custom kernels for efficient inferencing (NVIDIA TensorRT-LLM Now Supports Recurrent Drafting for Optimizing LLM Inference).

Overall, 2024’s progress shows that careful 4-bit quantization is practical for edge LLMs – significantly reducing model size (4× or more) and often increasing throughput, with only minor accuracy degradation.

Pruning and Sparsification

Pruning removes weights or even entire neurons from the model that contribute little to outputs, creating a smaller sparse model. Unlike quantization (which shrinks each weight’s size), pruning reduces the number of weights. There are several levels: unstructured pruning deletes individual weight values, structured pruning removes higher-level structures (e.g. entire neurons, attention heads, or layers), and semi-structured approaches enforce a pattern (e.g. prune 2 out of every 4 weights) to retain some regularity for hardware efficiency (A Survey on Model Compression for Large Language Models).

Unstructured pruning can achieve very high compression at the cost of irregular memory access. Recent works show that even very large LLMs can be pruned ~50–90% sparsely with limited loss. For example, the SparseGPT method pruned 50% of GPT-175B’s weights with virtually no perplexity increase (actually a slight improvement of -0.14). Another 2024 method, DSnoT, went as far as 90% unstructured sparsity on a 65B model, though perplexity degraded more noticeably. The downside is that such irregular sparse models require specialized sparse matrix libraries or hardware to realize speed-ups, since typical CPUs/GPUs aren’t optimized for random weight masks.

Structured pruning yields a smaller dense model that is easier to run. One notable 2024 result is SliceGPT (Ashkboos et al. 2024), which “slices” each weight matrix by removing entire rows and columns (effectively reducing the dimensionality) (SliceGPT: Compress Large Language Models by Deleting Rows ...). SliceGPT was able to remove ~25% of the parameters of LLaMA-2 70B while maintaining about 99% of the zero-shot task performance. Thanks to the reduced model size, the pruned model ran significantly faster – using only ~64% of the inference compute of the dense model on a 24GB GPU (36% speedup). Because the result is still a dense model (just smaller), it doesn’t need special sparse kernels, making deployment simpler. Other structured pruning efforts (e.g. Shortened LLaMA) have pruned ~30–35% of weights (removing some layers) albeit with a moderate perplexity hit. Structured and semi-structured pruning can also leverage hardware features: for instance, the Nvidia Ampere GPU architecture natively accelerates a 2:4 sparsity pattern (50% of weights pruned in each block of 4), giving up to 2× speedups. Techniques like E-Sparse (2024) apply this pattern to LLMs and saw about 1.5× runtime speedup with 2:4 sparsity.

In summary, pruning can meaningfully reduce model size for edge deployment. Unstructured pruning can push extremely high compression (e.g. halving or quartering model size), but structured pruning yields more deployable models that better translate to speed and memory gains on device. 2024’s “lottery ticket”-style findings (SliceGPT, etc.) suggest that many large models have significant redundancy – we can drop a quarter of parameters with minimal impact ([2401.15024] SliceGPT: Compress Large Language Models by Deleting Rows and Columns). For edge scenarios that can tolerate a tiny accuracy drop, pruning is a powerful tool, especially when combined with quantization (a pruned, 4-bit model might be orders of magnitude smaller than the original).

Knowledge Distillation

Knowledge distillation is another pillar of model compression, where a large “teacher” model’s behavior is used to train a smaller “student” model. The student model can learn to mimic the teacher’s outputs (logits or generated responses) on a large dataset, effectively inheriting some capabilities of the teacher despite having far fewer parameters. Distillation has been crucial for creating small LLMs that still perform well on complex tasks.

2024 saw advances in both black-box distillation (treating the teacher as an API only) and white-box distillation (using knowledge of the teacher’s internals). An example of black-box distillation is the LaMini-LM project ([2304.14402] LaMini-LM: A Diverse Herd of Distilled Models from Large-Scale Instructions). The authors compiled a diverse set of 2.58 million instruction-response pairs (many generated by GPT-3.5) covering broad topics, and fine-tuned a “herd” of smaller models (ranging from 100M up to a few billion parameters) on this data. The distilled models, called LaMini-LM, achieved performance comparable to much larger baselines while being much smaller in size. For instance, a 1.5B parameter LaMini-LM model can follow instructions fairly well, despite being distilled from a teacher with tens of billions of params. This demonstrates how companies are leveraging powerful closed models (like GPT-3.5) to bootstrap efficient models that run on consumer hardware.

On the white-box side, one notable work is MiniLLM (Gu et al., ICLR 2024). MiniLLM assumes access to a full teacher model and modifies the distillation objective itself. It argues that the standard distillation loss (which often uses forward Kullback–Leibler divergence) is suboptimal for generative models, because it can cause the student to overestimate low-probability tokens. Instead, MiniLLM uses a reverse-KL objective, which better aligns the student to the teacher’s distribution ([2306.08543] MiniLLM: Knowledge Distillation of Large Language Models). With some optimization tricks, they successfully distilled various large open-source LLMs into much smaller ones (120M up to 13B) that actually produced higher-quality, more stable long texts than baseline distilled models. In other words, by adjusting the training loss, the student’s generations had better precision and calibration according to their evaluations.

There are many other flavors of LLM distillation appearing: e.g. distilling specific capabilities like chain-of-thought reasoning (some works generate step-by-step rationales from the teacher and train the student to emulate that), or instruction tuning distillation where a generalist student is trained on the instructions+answers from a teacher to excel at following user instructions. All these share the goal of packing the “knowledge” or behavior of a 100B-scale model into a model 10× or more smaller. By 2024, it’s become feasible to get surprisingly strong performance out of models under ~7B parameters via distillation. For example, Microsoft’s Phi-3 series trains relatively compact models for on-device use: the latest Phi-3 mini has only 3.8B params but, through massive training on 3.3 trillion tokens, it scores 69% on the MMLU knowledge benchmark – not far off models like GPT-3.5 – and is competitive with some 7B+ parameter models (On-Device Language Models: A Comprehensive Review). Distillation and careful training are what make such small models punch above their weight.

In summary, compressed model architectures – through quantization, pruning, and distillation – are enabling LLMs to run in resource-constrained environments. By 2025 we have seen 4-bit multi-billion-parameter models running on smartphones and PCs, and sub-4-billion models that can handle complex language tasks. These techniques often work best in combination (e.g. a student model might be distilled and quantized). Compressed models form the foundation for on-device inference, but to actually deploy them efficiently, one also needs the right inference engines and hardware, which we discuss next.

On-Device Inference Techniques

Even a compressed model needs an optimized software and hardware stack to run smoothly on edge devices. In 2024, both open-source and proprietary players introduced improved frameworks, libraries, and runtime environments for on-device ML. Additionally, hardware vendors continued to innovate on AI accelerators and optimized chips for edge inference. This section covers the practical tools and platforms for on-device model execution.

Frameworks and Runtimes (Open-Source vs Proprietary)

Open-source frameworks for edge ML have matured greatly. TensorFlow Lite (TFLite) remains a popular choice for mobile and IoT deployment. It’s a lightweight runtime that takes a trained model (often compressed or converted to a special format) and runs it efficiently on ARM CPUs or specialized DSP/NPUs. Google announced in March 2024 an experimental MediaPipe LLM Inference API built on TFLite, to make running large language models on-device easier (Large Language Models On-Device with MediaPipe and TensorFlow Lite- Google Developers Blog). Google is also introducing Android 14’s new “AICore” service which can serve a small model (Gemini Nano) as a system service for apps, showing a trend toward OS-level support for on-device AI.

On the PyTorch side, 2024’s PyTorch 2.x series improved support for edge inference as well. PyTorch can directly run on mobile (via PyTorch Mobile/Metal backend on iOS or Android) and has quantization toolkits built-in. A PyTorch blog in Feb 2025 demonstrated deploying a Llama-based chatbot on an ARM CPU server using 4-bit quantization and an optimized runtime (Optimize LLMs for Efficiency & Sustainability | PyTorch). This underscores how software optimization (quantization + custom kernels) can unlock major performance gains on commodity edge hardware.

Beyond TF Lite and PyTorch, there are other notable open runtimes: ONNX Runtime (by Microsoft) is an open-source engine that can run models from any framework, with accelerations like TensorRT or OpenVINO under the hood. It’s often used on Windows, Linux, and even Azure IoT for edge deployments, supporting a variety of hardware backends. Apache TVM is another open deep-learning compiler that many researchers use to auto-tune models for a given device (e.g. compiling a Transformer for a specific ARM CPU to maximize throughput).

A special mention is deserved for community-driven projects like llama.cpp. In 2023 this C++ library emerged to run LLaMA and similar models on CPU with low memory, and it exploded in popularity. By 2024, llama.cpp and its forks support many LLMs in 4-bit or 5-bit quantized form, allowing people to run language models on everything from laptops to Raspberry Pi. It uses optimized CPU instructions and quantization (Q4, Q5, etc.) to achieve surprisingly good throughput without GPUs. This has enabled a vibrant ecosystem of open-source “chatGPT-like” assistants that run locally on consumer devices. Hugging Face even added support for the GGUF model format (an evolution of llama.cpp’s format) in its Inference Endpoints, showing the influence of these grassroots tools (Hugging Face Inference Endpoints now supports GGUF out of the box!). In summary, open solutions abound – from Google and Meta’s contributions down to independent GitHub projects – all lowering the barrier for edge ML.

On the proprietary side, every major hardware vendor has its own stack. For mobile devices, Apple’s Core ML is a key player. Core ML (with the Apple Neural Engine) powers on-device ML in iPhones and iPads. Apple has been relatively secretive, but from the outside we know recent iOS versions moved tasks like Siri’s speech recognition fully on-device using neural nets. Interestingly, a 2024 review article mentions Apple’s internal LLM efforts: an OpenELM 1.1B model integrated into iOS that provides app developers with on-device language understanding features (On-Device Language Models: A Comprehensive Review). OpenELM uses a novel “layer-wise scaling” architecture to maximize efficiency, achieving a small accuracy gain over prior models while using half the training data (On-Device Language Models: A Comprehensive Review) – a testament to Apple’s focus on efficient design. It’s compatible with Apple’s MLX library, meaning it can be fine-tuned on-device for personalization. Apple also has a multimodal model Ferret-v2 for on-device vision+language (leveraging a DINOv2 image encoder) to enable features like any-resolution image understanding on iPhones. All of this runs within their closed ecosystem (Core ML and neural engine), indicating that Apple is deploying compressed models as system services similar to Google’s approach.

Qualcomm offers the SNPE SDK for AI on Snapdragon chips, enabling models to run on the Hexagon DSP or Adreno GPU of phones. NVIDIA’s TensorRT and TensorRT-LLM are proprietary but free libraries targeted at NVIDIA GPUs (from desktop GPUs down to Jetson Orin modules). TensorRT-LLM, introduced late 2023 and refined in 2024, provides an easy Python API to convert LLMs into highly optimized GPU executables. It includes many edge-relevant optimizations, such as paged memory for long sequences and support for various quantization strategies (FP8, INT8 with SmoothQuant, INT4 with AWQ). Essentially, it encapsulates state-of-the-art tricks so that even non-experts can serve a large model with minimal latency on an NVIDIA edge device.

Between open and closed frameworks, interoperability is improving. Many proprietary runtimes allow importing models from PyTorch/TF (e.g. via ONNX). The choice often comes down to target hardware and developer familiarity. Open-source is attractive for its flexibility and community support (and zero license cost, critical for startups), whereas proprietary stacks might squeeze out a bit more performance on specific hardware or provide end-to-end enterprise support. In practice, edge deployments in 2024 often involve hybrid stacks – for instance, exporting a PyTorch model to ONNX, then running it with TensorRT on a Jetson; or converting a TFLite model to Core ML for iOS. The good news is that the ecosystem is rich, and running ML on-device is getting easier with each iteration of these frameworks.

Hardware Acceleration

Efficient edge inference also relies heavily on hardware acceleration. Different use-cases leverage different classes of devices in 2024–2025:

Mobile/Tablet SoCs: Modern smartphone chips (e.g. Qualcomm Snapdragon, Apple A-series/M-series, Google Tensor) include dedicated AI accelerators. These NPUs can run quantized models extremely efficiently within a tight power budget. For example, Google’s Pixel devices use a Tensor Processing Unit (TPU edge variant) for features like on-device translation and photo editing. Apple’s Neural Engine delivers over 15 TOPS within iPhones. These accelerators thrive on 8-bit operations, which is why quantization of LLMs to int8/int4 is so important – it lets the model run on the phone’s NPU rather than falling back to the CPU. In Android 14, the new AICore framework helps coordinate these hardware bits to serve the Gemini Nano LLM with low latency. Essentially, in mobile devices, hardware and software co-design is enabling real-time AI: e.g. Gboard’s on-device text generation uses Google’s Gemini Nano model running on the phone’s AI silicon to offer instant smart replies (On-Device Language Models: A Comprehensive Review).

Embedded & IoT Devices: Beyond phones, there’s a range of edge hardware like Raspberry Pi-class microcomputers, single-board computers with GPUs (NVIDIA Jetson family), and even microcontrollers for TinyML. NVIDIA’s Jetson line (used in robotics, cameras, etc.) received a notable upgrade in late 2024: the Jetson Orin Nano Super developer kit. It delivers ~1.7× higher generative AI inference performance than the previous Orin Nano (up to 67 INT8 TOPS of compute) while halving the price to $249 (NVIDIA Unveils Its Most Affordable Generative AI Supercomputer | NVIDIA Blog). This puts substantial AI horsepower (equivalent to a decent desktop GPU) into the hands of hobbyists and industry at low cost, suitable for running Transformer models at the edge. Jetson modules support NVIDIA’s full software stack (CUDA, TensorRT), so they can run large models and even multimodal pipelines (the Orin Nano Super can interface with multiple high-res cameras for vision AI alongside language tasks). On the smaller end, microcontroller-class chips (with only a few hundred kilobytes of RAM) are also being equipped with neural accelerators – for example, the ARM Ethos-U and Qualcomm Hexagon DSPs in IoT devices – enabling simple neural nets for tasks like keyword spotting or anomaly detection. While these can’t run an LLM, they benefit from the trickle-down of efficient Transformer variants and compression techniques. Research in TinyML is even exploring distilled or extremely quantized language models that could handle basic NLP on a microcontroller (Integration of Large Language Models with microcontrollers to ...), though this is still nascent.

Edge Servers and Appliances: In enterprise or industry settings, edge deployment might mean on-premise servers at a factory, hospital, or retail store. These often use more powerful accelerators, like NVIDIA A100 or H100 GPUs in an on-site server, or FPGA-based solutions for low latency. In 2024, NVIDIA released the IGX Orin platform for industrial/medical edge computing, which can be paired with an external GPU for a massive performance boost – adding an RTX 6000 Ada to an IGX Orin board yields up to 7× increase in AI throughput (1,705 TOPS) for handling advanced generative models on-site. This kind of setup is used in scenarios like robotic surgery assistants or automated optical inspection in manufacturing, where you need datacenter-class AI but with hard real-time and safety constraints. NVIDIA’s IGX comes with the Holoscan software (for streaming sensor data through AI pipelines in real-time) and enterprise support for reliability. Other vendors like Intel (with OpenVINO and Movidius VPUs) and Google (Coral Edge TPUs) also cater to this “edge server” niche, providing hardware that can be deployed outside the cloud data center but still pack a punch. The common theme is bringing compute closer to data – whether it’s a camera on a factory floor or patient data in a hospital – to avoid latency and privacy issues.

In summary, 2024’s edge hardware spans from tiny microcontrollers to mini-“supercomputers” like Jetson Orin, all increasingly affordable. Hardware advances are synergistic with model compression: e.g. an int4-quantized model can fully leverage a mobile NPU’s efficiency, and a sparsity-optimized model can take advantage of a GPU’s sparse tensor cores. Many industry players (NVIDIA, Qualcomm, Apple, etc.) are delivering complete stacks: hardware plus software libraries tailored for deploying AI at the edge, often with specific domains in mind (vision, healthcare, robotics). These options give practitioners flexibility to balance power, cost, and performance for their particular use case.

Real-Time Deployment Strategies

Deploying an ML model at the edge isn’t just about getting it to run – it must run fast enough to meet application requirements. “Real-time” can mean different things: a voice assistant responding within a fraction of a second, an autonomous vehicle’s vision system running at 30 frames/s, or an interactive chatbot generating a reply with minimal delay. Achieving low latency and high throughput with limited resources requires clever optimization at the system level. Here we discuss strategies being used to make edge ML deployments real-time and responsive.

Latency Optimizations

A variety of techniques are employed to reduce inference latency on-device:

Model optimizations: The first line of defense is compressing and simplifying the model, as covered earlier (quantization, pruning, etc.). A quantized model can execute faster due to fewer bits and specialized instructions. For example, after quantizing a CPU-based LLM to INT4 and using ARM assembly kernels (via Arm’s KleidiAI library), one team reported first-token latency under 1 second and ~25 tokens/sec generation speed, whereas the original FP16 model was outputting <1 token/sec. Another form of parallelism is batching – processing multiple requests or multiple data samples together to amortize overhead. On device, batching is less common (since often there’s one user request at a time), but in edge servers serving multiple streams (e.g. multiple camera feeds), batching can improve utilization.

Caching and reuse: If certain computations can be reused across inferences, effective caching can save time. An example is weight caching – many frameworks now keep models loaded in RAM with precomputed optimizations. TensorFlow Lite’s XNNPack backend in 2024 introduced a smarter cache for weight data to avoid re-packing weights on each run (Streamlining LLM Inference at the Edge with TFLite). For long-running edge services, these improvements are important. Another cache example is activations cache within a single session: LLMs generating long outputs reuse the KV cache from previous tokens, so frameworks ensure those stay in fast memory. Some optimized implementations use paged KV caching, where old cache chunks can be moved to slower memory if context is very long, to avoid running out of fast memory. All these caching mechanisms aim to make sure the model spends most of its time doing new inference work, not repeating setup or I/O.

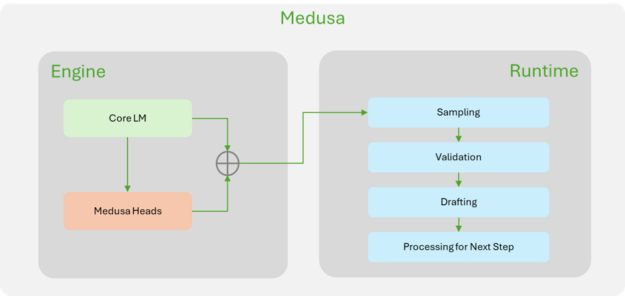

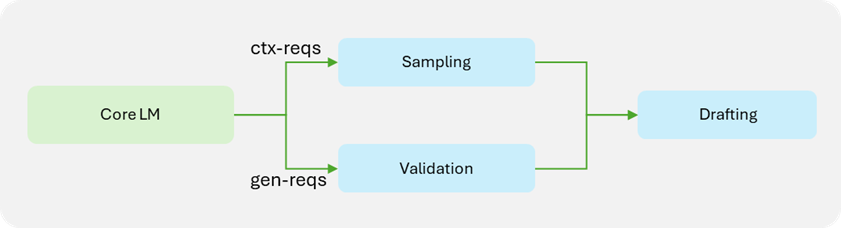

Speculative and approximate methods: A newer class of techniques trades extra computation for latency reduction by guessing part of the work in parallel. Speculative decoding for LLMs is a prime example. Here, a smaller “draft” model generates some future tokens quickly, and the large model then verifies those tokens – if correct, it skips directly to that future point. If not, it falls back to normal generation. Apple researchers developed a speculative decoding method called Recurrent Drafting (ReDrafter) which was open-sourced in 2024, and NVIDIA integrated it into TensorRT-LLM (NVIDIA TensorRT-LLM Now Supports Recurrent Drafting for Optimizing LLM Inference | NVIDIA Technical Blog). ReDrafter can significantly boost throughput on NVIDIA GPUs by utilizing the GPU to generate multiple tokens in parallel with the draft model. Importantly, it maintains output quality nearly the same as standard decoding, but reduces latency especially when the system isn’t fully loaded (by filling idle compute with draft predictions) . This is a clever way to leverage extra computation to overcome the sequential bottleneck of autoregressive generation. Another similar approach is speculative beam search or multi-path decoding (e.g. the Medusa method) – generating multiple possible continuations in parallel and then choosing one, to potentially accept more than one token per step. These methods are cutting-edge and mostly applied in server scenarios, but as edge devices gain more horsepower, they may be applied on-device too for ultra-low response times (especially if a draft model can run on an NPU in parallel with the main model on CPU/GPU). On a simpler note, some edge vision applications use approximate computing: running a lightweight model every frame and a heavy model only on demand, or using lower precision for less critical calculations. This kind of dynamic quality/latency trade-off is an active area of research.

System-level tuning: A lot of latency gains come from non-ML tweaks – e.g. pinning threads to certain cores, using big cores for important tasks, adjusting operating frequency (DVFS), etc. Real-time OS extensions or libraries like NVIDIA’s VRWorks and RTX (for robots/cars) ensure the scheduling of ML tasks meets deadlines. In edge cloud boxes, people use Docker or Kubernetes with CPU pinning for ML inference to avoid latency spikes from other processes. All these ensure the ML model isn’t preempted or starved when a critical inference is needed.

In practice, achieving real-time performance might require combining all of the above: e.g. quantize the model, load it at startup and cache weights in RAM, use all cores/NPU in parallel, and maybe even use a speculative decoding for the first few tokens to get a head-start. By mid-2020s, the community has accumulated lots of know-how to make even large models responsive in constrained settings. A concrete example: Google’s Gboard keyboard, which now can run an on-device LLM to offer smart replies during chat, detects the user is typing rapidly and in that mode uses the local Gemini Nano model to suggest replies instantly (no waiting for server). If it relied on a cloud model, network latency would make the feature too slow for quick messaging – but on modern phones the tiny LLM can output suggestions in real-time, showing that with careful optimization, interactive LLMs on-device are feasible.

Edge-Cloud Collaboration

Not every task can be handled entirely on the edge – sometimes a combined approach is optimal. Edge-cloud collaboration strategies are being explored to balance latency, accuracy, and resource usage:

One approach is offloading: run a smaller model locally to handle easy cases, and only query a large cloud model for hard cases. The local model can act as a gate or provide a rough answer quickly. For example, an uncertainty-based router might run a lightweight classifier on-device to decide if a query needs the “big brain” in the cloud (Exploring Uncertainty-Based On-device LLM Routing From ... - arXiv). If the on-device model is confident, it responds immediately; if not, the request goes to a cloud API. This can significantly cut down cloud calls (saving cost and latency) while preserving quality on the tough queries. A 2025 study on on-device LLM routing found that such selective offloading can generalize well, though it requires careful setting of confidence thresholds and a sufficiently capable edge model (Exploring Uncertainty-Based On-device LLM Routing From ... - arXiv).

Another pattern is co-processing, where different parts of a pipeline run in different locations. For instance, in an augmented reality translator, a phone might do local speech recognition and text-to-text translation (fast and keeping user’s voice data private), but then send the text to a cloud LLM for a more nuanced rephrasing. In robotics, a robot might do immediate obstacle detection on its onboard computer, but send visual data to a cloud service for long-term path planning or mapping which is too heavy to do on-board. The key is to split tasks by latency sensitivity: real-time, safety-critical pieces stay on the edge, while more background or heavy analytics go to cloud.

A simpler edge-cloud synergy is periodic model refresh. An edge-deployed model can be periodically updated from the cloud with new knowledge. For example, a hospital edge server might run an LLM that’s fine-tuned on local patient data for question-answering, but every night it pulls the latest medical research embeddings from the cloud to update its knowledge base. This way, day-to-day operations are local and fast, but the model doesn’t grow stale compared to cloud-based counterparts.

In 2024, product and research discussions also highlight privacy-preserving split processing. Projects like federated learning gained traction earlier, but now for inference, the idea is to only send minimal information to the cloud. For example, some new phones do face identification by extracting an embedding on-device and only sending that (not the raw image) to a server for matching. Similarly, an edge LLM might send an encrypted or transformed representation of a user query to a cloud service, such that the cloud can help without seeing raw data. This requires advanced techniques (secure enclaves, homomorphic encryption, etc.) and isn’t common yet, but it’s being explored in sectors like finance and healthcare where regulations demand maximum privacy.

Finally, there are architectural approaches like cloud-assisted search to lighten LLM loads. The PyTorch efficiency blog showed using a vector database (FAISS) to answer common queries and only call the LLM for novel ones (Optimize LLMs for Efficiency & Sustainability | PyTorch). This was in a cloud context, but the same idea can apply with an edge twist: maintain a local cache or database of past queries or known answers. If a user asks something the device has seen before, it can instantly retrieve the answer (or an LLM-generated answer template) rather than run the model again. Over time, the edge device “learns” frequent requests. This is akin to how web browsers cache pages – an edge AI could cache answers. It’s a simple but effective way to reduce unnecessary computation, thereby speeding up average response times.

In summary, edge-cloud collaboration is about using the edge for what it’s best at (immediate, private computation) and the cloud for what it’s best at (heavy lifting when needed, aggregating knowledge). In an industrial setting, this might manifest as an AI-enabled factory where each machine has an onboard model for quick anomaly detection, and a central cloud system that collects those signals and runs a larger optimization model to adjust factory workflow. The trend is moving away from “send everything to the cloud” toward smarter, bandwidth-conscious strategies. This not only improves latency but can drastically cut cloud costs – a big consideration we turn to next.

Industry Applications

Edge deployment of ML is happening across virtually all sectors. Here we highlight how different industries are leveraging edge LLMs/ML and give concrete examples of deployments in offline-first or low-latency environments. The requirements differ by domain – a medical device prioritizes privacy and reliability, an automotive system demands real-time throughput, etc. We also note specific examples from 2024–2025 that illustrate the trend.

Healthcare

Healthcare has strong drivers for on-device AI: patient data privacy (compliance with regulations like HIPAA), and the need for AI assistance in settings like clinics with limited connectivity or in ambulances/remote areas. In 2025, a study evaluated the performance of on-device LLMs for clinical reasoning tasks on smartphones (Medicine on the Edge: Comparative Performance Analysis of On-Device LLMs for Clinical Reasoning). The findings were promising: compact general-purpose models like the 3.8B Phi-3 Mini (from Microsoft) offered the best speed-accuracy trade-off on mobile devices, while specialized medical LLMs (e.g. Med42 and Aloe, fine-tuned on medical text) achieved the highest accuracy. Notably, they showed that even older phones can feasibly run these models, with memory being a bigger limitation than raw compute power. This means a mid-range phone with sufficient RAM could host a doctor’s assistant bot that answers questions locally using patient data – extremely useful for field doctors or when hospital networks are down.

We’re also seeing edge ML in medical devices. Imaging devices (ultrasound, MRI) increasingly integrate AI modules within the machine for instant analysis of scans. For example, an ultrasound cart might use an on-board GPU to run a small vision Transformer that flags anomalies in real-time as the technician moves the probe, rather than sending images to a server. NVIDIA’s IGX platform is explicitly targeting such use cases: it’s being adopted by Medtronic and others to power AI-enabled surgical systems and patient monitors at the edge ( NVIDIA Corporation - NVIDIA Enables Real-Time Healthcare, Industrial and Scientific AI Applications at the Edge With Enterprise Software Support for NVIDIA IGX With Holoscan ). These edge systems can process high-bandwidth sensor data (imaging, vital signals) on-site and provide immediate decision support to clinicians. Since it all stays local, patient data doesn’t leave the hospital network, mitigating privacy issues. The trade-off is that models may need to be smaller – but through quantization and efficient design, many medical AI models (which often are not as large as GPT-style LLMs) fit on these devices. The Med-PaLM M model (Google’s multimodal med LLM) is huge and runs in the cloud, but there are efforts to distill its knowledge into smaller “MedGPT” variants that could run on hospital servers for sensitive data.

Another advantage is cost: running inference on patient devices or hospital edge servers can save the recurring fees of cloud AI services. Hospitals generating large volumes of data (like continuous patient monitoring) prefer to analyze it locally to avoid saturating network bandwidth and incurring cloud compute charges. Edge AI can thus make advanced diagnostic support more accessible in smaller clinics that can’t afford big cloud infrastructure. We’re already seeing “AI in a box” products – e.g. a local server with pre-loaded medical NLP that can summarize doctor-patient conversations after each visit, without sending recordings to the cloud. This kind of deployment is very recent, but pilots are underway.

In summary, healthcare edge AI in 2024–2025 is moving from concept to reality. Privacy and compliance needs are pushing LLMs onto hospital premises. With evidence that carefully tuned small models (and older hardware) can still deliver useful accuracy (Medicine on the Edge: Comparative Performance Analysis of On-Device LLMs for Clinical Reasoning), we can expect to see more “doctor’s little helper” LLMs on tablets or medical devices soon. The key is ensuring they are validated for accuracy and safety – which is an ongoing challenge (medical AI has to be rigorously tested). Nonetheless, edge deployment is an attractive path for many health applications where lives are at stake and every millisecond and datapoint’s security matters.

Manufacturing & Industrial IoT

Manufacturing and industrial operations benefit greatly from on-site, real-time AI – often termed Edge AI in this context. Factory equipment and industrial IoT sensors generate a firehose of data that needs immediate analysis for quality control, predictive maintenance, and safety monitoring. Sending all that sensor data to a cloud is impractical due to latency and bandwidth; hence, edge ML models are deployed directly on the factory floor or inside machines.

A prime example is predictive maintenance: sensors on motors, pumps, or assembly lines stream vibration and temperature data. An edge ML model (like a small 1D CNN or transformer) running on a microcontroller or an edge gateway can analyze signal patterns locally to detect early signs of failure (e.g., an anomaly in vibration frequency). By catching it on-device, the system can trigger an alert or even an automatic shut-off within milliseconds, potentially preventing costly breakdowns. Companies in 2024 report that deploying such models on inexpensive hardware attached to each machine has reduced downtime. The low latency of on-site inference is crucial – waiting even seconds for a cloud response could mean damage is already done if a part is grinding itself apart.

Another use is real-time quality inspection. Using cameras and edge vision models, manufacturers can inspect products on the production line instantaneously. For instance, an AI camera might use a quantized object-detection model to spot defects in each item and eject bad ones, all in a matter of milliseconds as items whiz by on a conveyor. Systems like this are often powered by devices like NVIDIA Jetson or Intel Movidius sticks which are installed right on the assembly line for inferencing. With the latest Jetson Orin Nano Super (2024), even generative AI models (like anomaly-detection LLMs for sensor logs, or small vision transformers) can run on a palm-sized device, bringing what NVIDIA calls a “generative AI supercomputer” to the edge for just a few hundred dollars (NVIDIA Unveils Its Most Affordable Generative AI Supercomputer | NVIDIA Blog). This democratizes access – smaller manufacturers or even hobbyists can now deploy fairly advanced models without a huge investment.

There’s also an emerging role for language models in industrial settings via instruction-following robots and assistants. Consider a factory technician who could speak or type a query to a local “factory GPT” assistant: “Show me the maintenance log summary for machine 7 and any anomalies”. An edge-deployed LLM could integrate data from on-site databases (which never leave the premises) and output a quick summary, all without an internet connection. Some factories are indeed trialing private LLMs trained on their internal documentation and standard operating procedures, hosted on local servers for workers to query. This is safer than using cloud chatbots (which could leak proprietary info). The challenge is ensuring the LLM has up-to-date data – which is solved by connecting it to local data sources, possibly via retrieval-augmented generation where the LLM on the edge indexes and queries local files.

NVIDIA’s developments also highlight industrial edge focus: the NVIDIA IGX platform announced in 2024 is specifically to “address the increasing need for real-time AI at the industrial edge”, combining high-performance hardware with safety and security features. It’s being adopted by electronics manufacturers (Foxconn, Pegatron, etc.) to build “AI factories” where everything from logistics robots to assembly line inspection is coordinated by edge AI (Robotic Factories Supercharge Industrial Digitalization as Electronic ...). With IGX’s functional safety, one can run AI that directly controls machinery (e.g. robotic arms), because the platform can guarantee certain real-time responses and has fail-safes – something cloud cannot assure due to unpredictable latency.

In Industrial IoT broadly, scalability is key: a company might have hundreds of remote sites or oil rigs where deploying a cloud-connected AI per site would be too costly or unreliable (some sites have poor connectivity). By using modest edge ML devices at each site, they can cover more ground. In 2025 we even see TinyML in agriculture sensors – tiny devices on farms analyzing soil data on-device to decide watering needs, etc., without any connectivity.

Overall, manufacturing and industrial sectors are enthusiastically embracing edge ML. The year 2024 provided the hardware boost (more TOPS at lower cost) and the confidence that compressed models can still deliver useful accuracy in these settings. Real-world case studies have shown ROI in terms of reduced defects, downtime, and improved safety. As a result, many industrial companies are building out edge AI competency, either using platforms from NVIDIA/Siemens/ABB or open frameworks like Edge Impulse (an IoT AutoML platform) to train models that run on microcontrollers. This domain values reliability and determinism, so simple and well-optimized models (often smaller than bleeding-edge academic LLMs) are preferred – which aligns well with the constraints of edge deployment.

Automotive

The automotive industry has been a pioneer of edge AI out of necessity – a self-driving car or even an advanced driver-assistance system (ADAS) cannot rely on the cloud to make split-second decisions. Thus, cars are equipped with powerful on-board computers to run vision, sensor fusion, and decision models locally.

In autonomous vehicles (AVs) and modern cars, computer vision models (CNNs, Transformers) analyze camera feeds for lane detection, object detection (pedestrians, other cars), traffic sign recognition, etc., all on-device (within the car’s ECU). These models are heavily optimized to meet real-time frame rates (30+ FPS) with limited power. Techniques like pruning and low-bit quantization have been applied to production vision nets to fit them into automotive-grade chips. For example, Tesla’s FSD computer runs a suite of neural nets at low precision on a custom chip to achieve near 36 TOPS, enabling full self-driving visualization and planning on the edge. Similarly, many cars using Mobileye or NVIDIA DRIVE platforms run models with parts quantized to INT8 to use the accelerator cores efficiently.

What about LLMs in cars? We’re starting to see language and dialogue models embedded in vehicles for voice assistants or in-car entertainment. These range from simpler models that handle voice commands offline (e.g. controlling AC, navigation by voice without cloud) to more advanced prototypes of in-car chatbots that could explain vehicle features or even converse with passengers. Because an car is a somewhat contained environment, a moderately-sized LLM can be stored on the infotainment system. In fact, some high-end cars already do on-device speech recognition (to not depend on cellular signals for voice commands). As LLMs get optimized, we can imagine a car having an LLM that passengers can ask questions like “What’s that landmark we just passed?” and it could answer from a locally stored knowledge base (GPS location + Wikipedia data cached). This is still early, but concept cars have demoed such capabilities.

Automotive also intersects with safety-critical inference. Any model that affects vehicle control must meet strict latency and reliability standards. That has spurred work on real-time optimization: for instance, in-flight batching in NVIDIA’s TensorRT-LLM ensures even if multiple perception models run concurrently, they batch requests smartly to utilize the GPU without introducing jitter (NVIDIA TensorRT-LLM Now Supports Recurrent Drafting for Optimizing LLM Inference | NVIDIA Technical Blog). Also, functional safety (ISO 26262) often means having redundancy – e.g. two different models or circuits checking the same object detection to avoid single-point failure. Some AV systems run a primary DNN and a secondary simpler model (or traditional computer vision) in parallel as a check.

By 2025, cars are essentially “edge computing platforms on wheels.” The trend is only growing as more sensors (LiDAR, radar, HD cameras) are added, and as infotainment/ADAS features expand. All major auto OEMs are thus investing in on-board ML. NVIDIA DRIVE Orin and the upcoming Thor are SoCs specifically for vehicles, capable of hundreds of TOPS, to handle multiple LLMs and CV models in parallel (for example, a car might run a driver monitoring model, a language model for voice, and several vision models simultaneously). Tesla’s approach with its Dojo supercomputer is to train huge models but deploy distilled versions in-car that run on their FSD chip.

From an industry perspective, edge AI in automotive is non-negotiable because connectivity can’t be assumed (tunnels, remote roads) and latency is life-or-death. It’s a great example of pushing cutting-edge ML (like vision transformers) out of the lab into a constrained embedded environment. Expect to see continued improvements: e.g. multimodal LLMs in cars that combine vision and language (a car that can answer a question about what it sees), enabled by efficient on-device multimodal models (similar to Apple’s Ferret-v2 for iOS, which targets robust on-device vision+language. The pieces (hardware accelerators, compressed models, real-time OS support) are coming together to make this feasible.

Finance

The finance sector is cautiously adopting LLMs and ML, with a strong preference for on-premises or edge deployment due to data sensitivity and regulatory compliance. Banks and financial institutions handle private customer data and proprietary trading strategies that they are reluctant to send to third-party cloud APIs. As a result, many are exploring on-premise LLMs running in their own data centers or branch offices (“edge” in the sense of being on the enterprise’s premises rather than a public cloud).

For example, some banks in 2024 started deploying internal chatbots powered by LLMs trained on their internal documents (policies, product info). These are often hosted on company servers. In one case, a large bank invested in setting up an on-prem GPU cluster (on the order of tens of millions of dollars upfront) to run an internal GPT-style model, expecting to save much more in cloud API fees over time by handling thousands of employee queries daily in-house (Empowering BFSI with On-Premise LLMs for Security, Compliance ...) (Exploring New Frontiers: The Adoption Of LLMs In Banks - Forbes). The economics can favor edge deployment at enterprise scale: paying per-query for a cloud GPT service can rack up huge bills, whereas a one-time hardware buy (plus maintenance) can be amortized over years. Indeed, a LinkedIn article noted an example that a bank spending $10M on an on-prem LLM setup could avoid even higher annual cloud costs, achieving ROI in a short time.

Another area is algorithmic trading and risk management. These require extremely low-latency processing – trading algorithms often run co-located with exchange servers for microsecond advantages. While LLMs aren’t yet central to trading decisions (due to unpredictability), smaller ML models (like for fraud detection or market anomaly detection) are deployed at the edge of the trading network. For instance, a stock exchange might use an on-site anomaly detection model to monitor for suspicious trading patterns in real-time, flagging them to compliance officers without delay. These models must be compact and lightning-fast, operating under high throughput. FPGA-based inference engines are sometimes used in this domain for ultimate speed.

In branch banking and ATMs, edge ML can enhance customer service. Some banks have smart ATMs that can scan documents or IDs on the spot with embedded vision models, or detect fraud tampering on ATMs via an onboard model that monitors sensor data. These devices are essentially IoT endpoints with AI. Given they may be in locations with only intermittent connectivity, having offline intelligence is a plus.

One interesting use of LLMs in finance is assisting bankers or analysts by querying large internal knowledge bases. JPMorgan and others have reportedly built private versions of ChatGPT trained on their research and market data. They run these on-prem (often leveraging high-performance servers with GPUs) to ensure client data and proprietary analyses never leave their environment. The LLM can answer bankers’ questions like “What’s our exposure to sector X?” by drawing from internal reports. The challenge is that finance also demands accuracy and auditability – a hallucinating LLM is not acceptable when giving investment advice. So these deployments often put the LLM behind a retrieval system: the model only generates answers based on retrieved documents, and those documents are shown as references (so the human can verify). This kind of system can be deployed on the bank’s edge servers with relative ease now, thanks to open-source LLMs (like Llama-2) that can be fine-tuned on private data and run under the bank’s full control.

Security and compliance drive many design decisions. Financial firms often require that models are explainable and that decisions can be traced. While LLMs are black-boxy, running them internally at least ensures you can log all inputs/outputs securely and that no data leaks to an external provider. Some regulators also mandate that certain data (e.g. EU customer data) must stay in-country – which further motivates on-prem solutions rather than using a global cloud API.

In summary, finance is leveraging edge AI carefully: deploying models internally for privacy and speed, using smaller or distilled models to reduce resource needs, and focusing on use cases like customer support, fraud detection, and data analysis support. The budget is often there for big iron (banks can afford expensive servers), but they value efficiency because every dollar spent on IT is a cost against profits. So compression and optimization are as welcome here as in any startup. We’ll likely see more banks announcing they have their own “GPT” models running in their private cloud or edge infrastructure, as a way to stay competitive without compromising on security.

Mobile and IoT Case Studies

Mobile apps and consumer IoT are where many people encounter on-device AI directly. We’ll highlight a few concrete deployments from 2024–2025:

Smartphone Apps: We already mentioned Google’s Gboard keyboard using on-device LLM for smart replies. This feature rolled out experimentally and showcased that even a ~8B parameter model (quantized and optimized) can run fast enough on a modern phone to be useful. Another example is Samsung’s camera app, which uses on-device neural nets for scene recognition and photo enhancement (though not LLMs, it’s edge vision). There are also offline translation apps: e.g. Apple’s Translate and Google Translate both can download language models to your phone. By 2024, these models have evolved from classical phrase-based systems to neural machine translation models that run fully on-device. A Google developers blog noted that using a small on-device translation model yields only about a 4% drop in translation accuracy compared to a large cloud model, but with much faster response and no need to send data out. This is why features like Live Translate on Android devices can function even without internet – the phone has a decent translation LLM (or sequence-to-sequence model) inside.

Voice Assistants: Both Apple Siri and Google Assistant have been moving more speech processing on-device. As of iOS 15, Siri processes voice commands offline for many tasks, thanks to an on-device speech recognition model. In 2024, rumors suggest Apple is working on a local language model to allow Siri to answer some queries without server help (especially after they beefed up device RAM and Neural Engine capabilities in recent iPhones). Similarly, Amazon’s Alexa was updated to process certain commands locally on Echo devices (e.g. smart home controls) to reduce latency. These voice assistants mix edge and cloud: a request to “turn off the lights” can be handled locally, but a general question still goes to cloud. However, as small LLMs improve, we might get to the point where a home assistant device carries a multi-billion parameter model that can handle a lot of conversational queries offline. That would be the ultimate offline-first smart home setup, ensuring privacy (your voice stays local) and reliability (it works even if internet is down).

IoT Gadgets: Take wearables – the Apple Watch now does on-device heartbeat irregularity detection using an ML model, and it can even do basic Siri requests on-device. There are also AI-powered hearing aids that use embedded ML to filter noise and enhance speech in real-time, all within the earpiece. Another area is smart cameras (like the Nest Cam or Arlo) which perform person detection and even package detection on-device so they don’t have to stream video constantly. The Nest Cam IQ, for example, had a local model to recognize familiar faces. In 2025, we’re seeing some home security hubs integrate small LLM-based logic to give a more natural summary of events (e.g. “While you were out, the front door opened twice and a package was left on the porch.”) – this summary could be generated by a local model that has seen the timeline of sensor triggers.

Offline education tools: There are language learning apps and devices for kids that deliberately work offline (for safety and remote accessibility). One case is a children’s reading pen gadget that uses an offline OCR and language model to read text from a book out loud and explain difficult words – all without needing internet. This uses a combination of a vision model (for text recognition) and a small language model for explanations, running on a low-power embedded chip. Such devices benefit from the compressive techniques we discussed; for instance, using a distilled language model to fit the limited memory.

These case studies demonstrate the broad reach: from high-end smartphones running quantized LLMs to tiny IoT chips running micro-models. In all cases, the driving factors are similar – latency (real-time response) and offline capability (works anywhere, preserves privacy). Users have grown to expect immediate intelligent responses, and companies have found that delivering that often means moving intelligence onto the device.

One more interesting trend: personalization on the edge. Because the model is on your device, it can adapt to you specifically (learning your usage patterns) without that data leaving. Some apps fine-tune or update the model locally over time. For example, a keyboard app might locally adjust its next-word prediction model to your writing style. In late 2024, Apple’s research hinted at on-device fine-tuning of their OpenELM model via MLX for personalized experiences on iOS . This “learning on the fly” is only possible when the model resides at the edge and has access to personal data directly (rather than retraining a cloud model on aggregated data). We expect more apps will include a tiny training loop to continuously improve their on-device AI for the user – essentially bringing model training to the edge as well, not just inference.

Budget Considerations

When deploying ML models at the edge, cost is a major practical consideration. Different organizations have different budget constraints – a startup, a large enterprise, and a resource-constrained IoT deployment will make very different trade-offs. Here we outline considerations and strategies for each scenario:

Startups and Small Teams: Startups often can’t afford massive cloud compute bills or expensive hardware at the outset. Edge deployment can actually be a cost-saver here – running a model on users’ devices (leveraging BYO hardware) means the startup doesn’t pay for those inference cycles. For example, an AR app that performs on-device object detection pushes the compute cost to the user’s phone rather than maintaining GPU servers. The key is choosing models that are lightweight enough to run on typical consumer devices (or using the compression techniques we covered to get them there). Open-source models and frameworks are the go-to for startups to avoid licensing fees. Projects like ONNX Runtime or TFLite are free and have broad hardware support. There’s also a vibrant community – so rather than hiring a large MLops team, startups can use community-built solutions like llama.cpp for local LLM inference, which are essentially “free” aside from integration effort. Another budget tip is to use commodity hardware for edge devices. A startup building an edge AI appliance might opt for off-the-shelf hardware like Jetson Orin Nano (only a few hundred dollars) rather than a custom FPGA that could cost tens of thousands in development. These off-the-shelf modules come with optimized libraries (saves development time) and are getting cheaper (the Orin Nano Super is $249 now (NVIDIA Unveils Its Most Affordable Generative AI Supercomputer | NVIDIA Blog)). In sum, startups can leverage edge deployment to reduce cloud costs, but they need to carefully optimize models to run on affordable hardware and use open tools to minimize software costs.

Large Enterprises: Big companies have larger budgets but also larger scale. For them, edge deployment is often about long-term cost efficiency and control rather than upfront survival. Enterprises might invest in dedicated edge infrastructure (e.g. a fleet of on-prem servers at branch offices, or equipping thousands of retail stores with AI sensors). These capital expenses can be high, but they often replace recurring cloud costs or expensive manual processes. For instance, a retailer could spend a few million on smart cameras and edge ML in stores to prevent theft, which might save more in losses or cloud video bandwidth over time. Enterprises also value vendor support and reliability, so they may choose proprietary solutions (like NVIDIA’s full stack or cloud-provider edge offerings) even if open-source is free, because downtime or failures are more costly than the price of software. We see enterprises partnering with vendors: e.g. a factory might use NVIDIA IGX and pay for Nvidia’s enterprise software support, ensuring the edge system is maintained and updated securely. That said, enterprises also contribute to open source (for flexibility and avoiding lock-in). It’s common to use a mix: maybe an open-source model architecture, but running on vendor-certified hardware with an enterprise service agreement. Budgeting for edge ML at enterprise scale also includes operational costs: power, maintenance, and upgrades for all those devices. Interestingly, edge ML can save on network costs – if 1000 retail stores don’t need to stream raw footage to cloud, that’s a huge bandwidth saving (which for enterprises can be a significant budget line). So money is shifted from cloud computing to edge infrastructure and personnel to manage it. Enterprises also consider depreciation cycles: they might deploy hardware expecting a 3-5 year life. Choosing slightly over-specced hardware can “future-proof” deployments so they don’t have to be upgraded soon as models evolve. All these factors mean enterprises might spend more upfront for robust, scalable edge solutions that will pay off over years.

Resource-Constrained Environments: In some contexts (like remote areas, developing regions, or battery-powered IoT), the budget is not just money but also power and connectivity. Here the challenge is deploying something that can run on a $5 microcontroller or a solar-powered unit, etc. The models must be ultra-efficient (TinyML territory), and every extra milliwatt or megabyte might be unacceptable. Strategies include aggressive compression (quantize to 8-bit or even binary if possible), using smaller model architectures by design (e.g. TinyML might use a small RNN instead of a large Transformer to fit an MCU), and leveraging any specialized silicon available. Sometimes analog techniques or neuromorphic chips are used to run simple models at microwatt power budgets. The budget constraint also means minimal hardware – maybe one device has to handle multiple tasks to save cost. For example, a single modest CPU at an IoT gateway might run one model that does many jobs (multipurpose) instead of having many chips. Software frameworks like TF Lite Micro are tailored for these scenarios, with static memory planning (no dynamic alloc) and extremely small runtime footprint (Tiny Machine Learning: Progress and Futures - arXiv). From a financial standpoint, deploying millions of tiny edge devices means every cent counts: using a free model could save licensing fees when multiplied at scale, and optimizing the model to require a cheaper microcontroller can save millions in BOM cost. Thus, companies deploying at massive scale (think IoT sensors across a smart city) invest in model efficiency R&D to shave costs. A real example: Amazon optimized Alexa’s on-device models to run on cheaper chips in their Echo Dot, likely saving on the cost of goods for millions of units.

In all cases, edge vs cloud cost trade-offs are evaluated. Often a hybrid approach is chosen to minimize cost: use edge to cut cloud usage (and thus cloud bills), but still use cloud for the heavy stuff rather than provisioning expensive edge hardware that sits idle. An example strategy: a startup might run a small model on device for most user interactions (no cloud cost), but if the user asks something really complex, fall back to a cloud API call occasionally (pay per use). This keeps average costs low while still providing high-quality service when needed. As another example, enterprises might use cloud to train and update models, but do inference on edge. Training is occasional and can be rented from cloud as needed (cheaper than maintaining giant training rigs), whereas inference is continuous and better done on owned devices.

Maintenance and updates are another budget aspect: edge devices need physical management if something goes wrong. So investing in robust models that don’t crash and good remote management can save costly site visits. Choosing simpler models that are less likely to hang or overheat a device can be indirectly a budget decision too (lower support costs).

To summarize, startups leverage edge AI to bootstrap cheaply (using user devices and open tools), large enterprises invest big in edge for strategic savings and control, and IoT deployments focus on extreme efficiency to meet tight cost/power constraints. The good news from 2024’s advancements is that edge AI is becoming more affordable for all – models that once required an expensive server GPU can now run on a $100 device thanks to compression, and open-source innovations mean you don’t have to pay licensing fees for decent model performance. So the trend is lowering the entry barrier and total cost of ownership for edge ML solutions.

Conclusion

Edge deployment of LLMs and ML models has rapidly evolved in 2024 and early 2025, transforming what was once thought impractical into reality. Through a combination of clever model compression, optimized inference engines, and specialized hardware, we can now run surprisingly capable AI models in offline and low-latency environments – from phones and cars to factory equipment and hospital devices. This literature review surveyed the latest techniques: 4-bit quantization and hybrid pruning methods that shrink models with minimal accuracy loss, distillation approaches that produce small yet high-performing students, and system-level innovations like caching and speculative decoding that slash response times. Both open-source communities and industry leaders have contributed: we see frameworks like TensorFlow Lite and PyTorch enabling edge LLMs, as well as proprietary stacks like NVIDIA’s IGX and Apple’s Core ML pushing the envelope of performance and integration (NVIDIA Enables Real-Time Healthcare, Industrial and Scientific AI Applications at the Edge With Enterprise Software Support for NVIDIA IGX With Holoscan) .

Crucially, we explored how these advances apply across sectors. In healthcare, on-device models are boosting privacy and enabling real-time clinical decision support . In manufacturing, edge AI is driving quality control and predictive maintenance with immediate insights on the factory floor. Automotive platforms rely on on-board ML for safety and are beginning to incorporate language capabilities locally for user interaction. Finance is balancing compliance and cost by keeping LLMs on-premise to assist with customer service and analytics without data leaving the organization. And everyday mobile and IoT scenarios are enriched by edge AI – giving users smart experiences that are faster, more reliable, and respectful of privacy (since data stays on the device).

We also discussed the economic aspect: edge deployment can shift costs favorably, but it requires careful planning depending on the scale and context. The trend for startups is to leverage open models and users’ devices to avoid cloud costs, while enterprises invest in edge infrastructure to save in the long run and meet regulatory needs. Meanwhile, the falling cost of powerful edge hardware (as evidenced by devices like Orin Nano Super) and the improvement of free tools and models mean that even smaller players or resource-constrained projects can harness on-device AI.

In terms of technical challenges, some remain. Ultra-low-power scenarios still need further innovation to run complex models. Tooling for monitoring and updating models across thousands of edge devices is an ongoing effort (edge MLOps is a growing field). And ensuring the security and robustness of edge AI (protecting models from tampering and making them fail-safe) is critical, especially in industries like automotive and healthcare. Research continues into methods like federated learning to train models across edge devices without centralizing data, which could further enhance the capabilities of on-device models with shared learning.

All things considered, the period of 2024–2025 marks a significant shift in AI deployment strategy – a “return to the edge” where intelligence is not confined to cloud servers but distributed to where the action is. This shift is enabling faster, more private, and more context-aware AI applications. With LLMs being tamed to run on portable devices and tinyML bringing neural networks to microcontrollers, the range of edge AI will only expand. We can expect that future research will yield even more compressed architectures (perhaps training paradigms explicitly for edge models), better real-time algorithms, and unified frameworks that make deploying an AI model on anything from a smartphone to an embedded board as straightforward as deploying to the cloud. The comprehensive advances reviewed here form the foundation for that edge-first AI future. Each industry is poised to benefit as these technologies mature, ultimately bringing powerful AI closer to end-users and physical world processes than ever before.