"FastKV: KV Cache Compression for Fast Long-Context Processing with Token-Selective Propagation"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.01068

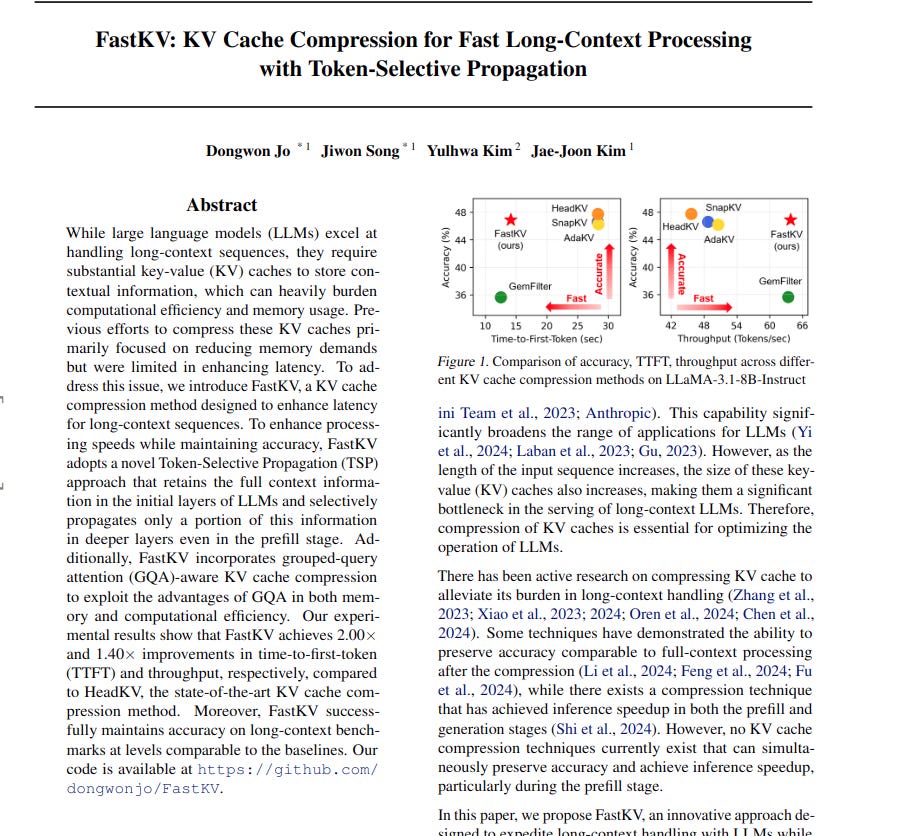

LLMs face challenges with long contexts due to extensive Key-Value cache requirements, impacting computational speed and memory. Existing compression methods reduce memory but often fail to accelerate processing, especially during the initial prefill stage.

This paper introduces FastKV. It speeds up long-context processing while maintaining accuracy. It uses Token-Selective Propagation.

-----

📌 FastKV's Token-Selective Propagation smartly reduces prefill computation by focusing on important tokens in later layers, while early layers retain full context for accuracy.

📌 Grouped-Query Attention-aware compression in FastKV effectively manages Key-Value cache in modern LLMs, boosting throughput without accuracy loss.

📌 FastKV achieves latency gains in prefill stage, unlike prior KV compression methods, directly improving Time-To-First-Token, crucial for user experience.

----------

Methods Explored in this Paper 🔧:

→ FastKV uses Token-Selective Propagation (TSP) to compress Key-Value caches.

→ TSP differentiates between early and later LLM layers.

→ Early layers process full context to capture broad information.

→ Later layers, from a chosen TSP layer onwards, process only important tokens.

→ Token importance is determined by attention scores, similar to SnapKV.

→ FastKV also incorporates Grouped-Query Attention (GQA) compatibility.

→ GQA-aware compression aggregates attention scores within head groups for efficient KV cache management.

-----

Key Insights 💡:

→ Analysis reveals that later LLM layers focus on a consistent subset of important tokens.

→ Early layers handle diverse token interactions for initial context understanding.

→ Token-Selective Propagation leverages this by propagating all tokens initially, then compressing in later layers.

→ This approach balances full context processing in early layers with efficient computation in deeper layers.

-----

Results 📊:

→ FastKV achieves a 2.00× speedup in Time-To-First-Token (TTFT) compared to HeadKV.

→ Throughput improves by 1.40× over HeadKV.

→ Accuracy on long-context benchmarks remains comparable to baseline methods, with less than 1% accuracy gap.