"FBQuant: FeedBack Quantization for LLMs"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2501.16385

The deployment of LLMs on devices with limited resources faces challenges due to memory bandwidth and quantization errors. Current sub-branch quantization techniques often lead to overfitting and increased latency.

This paper introduces Feedback Quantization, called FBQuant, to address these issues. FBQuant uses a feedback mechanism and kernel fusion to optimize sub-branch quantization. This approach aims to reduce overfitting and inference latency effectively.

-----

📌 FBQuant's feedback loop elegantly bounds reconstructed weights. This addresses a core weakness in sub-branch quantization: the risk of overfitting calibration data.

📌 Kernel fusion in FBQuant significantly cuts down memory access overhead. This directly tackles the sub-branch latency issue, achieving faster inference.

📌 By integrating feedback and kernel fusion, FBQuant delivers accuracy and efficiency. It surpasses prior methods in both perplexity and zero-shot performance metrics across LLMs.

----------

Methods Explored in this Paper 🔧:

→ Feedback Quantization, or FBQuant, is introduced. It incorporates a feedback mechanism into the weight quantization process.

→ Sub-branch weights are fed back into the main quantization path. This ensures that the reconstructed weights remain bounded.

→ Bounding the weights prevents the sub-branch from overfitting to calibration data. FBQuant also uses a CUDA kernel fusion technique.

→ Kernel fusion integrates de-quantization, linear projection, and up-projection into a single CUDA kernel. This reduces memory access overhead and minimizes inference latency.

-----

Key Insights 💡:

→ Existing sub-branch quantization methods can suffer from ill-posed optimization. This can lead to unbounded reconstructed weights.

→ Unbounded weights increase the risk of overfitting to calibration data. Sub-branches introduce latency despite low computational overhead.

→ This latency increase is due to memory access bottlenecks. FBQuant's feedback mechanism bounds weights and prevents overfitting.

→ FBQuant's kernel fusion reduces memory access overhead and latency from sub-branches.

-----

Results 📊:

→ FBQuant achieves state-of-the-art perplexity of 6.78 on 3-bit Llama3-8B, improving by 0.85 over AWQ.

→ On Llama2-7B, FBQuant achieves 64.68% zero-shot accuracy in 3-bit quantization, outperforming OmniQuant by 1.20%.

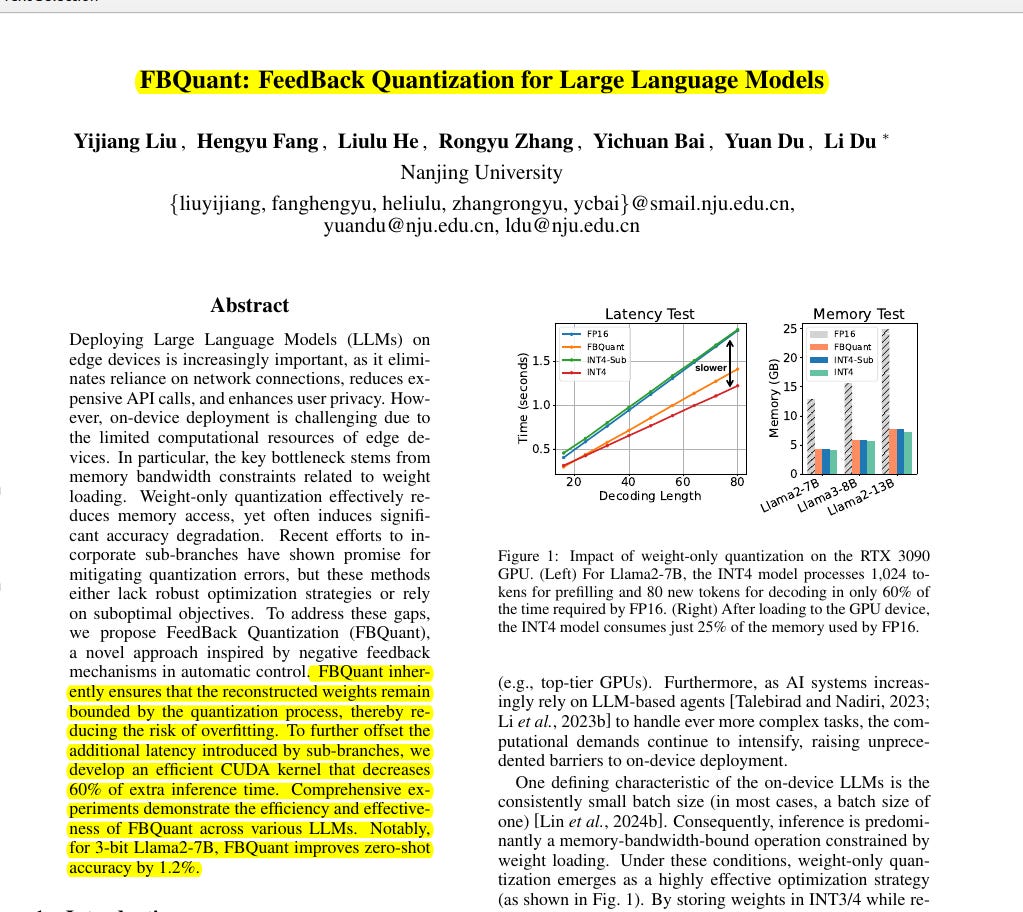

→ FBQuant reduces inference latency by 60% compared to conventional sub-branch implementations on RTX 3090 GPU.