Fei-Fei Li, World Labs Co-Founder in 2024, says LLMs are highly limited.

Fei-Fei Li critiques LLM limits, Nvidia drops 128GB GDDR7 Rubin CPX and Blackwell Ultra benchmarks, Claude adds file editing, and Google TPUs rise as GPU challengers.

Read time: 11 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (10-Sept-2025):

🧠 Fei-Fei Li, the world-renowned technology visionary in AI and Co-Founders of World Labs in 2024 thinks LLMs are highly limited.

📢 NVIDIA now has actually introduced a real 128GB GDDR7 GPU, but not for gaming with Rubin CPX

💻 The New Nvidia Blackwell Ultra sets reasoning records in MLPerf debut.

📂 Claude now makes real files in chat, creating and editing spreadsheets, documents, slides, and PDFs so users get ready to use outputs fast.

💰 Opinion: Google’s custom chips tensor processing units, or TPUs, are being seen as the strongest alternative to Nvidia’s GPUs

🧠 Fei-Fei Li, the world-renowned technology visionary in AI and Co-Founders of World Labs in 2024 thinks LLMs are highly limited.

Fei-Fei Li on limitations of LLMs. The post went viral on Twitter.

"There's no language out there in nature. You don't go out in nature and there's words written in the sky for you.. There is a 3D world that follows laws of physics. Language is purely generated signal."

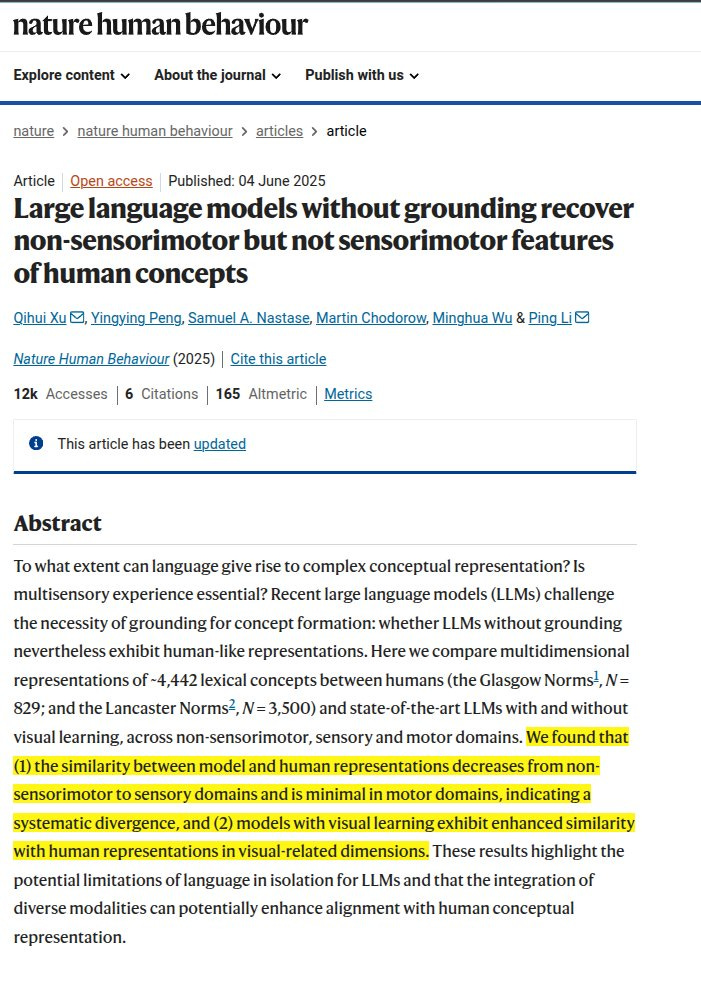

And in-fact there are many research to support her idea.

For example, this study shows that when AI models learn only from text, they can handle things like emotions or abstract ideas fairly well, but they struggle badly with things that require sensing or moving, like touch, vision, or physical action.

When researchers gave the models some visual information, their understanding got better. Means that learning only from language is not enough, because language is just signals humans invented, not something that exists in the real physical world. To really understand the world, models need grounding in actual physical experiences, like seeing and interacting with objects that follow the laws of physics.

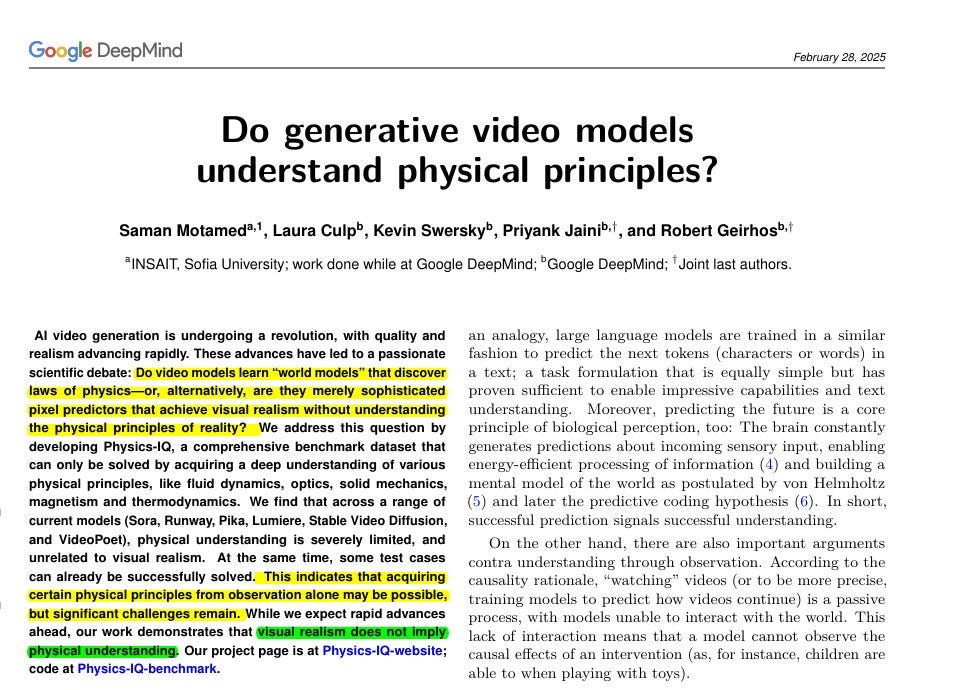

Another study by GoogleDeepMind makes almost the same point, just in the world of video.

The models are just very advanced pattern matchers. They can recreate what looks like reality because they’ve seen so much data, but they don’t know why the world works the way it does. The models can generate clips that look stunningly real, but when you test whether they actually follow basic physics, they fall apart.

The Physics-IQ benchmark shows that visual polish and true understanding are two completely different things. Here, the authors build Physics-IQ, a real-video benchmark spanning solid mechanics, fluids, optics, thermodynamics, and magnetism

Each test shows the start of an event, then asks a model to continue the next seconds. They compare the prediction to the real future using motion checks for where, when, and how much things move.

Scores then roll into a single Physics-IQ number that caps at what 2 real takes agree on. Across popular models, even the strongest sits far below that cap, while multiframe versions usually beat image-to-video versions.

Sora is hardest to tell apart from real videos, yet its physics score stays low, showing realism and physics are uncorrelated. Some cases work, like paint smearing or pouring liquid, but contact and cutting often fail.

This is yet another one, that says AI models trained on linguistic signals fail when the task requires embodied physical common sense in a world with real constraints.

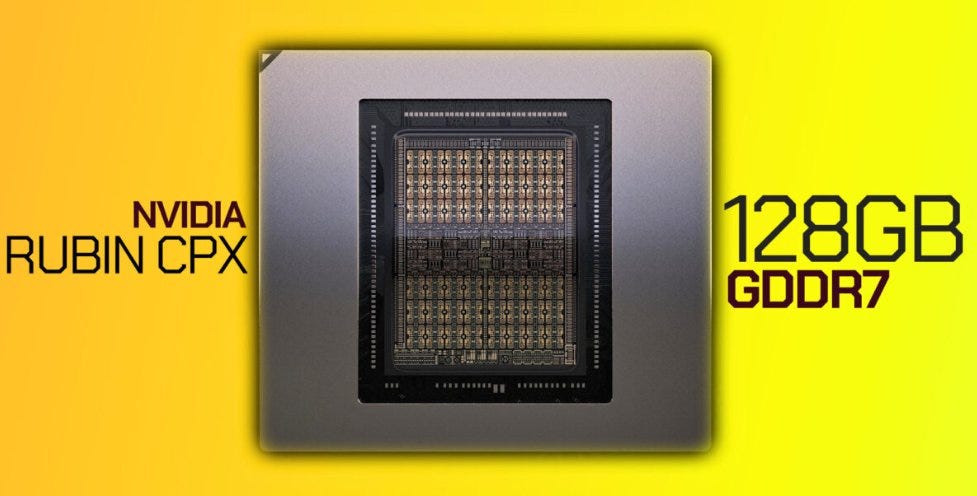

📢 NVIDIA now has actually introduced a real 128GB GDDR7 GPU, but not for gaming with Rubin CPX

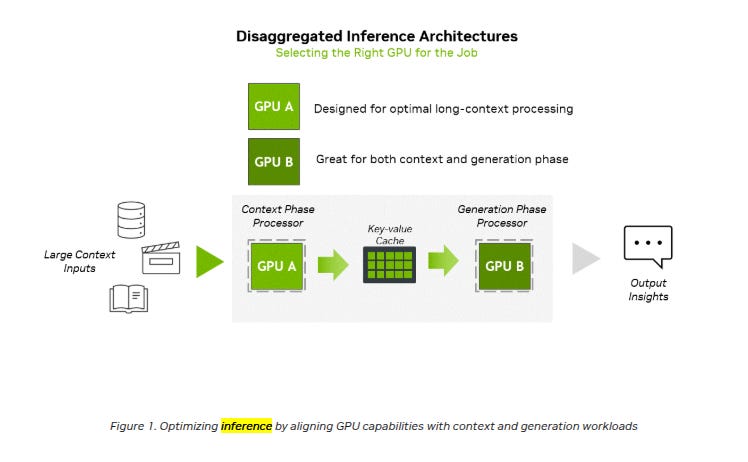

Inference has 2 phases, the context phase is compute-bound while the generation phase is memory-bandwidth-bound, so splitting them lets each side use hardware that actually fits the job.

Rubin CPX targets the context phase where long inputs must be crunched before the first token appears, so throughput per watt is the key metric here.

The chip delivers 30 petaFLOPs NVFP4, packs 128GB GDDR7, has video encode or decode blocks, and claims 3x attention acceleration vs GB300 NVL72, so it is built for long-sequence math and fast token-prep.

NVFP4 is NVIDIA’s 4-bit floating format for inference, so models can push more activations per cycle if accuracy stays within tolerance.

Disaggregation then hands generation to standard Rubin GPUs linked by NVLink, which favor high memory bandwidth to stream tokens while reading the key value cache.

Moving that key value cache quickly is hard, so NVIDIA Dynamo coordinates routing, cache transfer, and scheduling across nodes.

A single rack, the Vera Rubin NVL144 CPX, stitches 144 Rubin CPX, 144 Rubin GPUs, and 36 Vera CPUs into 8 exaFLOPs NVFP4, about 7.5x the GB300 NVL72, with 100TB of fast memory and 1.7PB/s of memory bandwidth.

Networking uses Quantum-X800 InfiniBand or Spectrum-X Ethernet with ConnectX-9 SuperNICs, so the KV cache hops with low latency.

What is the Disaggregated inference: a scalable approach to AI complexity.

It shows how inference is split into 2 phases, context and generation, and how different GPUs are matched to each one.

The context phase needs heavy compute power to process huge inputs before the first token is produced, so GPU A is built just for that.

The generation phase is about streaming tokens quickly, which relies on memory bandwidth, so GPU B handles this part. The key value cache in the middle passes processed context from GPU A to GPU B, making the system efficient without overloading either GPU.

The main point is that by separating context and generation, each GPU does what it is best at, which speeds up inference and reduces wasted resources.

💻 The New Nvidia Blackwell Ultra sets reasoning records in MLPerf debut.

On DeepSeek-R1, Blackwell Ultra reaches 5,842 tokens/s/GPU offline and 2,907 tokens/s/GPU server, which is 4.7x and 5.2x over Hopper numbers.

The Llama 3.1 405B interactive record lands at 138 tokens/s/GPU, and a separate study shows ~1.5x higher per-GPU throughput using disaggregated serving on GB200 NVL72 versus aggregated serving on DGX B200, roughly 5x over a DGX H200 setup.

Under the hood, Blackwell Ultra brings 1.5x higher NVFP4 compute, 2x attention-layer compute, and 1.5x more HBM3e capacity.

A dense NVLink fabric connects 72 GPUs with all-to-all at 1,800 GB/s each for an aggregate 130 TB/s, which feeds multi-GPU shards without choking communication.

NVIDIA quantizes most DeepSeek-R1 weights to NVFP4 and drops the key-value cache to FP8, shrinking memory footprints while using faster 4-bit tensor cores.

They pair expert parallelism for the mixture-of-experts blocks with data parallelism for attention and add ADP Balance to spread context work so throughput stays high and first-token latency stays low.

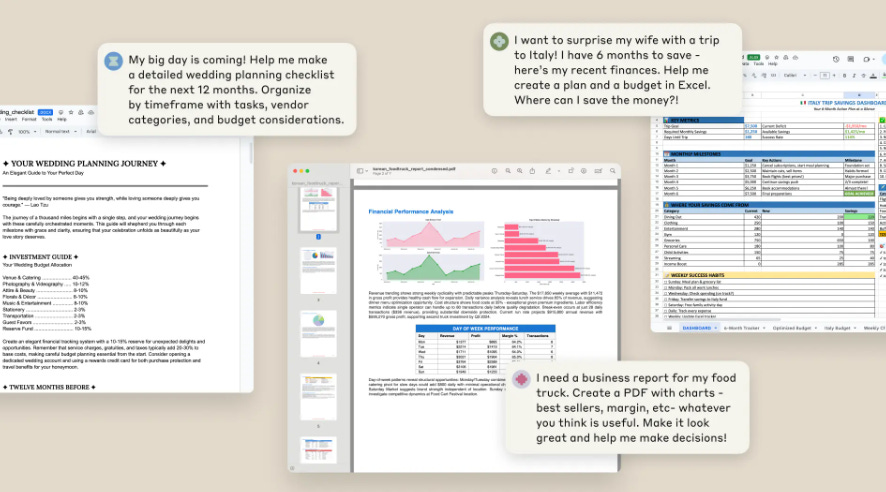

📂 Claude now makes real files in chat, creating and editing spreadsheets, documents, slides, and PDFs so users get ready to use outputs fast

It now builds Excel, Word, PowerPoint, and PDF files from instructions or uploaded data with working formulas, multiple sheets, charts, and short written takeaways.

It converts across formats by turning a PDF into slides, meeting notes into a clean document, or invoices into a structured spreadsheet with calculations.

That setup lets it do statistical work, simple ML models, and visualizations while accepting CSV, TSV, or PDF as inputs for extraction and transformation.

Outputs can be downloaded or saved to Google Drive, and each upload or download is limited to 30MB.

Availability of this feature is a preview for Max, Team, and Enterprise, with Pro access rolling out in the next few weeks once Upgraded file creation and analysis is enabled in settings.

Because the environment can execute code and fetch packages, malicious instructions hidden in files or links are a real risk, so protections include sandbox isolation, injection detectors, admin controls, red teaming, and proxy based allowlists, but active monitoring is still required.

The change moves Claude from advisor to hands on collaborator by shrinking the gap between a request and a finished spreadsheet or deck into a short chat.

Overall this is a strong step toward practical agent workflows, but teams should start with low risk data, tighten install permissions, and keep human review on every generated file.

How does it work ?

From a technical implementation perspective, Claude generates documents by writing and executing code in a private computing environment. This technical architecture ensures that the generated Excel files have correct formula settings and multi-sheet structures, allowing users to download or save them directly to Google Drive for use.

💰 Opinion: Google’s custom chips tensor processing units, or TPUs, are being seen as the strongest alternative to Nvidia’s GPUs

According to some research Analysts say if Google ever spun off this business along with its DeepMind lab, the combined unit could be worth $900 billion, of course they do not expect Google to actually do it right now.

TPUs are chips Google designed specifically for machine learning, and they now rival Nvidia in both speed and cost-efficiency, with performance scaling up to 42.5 exaflops.

Developer activity around TPUs in Google Cloud grew by 96% in just 6 months, showing momentum among engineers and researchers outside of Google itself.

The 6th generation called Trillium is already in high demand, and the upcoming 7th generation Ironwood is expected to see even more interest because it is the first one built for large-scale inference, which is the step where AI models are actually used after training.

Big players like Anthropic and xAI are looking at TPUs because they now come with better software support through JAX, which makes them easier to run at scale compared to before.

According to media reports, Google struck a deal with at least one cloud provider, Fluidstack, a London-based company that will run Google’s Tensor Processing Units (TPUs) out of its New York data center.

Google has also tried making similar arrangements with other providers that specialize in NVIDIA hardware, like Crusoe, which is building a data center for OpenAI using a large fleet of NVIDIA chips, and CoreWeave, which supplies NVIDIA chips to Microsoft and OpenAI.

Google's approach effectively puts it in direct competition with NVIDIA, as NVIDIA primarily sells chips to these cloud service providers.

🗞️ Byte-Size Briefs

Employees at Safe Superintelligence (SSI), co-founded by Ilya Sutskever (ex-OpenAI), are told not to list the company on their LinkedIn. 😀

Partly to keep rivals from trying to hire them away.

According to a new WSJ article.

Meta hired >50 AI researchers, built the TBD Lab with special badge access, and is now dealing with pay gaps, compute fights, and quick exits. The TBD Lab sits near Mark Zuckerberg’s desk, and its member list is hidden on the internal org chart, which creates a status tier.Teams are competing for compute, and a hiring freeze routes new roles to Meta Chief AI Officer Alexandr Wang for approval, which slows moves.

Over the last months Meta's intake includes 21 from OpenAI, 12+ from Google, plus others from xAI and Apple, but several have already left.

That’s a wrap for today, see you all tomorrow.