🌏 Google introduced Gemini 3-powered AI agents to Chrome, we get autonomous in-browser workflows

Google upgrades Chrome with Gemini 3 agents, Grok tops AI video benchmarks, and AI cracks DNA dark matter and writes scientific laws from papers.

Read time: 11 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (29-Jan-2026):

🌏 Google just introduced Gemini 3-powered AI agents to Chrome.

🏆 xAI’s Grok Imagine is #1 across both Text-to-Video and Image-to-Video, on Artificial Analysis Video Arena.

🛠️ BREAKTHROUGH: Google’s AI can now read 1 million DNA letters in a single pass, Decodes 98% of Human DNA’s Dark Matter

📡 Ai2 released Theorizer, open-source code that reads research papers and writes scientific laws with citations.

👨‍🔧 Video to watch: Full Interview: Clawdbot’s Peter Steinberger Makes First Public Appearance Since Launch

🌏 Google introduced Gemini 3-powered AI agents to Chrome, we get autonomous in-browser workflows

The feature is rolling out to AI Pro and Ultra subscribers in the US now.

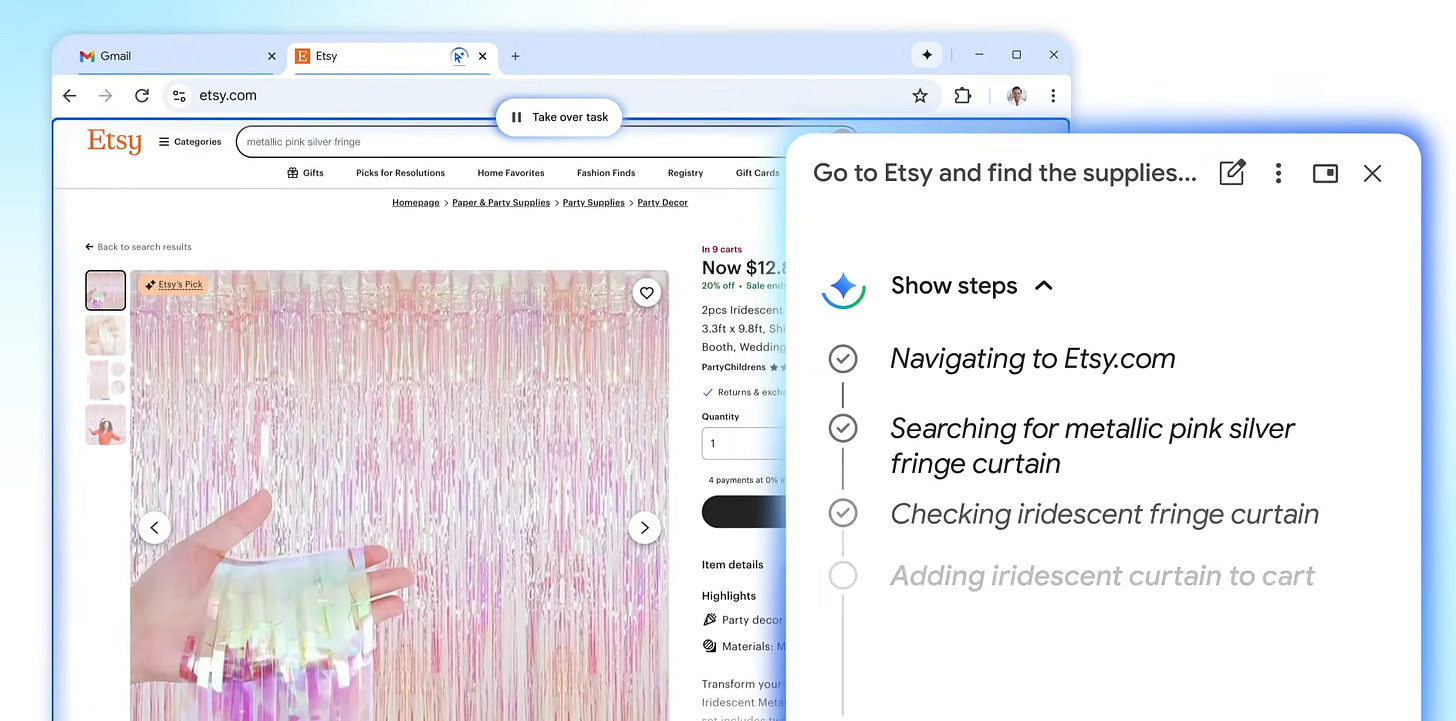

So it’s basically Chrome letting Gemini run a whole web task end to end by clicking around pages, filling forms, switching tabs, and keeping track of what it already tried. It can also use Connected Apps context when you allow it, so it can pull details like dates, locations, and confirmation info from Gmail, Calendar, or Google Flights while it works.

Google says that while using auto browse, Gemini can identify decorations inside of a photo you’re looking at, find similar items on the web, add them to your cart, and apply discount codes, all while staying within your budget. If a task requires you to log into an account, Gemini can also use the browser’s password manager to log in.

Along with this change, Google has moved Gemini in Chrome from a pop-up window to a panel anchored to the right side of your screen. It now supports integrations with Gmail, Calendar, Maps, Google Shopping, and Google Flights for all users, allowing it to reference information from across the apps you use, as well as perform actions within them.

A typical example: you give a goal, it proposes a plan, it navigates and fills things in, then it pauses at risky moments like purchases, reservations, or posting content for you to approve. If you deny a step, it can back out and try an alternative path, like picking a different flight, changing filters, or using a different site.
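To make that approve-or-back-out loop concrete, here is a minimal Python sketch. It is not Google's implementation: `Step`, `RISKY_KINDS`, and `run_plan` are hypothetical names, and the "alternative path" is modeled simply as the next candidate plan in a list.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical step kinds that require explicit user approval,
# taken from the examples in the text (purchases, reservations, posting).
RISKY_KINDS = {"purchase", "reservation", "post"}


@dataclass
class Step:
    kind: str          # e.g. "navigate", "fill_form", "purchase"
    description: str


def run_plan(plans: list[list[Step]], approve: Callable[[Step], bool]) -> list[str]:
    """Try candidate plans in order. Pause on risky steps, and if the user
    denies one, abandon that plan and fall back to the next alternative."""
    log: list[str] = []
    for plan in plans:
        for step in plan:
            if step.kind in RISKY_KINDS and not approve(step):
                log.append(f"denied: {step.description}")
                break  # back out of this plan
            log.append(f"done: {step.description}")
        else:
            return log  # plan finished with no denial
    log.append("gave up: no plan approved")
    return log


# Usage: the user rejects flight A, so the agent falls back to flight B.
plans = [
    [Step("navigate", "open airline A"), Step("purchase", "buy flight A")],
    [Step("navigate", "open airline B"), Step("purchase", "buy flight B")],
]
for line in run_plan(plans, approve=lambda s: "B" in s.description):
    print(line)
```

The design point is that low-risk steps run without interruption, while anything in the risky set blocks on a human decision.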

Chrome is also adding a persistent AI side panel, Nano Banana image transformation, and deeper ties with Gmail, Calendar, YouTube, Maps, and Flights.

On the commerce side, Google is adopting the Universal Commerce Protocol, built with Shopify, Etsy, Wayfair, and Target, to enable smooth agent-driven shopping across the web.

Google has been ramping up the addition of AI features to its browser since a U.S. district judge ruled in September against forcing the company to sell off Chrome as a consequence of being found to hold an illegal monopoly in the market of internet search. Google earlier this month filed to appeal the ruling that it holds a monopoly in search.

By turning Chrome into a front door for an AI assistant that can research, act across sites, and sit in a side panel, Google can argue that users face more ways to get answers, and that Chrome competes on assistant capability, not only on a default search box. That supports Google’s position that the market is becoming more contestable.

It also helps with remedy optics. If Chrome offers choice flows and AI tools that work across many sites and services, Google can say it is aligning with the spirit of the court’s limits on exclusive distribution and with the order to lower barriers for rivals that use search data.

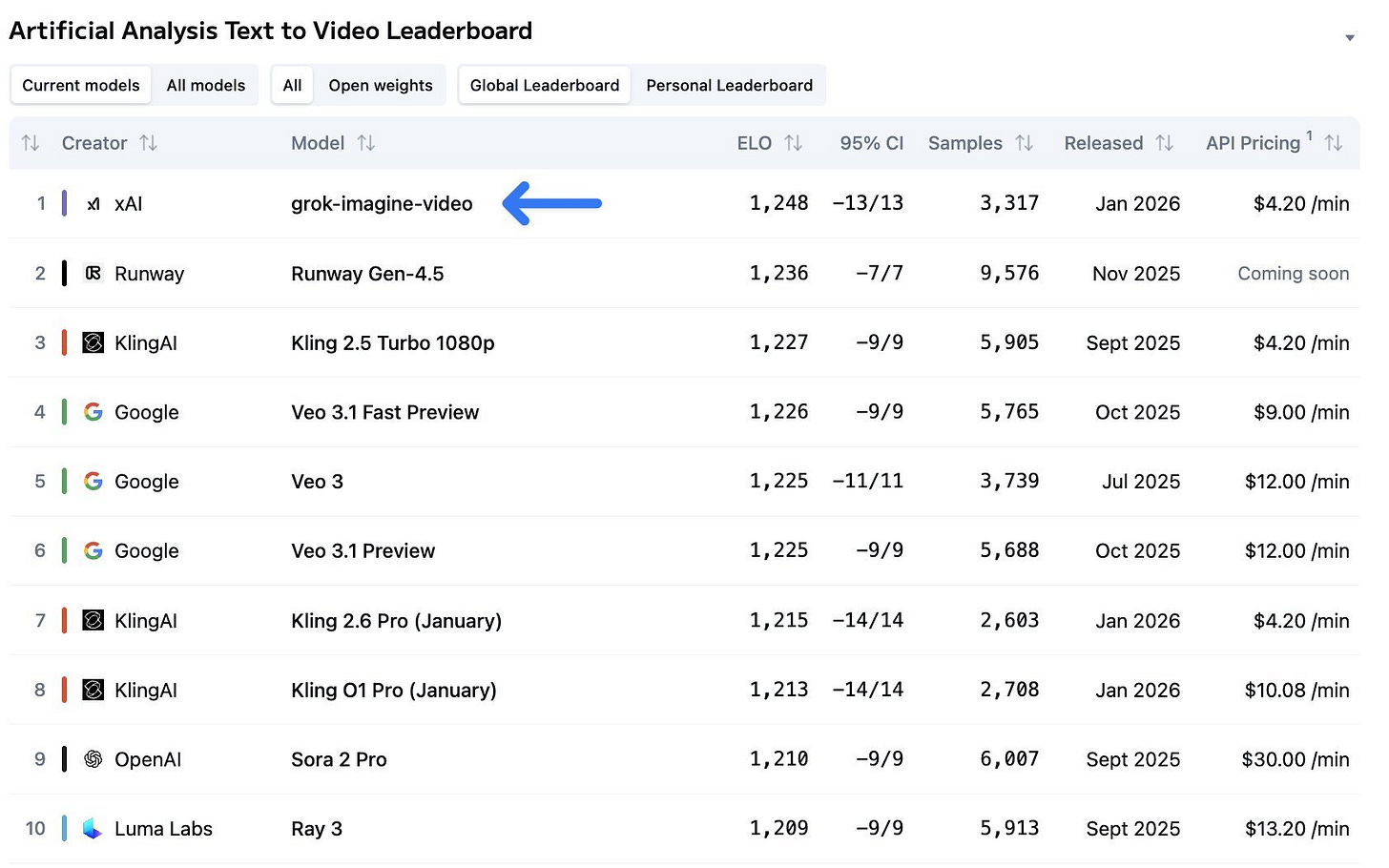

🏆 xAI’s Grok Imagine is #1 across both Text-to-Video and Image-to-Video, on Artificial Analysis Video Arena.

The API is priced at $4.20/min including audio, versus Veo 3.1 Preview at $12/min and Sora 2 Pro at $30/min. The API supports text-to-video, image-to-video, and prompt-based video edits, with 1-15s output, 720p or 480p resolution, and video edits capped at 8.7s input length.
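To see what those per-minute prices mean for a single clip, here is a quick back-of-the-envelope calculation in Python. It assumes billing is linear in clip duration, which the pricing above does not confirm; `clip_cost` is just a helper name for this sketch.

```python
# Per-minute API prices quoted above (USD).
PRICE_PER_MIN = {
    "Grok Imagine": 4.20,
    "Veo 3.1 Preview": 12.00,
    "Sora 2 Pro": 30.00,
}


def clip_cost(price_per_min: float, seconds: float) -> float:
    """Cost of one clip, ASSUMING cost scales linearly with duration."""
    return round(price_per_min * seconds / 60, 2)


# Cost of the longest supported clip (15 seconds) on each model.
for model, price in PRICE_PER_MIN.items():
    print(f"{model}: ${clip_cost(price, 15):.2f} for a 15s clip")
```

Under that assumption, a 15-second clip comes to $1.05 on Grok Imagine, $3.00 on Veo 3.1 Preview, and $7.50 on Sora 2 Pro.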

On the Artificial Analysis leaderboards, Grok Imagine is now ahead of models like Runway Gen-4.5 and Kling 2.5 Turbo. Also note, Artificial Analysis’ arena playback is “video without audio,” so the #1 rank reflects visuals, and audio still needs separate evaluation by buyers.

Hitting state of the art this fast is no small thing. As quality maxes out, costs should keep dropping 10x+ each year, which in my opinion makes movie-length generation at acceptable quality very possible.

🛠️ BREAKTHROUGH: Google’s AI can now read 1 million DNA letters in a single pass, Decodes 98% of Human DNA’s Dark Matter

The tool focuses on interpreting how variations in DNA influence gene regulation, a critical factor behind many inherited diseases and cancers.

AlphaGenome can read 1 million DNA letters at once and still notice a single-letter change.

This is a BIG deal because many health problems come from tiny DNA edits that sit far away from the genes they influence, and older tools often missed these long-range effects.

While earlier models either analysed long DNA sequences or provided highly detailed predictions, AlphaGenome achieves both. It can analyse up to one megabase (1 Mb) of DNA at a time while delivering predictions down to a single DNA letter across thousands of biological signals.
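To picture what "one megabase of context, single-letter resolution" means for a variant, here is a small Python sketch of the input preparation only. It does not call the AlphaGenome API: `window_around` and `apply_snv` are hypothetical helpers, and the 1,000,000-base window is the round figure quoted above.

```python
WINDOW = 1_000_000  # one megabase, the context length quoted above


def window_around(pos: int, chrom_len: int, window: int = WINDOW) -> tuple[int, int]:
    """Return a 0-based half-open [start, end) window of `window` bases
    centred on `pos`, shifted so it stays inside the chromosome."""
    window = min(window, chrom_len)
    start = max(0, pos - window // 2)
    end = min(chrom_len, start + window)
    start = end - window  # shift left if the right edge was clipped
    return start, end


def apply_snv(seq: str, offset: int, ref: str, alt: str) -> str:
    """Return `seq` with the single base at `offset` changed from ref to alt."""
    if seq[offset] != ref:
        raise ValueError(f"expected {ref!r} at offset {offset}, found {seq[offset]!r}")
    return seq[:offset] + alt + seq[offset + 1:]


# Usage: a variant in the middle of a (made-up) 5 Mb chromosome.
print(window_around(2_500_000, 5_000_000))  # (2000000, 3000000)
print(apply_snv("ACGT", 2, "G", "T"))       # ACTT
```

A model of this kind would then be run on the reference window and on the same window with the one-letter change, and the two sets of predictions compared.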

Today, researchers sift through millions of DNA differences to find the handful that matter. With this model, they can score changes in minutes, then spend lab time on the most promising ones.

That can accelerate rare disease diagnosis by spotlighting harmful changes outside genes, sharpen cancer studies by explaining how hidden switches turn genes on, and help drug discovery by linking DNA changes to gene activity in the right tissues.

So how will it improve gene analysis?

AlphaGenome predicts how mutations disrupt gene regulation, including when genes are activated, which cell types are affected, and how strongly genes are expressed. Most common conditions such as heart disease, autoimmune disorders, mental health conditions, and many cancers are linked to regulatory mutations rather than changes in protein-coding genes. Identifying these subtle but impactful changes has remained a major scientific challenge.

The team released research code and weights for non-commercial use, plus a hosted API, so hospitals and labs can try it in studies.

The AlphaGenome model and weights are on GitHub, available to scientists around the world to further accelerate genomics research.

📡 Ai2 released Theorizer, open-source code that reads research papers and writes scientific laws with citations.

The big deal is that it aims at “making theories” instead of “making summaries,” which is closer to what a scientist actually needs when deciding what to test next.

Another key point is traceability: each rule is tied to real evidence, so a human can quickly check whether the tool is bullshitting or the rule is solid.

A normal summary says, “This paper did X and got Y,” but Theorizer tries to say, “Across many papers, a repeatable pattern is Z,” and it attaches the proof links for Z.

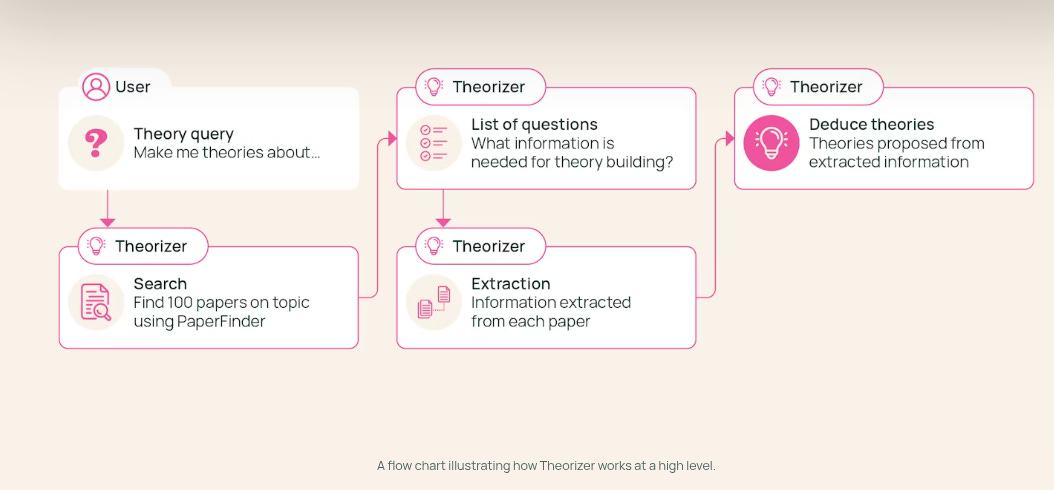

It outputs each claim in a simple package: a LAW, the SCOPE where that law seems to hold, and the EVIDENCE which is the specific paper results it used. So instead of you reading 50-100 papers and trying to notice the pattern yourself, it tries to do the pattern-spotting and rule-writing automatically.

Under the hood it does 2 jobs, first it extracts structured facts from each paper, then it combines those facts into higher-level rules. A law is the pattern, scope states boundary conditions and exceptions, and evidence links the law to extracted results in papers.
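The LAW / SCOPE / EVIDENCE package maps naturally onto a small data structure. The Python sketch below is an illustration only, not Theorizer's actual schema: the class and field names are assumptions, and the example values are placeholders rather than real findings.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    paper_id: str   # hypothetical identifier for the source paper
    finding: str    # the extracted result that supports the law


@dataclass
class Theory:
    law: str        # the repeatable pattern
    scope: str      # boundary conditions and exceptions
    evidence: list[Evidence] = field(default_factory=list)

    def is_traceable(self) -> bool:
        """A law with no linked evidence cannot be checked by a human."""
        return len(self.evidence) > 0


# Usage with placeholder content (not real results).
theory = Theory(
    law="<pattern observed across papers>",
    scope="<conditions under which the pattern holds>",
    evidence=[
        Evidence("paper-001", "<extracted result 1>"),
        Evidence("paper-002", "<extracted result 2>"),
    ],
)
print(theory.is_traceable(), [e.paper_id for e in theory.evidence])
```

Keeping evidence as explicit records attached to each law is what makes the spot-checking described above possible.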

It rewrites the query for search, uses PaperFinder and Semantic Scholar to download papers, then converts them to text with optical character recognition (OCR).

It generates a query-specific extraction schema, then GPT-5 mini fills it per paper into structured records.

GPT-4.1 aggregates those records into candidate laws, runs self-reflection to tighten consistency and attribution, and may subsample evidence when context is tight.

Theory quality is scored by a language model as a judge, and predictive accuracy is backtested on later papers, where precision is support rate and recall is checkable coverage.
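Here is one way to read those two backtesting metrics, as a Python sketch. The verdict labels and the exact formulas are my interpretation, not Ai2's published definitions: precision is taken as the share of checkable predictions that later papers support, and recall as the share of all predictions that later papers allow you to check at all.

```python
from collections import Counter


def backtest_scores(verdicts: list[str]) -> tuple[float, float]:
    """Compute (precision, recall) from per-prediction verdicts.

    Each verdict is one of "supported", "contradicted", or "no_evidence"
    (hypothetical labels for this sketch).
    """
    counts = Counter(verdicts)
    checkable = counts["supported"] + counts["contradicted"]
    precision = counts["supported"] / checkable if checkable else 0.0
    recall = checkable / len(verdicts) if verdicts else 0.0
    return precision, recall


# Usage: 4 predictions, 3 of them checkable, 2 of those supported.
precision, recall = backtest_scores(
    ["supported", "supported", "contradicted", "no_evidence"]
)
print(f"precision={precision:.2f} recall={recall:.2f}")
```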

The literature-supported mode is almost 7x more expensive than parametric-only generation, takes 15-30 minutes per query, depends on open-access coverage, and comes with about 3,000 theories across AI and natural language processing (NLP) for benchmarking.

Outputs are hypotheses and can be wrong, so the sweet spot is fast literature compression with human verification.

👨‍🔧 Video to watch: Full Interview: Clawdbot’s Peter Steinberger Makes First Public Appearance Since Launch

So what’s happening with Clawdbot?

“Clawdbot,” now renamed Moltbot following a trademark request from Anthropic, is a viral open-source AI agent created by Peter Steinberger. It enables users to chat directly with their local computer via mobile apps like WhatsApp.

Unlike standard chatbots, Moltbot gives an LLM (like Claude Opus) access to your machine’s terminal and file system. Using “Agentic Engineering,” it interacts with software via command lines rather than visual interfaces. This allows it to autonomously execute code, troubleshoot system errors, convert files, and control smart home devices without hard-coded instructions. It essentially turns your local hardware into an autonomous agent you can text, signaling a major shift from cloud-hosted SaaS to personalized, local AI utility.

If you’ve seen a GitHub star chart going vertical this week, you’re looking at Moltbot (formerly known as Clawdbot). Created by Peter Steinberger—a software veteran who returned from a four-year retirement just to “mess with AI”—this project has inadvertently become the manifesto for a new era of computing.

Steinberger started with a simple, personal desire: he wanted to chat with his computer via WhatsApp. Not a cloud server, but his actual local Mac Mini.

The result is a hacked-together masterpiece that bridges mobile chat interfaces with local hardware. By giving an LLM access to his terminal, file system, and smart home devices, Steinberger created an agent that can troubleshoot file formats, control his Sonos, and even act as an over-zealous alarm clock, all without explicit hard-coding.

The Core Concept: Agentic Engineering

Steinberger coined a new term during his interview: “Agentic Engineering.” The premise is simple but radical. Instead of building user interfaces for humans, developers should build Command Line Interfaces (CLIs) for models.

If you give an AI a help menu and a tool, it will figure out how to use it. You don’t need to build the logic; you just need to provide the access. As Steinberger puts it, “Don’t build it for humans, build it for models.”
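As a rough illustration of "build it for models," here is a tiny Python CLI whose help text is the whole interface. This is not Moltbot code: the `speaker` tool, its subcommands, and the `run` helper are hypothetical, and the commands only return strings instead of touching real hardware.

```python
import argparse
import shlex


def build_parser() -> argparse.ArgumentParser:
    """A self-describing CLI: the help text is what the model reads."""
    parser = argparse.ArgumentParser(
        prog="speaker", description="Control a (hypothetical) home speaker."
    )
    sub = parser.add_subparsers(dest="command", required=True)

    play = sub.add_parser("play", help="Start playback of a named playlist.")
    play.add_argument("playlist", help="Playlist name, e.g. 'morning'.")

    vol = sub.add_parser("volume", help="Set the volume.")
    vol.add_argument("level", type=int, choices=range(0, 101),
                     metavar="LEVEL", help="Volume from 0 to 100.")
    return parser


def run(command_line: str) -> str:
    """Execute a command line the model produced and return the result text."""
    args = build_parser().parse_args(shlex.split(command_line))
    if args.command == "play":
        return f"playing {args.playlist}"
    return f"volume set to {args.level}"


# The help text is what you would hand to the model as its "manual".
help_text = build_parser().format_help()
print(help_text)
print(run("volume 30"))
```

The agent gets `help_text` in its context and emits plain command lines such as `volume 30`; there is no task-specific logic beyond exposing the tool.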

The Death of the App?

The most disruptive takeaway from Moltbot’s success is the potential obsolescence of the traditional SaaS app. Steinberger argues that specialized apps—like fitness trackers or food loggers—are red tape.

In his view, the future isn’t subscribing to 25 different services. It is having one hyper-personalized agent that knows your context, has root access to your data, and solves problems via API calls. Why use a fitness app when your agent can read your food logs and autonomously update your workout schedule?

The “Overnight” Success

Despite venture capitalists frantically trying to throw money at him, Steinberger is keeping the project open-source (likely moving toward a foundation-style governance model). He views this not as a product to be sold, but as a playground to prove that the future of AI isn’t just in the browser; it’s on the metal of your local machine.

That’s a wrap for today, see you all tomorrow.