🧠 Google just introduced an open-source translation model family designed to run efficiently on edge

Google drops efficient edge translation models, Anthropic shows Claude's scientific utility, Zhipu trains on Huawei chips, and OpenAI eyes ads with a new ChatGPT tier.

Read time: 8 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (16-Jan-2026):

🧠 Google just introduced an open-source translation model family designed to run efficiently on edge

🧪 In a new study, Anthropic explains how scientists are relying on Claude to speed up the research process.

🏆 China’s Zhipu AI breaks US chip reliance with first major model trained on Huawei stack

📡 OpenAI is planning to expand ChatGPT access by pairing a cheaper paid tier with limited advertising on free usage.

🧠 Google just introduced an open-source translation model family designed to run efficiently on edge

TranslateGemma offers decent translation quality in small, open models you can run locally, so you do not need to call a large paid API for every sentence. The practical use case is on-device or low-cost translation for apps like chat, customer support, travel, and document reading, where latency, privacy, or offline use matters.

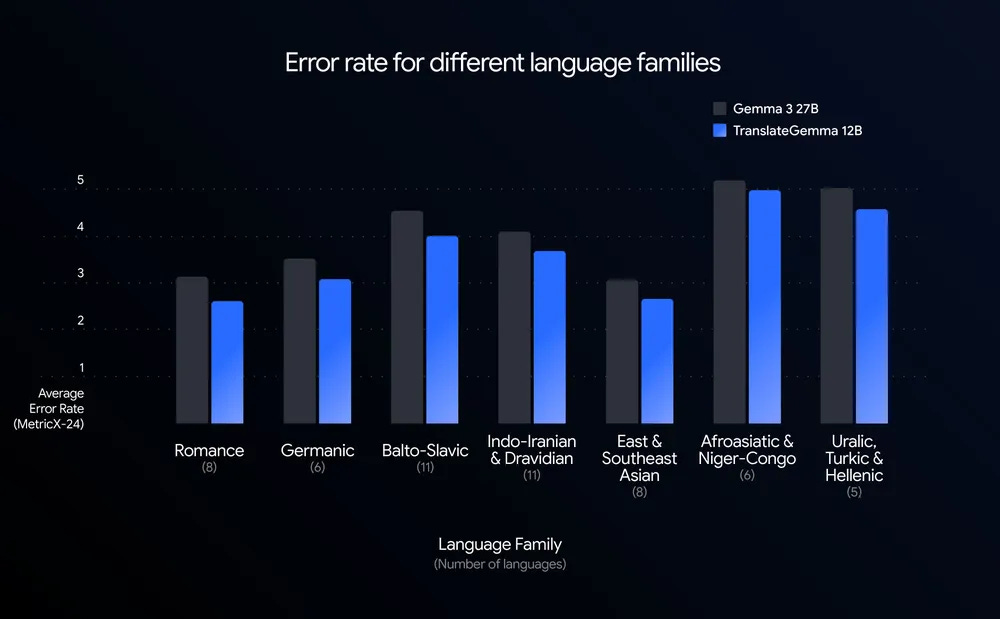

In evaluation, the 12B model beats a Gemma 3 27B baseline on WMT24++ using MetricX.

The core idea is distillation, taking translation behavior from larger Gemini models and compressing it into an open model.

Training uses a 2-stage recipe that combines supervised learning with reinforcement learning to push translation quality higher.

Supervised fine-tuning (SFT) uses parallel text, mixing human translations with synthetic translations generated by Gemini for broad coverage.

Reinforcement learning (RL) then uses an ensemble of reward models, including MetricX-QE and AutoMQM, to prefer more natural and context-faithful outputs.
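
As a rough illustration of how an ensemble of reward signals might be folded into one number, here is a minimal Python sketch. The function name, weights, and score ranges are assumptions for illustration, not details from Google's recipe. In particular, it assumes one error-style score where lower is better and one quality-style score where higher is better.

```python
from dataclasses import dataclass


@dataclass
class RewardScores:
    """Scores for one candidate translation (all values hypothetical)."""
    error_score: float    # error-style metric: lower is better, assumed range [0, max_error]
    quality_score: float  # quality-style metric: higher is better, assumed range [0, 1]


def ensemble_reward(scores: RewardScores, max_error: float = 25.0,
                    w_error: float = 0.5, w_quality: float = 0.5) -> float:
    """Combine two reward signals into a single value in [0, 1]."""
    # Clip the error score, then flip it so that higher means better.
    clipped = min(max(scores.error_score, 0.0), max_error)
    error_as_reward = 1.0 - clipped / max_error
    # Weighted average of the two signals (weights are illustrative).
    total = w_error + w_quality
    return (w_error * error_as_reward + w_quality * scores.quality_score) / total


candidates = {
    "literal": RewardScores(error_score=5.0, quality_score=0.6),
    "fluent": RewardScores(error_score=2.5, quality_score=0.8),
}
# Pick the candidate the ensemble prefers.
best = max(candidates, key=lambda name: ensemble_reward(candidates[name]))
print(best)
```

In an RL loop, a combined score like this would be the signal the policy is pushed to increase. A real ensemble would likely need more careful calibration between metrics.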

WMT24++ is a translation benchmark spanning 55 languages, including high-, mid-, and low-resource settings.

MetricX is an automatic translation quality metric, and it shows 12B surpassing the larger 27B baseline despite having under half the parameters.

The 4B model is reported to rival the 12B baseline, which makes it a strong candidate for mobile inference.

Across the 55 languages, TranslateGemma reduces error rates compared to the baseline Gemma model.

Beyond the main 55 language pairs, training also covers nearly 500 additional pairs as a starting point for later fine-tuning, but without confirmed metrics yet.

The models also keep Gemma 3’s multimodal behavior, and improvements in text translation carry over to translating text inside images on the Vistra benchmark.

Deployment targets range from edge devices at 4B, to consumer laptops at 12B, to a single NVIDIA H100 GPU or Tensor Processing Unit (TPU) in the cloud at 27B.
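
A back-of-the-envelope way to see why these size tiers map to different hardware is to estimate the memory needed just to hold the weights. The sketch below assumes common precisions (16-bit, 8-bit, 4-bit) and ignores activations, the KV cache, and runtime overhead, so real requirements are higher. The precision choices are illustrative assumptions, not figures from Google.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


# Print a small table for the three model sizes mentioned above.
for size in (4, 12, 27):
    row = ", ".join(
        f"{bits}-bit: {weight_memory_gb(size, bits):.1f} GB" for bits in (16, 8, 4)
    )
    print(f"{size}B -> {row}")
```

Under these assumptions, the 27B model at 16-bit needs about 54 GB for weights, which fits on an 80 GB H100, while the 4B model at 4-bit needs roughly 2 GB.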

The weights are distributed through Hugging Face and Kaggle, with recipes in the Gemma Cookbook and an option to deploy in Vertex AI.

This looks most useful for teams that need offline translation, or want predictable costs without a server round trip.

🧪 In a new study, Anthropic explains how scientists are relying on Claude to speed up the research process.

How scientists are using Claude to accelerate research

Anthropic spoke with 3 labs where Claude is reshaping research and starting to point toward novel scientific discoveries. Across the examples, projects that used to take weeks or months are compressed into minutes or hours. The shared approach is to connect the model to databases and software and to add guardrails, so results stay verifiable.

Stanford’s Biomni bundles hundreds of biomedical tools so a Claude-powered agent can operate across 25 subfields from a plain-English request.

In an early trial it completed a genome-wide association study (GWAS), which links genetic variants to traits, in 20 minutes instead of months, and separate case studies report it handled 450 wearable files from 30 people in 35 minutes versus 3 weeks. Biomni also analyzed 336,000 embryonic cells to recover known gene regulators and suggest new transcription factors, and it can be taught expert workflows as reusable skills when the default approach is wrong.

Another lab runs CRISPR screens, which turn off thousands of genes to see what breaks. The hard part is interpreting the huge piles of results.

They built MozzareLLM, which takes groups of genes that seem related, suggests what function they might share, flags which genes are poorly studied, and gives confidence levels so the lab knows what is worth chasing. When they tested multiple AI models, Claude did best, including correctly spotting an RNA modification pathway that other models wrote off as noise.

A 3rd lab is using Claude to help choose which genes to test in smaller experiments, because 1 focused screen can cost over $20,000 and humans often pick targets by manual guessing in a spreadsheet. They built a map of known molecules and how they relate, then Claude navigates that map to propose gene targets, and they plan to test this on primary cilia and compare Claude’s picks to human picks and to a whole-genome screen. The big deal is speed plus scale, because it lets labs do more experiments, faster, with the same people and budget.

🏆 China’s Zhipu AI breaks US chip reliance with first major model trained on Huawei stack

The 16B-parameter GLM-Image model was trained entirely on Huawei’s Ascend chips and software, with no use of US semiconductors.

From data preparation to the final training run, training was conducted on Huawei’s Ascend Atlas 800T A2 server, incorporating the company’s in-house Ascend AI processors, and with MindSpore, Huawei’s all-in-one machine learning framework.

Zhipu AI says the model performs especially well on text-heavy images and even outperforms Nano Banana Pro on accuracy tests.

It still lags behind models like Nano Banana Pro and Seedream in overall image quality, but it’s fully open-source under a permissive license.

👨‍🔧 Architecture

Zhipu’s model has a hybrid architecture that combines autoregressive and diffusion elements. It generates images in 2 steps: an autoregressive (AR) transformer predicts discrete semantic tokens, then a diffusion decoder renders pixels.

The model pairs a 9B GLM-based generator with a 7B diffusion transformer (DiT) decoder, for 16B total parameters.

The generator predicts semantic vector-quantized (semantic-VQ) tokens, a compressed representation that keeps layout and meaning.

A diffusion transformer (DiT) decoder then reconstructs detail with flow matching and Glyph-byT5 character embeddings for cleaner text.
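
For readers unfamiliar with flow matching, the toy sketch below shows the sampling side of the idea: start from noise and follow a velocity field toward the data with small Euler steps. It is not GLM-Image's decoder. The velocity function is a hand-written oracle for a straight-line path to a known target, standing in for a trained network, and all names are hypothetical.

```python
import random
from typing import Callable, List

Vector = List[float]


def oracle_velocity(x: Vector, t: float, target: Vector) -> Vector:
    """Velocity of the straight-line path that reaches `target` at t = 1.

    In a real model, a neural network would predict this from
    (x, t, conditioning) instead of being handed the target.
    """
    return [(target_i - x_i) / (1.0 - t) for x_i, target_i in zip(x, target)]


def euler_sample(x0: Vector, velocity: Callable[[Vector, float], Vector],
                 steps: int = 10) -> Vector:
    """Integrate dx/dt = velocity(x, t) from t = 0 to t = 1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt  # t stays strictly below 1, so (1 - t) is never zero
        v = velocity(x, t)
        x = [x_i + dt * v_i for x_i, v_i in zip(x, v)]
    return x


random.seed(0)
target = [0.2, -0.5, 0.9]                          # stand-in for "data"
noise = [random.gauss(0.0, 1.0) for _ in target]   # starting point
sample = euler_sample(noise, lambda x, t: oracle_velocity(x, t, target))
print([round(v, 6) for v in sample])
```

Because the path is a straight line, the Euler steps land on the target up to floating-point error. A learned velocity field would only approximate this.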

Post-training uses group relative policy optimization (GRPO) with optical character recognition (OCR) and a vision-language model (VLM) reward, plus LPIPS, a perceptual similarity metric.
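
The "group relative" part of GRPO can be sketched in a few lines: sample several outputs for the same prompt, score each one, and normalize each reward against the group's mean and standard deviation to get an advantage. The reward values below are made up, and the way GLM-Image weights its OCR, VLM, and LPIPS signals is not covered here, so the sketch takes one already-combined reward per sample. Using the population standard deviation is also an illustrative choice.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize rewards within one group of samples for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    # eps avoids division by zero when every sample gets the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical combined rewards for 4 images generated from one prompt.
rewards = [0.2, 0.5, 0.8, 0.5]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Samples that beat the group average get a positive advantage and are reinforced, while below-average samples are pushed down, without needing a separate value model.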

📡 OpenAI is planning to expand ChatGPT access by pairing a cheaper paid tier with limited advertising on free usage.

“In markets where Go has been available, we’ve seen strong adoption and regular everyday use for tasks like writing, learning, image creation, and problem-solving,” the company’s announcement stated.

For $8 per month, Go subscribers get more messages, file uploads, and image generation than users on the free ChatGPT tier. The price slots Go between the free version of the AI chatbot and the $20-a-month “Plus” subscription tier.

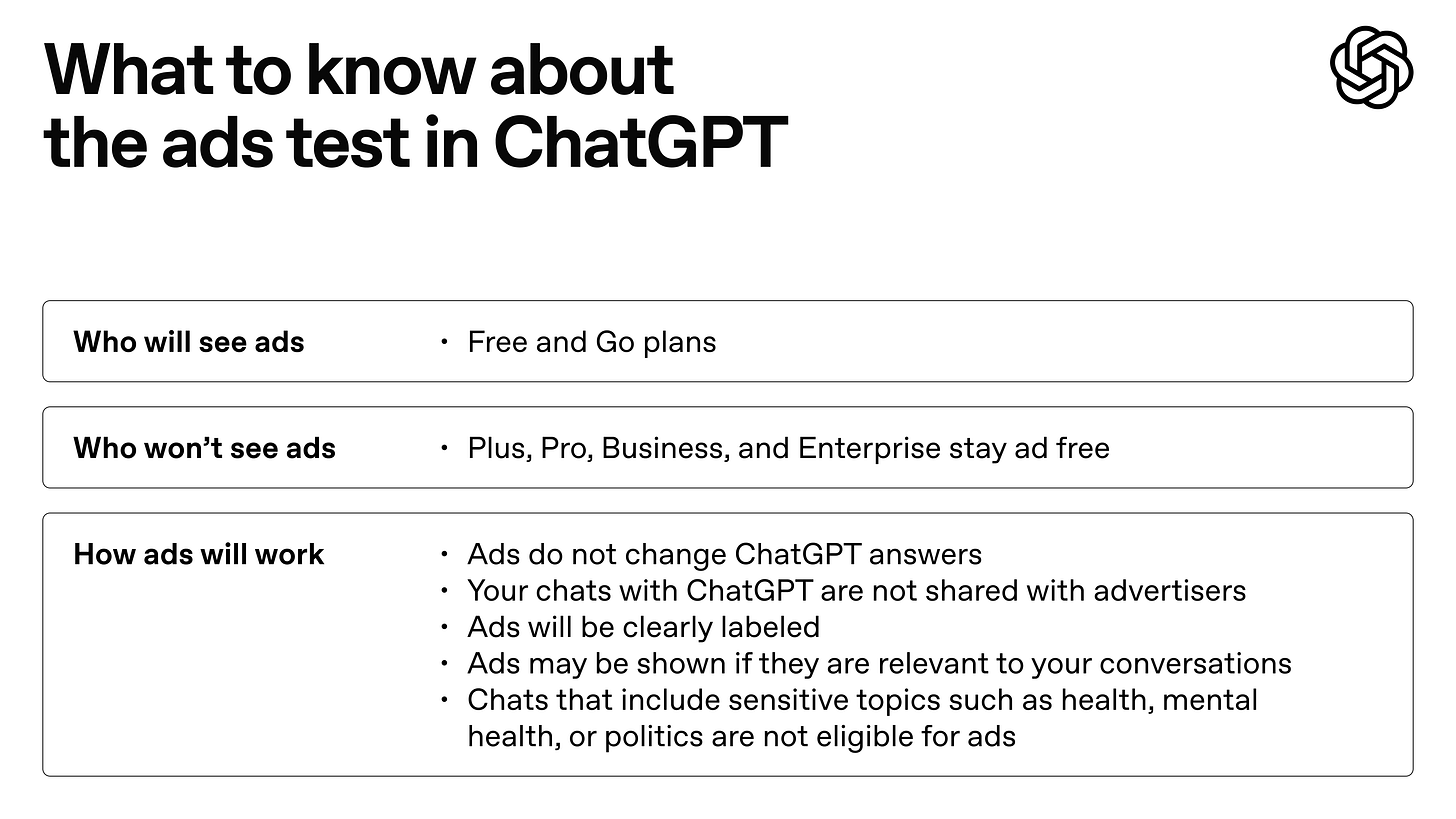

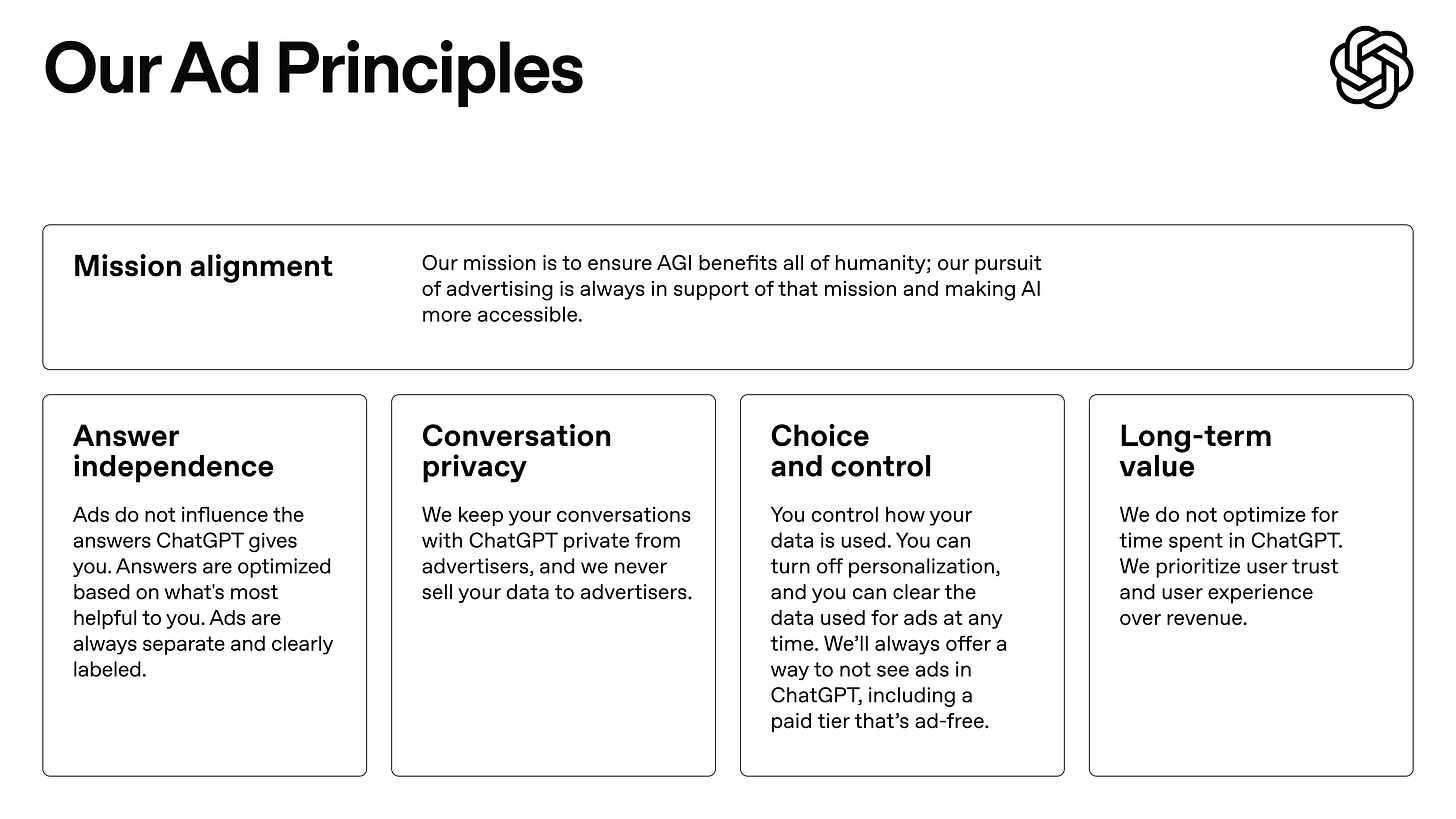

Ads are planned for testing in the U.S. soon on Free and Go, while Pro, Business, and Enterprise stay ad-free. The first ad test is meant to place clearly labeled sponsored items at the bottom of answers when relevant.

OpenAI says ads will not change what ChatGPT answers, and ads stay separate from the response. The plan also says conversations are not shared or sold to advertisers, and personalization can be turned off.

Users should be able to see why an ad appeared, dismiss it, and clear the data used for ads. The test is intended for logged-in adults, with ads blocked for under-18 users and near sensitive topics like health, mental health, and politics.

Advertisers also won’t be able to influence answers displayed in chat, according to OpenAI. While advertisements will be personalized by default, the company said users will be able to opt out of this and turn off personalization for ads at any time.

OpenAI is being extremely careful about how it rolls out ads, trying to make sure they don’t feel intrusive. That’s understandable, especially after users reacted badly when a Target shopping integration was mistaken for an ad inside ChatGPT. Still, OpenAI doesn’t really have the luxury of moving slowly. It needs to find a real path to profits soon. The company is expected to remain deeply in the red well into the next decade, and with so much money riding on AI becoming a cash machine, investor patience won’t last forever. On top of that, most people aren’t paying for tools like ChatGPT, and there’s no clear profit plan that doesn’t rely on some massive, world-changing breakthrough that might never happen.

My take: if the separation between answers and ads is strictly enforced, ads can lower costs without breaking usefulness.

That’s a wrap for today, see you all tomorrow.
