🧠 Google launched Gemini 3, its ‘most intelligent’ AI model yet

Google drops Gemini 3, OpenAI's money math leaks, AA-Omniscience pushes LLM evals, infra cost pressures grow, and Jensen Huang breaks down why AI ≠ traditional software.

Read time: 9 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (18-Nov-2025):

🧠 Google launched Gemini 3, its ‘most intelligent’ AI model yet

🧪 Artificial Analysis launched a new benchmark, AA-Omniscience, to test LLM knowledge and hallucination.

💰 OpenAI’s leaked numbers show very high revenue flowing through Microsoft while the cost of running models may still be even higher than what OpenAI brings in.

🏗️ AI data center spending is exploding, but the hard limits of power grids and the need for real customer revenue may slow or shrink what actually gets built.

🛠️ Jensen Huang explains the difference between AI and traditional Software and why there’s no bubble.

🧠 Google launched Gemini 3, its ‘most intelligent’ AI model yet

The model hits a massive 1501 Elo score on the LMArena Leaderboard and pushes Google’s AI into a new era of agentic capabilities.

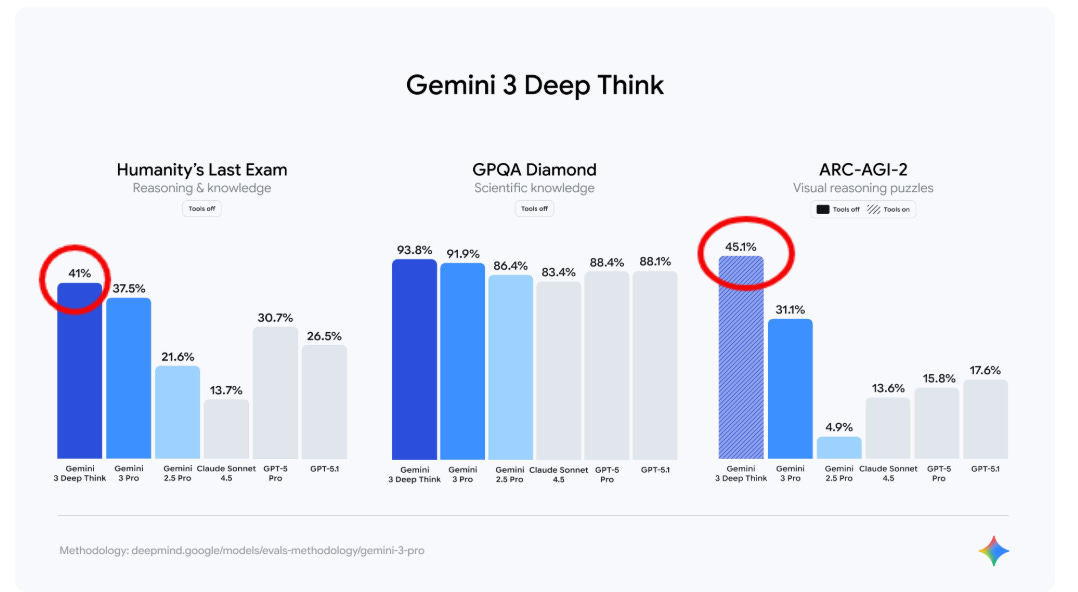

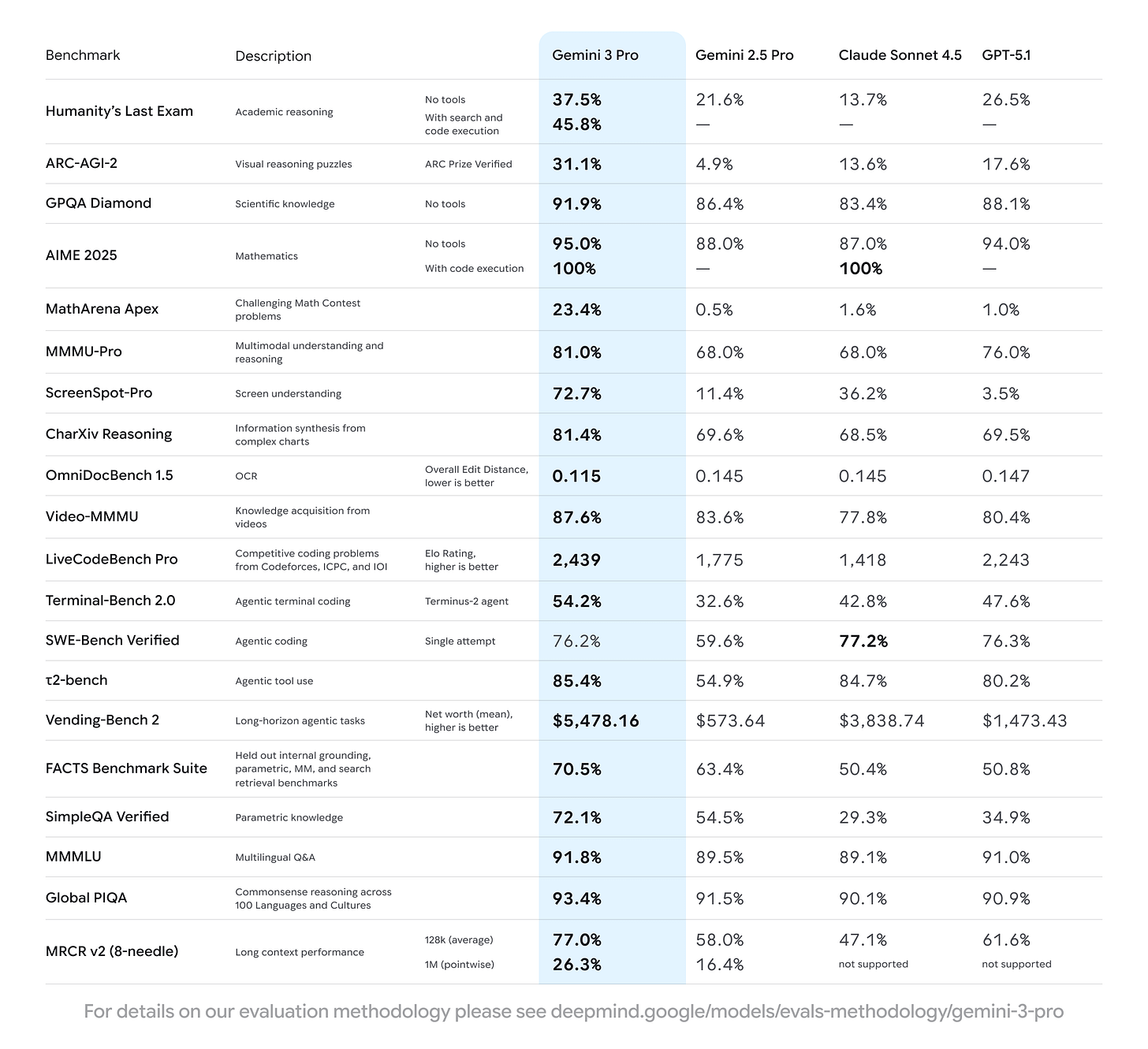

It also sets a new standard for frontier models in mathematics, achieving a new state-of-the-art of 23.4% on MathArena Apex.

It also comes with a 1 million-token context window.

World-leading multimodal understanding: It can take in any kind of input you give it – from text to video to code – and respond with whatever best suits your needs. Ask Gemini to break down concepts from a long video or quickly turn a research paper into an interactive guide.

The most significant update here is something Google calls Gemini 3 Deep Think. This is a specialized reasoning mode designed to solve complex math, science, and coding problems by “thinking” through them before answering.

The “Deep Think” mode will be rolling out to subscribers of the Google AI Ultra plan in the coming weeks.

According to Google, the Gemini app currently has more than 650 million monthly active users, and 13 million software developers have used the model as part of their workflow.

Alongside the base model, Google also released a Gemini-powered coding interface called Google Antigravity, allowing for multi-pane agentic coding similar to agentic IDEs like Warp or Cursor 2.0. In this environment, the AI agent has direct access to the code editor, the terminal, and the browser.

Specifically, Antigravity combines a ChatGPT-style prompt window with a command-line interface and a browser window that can show the impact of the changes made by the coding agent.

Google is also not-so-subtly jabbing at OpenAI, describing Gemini 3 Pro as less prone to the type of empty flattery espoused by ChatGPT. Doshi says you’ll see “noticeable” changes to Gemini 3 Pro’s responses, which Google describes as “smart, concise and direct, trading cliche and flattery for genuine insight — telling you what you need to hear, not just what you want to hear.”

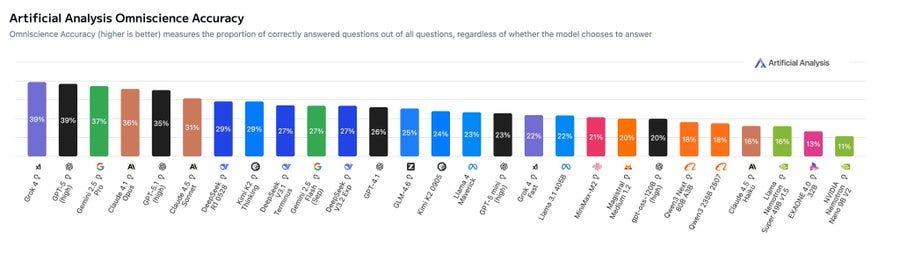

🧪 Artificial Analysis launched a new benchmark, AA-Omniscience, to test LLM knowledge and hallucination.

It penalizes wrong answers instead of only rewarding right ones, so guessing hurts a model’s score. Most older benchmarks score Accuracy alone, so a model that answers everything can look strong even if it confidently makes things up.

AA-Omniscience adds an abstain path that counts as 0, while correct is +1 and incorrect is -1, so the safest behavior under uncertainty is to say “I do not know.” This directly measures knowledge reliability, which is the balance between knowing facts and knowing when to stop.
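To make the scoring rule concrete, here is a minimal sketch of how a +1 / 0 / -1 scheme changes the ranking between a model that always guesses and one that abstains when unsure. The function names, the two example models, and the scaling of the mean score to a -100 to 100 range are my assumptions for illustration, not Artificial Analysis’s published code.

```python
from collections import Counter

# Scoring rule as described above: correct = +1, incorrect = -1, abstain = 0.
SCORES = {"correct": 1, "incorrect": -1, "abstain": 0}


def omniscience_style_index(outcomes):
    """Mean per-question score, scaled to [-100, 100] (the scaling is an assumption)."""
    if not outcomes:
        raise ValueError("need at least one graded answer")
    total = sum(SCORES[outcome] for outcome in outcomes)
    return 100 * total / len(outcomes)


def accuracy(outcomes):
    """Plain accuracy in percent: share of questions answered correctly."""
    return 100 * Counter(outcomes)["correct"] / len(outcomes)


# Two hypothetical models graded on the same 10 questions.
guesser = ["correct"] * 5 + ["incorrect"] * 5
cautious = ["correct"] * 4 + ["incorrect"] * 1 + ["abstain"] * 5

print(accuracy(guesser), omniscience_style_index(guesser))    # 50.0 0.0
print(accuracy(cautious), omniscience_style_index(cautious))  # 40.0 30.0
```

On accuracy alone the guesser looks better (50% vs 40%), but once wrong answers are penalized the cautious model comes out ahead, which is the behavior the benchmark is designed to reward.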

The benchmark shows that most models answer wrong more often than right on hard questions, so only a few sit slightly above neutral on the Omniscience Index. Claude 4.1 Opus ranks highest on the Index, with GPT-5.1 and Grok 4 close behind, yet the margins around 0 are tiny because penalties for wrong answers are heavy.

Claude’s lead means it is better at calibrating uncertainty, so it refuses more when unsure and gives fewer fabricated facts. In real use, that trait reduces bad outputs in risky tasks like law, health, policy, and software changes where a wrong claim can propagate errors. The test has 6,000 questions across 42 topics and 6 domains with 89 subtopics.

💰 OpenAI’s leaked numbers show very high revenue flowing through Microsoft while the cost of running models may still be even higher than what OpenAI brings in.

According to the documents, OpenAI pays Microsoft about 20% of OpenAI revenue as revenue share tied to Microsoft’s roughly $13B investment and Azure cloud support, and Microsoft in turn sends around 20% of Bing and Azure OpenAI revenue back to OpenAI because those services use OpenAI models. The reported payments of $493.8M in 2024 and $865.8M in the first 3 quarters of 2025 are Microsoft’s net revenue share from OpenAI, which means Microsoft has already subtracted the royalties it pays back before reporting those figures.

From a 20% share, those net payments imply OpenAI revenue of at least $2.5B in 2024 and about $4.3B in the first 9 months of 2025. On the cost side, Zitron estimates that OpenAI spent about $3.8B on inference in 2024 and about $8.65B on inference in the first 9 months of 2025, which is the money spent on GPUs and data centers to answer user prompts with trained models.
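The arithmetic behind those figures can be sketched in a few lines. This is a back-of-the-envelope check using only the numbers quoted above; the variable names are mine, and because the reported payments are net of what Microsoft pays back, the implied revenue is a floor rather than an exact figure.

```python
REVENUE_SHARE = 0.20  # share rate reported in the documents

# Microsoft's net revenue share from OpenAI, in $M (figures quoted above).
net_payments_musd = {"2024": 493.8, "2025 Q1-Q3": 865.8}

# Zitron's inference cost estimates, in $M (figures quoted above).
inference_cost_musd = {"2024": 3800.0, "2025 Q1-Q3": 8650.0}


def implied_revenue_musd(payment_musd, share=REVENUE_SHARE):
    """Minimum revenue consistent with a payment at the given share rate."""
    return payment_musd / share


results = {}
for period, payment in net_payments_musd.items():
    revenue = implied_revenue_musd(payment)
    gap = inference_cost_musd[period] - revenue  # positive = cost exceeds revenue
    results[period] = (round(revenue, 1), round(gap, 1))
    print(f"{period}: implied revenue >= ${revenue / 1000:.2f}B, "
          f"inference estimate exceeds it by ${gap / 1000:.2f}B")
```

Under these assumptions the estimated inference bill exceeds the implied revenue floor by roughly $1.3B in 2024 and roughly $4.3B in the first 9 months of 2025.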

Training huge models is mostly covered by non-cash credits from Microsoft, so that bill does not hit OpenAI’s cash flow as hard, but inference is largely paid in cash because every API call and every chat session uses real compute that must be paid for in money, not credits. Earlier reporting has put OpenAI’s total compute spend around $5.6B for 2024 and its broader cost of revenue at about $2.5B for the first half of 2025, which all lines up with an operation where the biggest single expense is running inference for users.

When the estimated inference bill is placed next to the inferred revenue, it becomes very plausible that OpenAI is still losing money on day-to-day usage of its models even while headline revenue grows fast. OpenAI still leans heavily on Microsoft Azure but has also added CoreWeave, Oracle, AWS and Google Cloud, which looks like a way to secure more hardware and push cloud providers to compete on price and special deals.

🏗️ AI data center spending is exploding, but the hard limits of power grids and the need for real customer revenue may slow or shrink what actually gets built.

The first charts show that Amazon, Microsoft, Alphabet, and Meta are pushing capital spending on this stack toward about $100B per quarter and that for some of them these investments are rising to roughly 30% to 35% of yearly sales, which is a very high share for mature companies. A growing chunk of this spending comes from debt and outside investors, because pure AI players like OpenAI and Anthropic still lose money and even a giant like Meta is signing complex private-equity-funded structures, with one Goldman Sachs report guessing OpenAI could spend about $75B in 2026.

The US data center build-out chart splits capacity into built, underway, planned, and stalled and shows that by 2024 to 2025 the total planned capacity jumps to about 80GW while the already built slice is small and a noticeable block has already moved into the stalled bucket. On top of hardware lead times, there are slow parts like getting permits approved and negotiating connections to natural gas pipelines and high-voltage lines, which means that even if buyers are ready to spend, extra capacity still shows up on a multi-year delay.

At the same time there is a kind of land rush, since many developers are buying or optioning sites near substations in the hope of flipping them into AI campuses. Raymond James analysts project that revenue from AI cloud services across providers like Microsoft, Oracle, Amazon, Alphabet, CoreWeave, and Nebius could grow to nearly 9x current levels by 2030, which would mean hundreds of billions of dollars a year flowing through AI APIs and managed services.

JPMorgan analysts build a financial model that assumes global AI infrastructure investment reaches about $5T by 2030, then ask how much extra yearly revenue that pool of hardware must generate to give investors a reasonable return. Their answer is that the AI stack would need to produce around $650B of additional revenue each year forever to hit a 10% annual return, which is more than 150% of Apple’s current yearly sales and far above OpenAI’s present revenue of about $20B.
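For a sense of scale, the quoted figures can be put side by side. This sketch does not reproduce JPMorgan’s model, whose internals are not given here; it only computes two simple ratios from the numbers above, and the variable names are mine.

```python
# Figures quoted above, in US dollars.
investment_usd = 5e12          # ~$5T of AI infrastructure investment by 2030
required_revenue_usd = 650e9   # ~$650B of additional revenue per year
openai_revenue_usd = 20e9      # OpenAI's present revenue of about $20B

# Required yearly revenue as a fraction of the invested capital.
revenue_to_investment = required_revenue_usd / investment_usd

# How many times OpenAI's current revenue the requirement represents.
multiple_of_openai = required_revenue_usd / openai_revenue_usd

print(f"Revenue needed per dollar invested: {revenue_to_investment:.0%} per year")
print(f"Equivalent to {multiple_of_openai:.1f}x OpenAI's current revenue")
```

The required revenue works out to about 13% of the invested capital each year and 32.5 times OpenAI’s current revenue. Revenue is not the same thing as investor return, so the 13% figure is not directly comparable with the 10% return target.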

This image shows how much electricity and physical space the world’s data centers use today, and how much more they are expected to use by 2027.

Power capacity goes from 70.8GW today to about 93.3GW at the end of 2026 and 109.2GW at the end of 2027, while floor space climbs from 455.0M square feet to 570.5M then 645.6M square feet. The AI and cloud build-out is pushing data center power and land use to the scale of whole countries.
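The growth rates implied by those projections are easy to work out. A small sketch using only the figures above, with labels and function names chosen by me:

```python
# Projected global data center footprint (figures quoted above).
power_gw = {"today": 70.8, "end 2026": 93.3, "end 2027": 109.2}
floor_msqft = {"today": 455.0, "end 2026": 570.5, "end 2027": 645.6}


def pct_growth(series, base="today"):
    """Percent growth of each point relative to the base entry, rounded to 0.1."""
    base_value = series[base]
    return {
        label: round(100 * (value / base_value - 1), 1)
        for label, value in series.items()
        if label != base
    }


power_growth = pct_growth(power_gw)
floor_growth = pct_growth(floor_msqft)

print("Power:", power_growth)        # {'end 2026': 31.8, 'end 2027': 54.2}
print("Floor space:", floor_growth)  # {'end 2026': 25.4, 'end 2027': 41.9}
```

Power is projected to grow faster than floor space (about 54% versus 42% by the end of 2027), which implies rising power density per square foot.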

🛠️ Jensen Huang explains the difference between AI and traditional Software and why there’s no bubble.

Traditional software was written once and then shipped. It didn’t need constant high-power processing once finished. Users simply ran the completed program as a tool. AI, by contrast, generates its output in real time. It must process context, reason, and produce intelligence at the moment of use, not in advance.

This requires ongoing computation for every request. Because of that, AI systems depend on continuous GPU power to “manufacture” responses like a factory producing tokens. So instead of static software tools, AI is an active computational process that needs large-scale, always-on infrastructure to create intelligence dynamically.

My further rundown.

The current situation is not one where GPUs and AI services sit idle because inference is too expensive. Even at today’s high cost per token, there is more paid demand than capacity.

Microsoft said on 2025‑10‑29 that Azure AI demand “again exceeded supply” and that it expects to be capacity constrained through at least its fiscal year end. As another supporting point, Microsoft also said that commercial remaining performance obligations rose to “nearly $400B” with a roughly 2‑year weighted duration.

That means customers have already signed contracts that commit them to pay Microsoft that amount over roughly the next 2 years. Those are not hype clicks or free trials. This is contracted revenue, which usually includes AI infrastructure and AI services.

That’s a wrap for today, see you all tomorrow.