⚙️ Google launches Gemini subscriptions to help corporate workers build AI agents

Google’s pushing agent workflows at scale, OpenAI tackles bias in LLMs, GPT-5 Pro tops ARC-AGI, plus 1,001 real GenAI use cases and an open-source AgentKit alt.

Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡ In today’s edition (10-Oct-2025):

⚙️ Google launches Gemini subscriptions to help corporate workers build AI agents

💼 Google Cloud published 1,001 real enterprise gen-AI use cases, a signal that AI is in production at scale across multiple industries.

👨‍🔧 GitHub Resource: Shannon, an open-source, self-hosted, vendor-neutral alternative to OpenAI’s AgentKit

📡 GPT-5 Pro now holds the highest verified frontier LLM score on ARC-AGI’s Semi-Private benchmark 👏

⚖️ OpenAI introduces a comprehensive framework for measuring political bias in large language models using realistic conversational scenarios.

⚙️ Google launches Gemini subscriptions to help corporate workers build AI agents

Google just launched Gemini Enterprise, its full-stack AI platform that brings all its AI tools into one unified workspace so employees can use AI without switching between multiple apps or tabs.

It combines tools like Gemini CLI, Google Vids, and Google Workspace apps under a single interface.

Gemini Enterprise is Google’s “front door for AI at work.” It merges six major components: Gemini models as the system’s intelligence, a no-code workbench for orchestration, pre-built Google agents, secure data connectors, a central governance layer, and an open partner ecosystem of over 100,000 collaborators.

It connects directly to enterprise data sources such as Google Drive and Docs, and even Microsoft 365 and Salesforce. The idea is to give every employee access to AI agents and automation directly within their work environment. Users can query data, generate documents, build reports, or trigger actions without leaving the interface.

Gemini Enterprise works as a no-code workbench, meaning anyone can orchestrate AI agents or build workflows through plain-language prompts. It includes pre-built agents for deep research, insights, and task automation, while also allowing organizations to bring their own or third-party agents.

For governance, admins can visually manage which agents and workflows are active and how they access company data, giving enterprises tighter control over how AI is used.

Google reports that 65% of Google Cloud customers already use its AI tools, and nearly 50% of all new code within Google AI is generated by Gemini models. The Gemini Enterprise Standard and Plus editions start at $30 per seat per month, and a new, cheaper tier, Gemini Business, costs $21 per seat per month on an annual plan.

Google is also introducing pre-built and specialized agents, including a new Data Science Agent (in preview) that automates data preparation, exploration, and model training. This agent generates multi-step plans automatically, removing the need for manual tuning. Companies like Morrisons and Vodafone are already using it to speed up data workflows.

Gemini Enterprise integrates with protocols like Agent2Agent (A2A) for inter-agent communication, Model Context Protocol (MCP) for sharing context, and the Agent Payments Protocol (AP2) for secure financial transactions between agents. These standards lay the groundwork for what Google calls the “agent economy.”
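For a feel of what MCP traffic looks like on the wire, here is a minimal Python sketch. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool `name` and its `arguments`. The tool name `search_drive` and the canned response are hypothetical, and the sketch says nothing about how Gemini Enterprise implements MCP internally.

```python
import json
from itertools import count

# JSON-RPC pairs each response with its request via "id".
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request for MCP's 'tools/call' method."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def extract_text(response_json: str) -> str:
    """Return the text blocks of a 'tools/call' result, raising on errors."""
    response = json.loads(response_json)
    if "error" in response:  # protocol-level JSON-RPC error
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    if result.get("isError"):  # the tool itself reported a failure
        raise RuntimeError("tool execution failed")
    blocks = result.get("content", [])
    return "\n".join(b["text"] for b in blocks if b.get("type") == "text")


if __name__ == "__main__":
    # "search_drive" is a hypothetical tool exposed by some MCP server.
    print(make_tool_call("search_drive", {"query": "Q3 revenue report"}))
    canned = json.dumps({
        "jsonrpc": "2.0",
        "id": 1,
        "result": {"content": [{"type": "text", "text": "Found 3 files"}], "isError": False},
    })
    print(extract_text(canned))  # Found 3 files
```

Keeping protocol errors (`error`) separate from tool failures (`isError` inside `result`) lets an agent tell a broken connection apart from a tool that ran and failed.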

The platform also comes with Gemini CLI extensions, letting developers automate tasks, generate code, and connect to services like GitLab, MongoDB, Shopify, and Stripe directly from their terminal. Over 1 million developers have already adopted Gemini CLI.

4 ways Gemini Enterprise makes work easier

AI specialist for every team

Anyone can build or use pre-built AI agents through a no-code workbench, automating routine work like campaign creation or data analysis.

Unified business data

It connects data from Google Workspace, Microsoft 365, Salesforce, and SAP, giving agents full context to deliver smarter, more accurate results.

End-to-end automation

It links multiple agents to handle entire workflows, not just single tasks, cutting manual steps and improving efficiency.

AI in familiar tools

It works directly inside Workspace and Microsoft 365, adding multimodal features like Google Vids for instant video creation and Meet’s real-time translation for seamless multilingual collaboration.

💼 Google Cloud published 1,001 real enterprise gen-AI use cases, a signal that AI is in production at scale across multiple industries.

The list grew from 101 use cases in April 2024 to 1,001 in October 2025, roughly a 10x jump.

Some examples

Customer agents handle real volume with measured outcomes: Commerzbank’s bot manages 2M chats with a 70% resolution rate, Best Buy summarizes reviews for faster decisions, and Mercedes-Benz ships a Gemini-powered in-car assistant.

Employee agents crush repetitive work: Toyota’s factory platform frees up 10,000 hours per year, Manipal Hospitals cuts nurse handoff time from 90 minutes to about 20, and 97% of Equifax’s trial users wanted to keep their licenses.

Data agents and digital twins turn operations into searchable context and simulations: UPS models its entire network for live visibility, BMW builds 3D twins to run thousands of layouts, and Lloyds verifies mortgage income in seconds instead of days.

Code agents raise engineering throughput with repo-aware help: CME developers report 10.5 hours saved per month, Wayfair’s pilot shows 55% faster environment setup and 48% better unit test performance, and teams are adopting company-tuned versions of Code Assist.

Security agents fold large language models into SIEM and SOAR workflows: Mitsubishi Motors centralizes detection and response, BBVA cuts triage from minutes or hours to seconds, and Palo Alto Networks lowers latency and cost while enabling near-real-time detection.

👨‍🔧 GitHub Resource: Shannon, an open-source, self-hosted, vendor-neutral alternative to OpenAI’s AgentKit

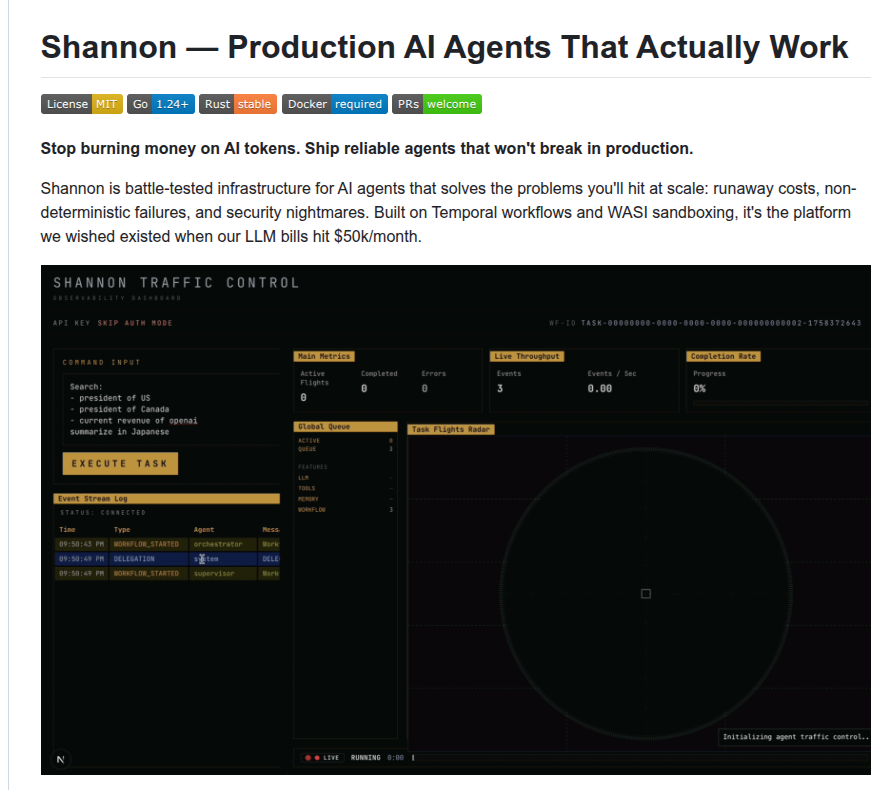

This GitHub repo, Shannon, turns agent ideas into production systems that are cheaper, traceable, and safe to run.

It enforces hard token budgets, caching, and rate limits, and supports zero-token YAML templates that skip unnecessary LLM calls, cutting waste dramatically and avoiding provider throttling.
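A rough Python sketch of how those guards can compose in front of an LLM call. This is not Shannon’s code or API: `GuardedClient`, `TokenBudget`, and the `llm` callable are hypothetical names, and the rate limiter is a generic token bucket.

```python
import time
from typing import Callable, Tuple


class BudgetExceeded(Exception):
    """Raised when a call could push a task past its hard token cap."""


class RateLimited(Exception):
    """Raised when the local rate limiter has no capacity left."""


class TokenBudget:
    """Hard per-task token cap."""

    def __init__(self, limit: int):
        self.limit = limit
        self.used = 0

    def can_spend(self, tokens: int) -> bool:
        return self.used + tokens <= self.limit

    def charge(self, tokens: int) -> None:
        self.used += tokens


class TokenBucket:
    """Token-bucket limiter: refills `rate` permits/sec, holds at most `capacity`."""

    def __init__(self, rate: float, capacity: float):
        self.rate = rate
        self.capacity = capacity
        self.permits = capacity
        self.last = time.monotonic()

    def try_acquire(self) -> bool:
        now = time.monotonic()
        self.permits = min(self.capacity, self.permits + (now - self.last) * self.rate)
        self.last = now
        if self.permits >= 1:
            self.permits -= 1
            return True
        return False


# Hypothetical provider interface: (prompt, max_tokens) -> (text, tokens_used).
LLM = Callable[[str, int], Tuple[str, int]]


class GuardedClient:
    """Cache, then budget check, then rate limit, then the real LLM call."""

    def __init__(self, llm: LLM, budget: TokenBudget, limiter: TokenBucket):
        self.llm = llm
        self.budget = budget
        self.limiter = limiter
        self.cache: dict = {}

    def complete(self, prompt: str, max_tokens: int) -> str:
        if prompt in self.cache:  # cache hit: zero tokens, no provider call
            return self.cache[prompt]
        if not self.budget.can_spend(max_tokens):  # worst case must fit the cap
            raise BudgetExceeded(f"{self.budget.used} used of {self.budget.limit}")
        if not self.limiter.try_acquire():  # back off locally, not at the provider
            raise RateLimited("no permits left; retry later")
        text, tokens_used = self.llm(prompt, max_tokens)
        self.budget.charge(tokens_used)  # record what was actually spent
        self.cache[prompt] = text
        return text
```

The cache check comes first so repeated prompts cost nothing, and the budget check runs before the provider call so a task fails fast instead of overspending.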

Many agent stacks break in real use as costs spike, runs fail, and logs stay thin.

Shannon wraps every task in a Temporal workflow, so state persists and exact replays are possible.
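Temporal’s replay idea, reduced to a toy: activity results are appended to a history, and re-running the workflow against that history returns the recorded results instead of re-executing side effects. This is a conceptual sketch in plain Python, not the Temporal SDK (which adds workers, a server-side event history, timers, and retries); `History`, `WorkflowContext`, and `research_workflow` are hypothetical.

```python
from typing import Any, Callable, List, Optional, Tuple


class History:
    """Append-only record of (activity_name, result) events, read back in order."""

    def __init__(self, events: Optional[List[Tuple[str, Any]]] = None):
        self.events = list(events or [])
        self.cursor = 0


class WorkflowContext:
    """Runs activities live, or replays their recorded results from History."""

    def __init__(self, history: History):
        self.history = history
        self.executed: List[str] = []  # activities that actually ran this time

    def activity(self, name: str, fn: Callable[..., Any], *args: Any) -> Any:
        h = self.history
        if h.cursor < len(h.events):
            # Replay: reuse the recorded result instead of re-running the side effect.
            recorded_name, result = h.events[h.cursor]
            if recorded_name != name:
                raise RuntimeError(
                    f"non-deterministic workflow at step {h.cursor}: "
                    f"expected {recorded_name!r}, got {name!r}"
                )
        else:
            # Live execution: run the activity and append its result to history.
            result = fn(*args)
            self.executed.append(name)
            h.events.append((name, result))
        h.cursor += 1
        return result


def research_workflow(ctx: WorkflowContext, topic: str) -> str:
    """Hypothetical workflow; must be deterministic given activity results."""
    plan = ctx.activity("plan", lambda t: [f"{t}: step {i}" for i in range(2)], topic)
    notes = [ctx.activity("search", str.upper, step) for step in plan]
    return ctx.activity("summarize", " | ".join, notes)
```

Running `research_workflow` once with an empty `History` executes all four activities; running it again with `History(first_run.events)` returns the same answer while `executed` stays empty. A history cut short by a crash replays the finished steps and runs only the remaining ones.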

Workflows use simple templates structured as directed acyclic graphs that split goals into ordered and parallel steps.
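To illustrate how a DAG template yields ordered and parallel steps, this sketch uses Python’s standard `graphlib.TopologicalSorter` to group steps into stages whose members can run concurrently. Shannon’s templates are YAML with their own schema; the dict and step names here are hypothetical stand-ins.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set


def parallel_stages(dag: Dict[str, Set[str]]) -> List[List[str]]:
    """Group steps into stages; steps within a stage have no mutual dependencies.

    `dag` maps each step to the set of steps it depends on.
    Raises CycleError if the template is not acyclic.
    """
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # detects cycles before anything runs
    stages: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all steps whose dependencies are done
        stages.append(ready)
        sorter.done(*ready)
    return stages


# Hypothetical "market report" goal split into steps.
report_dag = {
    "fetch_sales": set(),
    "fetch_news": set(),
    "analyze_sales": {"fetch_sales"},
    "summarize_news": {"fetch_news"},
    "write_report": {"analyze_sales", "summarize_news"},
}

if __name__ == "__main__":
    for i, stage in enumerate(parallel_stages(report_dag)):
        print(i, stage)
    # 0 ['fetch_news', 'fetch_sales']
    # 1 ['analyze_sales', 'summarize_news']
    # 2 ['write_report']
```

A real executor would dispatch each stage’s steps concurrently and call `done()` as each one finishes rather than per stage, which lets faster branches start their successors earlier.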

Python code runs inside a WebAssembly System Interface (WASI) sandbox with no network access and a read-only filesystem, which brings security, determinism, and reproducibility.

Open Policy Agent rules gate models, tools, and data per team, with optional approvals.
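OPA policies are written in Rego and evaluated by the OPA engine, so the following is only a Python illustration of the default-deny decision such rules encode: per-team allowlists for models and tools, plus tools that need human approval. Team, model, and tool names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class TeamPolicy:
    allowed_models: FrozenSet[str]
    allowed_tools: FrozenSet[str]
    needs_approval: FrozenSet[str] = frozenset()  # tools gated on human sign-off


def decide(policies: Dict[str, TeamPolicy], team: str, model: str,
           tool: str, approved: bool = False) -> str:
    """Return 'allow', 'deny', or 'pending_approval' for one agent action."""
    policy = policies.get(team)
    if policy is None:  # default deny: unknown teams get nothing
        return "deny"
    if model not in policy.allowed_models or tool not in policy.allowed_tools:
        return "deny"
    if tool in policy.needs_approval and not approved:
        return "pending_approval"
    return "allow"


# Hypothetical policies; real OPA rules would live in Rego files.
POLICIES = {
    "finance": TeamPolicy(
        allowed_models=frozenset({"model-small"}),
        allowed_tools=frozenset({"sql_read", "payments"}),
        needs_approval=frozenset({"payments"}),
    ),
    "research": TeamPolicy(
        allowed_models=frozenset({"model-small", "model-large"}),
        allowed_tools=frozenset({"web_search"}),
    ),
}
```

Default deny is the key design choice: anything not explicitly allowed (an unknown team, an unlisted model or tool) is refused rather than silently permitted.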

A live dashboard, metrics, and traces show behavior and cost in real time for each session.

Result: faster shipping, lower spend, safer execution, and reproducible debugging for production agents.

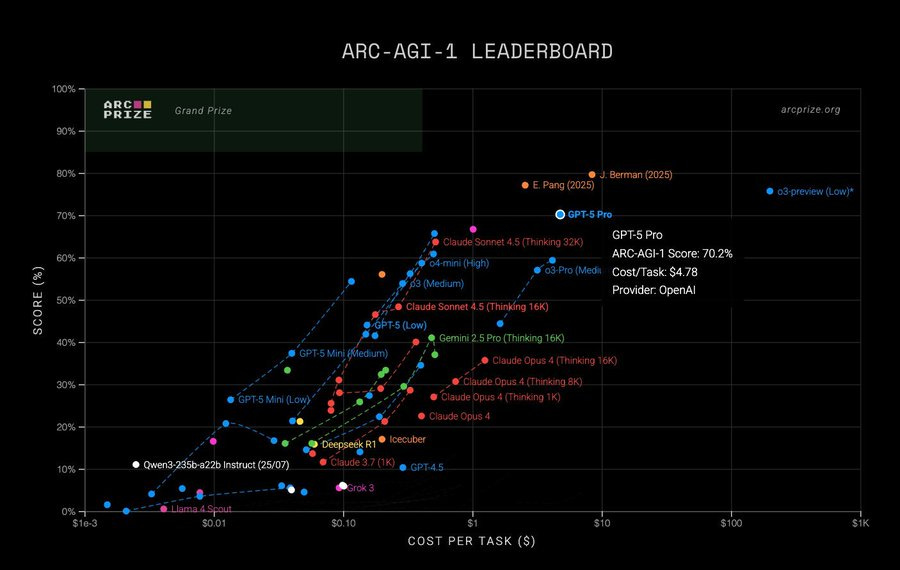

📡 GPT-5 Pro now holds the highest verified frontier LLM score on ARC-AGI’s Semi-Private benchmark 👏

GPT-5 Pro has achieved the highest verified frontier LLM score on the ARC-AGI Semi-Private benchmark. That matters because ARC-AGI tests a model’s ability to generalize and reason abstractly about novel puzzles rather than recall what it has seen, which is widely considered a key indicator of intelligence.

It still lags the original o3-preview model that OpenAI announced in December 2024, which was almost 50x more expensive than GPT-5 Pro. Makes you wonder what models they have internally now.

Note, though, that o3-preview is a different model: it was never released and was only used to test against ARC-AGI-1. It was tested at low and high compute settings; only low appears on the leaderboard, because high exceeded the $10k compute cap. o3-preview (high) scored 87.5% while using 172x the compute of low. Cost estimates vary, but figures anywhere between $1k and $10k per task have been thrown around.

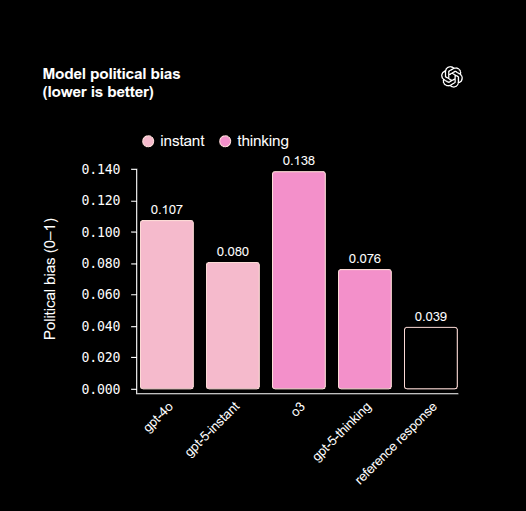

⚖️ OpenAI introduces a comprehensive framework for measuring political bias in large language models using realistic conversational scenarios.

The evaluation mirrors real use with ~500 prompts across 100 topics, each written in 5 political slants to test robustness. Bias is measured along 5 axes: user invalidation, user escalation, personal political expression, asymmetric coverage, and political refusals.

An LLM grader scores each response on a 0-to-1 scale where lower is better. Older models peak at 0.138 (o3) and 0.107 (GPT-4o), and GPT-5 scores lower. Models stay near objective on neutral or slightly slanted prompts, but charged prompts raise scores, and in these tests charged liberal wording pulled harder than charged conservative wording. When bias does appear, it is usually the model voicing its own political opinions, giving one-sided coverage, or echoing the user’s emotional tone.
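As a rough illustration of the scoring setup, this Python sketch collapses one response’s per-axis grader scores into a single number and then averages those per prompt slant (lower is better). The unweighted mean, the identifier spellings, and slant labels such as `"neutral"` or `"charged_liberal"` are assumptions; OpenAI’s actual aggregation may differ.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

# The five bias axes described above (identifier spellings are assumptions).
AXES = (
    "user_invalidation",
    "user_escalation",
    "personal_political_expression",
    "asymmetric_coverage",
    "political_refusals",
)


def response_score(axis_scores: Dict[str, float]) -> float:
    """Collapse one response's per-axis grades (0..1, lower is better).

    Assumption: an unweighted mean across axes.
    """
    missing = set(AXES) - set(axis_scores)
    if missing:
        raise ValueError(f"missing axes: {sorted(missing)}")
    for axis in AXES:
        if not 0.0 <= axis_scores[axis] <= 1.0:
            raise ValueError(f"{axis} score out of range")
    return mean(axis_scores[axis] for axis in AXES)


def mean_by_slant(graded: Iterable[Tuple[str, Dict[str, float]]]) -> Dict[str, float]:
    """Average response scores per prompt slant (slant labels are hypothetical)."""
    buckets = defaultdict(list)
    for slant, axis_scores in graded:
        buckets[slant].append(response_score(axis_scores))
    return {slant: mean(scores) for slant, scores in buckets.items()}
```

Comparing the per-slant means is what surfaces the pattern described above: if charged prompts push a slant’s mean up while neutral prompts stay near zero, bias is being triggered by framing rather than topic.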

Under what conditions does bias emerge?

Bias emerges mainly when prompts are emotionally charged or highly partisan. In neutral or slightly slanted cases, models stay objective and behave as expected. When users frame prompts with strong emotional or ideological language, moderate bias appears. Across tests, strongly charged liberal prompts pull the model away from objectivity more than strongly charged conservative ones do.

That’s a wrap for today, see you all tomorrow.