Google Releases Gemma 3n, Open-Source LLM, Runs on 2GB RAM

Gemma 3n goes open-source on 2 GB RAM, Meta secures a fragile copyright win, Ant launches AQ healthcare LLM, and the AI talent arms race heats up.

Read time: 7 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (27-Jun-2025):

🥉 Google Releases Gemma 3n Open-Source, Can Run Locally on 2GB RAM

🚨 Meta Wins Blockbuster AI Copyright Case—but There’s a Catch

📡 Ant Group has launched the AI healthcare app, AQ, powered by a specialised healthcare language model

🥊 Fight for top AI talent intensifies, Meta just reportedly snagged a win

🥉 Google Releases Gemma 3n Open-Source, Can Run Locally on 2GB RAM

Google launched the full version of Gemma 3n, its new family of open AI models (2B and 4B options) designed to bring powerful multimodal capabilities to mobile and consumer edge devices.

Natively multimodal: accepts text, image, audio, and video input and generates text output.

Matryoshka-style architecture allowing a dial of capability up and down at test time.

Reasoning, also with a dial. Can use tools.

⚙️ The Details

→ Handles image, audio, video, and text input. E2B and E4B run in roughly 2 GB and 3 GB of VRAM respectively, a footprint comparable to conventional 2B and 4B models. Built-in vision capabilities analyze video at 60 fps on Pixel phones, enabling real-time object recognition and scene understanding.

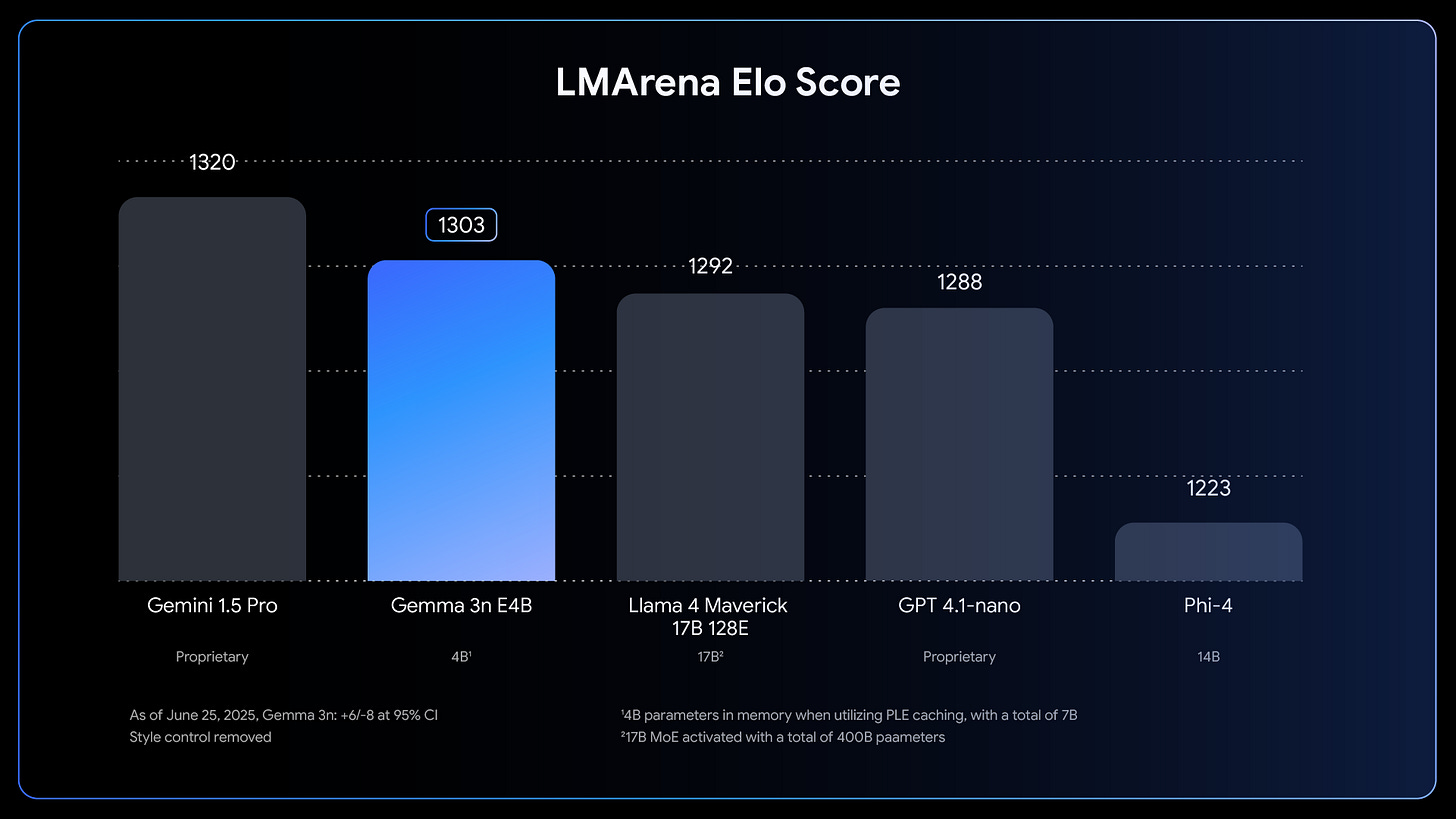

→ Gemma’s larger E4B version scored 1303 on LMArena, making it the first sub-10B model past 1300; it edges out GPT-4.1-nano while running offline.

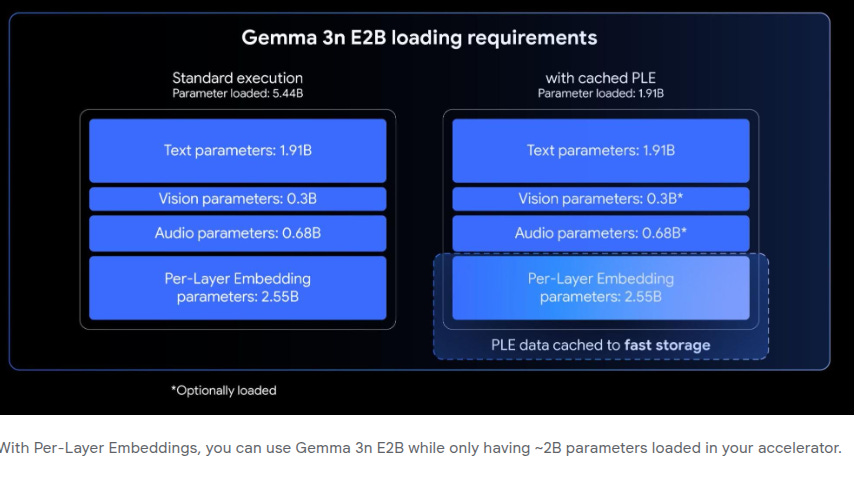

Per-Layer Embeddings (PLE): Unlocking more memory efficiency

PLE splits each layer into two chunks:

Core weights stay in VRAM and drive the main compute.

Layer-specific embeddings sit on the CPU and stream in only when needed.

Think of each transformer layer as two parts. The core weights run the math that turns one set of tokens into the next; those have to sit in fast VRAM so the GPU can crunch them quickly. Wrapped around each layer is a big table of embeddings that help the model keep track of position and modality. Per-Layer Embeddings (PLE) lets Gemma 3n move those tables out of VRAM and into ordinary CPU RAM. Because CPU RAM is larger but slower, the model streams these tables in only when the layer fires, then drops them back, so they no longer block precious GPU space.

For the small E2B variant, this trick leaves about 2 billion core parameters on the GPU even though the full checkpoint holds 5 billion. The larger E4B keeps roughly 4 billion on the GPU out of 8 billion total. End result: devices with just 2–3 GB VRAM can host a model that used to need double that, with almost no hit to throughput or accuracy, because the heavy vector math still happens on the accelerator while the CPU only shuffles lookup tables.
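To make the arithmetic concrete, here is a minimal Python sketch that works only from the numbers quoted above (5B/2B and 8B/4B parameters, 2 GB and 3 GB of VRAM). The function name `ple_summary` is made up for illustration, and the "implied bytes per parameter" figure rests on a simplifying assumption: that all of the quoted memory goes to core weights, ignoring activations and the KV cache.

```python
# Parameter counts and memory figures are the ones quoted in the text above.
MODELS = {
    # name: (total parameters, core parameters on the accelerator, quoted memory in GB)
    "E2B": (5e9, 2e9, 2.0),
    "E4B": (8e9, 4e9, 3.0),
}


def ple_summary(total_params, core_params, quoted_gb):
    """Return (offloaded parameters, fraction kept on the accelerator,
    implied bytes per core parameter)."""
    offloaded = total_params - core_params
    resident_fraction = core_params / total_params
    # Assumption: all quoted memory is spent on core weights (decimal GB),
    # ignoring activations and the KV cache.
    implied_bytes_per_param = quoted_gb * 1e9 / core_params
    return offloaded, resident_fraction, implied_bytes_per_param


for name, (total, core, gb) in MODELS.items():
    offloaded, fraction, bytes_per_param = ple_summary(total, core, gb)
    print(
        f"{name}: {offloaded / 1e9:.0f}B parameters offloaded, "
        f"{fraction:.0%} stay on the accelerator, "
        f"~{bytes_per_param:.2f} bytes per core parameter"
    )
```

Under that assumption, E2B keeps 40% of its parameters on the accelerator and E4B keeps 50%. The implied budget of about one byte or less per core parameter suggests the quoted memory figures assume quantized weights, though that is an inference from the numbers rather than something stated here.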

Why you care:

You can run Gemma 3n on a laptop or phone that has just 2–3 GB VRAM.

Smaller VRAM load means lower power draw and longer battery life.

Cloud bills drop because you rent cheaper GPUs.

Same accuracy, no extra coding from your side.

Inference-time speed-ups of Gemma 3n with KV Cache sharing:

Google shows one small tweak inside Gemma 3n (KV cache sharing) that halves the work the model does when it first reads a long prompt.

The KV cache holds the attention keys and values each layer needs. Normally every layer builds its own cache, so the first pass over a long prompt repeats similar work 24+ times.

Gemma 3n copies the cache from one mid-layer straight into the layers above. That single reuse step cuts the duplicate work in half, so the first token appears about 2× sooner while output quality stays the same.
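The reuse idea can be sketched as a toy in Python. This is not Gemma 3n's actual implementation: `project_kv` is a stand-in for a real key/value projection, the 24-layer depth simply echoes the "24+" above, and the choice of layer 11 as the sharing point is a hypothetical picked so that half the layers reuse the cache. The sketch only counts cache-building work, not the full forward pass.

```python
def project_kv(token, layer):
    """Stand-in for a layer's key/value projection of one token."""
    return (f"k{layer}:{token}", f"v{layer}:{token}")


def prefill(prompt_tokens, num_layers, share_from=None):
    """Build a per-layer KV cache for a prompt and count projections computed.

    If share_from is set, every layer above it reuses that layer's cache
    instead of computing its own.
    """
    cache = {}
    projections = 0
    for layer in range(num_layers):
        if share_from is not None and layer > share_from:
            cache[layer] = cache[share_from]  # reuse: no new projection work
        else:
            cache[layer] = [project_kv(tok, layer) for tok in prompt_tokens]
            projections += len(prompt_tokens)
    return cache, projections


prompt = ["tok"] * 1000          # a long prompt
NUM_LAYERS = 24                  # hypothetical depth, echoing the "24+" above
MID_LAYER = NUM_LAYERS // 2 - 1  # hypothetical sharing point (layer 11)

_, baseline = prefill(prompt, NUM_LAYERS)
shared_cache, shared = prefill(prompt, NUM_LAYERS, share_from=MID_LAYER)
print(baseline, shared, baseline / shared)
```

In this toy, the upper layers point at the very same cache object as the mid layer, so the number of projections computed during prefill drops by half, which matches the roughly 2× faster first token described above.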

Availability of Gemma 3n:

→ Download the models: Find the model weights on Hugging Face and Kaggle. Experiment directly: Use Google AI Studio to try Gemma 3n in just a couple of clicks. Gemma models can also be deployed directly to Cloud Run from AI Studio.

→ Dive into its official documentation to integrate Gemma into your projects or start with our inference and fine-tuning guides.

To run it on your own laptop, you can use Ollama.

Here is an example using Ollama together with the llm command-line tool and its llm-ollama plugin:

ollama pull gemma3n

llm install llm-ollama

llm -m gemma3n:latest "Generate image of a girl flying through cloud"

The Ollama version doesn't appear to support image or audio input yet.

You can use the mlx-vlm version for that.
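If you would rather call the local model from a script, here is a minimal Python sketch that talks to Ollama's local HTTP API, which listens on port 11434 by default. The helper names `build_request` and `generate` are made up for illustration, and the sketch assumes the `gemma3n` model has already been pulled and the Ollama server is running. It sends text only, in line with the note above about image and audio input.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="gemma3n"):
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="gemma3n", timeout=120):
    """Send the prompt and return the model's text reply.

    Requires a running Ollama server with the model already pulled.
    """
    request = build_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With the server running, something like `print(generate("Summarize KV cache sharing in one sentence."))` should print the reply. Setting `"stream": False` asks for the whole answer in a single JSON object, which keeps the parsing simple.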

→ Use your favorite development tools: Leverage your preferred tools and frameworks, including Hugging Face Transformers and TRL, NVIDIA NeMo Framework, Unsloth, and LMStudio. Gemma 3n offers multiple deployment options, including Google GenAI API, Vertex AI, SGLang, vLLM, and NVIDIA API Catalog.

Overall, these on-device LLMs may lag cloud scale and top-tier reasoning, yet they crush it on instant responses, direct private data access, offline continuity, and “own-your-weights” control.

🚨 Meta Wins Blockbuster AI Copyright Case—but There’s a Catch

After Anthropic, the next SUPER IMPORTANT WIN for AI in the copyright battle. This time it’s Meta.

A federal judge found that Meta’s training on copyrighted books falls under fair use. The suit, brought by 13 authors including Sarah Silverman, claimed illegal copying. Judge Vince Chhabria said the plaintiffs failed to argue market harm and presented no evidence of it. He called Meta’s use transformative and noted that the models did not reproduce the books verbatim.

He warned that the ruling is not blanket approval and said such cases rely on detailed records of market effects. So these decisions aren’t the sweeping wins some companies hoped for: the judges in both the Anthropic and Meta cases noted that their rulings were limited in scope.

Judge Chhabria made clear that this decision does not mean that all AI model training on copyrighted works is legal, but rather that the plaintiffs in this case “made the wrong arguments” and failed to develop sufficient evidence in support of the right ones.

So why this judgement?

Highly “transformative” purpose. The books were not reused as reading material; they were fodder to detect statistical patterns so an LLM could perform a broad range of language tasks.

Minuscule verbatim output. Even with “adversarial” prompts, Llama could spit out at most 50 consecutive tokens from any plaintiff’s book, far too little to compete with or substitute for the originals.

No evidence of market harm. The authors showed neither lost sales nor a concrete market for licensing books as training data that Meta’s conduct displaced.

What’s next?

Possible appeal to the Ninth Circuit. The authors can challenge both the fair-use ruling and the partial denial of their DMCA claim.

Discovery on distribution. If evidence shows Meta re-shared files, the “shadow-library” theory could still create liability (distribution enjoys no special fair-use thumb-on-the-scale).

More will be revealed as the Meta case advances next month, but Chhabria noted that one potential outcome, win or lose for authors, could be that publishers become incentivized to make it easier to license authors' works for AI training.

The judge indicated that Meta won only because the authors raised the "wrong arguments". That suggests Meta may be more inclined to renew licensing talks if a stronger copyright challenge is raised in the future, despite winning this landmark battle against a handful of authors this week.

📡 Ant Group has launched the AI healthcare app, AQ, powered by a specialised healthcare language model

Ant Group Unveils AI Healthcare App AQ. Turns your phone into a private clinic, powered by a specialized healthcare language model.

100+ AI tools, such as doctor recommendations and medical report analysis

Connects users to services from 5,000+ hospitals and 1 million doctors across China

🤖 A specialised healthcare language model sits at the core of AQ

"The medical large model behind AQ has learned over 10 trillion tokens of professional medical data, possessing 'medical thinking' reasoning and multimodal interaction capabilities."

Ant Group trained a healthcare LLM on anonymised patient records, guidelines, and research papers. The model achieved leading scores on HealthBench and MedBench, which measure clinical reasoning accuracy.

It handles text, images, and numeric lab values together, so it can read a chest scan and blood panel in one pass.

🥊 Fight for top AI talent intensifies, Meta just reportedly snagged a win

→ Meta CEO Mark Zuckerberg personally wooed Lucas Beyer, Alexander Kolesnikov, Xiaohua Zhai, and Trapit Bansal away from OpenAI. Beyer, Kolesnikov, and Zhai built OpenAI's Zurich hub, and Bansal helped craft the o1 reasoning model. Their jump gives Meta fresh research muscle.

→ Rumors put Meta's sign-on offers at $10M+. Sam Altman acknowledged that Meta dangled large cash offers. Sums this big show Meta will pay whatever it takes to win talent.

→ Meta earlier injected $15B into Scale AI and hired its CEO, Alexandr Wang, to run the new unit. The group targets superintelligence that beats humans at reasoning, coding, and agent work. Meta's next model will test whether the spending pays off.

→ OpenAI counters with higher pay and scope, but some still left. Altman says his best people stay, yet the loss stings. The talent war now shapes the timeline for next-gen LLM breakthroughs.

That’s a wrap for today, see you all tomorrow.