🎞️ Google Releases Veo 3.1, its majorly upgraded version.

Veo 3.1 hits Gemini API, Sora 2 Pro ranks 4th in Text to Video, Anthropic launches Haiku, PyTorch 2.9 drops, and AI outpaces radiologists but demand grows.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (16-Oct-2025):

🎞️ Google Veo 3.1 and Veo 3.1 Fast land in the Gemini API as paid preview.

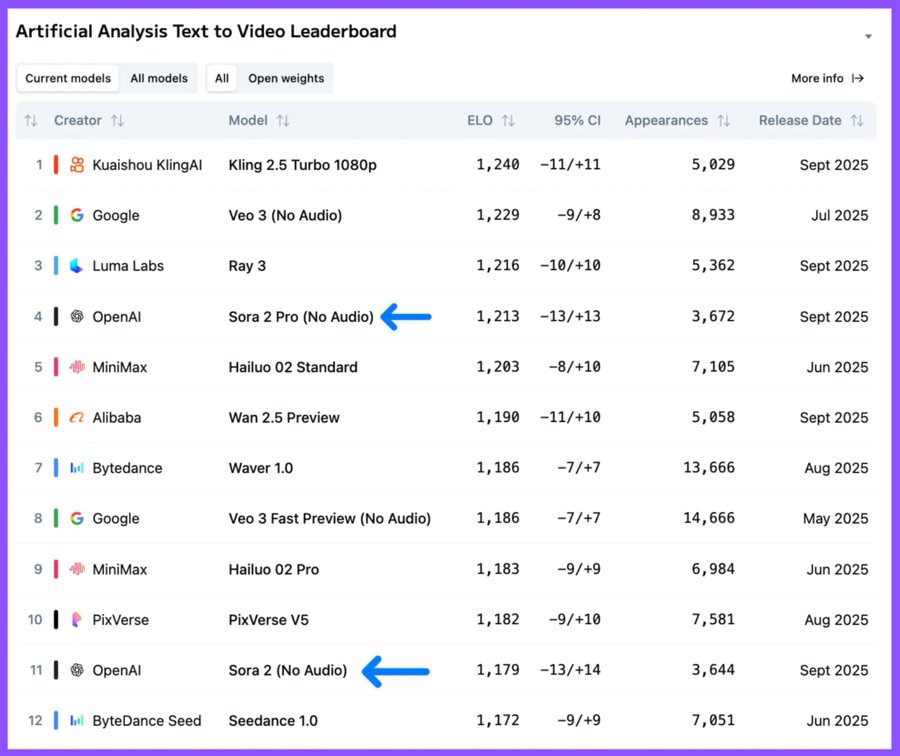

🎬 Sora 2 Pro lands at no-4 in text to video, behind Kling 2.5 Turbo and Veo 3 on Artificial Analysis benchmark.

📡 AI Dominates Radiology, Yet Demand for Radiologists Soars

🛠️ Anthropic launches new version of scaled-down ‘Haiku’ model

🧠 PyTorch 2.9 is now released, introducing key updates to performance, portability, and the developer experience.

🎞️ Google Releases Veo 3.1, its majorly upgraded version.

Google Veo 3.1 is a text-to-video AI model that turns short written prompts and references into short video clips, with native audio support and more control over storytelling and style.

Yesterday, Google released Veo 3.1, a major update to Veo 3, along with a faster variant, Veo 3.1 Fast, in paid preview for developers and creators. Both landed in the Gemini API.

You get richer native audio, tighter prompt adherence, and stronger cinematic control. The model now “thinks” more like a real director and editor.

It also adds reference image guidance, scene extension for longer clips, and first and last frame transitions, while keeping the same price as Veo 3. The model now produces native audio that covers natural conversation and synchronized effects, so the soundtrack lines up with the shot without extra tooling.

Veo 3.1 has been trained to better understand how human filmmakers design shots and scenes, like how camera motion, lighting, and pacing usually work in real movies. So when you describe a scene in a text prompt, the model doesn’t just produce random movements or cuts. It follows film-like rhythm — for example, keeping consistent timing between shots, smoother transitions, and logical framing — which makes the result feel more natural and cinematic.

Image to video gets better adherence to the prompt and maintains character consistency across multiple scenes, which reduces style drift when stitching shots. Ingredients to video lets developers pass up to 3 reference images of a character, object, or scene so the model keeps the same identity and look across shots.

Scene extension grows a sequence by conditioning each new clip on the final 1 second of the prior one, which preserves motion and background audio for runs that can last 1 minute or more. First and last frame generates a smooth transition between two images and includes matching audio so cuts are cleaner.
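To get a feel for how many extension passes a minute-long sequence takes, here is a rough back-of-the-envelope sketch. The 8-second base clip and the 7 seconds of new footage per extension are assumptions for illustration only, not figures from this post, so check the Veo documentation for the actual limits.

```python
import math

# Assumed values for illustration only; not stated in this newsletter.
BASE_CLIP_S = 8.0            # length of the first generated clip
ADDED_PER_EXTENSION_S = 7.0  # new footage contributed by each extension pass


def extensions_needed(target_s: float,
                      base_clip_s: float = BASE_CLIP_S,
                      added_per_extension_s: float = ADDED_PER_EXTENSION_S) -> int:
    """Return how many extension passes are needed to reach at least target_s seconds."""
    if target_s <= base_clip_s:
        return 0
    return math.ceil((target_s - base_clip_s) / added_per_extension_s)


def total_length_s(n_extensions: int,
                   base_clip_s: float = BASE_CLIP_S,
                   added_per_extension_s: float = ADDED_PER_EXTENSION_S) -> float:
    """Return the total sequence length after n_extensions passes."""
    return base_clip_s + n_extensions * added_per_extension_s


passes = extensions_needed(60)
print(passes, total_length_s(passes))
```

Under these assumed numbers, a 60-second sequence takes 8 extension passes and ends up 64 seconds long. Each pass is a separate generation call, so longer runs cost proportionally more.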

Access is through Google AI Studio and Vertex AI, and the model is also in the Gemini app and Flow. Veo Studio and cookbook examples are available for quick starts. Early users like Promise Studios and Latitude are already applying it to storyboards and interactive narrative engines.

Scene extension in Veo 3.1

It creates extended clips, lasting a minute or more, that pick up right where your original shot left off and continue the same action.

With first and last frame, you give Veo 3.1 two still images: one as the starting frame (A) and one as the ending frame (B). Veo then creates a smooth video that moves naturally from the first image to the last, filling in all motion and transitions automatically.

🎬 Sora 2 Pro lands at no-4 in text to video, behind Kling 2.5 Turbo and Veo 3 on Artificial Analysis benchmark.

It beats the original Sora, adds native audio and Cameo, but costs $30/minute.

Sora 2 Pro and Sora 2 mark a clear jump over the original Sora at #19, and Sora 2 is only the second model after Veo 3 with native audio. For fair scoring the arena disabled audio, so Sora 2 was judged on video only even though it can generate sound in sync. A strict safety filter in Sora 2 blocked many image to video prompts with people, so that mode could not be ranked yet.

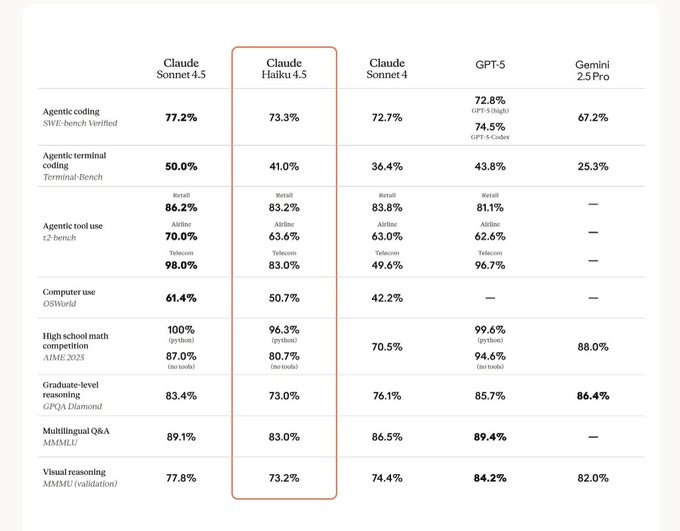

🛠️ Anthropic launches new version of scaled-down ‘Haiku’ model

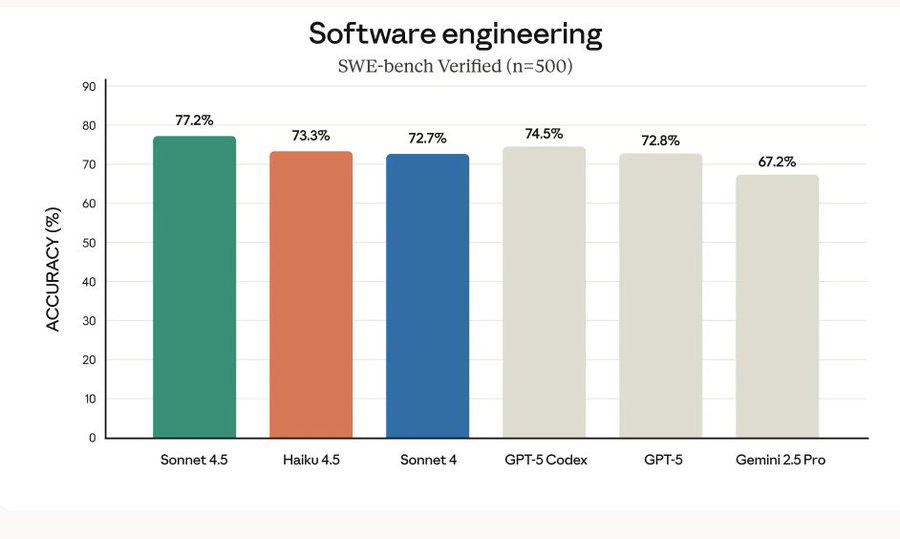

Near-Sonnet-4 coding quality at 1/3 the cost and 2x+ the speed.

Context window: 200K tokens, the same as Claude Sonnet 4.5.

Availability: Claude Haiku 4.5 is available via Anthropic’s API, Google Cloud Vertex AI, and Amazon Bedrock. It is also available in the Claude apps and Claude Code.

Claude Haiku 4.5 is also 3x cheaper than Claude Sonnet 4.5 on per-token pricing.

It also beats Sonnet 4 on some agent and computer-use tasks, so everyday tools feel faster and more capable. Pricing is $1/$5 per 1M input/output tokens, model id is claude-haiku-4-5, and it is available in the API, Claude apps, Amazon Bedrock, and Google Cloud Vertex AI.
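To make the pricing concrete, here is a minimal cost-estimate sketch. The $1/$5 per 1M token rates come from the figures above. The Sonnet rates are simply derived from the "3x cheaper" claim, and the token counts are made-up examples. The function name is hypothetical.

```python
# Rates in USD per 1M tokens, taken from the pricing quoted above.
HAIKU_INPUT_PER_MTOK = 1.0
HAIKU_OUTPUT_PER_MTOK = 5.0


def request_cost_usd(input_tokens: int,
                     output_tokens: int,
                     input_per_mtok: float = HAIKU_INPUT_PER_MTOK,
                     output_per_mtok: float = HAIKU_OUTPUT_PER_MTOK) -> float:
    """Estimate the cost of one request from its input and output token counts."""
    return (input_tokens / 1_000_000) * input_per_mtok \
        + (output_tokens / 1_000_000) * output_per_mtok


# Hypothetical request: 2,000 input tokens and 500 output tokens.
haiku_cost = request_cost_usd(2_000, 500)

# Same request at 3x the Haiku rates (derived from the "3x cheaper" claim).
sonnet_cost = request_cost_usd(2_000, 500,
                               input_per_mtok=3 * HAIKU_INPUT_PER_MTOK,
                               output_per_mtok=3 * HAIKU_OUTPUT_PER_MTOK)

print(f"Haiku:  ${haiku_cost:.4f} per request")
print(f"Sonnet: ${sonnet_cost:.4f} per request")
```

For this example request, the estimate comes to roughly $0.0045 on Haiku versus $0.0135 at the derived Sonnet rates. Note that output tokens dominate the bill quickly, since they cost 5x as much as input tokens.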

On software engineering, Haiku 4.5 scores 73.3% on SWE-bench Verified across 500 problems, using a simple scaffold and no extra test-time tricks. On terminal workflows it posts 41.0% on Terminal-Bench, and on real computer control it reaches 50.7% on OSWorld-Verified, which is stronger than Sonnet 4 on those tasks.

The team measured these with multiple runs and a 128K “thinking” budget, and they describe the exact prompts and tool configs used for reproducibility. For low-latency use like chat assistants, customer support agents, and pair programming, the point is to keep responses tight while staying smart.

Sonnet 4.5 can plan multi-step work then dispatch a team of Haiku 4.5 workers in parallel, which makes multi-agent coding and rapid prototyping feel snappier. Safety checks show lower misalignment rates than Sonnet 4.5 and Opus 4.1, and the model ships at AI Safety Level 2, reflecting limited CBRN risk under their tests.
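The planner-plus-workers pattern can be sketched roughly like this. This is only an illustration of the fan-out shape, not Anthropic's implementation: `plan` and `run_worker` are hypothetical stand-ins that return canned strings where a real system would call the larger and smaller model respectively.

```python
from concurrent.futures import ThreadPoolExecutor


def plan(task: str) -> list[str]:
    """Stand-in for the planner model: split a task into independent subtasks."""
    return [f"{task}: part {i}" for i in range(1, 4)]


def run_worker(subtask: str) -> str:
    """Stand-in for one worker model call handling a single subtask."""
    return f"done -> {subtask}"


def orchestrate(task: str, max_workers: int = 3) -> list[str]:
    """Plan once, then run all subtasks concurrently and collect the results."""
    subtasks = plan(task)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in input order, even though calls overlap.
        return list(pool.map(run_worker, subtasks))


for line in orchestrate("refactor module"):
    print(line)
```

Threads suit this shape because real worker calls would spend most of their time waiting on the network. The speedup depends on the subtasks being independent, which is why the planning step matters.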

In short, this is near-frontier ability at small-model latency and price, which is a strong fit for cost-sensitive production traffic. Anthropic believes it will be particularly appealing for free versions of AI products, where it can provide significant capabilities while minimizing server loads.

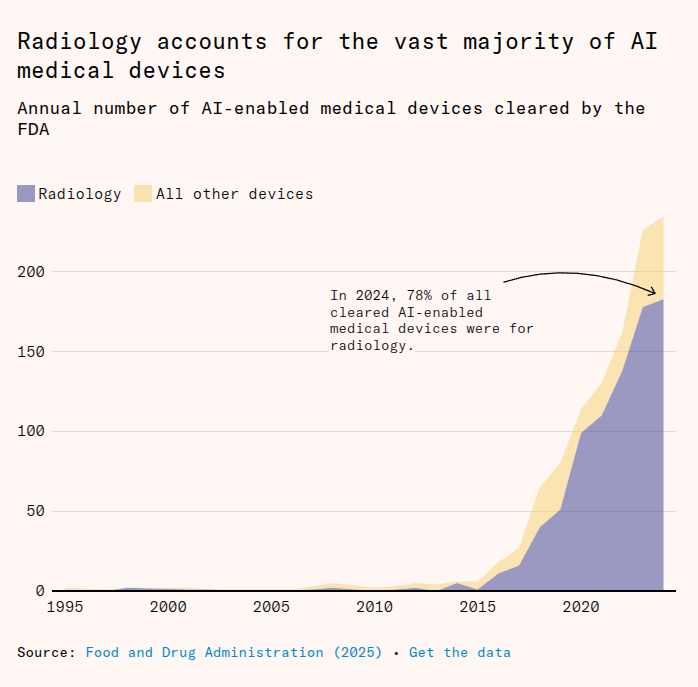

📡 AI Dominates Radiology, Yet Demand for Radiologists Soars

In 2016, Geoffrey Hinton, the Turing Award-winning computer scientist, said that ‘people should stop training radiologists now’.

Radiology accounts for the vast majority of AI medical devices, with more than 700 FDA-cleared AI models that beat doctors on tests.

AI tools like CheXNet, trained on 100,000 chest X-rays, can diagnose pneumonia faster and more accurately than many doctors, taking less than a second per scan. Despite this, hospitals still hire more radiologists, not fewer. In 2025, U.S. radiology residencies hit 1,208 openings, up 4% from the year before, and average pay jumped to $520,000, the second highest of any specialty.

This could be a classic example of Jevons paradox. Jevons paradox means that when a process becomes cheaper, faster, or more efficient, people end up using more of it instead of less.

In radiology, AI and digital imaging made scanning faster and cheaper to process. Once turnaround times dropped from days to hours, hospitals started ordering more scans for more patients, since imaging became a low-friction diagnostic tool. So even though each radiologist could handle more cases, the overall number of scans ballooned, which created more work overall.
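A quick worked example shows how the arithmetic can play out. All numbers below are hypothetical and chosen only to illustrate the effect. They are not data from any hospital or study.

```python
def radiologists_needed(scans_per_year: float,
                        scans_per_radiologist_per_year: float) -> float:
    """Return how many radiologists are needed to read a given scan volume."""
    return scans_per_year / scans_per_radiologist_per_year


# Hypothetical "before" state: 1M scans, each radiologist reads 10,000 a year.
before = radiologists_needed(1_000_000, 10_000)

# Hypothetical "after" state: each radiologist is 50% more productive,
# but cheaper and faster imaging pushes scan volume up by 80%.
after = radiologists_needed(1_800_000, 15_000)

print(before, after)
```

In this made-up scenario, the required headcount rises from 100 to 120 even though each radiologist reads 50% more scans. Demand for radiologists only falls if productivity grows faster than scan volume.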

Why AI hasn’t replaced radiologists

Three main problems hold AI back. First, models that look great on benchmark datasets often collapse in real hospitals. Many are trained and tested on data from only one site, and their accuracy can drop 20% when moved elsewhere. Second, each tool only handles one condition, like a stroke or lung nodule, so doctors would need to juggle dozens of them to cover a typical day. Third, regulation and insurance rules still demand a human in the loop.

🧠 PyTorch 2.9 is now released, introducing key updates to performance, portability, and the developer experience.

This release includes a stable libtorch ABI for C++/CUDA extensions, symmetric memory for multi-GPU kernels, expanded wheel support to include ROCm, XPU, and CUDA 13, and enhancements for Intel, Arm, and x86 platforms.

1. Symmetric Memory (Multi-GPU programming)

Now you can write multi-GPU kernels that directly communicate across GPUs, with very low latency. It’s great for training large models or MoE setups, since compute and communication can happen together instead of in separate steps.

2. Stable C++/CUDA ABI

PyTorch now makes it easier to build C++/CUDA extensions that stay compatible across versions. You don’t need to rebuild them every time you update PyTorch.

3. Graph Break Control in torch.compile

You can now control what happens when the compiler hits a graph break — either stop or continue — and toggle that inside specific regions of your code. It helps debug or tune compiled code more easily.

4. Wider Wheel Support

You can now get official install wheels for AMD (ROCm), Intel (XPU), and CUDA 13 — so PyTorch runs natively across more hardware setups.

5. FlexAttention Everywhere

FlexAttention, a more efficient attention kernel, now runs on Intel GPUs and even x86 CPUs (with flash decoding). This makes LLM inference faster, especially for long contexts.

6. Arm Platform Boosts

Better performance on Arm (such as AWS Graviton 4), with torch.compile now running faster than eager mode.

If you care about performance, hardware compatibility, or writing custom kernels, Symmetric Memory and FlexAttention are the biggest highlights.

That’s a wrap for today, see you all tomorrow.