Google unveiled Private AI Compute, running Gemini securely without exposing user data to Google.

Google launches privacy-focused Gemini cloud, LeCun may exit Meta, SoftBank exits Nvidia, plus a laptop-friendly RAG engine you can try now.

Read time: 6 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (12-Nov-2025):

⚙️ Google announced Private AI Compute, a cloud platform that runs Gemini on Google’s servers while promising that the user’s data remains visible only to the user, not even to Google.

📢 Meta’s chief AI scientist Yann LeCun is reportedly preparing to leave to start a new company.

🚨 SoftBank sold its entire Nvidia stake for $5.83B to raise cash for a planned $22.5B push into OpenAI and other AI bets.

📡 GitHub resource: LEANN, build a capable RAG engine right on your laptop!

⚙️ Google announced Private AI Compute, a cloud platform that runs Gemini on Google’s servers while promising that the user’s data remains visible only to the user, not even to Google.

The core of the system pairs Google’s custom TPUs with Titanium Intelligence Enclaves, hardware-isolated areas that run code so that the host cannot read user inputs or outputs, similar to trusted execution environments on other platforms but built into Google’s stack. The connection between the phone and the enclave uses encryption and remote attestation, in which the enclave proves that its code and hardware are the expected ones before any data is sent, a widely used method in secure enclave systems.

Google frames this as “no access”, meaning the data processed in Private AI Compute should not be inspectable by operators or services outside the enclave boundary, and the claim relies on attestation plus key management tied to the enclave state. Early user features include better Magic Cue suggestions on Pixel 10 and transcript summaries in more languages in Recorder, both tasks that benefit from large context and heavier reasoning across personal data yet retain the privacy promise by confining processing to the enclave.

The phone asks the enclave to run a Gemini prompt, the phone verifies the enclave’s identity, the enclave runs the model on TPUs, and only the user can access the sensitive inputs and outputs, with logs and keys scoped to that sealed context. The closest analog many will know is Apple’s Private Cloud Compute, and Google’s move mirrors that privacy-first split where simple tasks stay on-device and heavy ones go to an attested cloud enclave.
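
The handshake described above can be sketched in a few lines of Python. This is a toy model, not Google’s protocol: the names `measure`, `enclave_quote`, and `client_verify` are hypothetical, and a shared HMAC key stands in for the hardware-rooted asymmetric signatures that a real attestation scheme would use.

```python
import hashlib
import hmac
import secrets

# Assumption: a shared secret stands in for the hardware root of trust.
# Real attestation uses asymmetric signatures chained to a vendor certificate.
ROOT_KEY = secrets.token_bytes(32)


def measure(code: bytes) -> str:
    """Hash of the code the enclave claims to be running."""
    return hashlib.sha256(code).hexdigest()


def enclave_quote(code: bytes, nonce: bytes) -> dict:
    """Enclave side: sign the code measurement together with the client's nonce."""
    measurement = measure(code)
    tag = hmac.new(ROOT_KEY, measurement.encode() + nonce, hashlib.sha256).hexdigest()
    return {"measurement": measurement, "signature": tag}


def client_verify(quote: dict, nonce: bytes, expected_measurement: str) -> bool:
    """Phone side: accept only a fresh, correctly signed quote for the expected code."""
    expected_tag = hmac.new(
        ROOT_KEY, quote["measurement"].encode() + nonce, hashlib.sha256
    ).hexdigest()
    signature_ok = hmac.compare_digest(expected_tag, quote["signature"])
    code_ok = hmac.compare_digest(quote["measurement"], expected_measurement)
    return signature_ok and code_ok


if __name__ == "__main__":
    audited_code = b"gemini-serving-build"  # hypothetical placeholder, not a real build ID
    expected = measure(audited_code)
    nonce = secrets.token_bytes(16)  # fresh per session so old quotes cannot be replayed
    print(client_verify(enclave_quote(audited_code, nonce), nonce, expected))
    print(client_verify(enclave_quote(b"tampered-build", nonce), nonce, expected))
```

The first check passes and the second fails, which is the point of the design: the phone only releases data after the remote side proves it is running the code the phone expects.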

📢 Meta’s chief AI scientist Yann LeCun is reportedly preparing to leave to start a new company

A storm is brewing inside Meta as reports suggest that the company’s Chief Artificial Intelligence Scientist, Yann LeCun, is preparing to leave the social media giant to start his own venture.

LeCun, one of the most celebrated figures in artificial intelligence, is said to be in early talks to raise funds for the new startup, and the news has sparked intense debate online.

LeCun has long argued that current large language models (LLMs) are “useful” tools but not the path to human-like reasoning, and his planned startup will focus on “world models” that learn from video and spatial signals to plan and act, which is a very different bet from scaling text-only systems.

Inside Meta, power shifted from the long-horizon FAIR research group that LeCun created in 2013 to new product-aimed units, including a handpicked team building the next Llama models with aggressive hiring packages, and LeCun now reports to Alexandr Wang, Meta’s chief AI officer, rather than through the previous product chain.

So overall, Meta is doubling down on near-term LLM products under new leadership, while LeCun is stepping out to prove that video-grounded world models can close the reasoning gap that scaling text models has not.

🚨 BREAKING: SoftBank sold its entire Nvidia stake for $5.83B to raise cash for a planned $22.5B push into OpenAI and other AI bets.

Management framed the move as financing for AI expansion, not a change of view on Nvidia. The funding bundle also includes a $9.17B partial sale of T-Mobile shares plus bridge loans of $8.5B for OpenAI and $6.5B arranged for the Ampere Computing deal.

SoftBank said it increased its margin loan against Arm shares, giving it more borrowing headroom to deploy into upcoming investments. Those dollars are earmarked for OpenAI, the planned $6.5B Ampere acquisition, and a robotics push paired with ABB’s unit at roughly $5.4B, alongside the large US data-center build dubbed Stargate.
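
As a quick sanity check on the numbers, here is a small tally of the funding sources named above. It is only arithmetic on the figures in this section: the margin loan against Arm is left out because no amount is given, and the `tally` helper is my own illustrative name.

```python
# Figures in millions of USD, taken from the paragraphs above.
sources = {
    "Nvidia stake sale": 5_830,
    "T-Mobile partial sale": 9_170,
    "OpenAI bridge loan": 8_500,
    "Ampere bridge loan": 6_500,
}


def tally(items: dict) -> tuple:
    """Return the total and each item's share of that total."""
    total = sum(items.values())
    shares = {name: amount / total for name, amount in items.items()}
    return total, shares


total, shares = tally(sources)
asset_sales = sources["Nvidia stake sale"] + sources["T-Mobile partial sale"]

print(f"total: ${total / 1000:.1f}B")
print(f"asset sales: ${asset_sales / 1000:.1f}B ({asset_sales / total:.0%})")
```

On these figures the named sources add up to $30.0B, split evenly between asset sales and bridge loans.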

After the recap, SoftBank’s OpenAI ownership is set to rise from 4% to 11%. Profit jumped to ¥2.5T in Q2 FY2025, helped by Vision Fund gains linked to AI mark-ups.

Selling Nvidia does not sever ties with its ecosystem, since several SoftBank projects still rely on Nvidia hardware for training and inference capacity, including Stargate. A margin loan backed by Arm shares means SoftBank pledges Arm stock as collateral to borrow quickly, so it keeps Arm exposure while unlocking cash for deals.

So the simple tradeoff is that SoftBank swaps a liquid, high-beta position in Nvidia for concentrated exposure to model equity, chip design, robotics, and data-center build-outs, which raises financing complexity and execution risk. The upside case is direct participation in model economics and vertical integration across compute, chips, and applications, which could compound if OpenAI revenue and Stargate capacity scale on schedule.

The company is rotating from being a passenger in the Nvidia rally to being a principal in building and owning parts of the AI stack, and the bet will show up in cash burn and delivery milestones over the next few quarters. Even after selling its Nvidia stake, SoftBank still holds a strong position in the chip industry. It continues to own about 90% of Arm, whose processors power devices ranging from smartphones to Nvidia’s Grace Blackwell chips and upcoming AI-driven Windows laptops.

That ownership keeps SoftBank involved in AI hardware despite exiting Nvidia completely. Nvidia’s stock hasn’t reacted much to the sale, staying near record highs thanks to strong earnings and optimistic forecasts.

This stability remains even with investor Michael Burry betting against the company. Still, SoftBank’s move hints that big investors are rethinking where the next big wave of AI value will come from. SoftBank’s CFO Yoshimitsu Goto told Reuters that its investment in OpenAI is “very large,” and that it’s using existing assets to fund new bets.

📡 GitHub resource: LEANN, build a capable RAG engine right on your laptop!

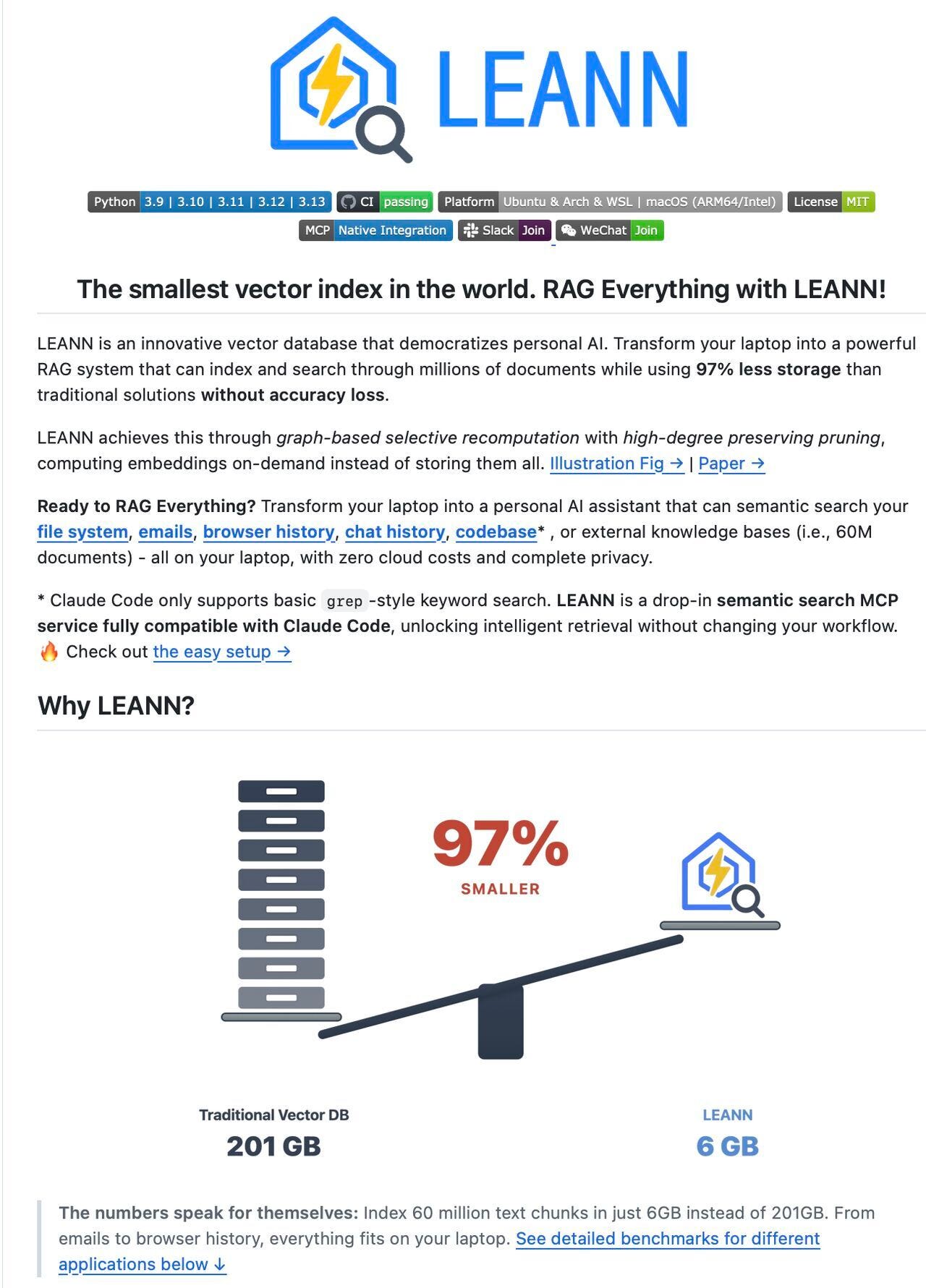

LEANN skips storing all embeddings and instead recomputes only what a search touches using a pruned graph, so the index stays tiny and still returns strong results.
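
To make the idea concrete, here is a minimal sketch of search-time recomputation over a neighbor graph. It is not LEANN’s code: `embed` is a toy letter-frequency stand-in for a real embedding model, and `search`, `budget`, and the tiny graph are hypothetical. What it shows is that only the chunks the search actually touches get embedded.

```python
import heapq
import math


def embed(text: str) -> list:
    """Toy embedding: normalized letter-frequency vector (stand-in for a real model)."""
    vec = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            vec[ord(ch) - ord("a")] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a: list, b: list) -> float:
    """Dot product of two already-normalized vectors."""
    return sum(x * y for x, y in zip(a, b))


def search(query: str, chunks: list, graph: list, entry: int, k: int = 2, budget: int = 2):
    """Best-first graph walk that embeds a chunk only when the walk reaches it."""
    q = embed(query)
    scores = {}  # node -> similarity, filled lazily; nothing is stored up front

    def score(node: int) -> float:
        if node not in scores:
            scores[node] = cosine(q, embed(chunks[node]))
        return scores[node]

    frontier = [(-score(entry), entry)]
    visited = set()
    while frontier and len(visited) < budget:
        _, node = heapq.heappop(frontier)
        if node in visited:
            continue
        visited.add(node)
        for nb in graph[node]:
            if nb not in visited and nb not in scores:
                heapq.heappush(frontier, (-score(nb), nb))
    top = sorted(scores, key=scores.get, reverse=True)[:k]
    return top, len(scores)


if __name__ == "__main__":
    chunks = ["aaaa", "aaab", "bbbb", "cccc", "dddd", "eeee"]
    graph = [[1, 2], [0], [0, 3], [2, 4], [3, 5], [4]]
    top, embedded = search("aaaa", chunks, graph, entry=2)
    print(top, f"embedded {embedded} of {len(chunks)} chunks")
```

The trade is extra compute per query in exchange for not keeping every vector on disk, which is why the pruned graph and batching matter so much in the real system.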

The core tricks are graph-based selective recomputation, high-degree preserving pruning to keep hub nodes, CSR storage to shrink graph size, two-level search to route quickly, and dynamic batching to keep the GPU busy.
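
Of these, CSR (compressed sparse row) storage is the easiest to show. The sketch below is a generic illustration, not LEANN’s implementation, and the helper names `to_csr` and `neighbors` are hypothetical. It packs a list-of-lists graph into two flat integer arrays, which avoids per-list object overhead.

```python
from array import array


def to_csr(adjacency: list) -> tuple:
    """Pack an adjacency list into CSR form: row offsets plus one flat neighbor array."""
    indptr = array("I", [0])  # indptr[i]:indptr[i + 1] is the slice holding node i's neighbors
    indices = array("I")      # every neighbor ID, stored back to back
    for node_neighbors in adjacency:
        indices.extend(node_neighbors)
        indptr.append(len(indices))
    return indptr, indices


def neighbors(indptr: array, indices: array, node: int) -> list:
    """Read one node's neighbors back out of the CSR arrays."""
    return list(indices[indptr[node]:indptr[node + 1]])


if __name__ == "__main__":
    adjacency = [[1, 2], [0], [0, 3], [2]]
    indptr, indices = to_csr(adjacency)
    print(list(indptr), list(indices))
    print(neighbors(indptr, indices, 2))
```

Lookup stays a simple slice, so the graph can be walked without rebuilding any per-node structure.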

For scale, the README reports 6GB for 60M chunks versus 201GB with a traditional setup, 79MB for 780K emails, and 6.4MB for 38K browser entries, which is dramatic when everything lives on a single machine.
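
A little arithmetic puts those figures in perspective. The snippet assumes decimal units (1GB = 10^9 bytes, 1MB = 10^6 bytes), which the README figures quoted above do not specify, and the helper names are my own.

```python
GB = 10**9  # assumption: decimal gigabytes
MB = 10**6  # assumption: decimal megabytes

# name: (index size in bytes, number of items), from the figures quoted above
reported = {
    "60M chunks": (6 * GB, 60_000_000),
    "780K emails": (79 * MB, 780_000),
    "38K browser entries": (6.4 * MB, 38_000),
}


def bytes_per_item(size_bytes: float, items: int) -> float:
    """Average index footprint per stored item."""
    return size_bytes / items


def reduction(baseline_bytes: float, compact_bytes: float) -> float:
    """How many times smaller the compact index is than the baseline."""
    return baseline_bytes / compact_bytes


if __name__ == "__main__":
    for name, (size, count) in reported.items():
        print(f"{name}: about {bytes_per_item(size, count):.0f} bytes per item")
    print(f"60M chunks: {reduction(201 * GB, 6 * GB):.1f}x smaller than the 201GB baseline")
```

Under that assumption, the 60M-chunk index works out to roughly 100 bytes per chunk and a 33.5x reduction against the traditional setup.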

For accuracy, the system claims parity with heavier vector databases because the recompute path embeds only the candidates that matter instead of relying on stale stored vectors.

It supports HNSW for maximum savings and DiskANN for speed, so developers can pick storage first or throughput first.

For data, it ingests PDFs, text, markdown, code with AST-aware chunking, emails, browser history, WeChat or iMessage, and even live sources through MCP like Slack or Twitter, so one index can cover personal and work knowledge.

For generation, it plugs into OpenAI-compatible, Ollama, or Hugging Face backends, so developers can keep everything local or mix in a cloud model if they want.
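
For a sense of what “OpenAI-compatible” buys you, here is a generic sketch of the request a RAG pipeline would send to such a backend. It does not use LEANN’s own API. The default URL is Ollama’s local OpenAI-compatible endpoint, while the model name `llama3.2`, the prompt wording, and `build_chat_request` are illustrative assumptions. The function only builds the request and does not send it.

```python
import json
import urllib.request


def build_chat_request(
    question: str,
    retrieved_chunks: list,
    base_url: str = "http://localhost:11434/v1",  # Ollama's OpenAI-compatible endpoint
    model: str = "llama3.2",  # assumption: any model your backend serves
) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request grounded in retrieved chunks."""
    context = "\n\n".join(
        f"[{i + 1}] {chunk}" for i, chunk in enumerate(retrieved_chunks)
    )
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Answer using only the numbered context."},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("When is the invoice due?", ["Invoice 17 is due on Friday."])
    print(req.full_url)
    # To actually call a running backend: urllib.request.urlopen(req)
```

Because the request shape is the same everywhere, switching from a local model to a cloud one is mostly a matter of changing `base_url`, `model`, and adding an API key header.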

For everyday use, the CLI is simple: build an index from a folder, run a search, or start an interactive ask session. Metadata filters and an optional grep mode make it practical for code and logs.

That’s a wrap for today, see you all tomorrow.

https://open.substack.com/pub/pramodhmallipatna/p/the-rise-of-private-ai-clouds