🔥 Google unveils ‘spectacular’ general-purpose AI for algorithm discovery

AlphaEvolve, DeerFlow, entropy-weighted quantization, Gemini everywhere, OpenAI’s research claims, Trump's AI policy rollback, and Microsoft’s ADeLe

Read time: 9 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (15-May-2025):

🔥 Google DeepMind dropped AlphaEvolve: a Gemini-powered coding agent for algorithm discovery

🥉ByteDance Open-Sources DeerFlow: A Modular Multi-Agent Framework for Deep Research Automation with code execution and TTS support.

🗞️ New Paper Proposes a Novel Way to Quantize LLMs: Entropy-Weighted Quantization Slashes Memory Without the Accuracy Hit

🚘Gemini smarts are coming to more Android devices and cars, TVs, and watches

🛠️ ‘AI models are capable of novel research’: OpenAI’s chief scientist on what to expect

🏛️ Trump Admin Finally Rolls Back Biden Era 'AI Diffusion Rule'

🪟 Microsoft introduces ADeLe, for Predicting and explaining AI model performance

🔥 Google DeepMind dropped AlphaEvolve: a Gemini-powered coding agent for algorithm discovery

🎯 The Brief

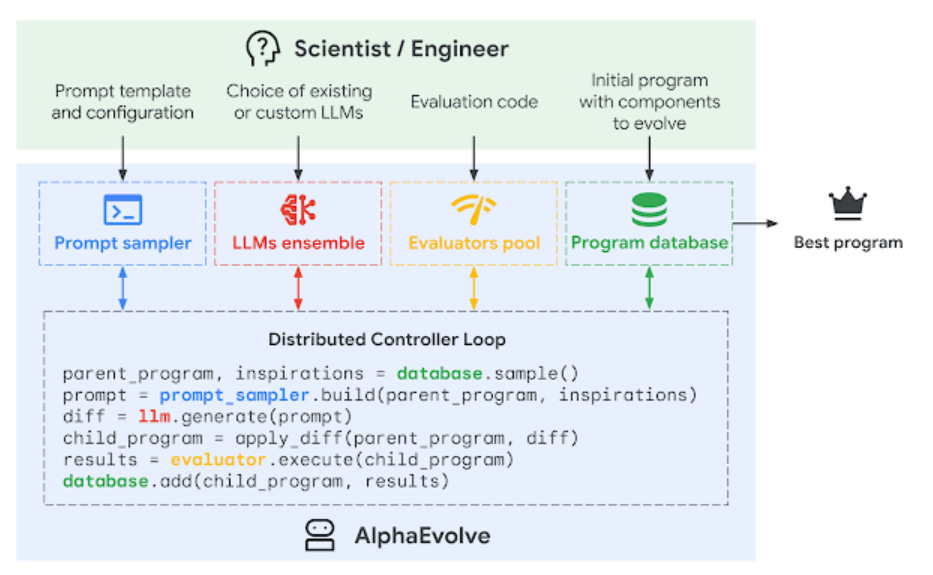

Google DeepMind launched AlphaEvolve, a Gemini-powered coding agent that discovers and evolves algorithms, outperforming its predecessor AlphaTensor. It achieved a 23% matrix kernel speedup, a 32.5% GPU kernel boost, and a 0.7% compute recovery in data centers, driving major efficiency gains in AI training, chip design, and math problem-solving.

⚙️ The Details

→ AlphaEvolve uses an ensemble of Gemini models—Flash for exploration and Pro for deep refinement—to generate and evolve code for algorithmic tasks. Programs are scored via automated evaluators, enabling objective progress tracking.

→ It discovered faster matrix multiplication algorithms, including one that multiplies 4x4 complex-valued matrices using only 48 scalar multiplications, improving on the 49 required by applying Strassen’s algorithm recursively.

→ It recovers, on average, 0.7% of Google's worldwide compute resources through a more efficient data center scheduling heuristic that remains human-interpretable.

→ Contributed Verilog-level enhancements to Google's TPU design pipeline.

→ Delivered a 23% speedup on a key matrix multiplication kernel, which translated into a 1% reduction in Gemini model training time.

→ Achieved 32.5% acceleration on FlashAttention GPU kernels, reducing dev cycles from weeks to days.

→ AlphaEvolve doesn't just optimize—it innovates. Given only a minimal code skeleton, it co-designed a novel gradient-based optimization procedure, creating multiple new matrix multiplication algorithms.

→ It was applied to more than 50 open problems in mathematics, spanning number theory, geometry, analysis, and combinatorics.

→ It rediscovered state-of-the-art solutions in 75% of experiments across number theory, geometry, analysis, and combinatorics, validating its mathematical depth.

→ In 20% of cases, AlphaEvolve pushed the frontier, like improving the kissing number problem in 11D with a new configuration of 593 spheres, setting a new lower bound.

→ Setup time for most math problems was just hours, enabling fast iteration and exploration across diverse domains.

→ Early access for academics is being planned, signaling potential broader availability.
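The generate, score, and select loop described above can be sketched in a few lines. This is a toy illustration under loud assumptions, not AlphaEvolve's implementation: the hypothetical `propose` function stands in for the Gemini models by applying a random mutation, a "program" is just a list of three coefficients, and the hypothetical `evaluate` function plays the role of the automated evaluator.

```python
import random

def evaluate(candidate):
    """Automated evaluator (assumed): higher is better.
    Scores a candidate by negative squared distance to a fixed target."""
    target = [3.0, -1.0, 2.0]
    return -sum((c - t) ** 2 for c, t in zip(candidate, target))

def propose(parent, rng, step=0.5):
    """Stand-in for an LLM proposing an edit: perturb one coefficient at random."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.uniform(-step, step)
    return child

def evolve(generations=200, population_size=8, seed=0):
    rng = random.Random(seed)
    population = [[0.0, 0.0, 0.0]]  # minimal starting "skeleton"
    for _ in range(generations):
        # Sample parents from the current pool and propose edits to them.
        children = [propose(rng.choice(population), rng)
                    for _ in range(population_size)]
        # Score every candidate and keep only the best ones (elitist selection).
        population = sorted(population + children,
                            key=evaluate, reverse=True)[:population_size]
    return population[0]

best = evolve()
print("best candidate:", best)
print("score:", evaluate(best))
```

Because the best candidates always survive into the next generation, the top score can never get worse, which is what makes progress objectively trackable when every candidate is scored by the same evaluator.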

🥉ByteDance Open-Sources DeerFlow: A Modular Multi-Agent Framework for Deep Research Automation with code execution and TTS support.

🎯 The Brief

ByteDance has launched DeerFlow, a fully open-source, multi-agent research automation framework that merges LLMs, code execution, and web data tools into a LangGraph-powered orchestration layer. It's significant for automating complex, multi-step research with modular agents, and supports open APIs, TTS, Docker, and web UIs.

⚙️ The Details

→ DeerFlow orchestrates research via a 5-agent setup: Coordinator, Planner, Researcher, Coder, and Reporter, handling everything from task planning to final report generation.

→ It uses LangGraph for state-based orchestration and LiteLLM for model-agnostic LLM support, compatible with Qwen and OpenAI APIs.

→ Integrated tools include Tavily, DuckDuckGo, Brave Search, and arXiv for research, and Volcengine for TTS with full control over pitch, speed, and volume.

→ Unlike conventional autonomous agents, DeerFlow embeds human feedback and interventions as an integral part of the workflow. Users can review agent reasoning steps, override decisions, or redirect research paths at runtime.

→ Fully MIT licensed. API keys are required for certain services.

→ It also includes MCP integration and Notion-style editing via tiptap. Check out their GitHub repo.
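To make the five-role flow concrete, here is a minimal plain-Python sketch of a shared state passing through Coordinator, Planner, Researcher, Coder, and Reporter, with an optional human review step on the plan. It deliberately does not use LangGraph or DeerFlow's actual API; every function name, state key, and the `review_plan` hook are assumptions made for illustration.

```python
from typing import Any, Callable, Dict, List, Optional

State = Dict[str, Any]

def coordinator(state: State) -> State:
    # Decide whether the request is worth handing to the planner.
    state["accepted"] = bool(state["question"].strip())
    return state

def planner(state: State) -> State:
    # A real planner would ask an LLM; this returns a canned plan.
    state["plan"] = ["search background", "run a quick calculation"]
    return state

def researcher(state: State) -> State:
    # A real researcher would call search tools; this writes placeholder notes.
    state["notes"] = [f"note for step: {step}" for step in state["plan"]]
    return state

def coder(state: State) -> State:
    # A real coder would execute generated code; this computes a trivial value.
    state["result"] = sum(len(note) for note in state["notes"])
    return state

def reporter(state: State) -> State:
    state["report"] = f"{len(state['notes'])} notes, computed value {state['result']}"
    return state

def run(question: str,
        review_plan: Optional[Callable[[List[str]], List[str]]] = None) -> State:
    state: State = {"question": question}
    state = coordinator(state)
    if not state["accepted"]:
        return state
    state = planner(state)
    if review_plan is not None:
        # Human-in-the-loop hook: a person may edit the plan before execution.
        state["plan"] = review_plan(state["plan"])
    for agent in (researcher, coder, reporter):
        state = agent(state)
    return state

# Example: the reviewer trims the plan to its first step.
final = run("How does entropy-weighted quantization work?",
            review_plan=lambda plan: plan[:1])
print(final["plan"])
print(final["report"])
```

Keeping all intermediate results in one state object is what lets a reviewer inspect or override any step before the next role runs.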

🗞️ New Paper Proposes a Novel Way to Quantize LLMs: Entropy-Weighted Quantization Slashes Memory Without the Accuracy Hit

The paper proposes a smart way to make LLMs smaller and faster without making them worse at their tasks: Entropy-Weighted Quantization (EWQ), which selects transformer blocks for quantization based on their weight entropy. The paper is from webAI.

This method outperforms existing uniform quantization approaches, keeping Massive Multitask Language Understanding (MMLU) accuracy within 0.5% of the unquantized model while reducing memory usage by up to 18%.

🤔 Original Problem

Current post-training weight-only uniform quantization methods degrade model accuracy and performance.

The authors propose Entropy-Weighted Quantization (EWQ), a method that selectively quantizes parts of an LLM. It figures out which transformer blocks (the building blocks of LLMs) can be safely squeezed into lower precision without causing a major performance drop. The key idea is to analyze the entropy of each block.

So EWQ looks at each transformer block of the model and decides how aggressively to quantize it based on that block's entropy.

Entropy measures how much unique information a layer holds. Layers with low entropy (less unique info) can be simplified a lot without hurting performance, while layers with high entropy (lots of unique info) need to stay more precise.

💾 It Saves Memory Without Sacrificing Quality

The paper shows EWQ can cut memory use by up to 18% while keeping the model’s performance almost as good as the original (within 0.5% on accuracy tests like MMLU). That’s like trimming a long book by nearly a fifth without losing the story.

🚀 It’s Fast with FastEWQ

There’s an even quicker version called FastEWQ that figures out how to quantize the model without loading all its data first. Loading big models takes time and resources, so skipping that step makes EWQ super practical—like planning your packing without opening the closet.

🔬 So how exactly is Entropy working here?

In information theory, entropy measures uncertainty or randomness. In this paper, the entropy of a transformer block's weights is used as a proxy for its information content or complexity.

📌 Low-entropy blocks: These blocks have weight distributions that are more predictable or contain redundant information. The paper hypothesizes these can be quantized more aggressively (e.g., to 4-bit or even 1.58-bit) with less impact on performance. Think of it like a highly repetitive image; you can compress it a lot without losing much detail.

📌 High-entropy blocks: These blocks have more complex, less predictable weight distributions. They likely hold crucial information for the LLM's performance and are thus treated more gently – quantized to a higher precision (e.g., 8-bit) or left unquantized.
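The entropy-to-precision idea above can be sketched as follows. This is an illustration under stated assumptions rather than the paper's exact procedure: entropy is estimated here from a simple fixed-range histogram of the weights, the three "blocks" are synthetic, and the thresholds in the hypothetical `choose_bits` function are made up for the demo.

```python
import math
import random

def weight_entropy(weights, bins=64, lo=-1.0, hi=1.0):
    """Shannon entropy (in bits) of a histogram over the weight values."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for w in weights:
        # Clamp out-of-range values into the first or last bin.
        idx = min(bins - 1, max(0, int((w - lo) / width)))
        counts[idx] += 1
    total = len(weights)
    return -sum((c / total) * math.log2(c / total) for c in counts if c)

def choose_bits(entropy, low=3.0, high=5.0):
    """Illustrative thresholds (assumed): lower entropy gets fewer bits."""
    if entropy < low:
        return 4
    if entropy < high:
        return 8
    return 16  # leave the block at its original precision

rng = random.Random(0)
blocks = {
    "narrow": [rng.gauss(0.0, 0.02) for _ in range(4096)],     # very predictable
    "medium": [rng.gauss(0.0, 0.10) for _ in range(4096)],
    "wide":   [rng.uniform(-1.0, 1.0) for _ in range(4096)],   # close to maximal entropy
}

plan = {name: choose_bits(weight_entropy(w)) for name, w in blocks.items()}
for name, w in blocks.items():
    print(f"{name}: entropy={weight_entropy(w):.2f} bits -> {plan[name]}-bit")
```

The tightly clustered block lands in only a few histogram bins, so its entropy is low and it is assigned 4 bits, while the near-uniform block spreads over all bins and is kept at 16 bits.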

🚘Gemini smarts are coming to more Android devices and cars, TVs, and watches

🎯 The Brief

Google is embedding its Gemini AI across Android devices (smartwatches, TVs, cars, and XR headsets) to create a unified, multimodal assistant layer. This is a major push to turn Gemini into an always-available, context-aware AI across the Android ecosystem.

⚙️ The Details

→ Wear OS smartwatches will get Gemini in the coming months, enabling natural voice-based tasks like reminders and app queries without needing to touch your phone.

→ Google TV will integrate Gemini later this year for smarter content recommendations and interactive learning—e.g., pulling up YouTube videos for kids' questions.

→ Android Auto is getting Gemini soon, enabling conversational commands while driving—summarizing texts, translating replies, or suggesting stops en route.

→ Gemini’s XR integration with Samsung’s upcoming headset will offer spatial planning tools, combining media, maps, and suggestions into immersive itineraries.

→ This rollout also includes earbuds from Sony and Samsung, transforming Gemini into a continuous assistant across the full Android device stack.

🛠️ ‘AI models are capable of novel research’: OpenAI’s chief scientist on what to expect

🎯 The Brief

OpenAI’s chief scientist Jakub Pachocki confirmed plans to release its first open-weight model since GPT-2, aiming to outperform all current open models. He stressed that AI models can already discover novel insights and anticipates reaching his AGI benchmark — measurable economic and research impact — by decade’s end.

⚙️ The Details

→ Pachocki sees growing evidence that models are capable of generating novel scientific research, though their reasoning differs from human cognition.

→ He highlights OpenAI’s Deep Research tool, which can synthesize information autonomously for 10–20 minutes, producing valuable outputs with minimal compute.

→ The focus is shifting toward more compute-intensive efforts on unsolved problems, like autonomous software engineering and hardware design.

→ Reinforcement learning (RL) is becoming central: it's no longer just shaping behavior, as in RLHF, but fostering model-originated reasoning strategies beyond pre-training.

→ Pachocki’s AGI definition hinges on real-world scientific contributions and economic impact, not just conversational abilities.

🏛️ Trump Admin Finally Rolls Back Biden Era 'AI Diffusion Rule'

🎯 The Brief

The Trump administration rescinded Biden-era global AI chip export controls, shifting to country-specific negotiations while maintaining strict restrictions on China. This move, backed by major tech players, aims to preserve innovation and global partnerships without blanket limits.

⚙️ The Details

→ The Biden rule, set to start May 15, classified countries into three tiers with varying export restrictions.

→ The Department of Commerce (DOC) canceled the rule just days before activation, citing damage to U.S. innovation and foreign diplomacy.

→ Trump's DOC will instead negotiate country-by-country deals, ditching the broad tiered approach. Bloomberg reports a full regulatory rewrite is in progress.

→ New guidance still treats use of Huawei’s Ascend AI chips anywhere in the world as a U.S. export control violation and warns against using American chips to train Chinese AI models.

→ This shift reflects deeper coordination with U.S. tech giants and signals a pragmatic, industry-driven export control policy.

🪟 Microsoft introduces ADeLe, for Predicting and explaining AI model performance

🎯 The Brief

Microsoft researchers introduced ADeLe, a new evaluation framework using 18 cognitive/knowledge ability scales, predicting AI task performance with 88% accuracy. It moves beyond benchmarks to explain why models fail or succeed, enhancing LLM reliability and diagnostics.

⚙️ The Details

→ ADeLe evaluates how demanding a task is for AI across 18 annotated abilities like reasoning, attention, and domain knowledge, each scored 0–5. It creates a profile mapping a model’s capacity against task requirements.

→ It assessed 63 tasks from 20 benchmarks using 16,000 examples, profiling 15 LLMs including GPT-4o and LLaMA-3.1-405B. Models were compared by ability performance curves and scored for difficulty thresholds (50% success point).

→ Findings: benchmarks often misrepresent what they test. Some include unrelated ability demands, and many omit simple/hard tasks.

→ ADeLe predicts model performance on unseen tasks with ~88% accuracy, outperforming existing methods.

→ Framework generalizes to multimodal systems and could serve policy, auditing, and research standardization.
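The "50% success point" idea can be illustrated with a small sketch. It is a simplification under stated assumptions, not Microsoft's method: the results below are invented for a single ability scale, and the hypothetical `ability_score` function finds the demand level where the success rate crosses 50% by linear interpolation between adjacent levels.

```python
from collections import defaultdict

def success_by_level(results):
    """results: iterable of (demand_level, passed) pairs for one ability scale."""
    totals, passes = defaultdict(int), defaultdict(int)
    for level, passed in results:
        totals[level] += 1
        passes[level] += int(passed)
    return {level: passes[level] / totals[level] for level in sorted(totals)}

def ability_score(rates, threshold=0.5):
    """Demand level at which the success rate falls to `threshold`."""
    levels = sorted(rates)
    for lo, hi in zip(levels, levels[1:]):
        r_lo, r_hi = rates[lo], rates[hi]
        if r_lo >= threshold > r_hi:
            # Interpolate linearly between the two neighbouring levels.
            return lo + (r_lo - threshold) / (r_lo - r_hi) * (hi - lo)
    # No crossing observed: the model is above or below threshold everywhere.
    return float(levels[-1]) if rates[levels[-1]] >= threshold else float(levels[0])

# Invented outcomes for one model on tasks annotated with "reasoning" demand levels.
results = (
    [(1, True)] * 4
    + [(2, True)] * 3 + [(2, False)]
    + [(3, True)] + [(3, False)] * 3
    + [(4, False)] * 4
)

rates = success_by_level(results)
ability = ability_score(rates)
print(rates)
print("estimated reasoning ability:", ability)
```

Repeating this for each ability scale would give a per-model profile that can be compared against the demand annotations of a new task.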

That’s a wrap for today, see you all tomorrow.