🤖 Google unveils ultra-small and efficient open source AI model Gemma 3 270M that can run on smartphones

Google drops a smartphone-friendly LLM, Meta’s DINOv3 pushes self-supervised vision, Claude adds Opus for coding, and MIT uses generative AI for antibiotic discovery.

Read time: 10 min


(I publish this newsletter daily: noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (15-Aug-2025):

🤖 Google unveils ultra-small and efficient open source AI model Gemma 3 270M that can run on smartphones.

🛠️ Claude Code has a new /model option: Opus for plan mode.

🦖 Meta AI Just Released DINOv3: A State-of-the-Art Computer Vision Model Trained with Self-Supervised Learning, Generating High-Resolution Image Features

🛠️ New Paper does a Comprehensive Survey of Self-Evolving AI Agents

💊 MIT Researchers just used generative AI to create new drug compounds capable of destroying bacteria that no longer respond to existing drugs

🤖 Google unveils ultra-small and efficient open source AI model Gemma 3 270M that can run on smartphones

Gemma 3 270M has 270 million parameters in total: 170 million are embedding parameters, owing to its large vocabulary, and 100 million sit in the transformer blocks.
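The 170M/100M split is easy to sanity-check with arithmetic. This minimal sketch assumes a vocabulary of 262,144 tokens and an embedding width of 640. Neither number appears in this article, so treat both as assumptions.

```python
# Assumed values (not stated in the article): vocabulary size and embedding width.
VOCAB_SIZE = 262_144
HIDDEN_SIZE = 640

def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """One learned vector of `hidden_size` numbers per vocabulary entry."""
    return vocab_size * hidden_size

emb = embedding_params(VOCAB_SIZE, HIDDEN_SIZE)
print(f"embedding table: {emb / 1e6:.1f}M parameters")  # 167.8M, close to the quoted 170M
```

Under these assumptions the lookup table alone is larger than all the transformer blocks combined. That is why a big vocabulary dominates the parameter count of such a small model.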

A standout feature of the new model is its energy efficiency.

On a Pixel 9 Pro SoC, the INT4-quantized model used just 0.75% of the battery for 25 conversations.
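The battery figure can be converted into a per-conversation cost. The sketch below assumes two things: drain scales linearly with the number of conversations, and every conversation is the same length. This is an idealization, and real usage will differ.

```python
BATTERY_USED_PCT = 0.75   # from the article: INT4 model on a Pixel 9 Pro SoC
CONVERSATIONS = 25

per_conversation = BATTERY_USED_PCT / CONVERSATIONS   # percent of battery per conversation
full_charge_estimate = 100 / per_conversation         # naive linear extrapolation
print(f"{per_conversation:.2f}% per conversation, "
      f"~{full_charge_estimate:,.0f} conversations per full charge")
```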

Google is positioning Gemma 3 270M as ideal for high-volume, well-defined tasks such as sentiment analysis, entity extraction, and creative writing.

Despite being small, it can manage complex, domain-specific tasks and be fine-tuned in just minutes for either enterprise or independent projects. It can even run inside a browser, on a Raspberry Pi, and, jokingly, “in your toaster,” as Google’s Omar Sanseviero put it.

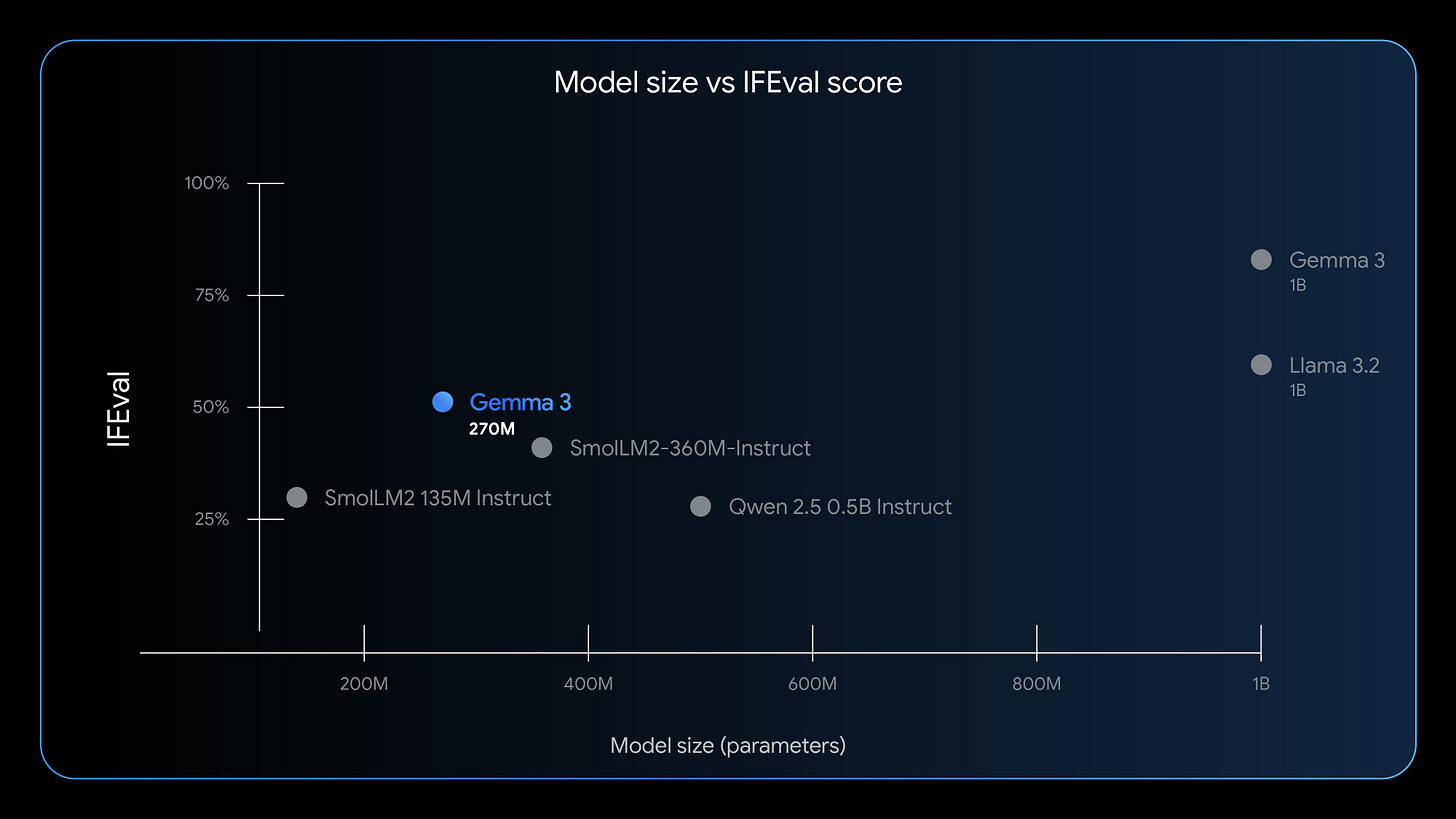

On the IFEval benchmark, the instruction-tuned version scored 51.2%, beating other small models like SmolLM2 135M Instruct and Qwen 2.5 0.5B Instruct, though below Liquid AI’s LFM2-350M at 65.12%.

It is available in pretrained and instruction-tuned versions, with quantization-aware training (QAT) checkpoints for efficient INT4 precision. Google’s argument is that specialized small models can often outperform larger ones on tasks like sentiment analysis, entity extraction, query routing, and creative writing.
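QAT checkpoints come from training with the quantization step simulated in the forward pass, so the weights learn to tolerate INT4 rounding. The sketch below shows that "fake quantization" step for one small weight vector, using a simple symmetric per-tensor scale. That scale choice is an assumption: production INT4 schemes usually use per-channel or per-group scales. Training also needs a straight-through estimator for gradients, which is omitted here. The sketch then estimates weight storage for 270M parameters at 16 and 4 bits.

```python
def fake_quant(weights, num_bits=4):
    """Quantize to signed integers with one shared scale, then map back to floats."""
    qmax = 2 ** (num_bits - 1) - 1            # 7 for INT4; representable range is -8..7
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level, then clamp into the INT4 range.
    q = [max(-qmax - 1, min(qmax, round(w / scale))) for w in weights]
    dequant = [level * scale for level in q]  # the values the forward pass actually sees
    return q, scale, dequant

def weight_megabytes(num_params, bits_per_weight):
    """Storage for the weights alone (ignores scales, activations and KV cache)."""
    return num_params * bits_per_weight / 8 / 1e6

weights = [0.7, -0.3, 0.12, -0.7]
q, scale, approx = fake_quant(weights)
print(q)                                # [7, -3, 1, -7]
print(weight_megabytes(270e6, 16))      # 540.0 (16-bit weights)
print(weight_megabytes(270e6, 4))       # 135.0 (INT4 weights)
```

The quantization error per weight is bounded by half a step (`scale / 2`). QAT lets the model adjust to exactly this error before deployment.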

A demo “Bedtime Story Generator” app shows it creating offline, browser-based interactive stories using custom inputs for character, setting, twist, theme, and length.

Gemma 3 270M is released under the Gemma Terms of Use, which permit commercial use subject to some restrictions. Businesses retain full rights over the outputs. It is an open-weights model rather than fully open source, but the terms allow wide commercial deployment.

🛠️ Claude Code has a new /model option: Opus for plan mode.

Claude Code’s new Plan Mode is seriously useful.

You just explain what you need done, and it creates a plan for you.

You can refine the plan as many times as you want, then leave plan mode so it can build everything based on that plan.

“Opus plan mode” is a model-routing choice in Claude Code: Opus handles the planning phase, which runs in plan mode, and plan mode is limited to read-only analysis.

Use it when you want careful design or review before touching the codebase, for example large refactors, migrations, security reviews, or writing an implementation plan. Anthropic’s SDK page even calls out plan mode as useful for code reviews and planning changes, since it forbids edits and command execution.

To try it out, select “Opus plan mode” in the model selector (/model). You get the benefit of Opus 4.1’s intelligence for planning and Sonnet 4’s speed (and lower cost) for execution.

You can toggle between modes (default, auto-accept, and plan) with Shift+Tab. This setting is available now to all Claude Code users with Opus access in the latest Claude Code update.
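To make the routing concrete, here is a hypothetical model of the behaviour described above. It is not Claude Code's implementation or configuration format. `Mode`, `next_mode`, `route`, and `may_edit` are illustrative names only, and the cycling order simply follows the order the modes are listed in.

```python
from enum import Enum

class Mode(Enum):
    DEFAULT = "default"
    AUTO_ACCEPT = "auto-accept"
    PLAN = "plan"

# Hypothetical Shift+Tab cycle, in the order the modes are listed above.
CYCLE = [Mode.DEFAULT, Mode.AUTO_ACCEPT, Mode.PLAN]

def next_mode(current: Mode) -> Mode:
    """Advance to the next mode, wrapping from plan back to default."""
    return CYCLE[(CYCLE.index(current) + 1) % len(CYCLE)]

def route(mode: Mode) -> str:
    """'Opus plan mode' routing: the stronger model plans, the faster one executes."""
    return "Opus 4.1" if mode is Mode.PLAN else "Sonnet 4"

def may_edit(mode: Mode) -> bool:
    """Plan mode is read-only: no file edits and no command execution."""
    return mode is not Mode.PLAN

mode = Mode.DEFAULT
for _ in range(3):
    mode = next_mode(mode)
    print(mode.value, route(mode), "edits allowed" if may_edit(mode) else "read-only")
```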

🦖 Meta AI Just Released DINOv3: A State-of-the-Art Computer Vision Model Trained with Self-Supervised Learning, Generating High-Resolution Image Features

DINOv3 is revolutionary: a new state-of-the-art vision backbone trained to produce rich, dense image features. I loved their demo video so much that I decided to re-create their visualization tool.

A major step up for self-supervised vision foundation models.

Trained on 1.7B curated images with no annotations

Gram anchoring fixes the feature-map degradation that sets in when models are trained too big for too long

Packs high-resolution dense features that redefine what’s possible in vision tasks.

DINOv3 shows a single self-supervised vision backbone can beat specialized models on dense tasks, consistently across benchmarks.

Self-supervised learning means the model learns from raw images without labels by predicting consistency across different views. A vision backbone is the feature extractor that turns each image into numbers a downstream head can use. Frozen means those backbone weights stay fixed while tiny task heads are trained on top.
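The "consistency across views" idea can be sketched with the self-distillation loss from the original DINO work, which the DINOv3 line builds on. A teacher network sees one crop and a student sees another crop of the same image. The student is pushed to match the teacher's centered, sharpened output distribution, and the teacher is a slow moving average of the student. This is a simplified single-example version. The temperatures and momentum are assumed typical values, and DINOv3's full recipe adds further objectives that are not shown.

```python
import math

def log_softmax(logits, temperature):
    """Numerically stable log-softmax of logits divided by a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    log_sum = m + math.log(sum(math.exp(x - m) for x in scaled))
    return [x - log_sum for x in scaled]

def dino_loss(student_logits, teacher_logits, center, t_student=0.1, t_teacher=0.04):
    """Cross-entropy from the teacher's centered, sharpened output to the student's output."""
    teacher_log_p = log_softmax([t - c for t, c in zip(teacher_logits, center)], t_teacher)
    p_teacher = [math.exp(lp) for lp in teacher_log_p]   # treated as a fixed target
    log_p_student = log_softmax(student_logits, t_student)
    return -sum(pt * ls for pt, ls in zip(p_teacher, log_p_student))

def ema_update(teacher_weights, student_weights, momentum=0.996):
    """The teacher tracks a moving average of the student instead of receiving gradients."""
    return [momentum * t + (1 - momentum) * s for t, s in zip(teacher_weights, student_weights)]

# Teacher output for one crop; two possible student outputs for another crop.
teacher = [2.0, 0.5, -1.0]
center = [0.0, 0.0, 0.0]
agree = dino_loss([2.0, 0.5, -1.0], teacher, center)
disagree = dino_loss([-1.0, 0.5, 2.0], teacher, center)
print(f"agreeing views: {agree:.4f}, disagreeing views: {disagree:.4f}")
```

No labels appear anywhere. The only training signal is whether two views of the same image produce matching outputs.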

Scaling is the trick here. The team trains a 7B-parameter model on 1.7B curated images using a label-free recipe, then exposes high-resolution features that describe the image in fine local detail.

Because those features are so rich, small adapters or even a simple linear head can solve tasks with few annotations.
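A minimal sketch of the frozen-backbone workflow follows. The "backbone" is a hand-written fixed function standing in for DINOv3, which is an assumption for illustration. Only a logistic-regression head is trained on its outputs. The four labelled points form an XOR pattern, which no linear head can separate using the raw inputs. The pattern becomes linearly separable once the fixed features include the product term.

```python
import math

def frozen_backbone(x):
    """Stand-in feature extractor with no trainable weights; it never changes."""
    return [x[0], x[1], x[0] * x[1]]

def train_linear_head(features, labels, lr=0.5, epochs=200):
    """Logistic-regression head fitted with plain per-example gradient descent."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))
            grad = p - y                      # derivative of log-loss with respect to z
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b

def predict(w, b, f):
    return int(sum(wi * fi for wi, fi in zip(w, f)) + b > 0)

inputs = [(1, 1), (-1, -1), (1, -1), (-1, 1)]   # XOR-style labelling
labels = [1, 1, 0, 0]
feats = [frozen_backbone(x) for x in inputs]    # computed once; the backbone stays frozen
w, b = train_linear_head(feats, labels)
print([predict(w, b, f) for f in feats])        # [1, 1, 0, 0]
```

The head has four numbers to learn. All the representational work was done by the fixed features, which is the bet a frozen-backbone workflow makes.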

DINOv3 fixes a core problem in self-supervised vision: it keeps per-pixel features clean and detailed at high resolution.

Dense feature maps are the per-pixel descriptors the backbone spits out. Tasks like depth estimation, 3D matching, detection, and segmentation depend on those maps being sharp and geometric, not just semantically smart. If the maps are good, tiny heads can work off the shelf with little post-processing.

Big models trained on huge image piles often drift toward only high-level understanding. That washes out local detail, so the dense maps collapse and lose geometry. Longer training can make this worse.

DINOv3 adds Gram anchoring. In plain terms, it keeps the pairwise similarities between patch features close to those of an earlier, better-behaved checkpoint of the model (the “Gram teacher”). As a result, local patterns stay diverse and structured while the model learns global semantics. This balances global recognition with pixel-level quality, even when training is long and the model is large.
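A minimal sketch of a Gram-anchoring-style penalty, assuming the formulation as we understand it from the DINOv3 paper. The sketch builds the patch-by-patch cosine-similarity (Gram) matrix of the student's dense features and of an earlier "Gram teacher" checkpoint, then penalizes the squared difference. It handles a single image with no batching, and the function names are illustrative. Only relative similarities are compared, so the student is free to change its features as long as the patch-to-patch structure survives.

```python
import math

def l2_normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)

def gram(patch_features):
    """Patch-by-patch cosine-similarity matrix for one image's dense features."""
    X = [l2_normalize(p) for p in patch_features]
    return [[sum(a * b for a, b in zip(xi, xj)) for xj in X] for xi in X]

def gram_anchoring_loss(student_patches, gram_teacher_patches):
    """Squared Frobenius distance between the two similarity structures."""
    Gs, Gt = gram(student_patches), gram(gram_teacher_patches)
    return sum((s - t) ** 2 for rs, rt in zip(Gs, Gt) for s, t in zip(rs, rt))

teacher = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]     # 3 patches, 2-dim features
rotated = [[0.0, 1.0], [-1.0, 0.0], [-1.0, 1.0]]   # same features rotated by 90 degrees
collapsed = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]   # every patch looks identical
print(f"{gram_anchoring_loss(rotated, teacher):.3f}")    # 0.000: structure preserved
print(f"{gram_anchoring_loss(collapsed, teacher):.3f}")  # 2.343: local detail washed out
```

A rotated feature space costs nothing, but patches that have collapsed onto one another are penalized. This matches the failure mode described above, where dense maps lose their local structure during long training.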

The result is stronger dense feature maps than DINOv2, clean at high resolutions, which directly boosts downstream geometric tasks and makes frozen-backbone workflows far more practical.

🛠️ New Paper does a Comprehensive Survey of Self-Evolving AI Agents

This paper is a brilliant resource: A Comprehensive Survey of Self-Evolving AI Agents

Self-evolving agents are built to adapt themselves safely rather than just run fixed scripts, guided by 3 laws: Endure, Excel, Evolve. The survey maps a 4-stage shift:

MOP (Model Offline Pretraining) to

MOA (Model Online Adaptation) to

MAO (Multi-Agent Orchestration) to

MASE (Multi-Agent Self-Evolving).

It then lays out a simple feedback loop to optimise prompts, memory, tools, workflows and even the agents themselves.

The authors formalise 3 laws: Endure (safety and stability during any change), Excel (preserving or improving task quality), and Evolve (autonomous optimisation when tasks, contexts, or resources shift). These laws act as practical constraints on any update loop, so the agent improves without breaking guardrails it already satisfied.
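The three laws can be read as acceptance tests on each proposed update. This is a hypothetical sketch, not code from the survey: `AgentConfig`, the toy score and the toy guardrail are all invented for illustration. Endure maps to a safety check, Excel to a no-regression check, and Evolve to the loop that keeps proposing changes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentConfig:
    prompt: str
    tools: tuple = ()

def self_evolve(config, proposals, evaluate, is_safe):
    """Apply proposed updates one by one, keeping only those that pass both checks."""
    history = []
    for update in proposals:                          # Evolve: keep trying changes
        candidate = update(config)
        if not is_safe(candidate):                    # Endure: never accept an unsafe state
            history.append("rejected: unsafe")
        elif evaluate(candidate) < evaluate(config):  # Excel: never accept a regression
            history.append("rejected: regression")
        else:
            config = candidate
            history.append("accepted")
    return config, history

# Toy stand-ins (hypothetical): more tools score higher; shell access is forbidden.
def evaluate(cfg):
    return len(cfg.tools)

def is_safe(cfg):
    return "shell" not in cfg.tools

proposals = [
    lambda c: AgentConfig(c.prompt, c.tools + ("search",)),
    lambda c: AgentConfig(c.prompt, c.tools + ("shell",)),
    lambda c: AgentConfig(c.prompt, ()),              # drops every tool
    lambda c: AgentConfig(c.prompt, c.tools + ("calculator",)),
]
final, history = self_evolve(AgentConfig("You are a helpful agent."), proposals, evaluate, is_safe)
print(final.tools)   # ('search', 'calculator')
print(history)
```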

Key takeaway: improvement happens inside the system through repeated interaction with an environment, with evaluation and safety checks built into every cycle.

💊 MIT Researchers just used generative AI to create new drug compounds capable of destroying bacteria that no longer respond to existing drugs

Scale and novelty drive this result. Instead of screening known compound catalogs, the team generated over 36M hypothetical molecules, then scored them with graph neural networks that read atoms and bonds as a graph.
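To show what "reading atoms and bonds as a graph" means, here is a toy message-passing sketch. It is not the study's model. Real graph neural networks apply learned weight matrices and nonlinearities at every round, whereas this version only sums neighbour vectors. The readout weights are made-up numbers standing in for a trained scoring head. The molecule is ethanol's heavy-atom skeleton (C-C-O), with hydrogens omitted.

```python
# Toy molecule: atoms are nodes, bonds are undirected edges (C-C-O).
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]
ATOM_FEATURES = {"C": [1.0, 0.0], "O": [0.0, 1.0]}   # one-hot element type

def message_passing(atoms, bonds, rounds=2):
    """Each round, every atom adds its neighbours' current vectors to its own."""
    h = [list(ATOM_FEATURES[a]) for a in atoms]
    neighbors = {i: [] for i in range(len(atoms))}
    for i, j in bonds:
        neighbors[i].append(j)
        neighbors[j].append(i)
    for _ in range(rounds):
        # The comprehension reads the previous round's h before it is replaced.
        h = [[h[i][k] + sum(h[j][k] for j in neighbors[i]) for k in range(len(h[i]))]
             for i in range(len(h))]
    return h

def readout(h, weights):
    """Sum-pool atom vectors into one molecule vector, then apply a linear score."""
    pooled = [sum(col) for col in zip(*h)]
    return sum(w * x for w, x in zip(weights, pooled))

h = message_passing(atoms, bonds)
print(h)                                        # [[4.0, 1.0], [5.0, 2.0], [3.0, 2.0]]
print(round(readout(h, [0.1, 0.3]), 2))         # 2.7 (hypothetical "activity score")
```

After two rounds, each carbon's vector already reflects the nearby oxygen. This is how a graph model picks up local chemical context before scoring the whole molecule.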

The payoff is reach into new chemical space: the pipeline delivers structures that existing catalogs do not contain, and finds them quickly and cheaply by using learned scoring models.

Basically, instead of searching existing drug shelves, the team asked AI to imagine and rate 36M brand new molecules. Think of it like a massive idea generator that proposes chemical shapes, then scientists pick a small shortlist to build and test.

From that shortlist, chemists made 24 molecules, 7 worked against the target bacteria, and 2 were strong enough to help infected mice recover. That is quick compared with the usual slow hunt through limited catalogs.

Two drug candidates, NG1 and DN1, were generated by the algorithms, each attacking bacteria in ways no existing antibiotic does. In mouse tests, DN1 cleared MRSA skin infections and NG1 fought off drug-resistant gonorrhea. The MIT researchers believe AI breakthroughs could usher in a “second golden age” for antibiotic research.

The study’s workflow figure summarizes how generative AI was used to design and validate the new antibiotics.

The top panels show 2 entry routes. In fragment-based design, the team starts from a small fragment with antibacterial signal, then algorithms propose chemically reasonable modifications and complete molecules. In de novo design, the models generate full molecules from scratch with only basic chemistry rules. In both cases, graph neural network models score candidates for predicted antibacterial activity and filter out toxic or non-drug-like ones.
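The score-and-filter step can be sketched as a simple ranking with hard cut-offs. Everything here is hypothetical: the field names, the thresholds and the example scores are invented. In the study, the activity and toxicity predictions would come from the trained graph models rather than hand-entered numbers.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    activity: float   # predicted antibacterial activity, 0..1 (from a scoring model)
    toxicity: float   # predicted toxicity, 0..1
    druglike: bool    # passes basic drug-likeness rules

def shortlist(candidates, k, min_activity=0.5, max_toxicity=0.3):
    """Drop inactive, toxic or non-drug-like molecules, then keep the top k by activity."""
    keep = [c for c in candidates
            if c.activity >= min_activity and c.toxicity <= max_toxicity and c.druglike]
    return sorted(keep, key=lambda c: c.activity, reverse=True)[:k]

pool = [
    Candidate("mol-A", 0.90, 0.10, True),
    Candidate("mol-B", 0.95, 0.60, True),    # most active, but predicted toxic
    Candidate("mol-C", 0.70, 0.20, False),   # fails drug-likeness
    Candidate("mol-D", 0.60, 0.05, True),
    Candidate("mol-E", 0.30, 0.00, True),    # predicted inactive
]
picked = shortlist(pool, k=2)
print([c.name for c in picked])   # ['mol-A', 'mol-D']
```

The most active molecule (mol-B) does not make the cut. Filtering on safety and drug-likeness before ranking is what keeps the shortlist worth synthesizing.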

The middle panel emphasizes the generative step, combining chemical mutations and a variational autoencoder to produce many candidates, then rescoring them with the graph models.

The bottom panel shows what happens after in silico selection. Chosen molecules are synthesized, tested in dose-response assays that read out reduced bacterial growth, and advanced if active. In this work, compounds emerging from this pipeline were active against N. gonorrhoeae and S. aureus, including MRSA. From 24 synthesized molecules, 7 were selective antibacterials, and 2 leads cleared infections in mouse models while acting through membrane-related mechanisms and new targets.
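The funnel numbers reported in this study translate into stage-by-stage survival rates. The 36M figure is a lower bound ("over 36M"), so the first rate is an upper bound. The other counts come straight from the text.

```python
funnel = [
    ("generated and scored in silico", 36_000_000),   # "over 36M", used as a lower bound
    ("synthesized", 24),
    ("selective antibacterials", 7),
    ("cleared infections in mice", 2),
]

def stage_rates(stages):
    """Fraction of each stage that makes it into the next one."""
    return [(stages[i + 1][0], stages[i + 1][1] / stages[i][1]) for i in range(len(stages) - 1)]

for name, rate in stage_rates(funnel):
    print(f"{name}: {rate:.3g}")
synthesis_hit_rate = funnel[2][1] / funnel[1][1]      # 7 of 24 synthesized were active
print(f"synthesis hit rate: {synthesis_hit_rate:.1%}")  # 29.2%
```

Roughly 3 in 10 synthesized molecules were active. That is the practical meaning of "quick compared with the usual slow hunt".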

Why this matters: drug resistance keeps rising and is linked to about 5M deaths each year. The hard part is finding fresh chemical shapes, because bacteria have learned to resist the old ones. This approach opens a much bigger search area and surfaces ideas that do not look like current drugs.

That’s a wrap for today, see you all tomorrow.