🎙️ Google updated Gemini 2.5 Flash Native Audio for live voice agents, and it added live speech to speech translation to the Translate app.

Gemini’s voice upgrade, NVIDIA’s new 30B reasoning model, Karpathy’s LLM pipeline on HN threads, and sharp AI insights from investor Gavin Baker.

Read time: 6 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (15-Dec-2025):

🎙️ Google updated Gemini 2.5 Flash Native Audio for live voice agents, and it added live speech to speech translation to the Translate app.

🏆 NVIDIA just released Nemotron 3 Nano, a new 30B hybrid open-source reasoning model

🛠️ Tutorial - Andrej Karpathy’s new blog - builds a pipeline that grades decade-old Hacker News threads with an LLM

👨🔧 “Some thoughts on AI” - By Gavin Baker (A leading Silicon Valley Investor)

🎙️ Google updated Gemini 2.5 Flash Native Audio for live voice agents, and it added live speech to speech translation to the Translate app.

better tool calling,

more accurate instruction following, and

smoother and more cohesive multi turn conversation quality.

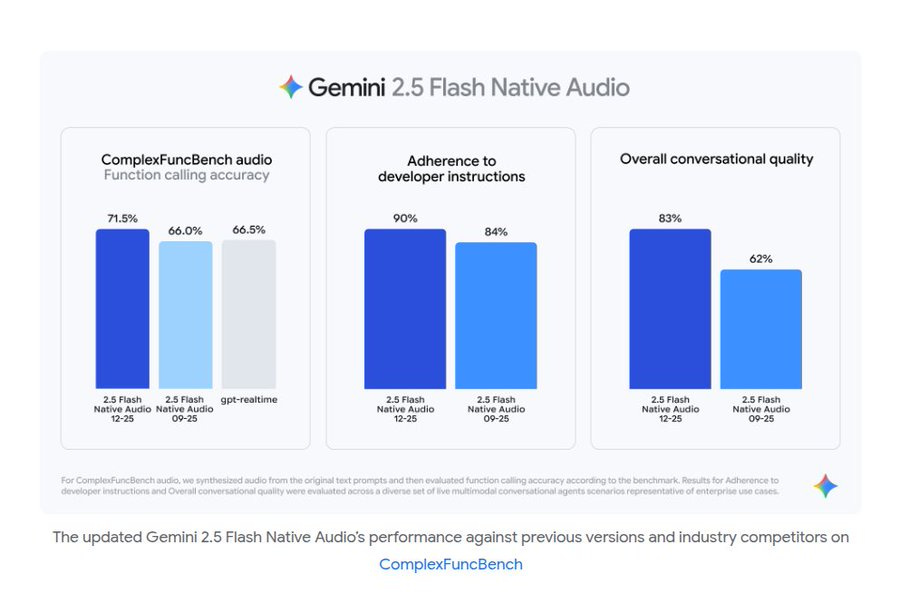

Tool calling is more reliable, it knows when to fetch live info, and it scores 71.5% on ComplexFuncBench Audio. Instruction following improved to 90% adherence, up from 84%, so the agent breaks rules less often.

Multi turn quality improved because it uses earlier context better across longer back and forth chats. Gemini 2.5 Flash Native Audio is available in Google AI Studio and Vertex AI, and it is rolling out into Gemini Live and Search Live.

Live speech translation can keep listening into 1 target language, or run a 2 way chat that switches languages by speaker. Translation keeps tone, pacing, and pitch, and it supports 70+ languages and about 2,000 language pairs with auto detection and noise filtering. The Translate beta is rolling out on Android in the US, Mexico, and India, with iOS and more regions later, and an API release planned for 2026.

🏆 NVIDIA just released Nemotron 3 Nano, a new 30B hybrid open-source reasoning model

The model is meant to make multi-agent AI cheaper, faster, and easier to trust.

Nemotron 3 Nano claims 4x higher throughput than Nemotron 2 Nano and up to 60% fewer reasoning tokens. Get the model on Huggingface.

Multi-agent AI means multiple LLM-based “workers” cooperate on a task, and the hard part is they waste time talking, lose context, and run up inference cost.

Nemotron 3 tries to fix that with a hybrid mixture-of-experts design, where the model routes each token through a small slice of the network instead of firing everything every time.

Nano is 30B parameters but only about 3B active at once, and it also supports a 1M-token context window so longer workflows stay consistent.

NVIDIA also ships the surrounding “open stack”.

- A 3T tokens of datasets, a huge pile of text and task examples used for pretraining, post-training, and reinforcement learning, so people can fine-tune Nemotron for coding, reasoning, and multi-step workflows.

- plus NeMo Gym, which is the set of ready-made training “sandboxes” where an agent can practice tasks and get scored, and NeMo RL is the reinforcement learning toolkit that actually runs that feedback-style training loop at scale.

- And also evaluation tools which are for checking if the model is behaving well, like measuring quality on tasks and running safety checks, so a team can trust what they are shipping.

Nemotron 3 Super and Ultra are expected to be available in the first half of 2026.

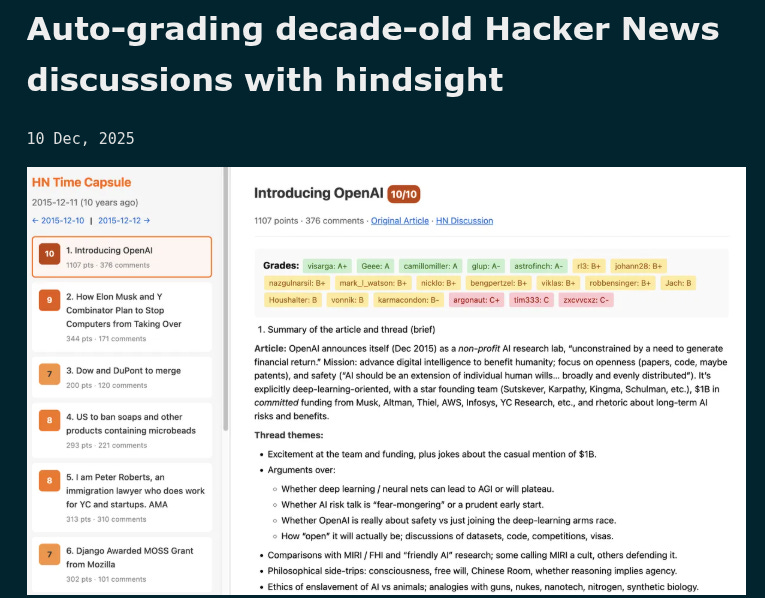

🛠️ Tutorial - Andrej Karpathy’s new blog - builds a pipeline that grades decade-old Hacker News threads with an LLM using hindsight. It compares what people predicted to what actually happened and outputs structured verdicts.

In this blog he shows a working template for using LLMs to audit old predictions at scale, turning messy discussions into clear summaries, winners, mistakes, and scores you can parse and rank.

You can copy the pipeline to grade any archive, learn prompt design, parsing, batch orchestration, and quickly build a static site that surfaces who was right and why.

You fetch the front page by date, scrape each article and full comments with the Algolia API, and wrap everything into a strict 6-part prompt. The model returns summaries, outcomes, “Most prescient”, “Most wrong”, per-user grades, and an interestingness score.

The run covers 31 days with 30 articles per day, so 930 calls. Reported cost is $58 and runtime is about 1 hour.

A parser pulls the “Final grades” block, computes user GPAs, and builds a leaderboard. The site renders as static HTML with a “Hall of Fame” of accurate commenters.

You learn prompt formats that are easy to parse, batch orchestration, cost tracking, and turning raw outputs into a browsable site. The repo includes code to fetch stories, build prompts, call the API, parse grades and scores, and write pages.

👨🔧 “Some thoughts on AI” - By Gavin Baker (A leading Silicon Valley Investor)

$1 trillion in unfunded AI spending commitments is framed as the scary headline, but demand for tokens still looks strong.

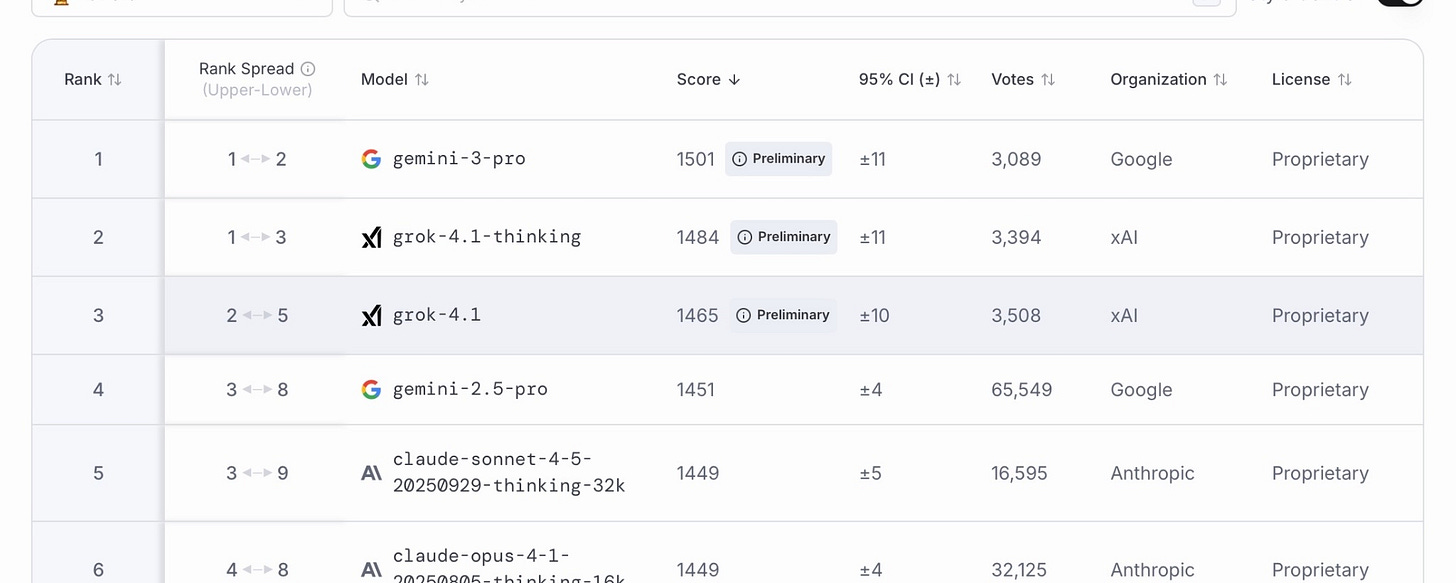

He is basically saying, Gemini 3 is the cleanest recent sign that the old pretraining scaling rules still work when compute really scales. He downplays GPT-5 as a “slowdown signal,” by making the point is that a cheaper routing setup can improve cost without being a true bigger, stronger single model.

“Coherent FLOPs” matters here because training gets better when a huge cluster acts like 1 machine, with fast communication, not just lots of chips.

Blackwell is treated as the next step because it should raise performance per watt, and power is now the hard limit for many data centers.

Once power is the bottleneck, “tokens per watt” becomes the real scoreboard because tokens directly map to product usage and revenue.

Reasoning models change the business loop because real user interactions create fresh training signals, instead of waiting on the next giant pretraining run.

The market structure point is blunt, a small set of labs can afford the best clusters, so catching up gets harder over time.

Hardware life also stretches because older GPUs are still rentable, so assumptions like a 6 year useful life start looking less crazy.

The “ROI on AI” claim is that training spend looks painful short-term, but inference is where the actual payback shows up.

Some companies, especially OpenAI, have promised a massive amount of future spending, around $1T, without clearly secured funding lined up yet. That “unfunded commitments” idea is the scary part because if the money is not actually there, it can force cutbacks, delays, or emergency fundraising, and it makes investors nervous about the whole AI buildout story.

Then he separates that fear from the real usage story by saying, even if one company stumbles, people will still keep using AI systems, which means token consumption, meaning paid inference usage, can keep growing.

That’s a wrap for today, see you all tomorrow.

Really solid coverage of the NVIDIA Nemotron release. The 4x throughput jump with 60% fewer reasoning tokens is wild considering most labs are still throwing more compute at the problem. I've been workign with multi-agent systems lately and the context window expansion to 1M tokens changes the game for long workflows. Curious how the MoE routing compares to traditional architectures when agents actually start passing complex state around.