🛸 Google updated its Veo 3.1 video model with a stronger Ingredients-to-Video workflow

Google upgrades Veo and MedGemma, DeepSeek drops Engram for LLM memory, China’s AGIBOT leads humanoids, and a new finetuning method beats LoRA.

Read time: 8 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (14-Jan-2026):

🛸 Google updated its Veo 3.1 video model with a stronger Ingredients-to-Video workflow

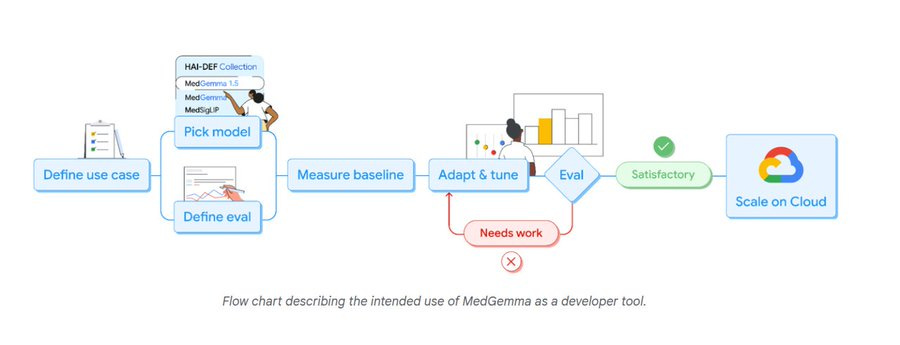

🏆 Google released a major update to their open-source MedGemma model with improved medical imaging support.

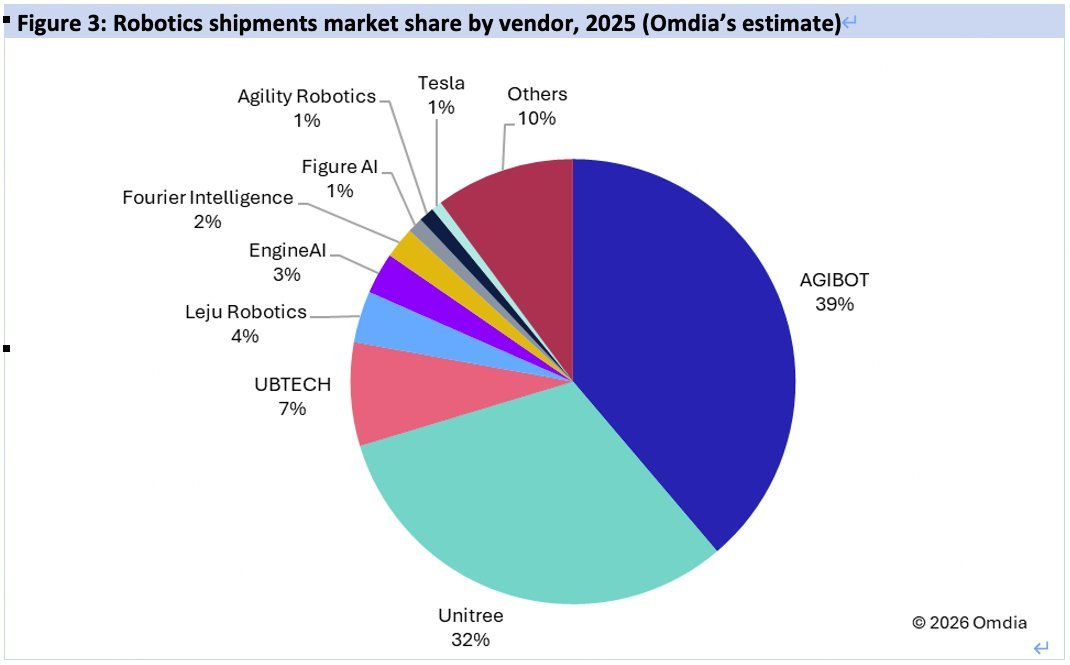

🇨🇳 China’s AGIBOT tops global humanoid robot shipments in 2025 with 39% share, based on Omdia’s numbers.

🛠️ DeepSeek introduces Engram: Memory lookup module for LLMs that will power next-gen models (like V4)

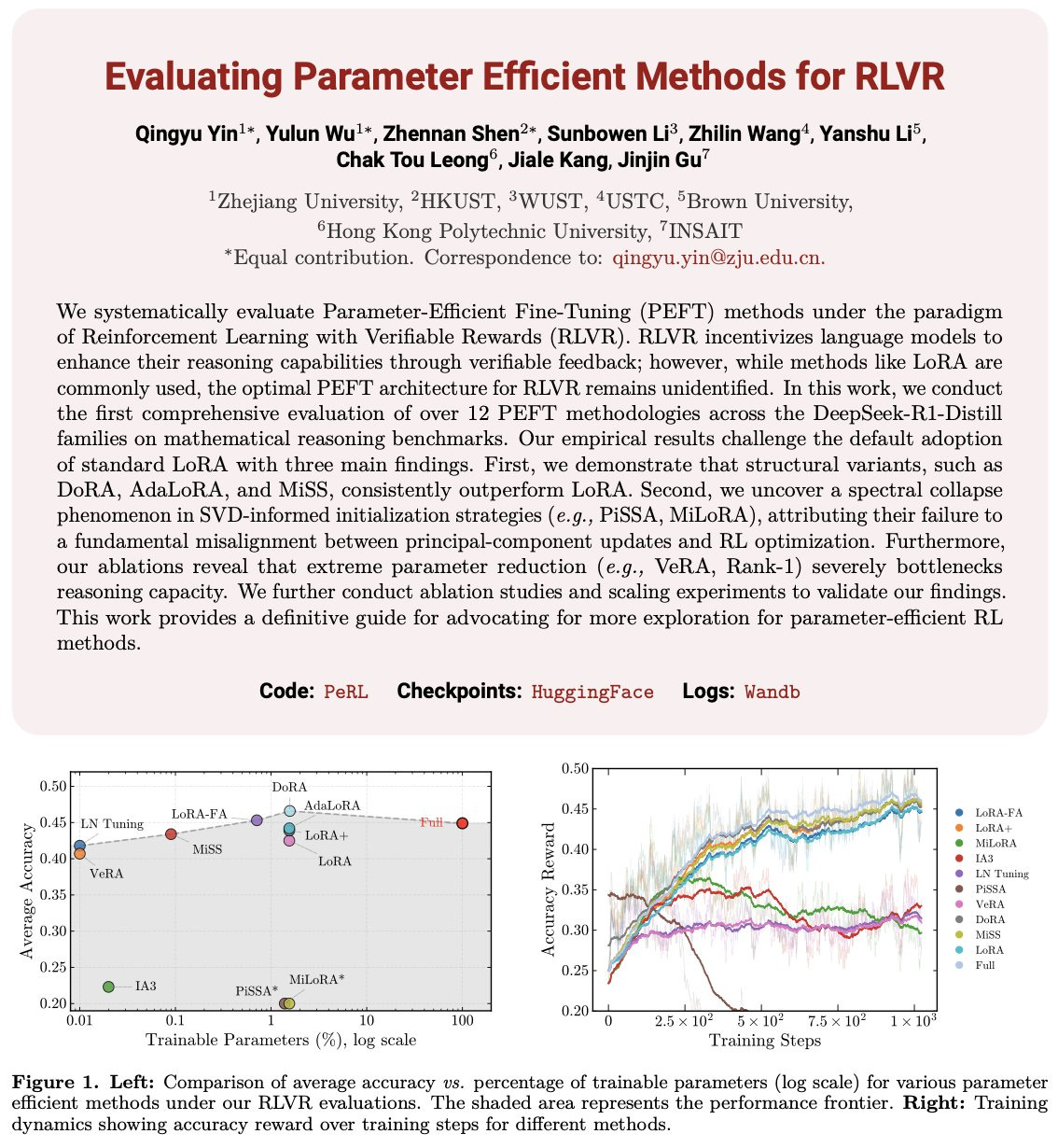

👨‍🔧 Think LoRA is the standard for finetuning? This paper shows a new method that wins by a clear margin.

🛸 Google has updated its Veo 3.1 AI video model with a stronger Ingredients to Video workflow.

Google’s update for Veo 3.1 lets users create vertical videos from reference images.

A key focus of the update is Google’s “Ingredients to Video” feature. Ingredients here just means the input “parts” you give the model up front, usually a subject image, a background image, and a style or look reference.

Veo uses those images as anchors while it follows your text prompt to animate motion, add audio like speech and sound effects, and keep details from drifting. It generates a short clip from ingredient images plus a text prompt, with steadier characters and scenes.

With the update, users no longer need to write lengthy or highly detailed prompts to achieve the desired result. Even short instructions can now produce videos with improved storytelling, dialogue, and cinematic quality.

We also get native 9:16 output, plus 1080p and 4K upscaling, which makes those consistent clips usable for Shorts-style feeds and real edits without cropping. The goal is fewer reruns, because earlier image-to-video often drifted on identity and props across shots.

Veo 3.1 pushes more expressive motion and richer dialogue so clips feel more natural even with simple prompts. Identity consistency is improved, keeping a character’s appearance stable as the setting changes.

Backgrounds, objects, and textures also stay consistent, so the same setting and props can be reused across scenes. Portrait-mode generation outputs 9:16 video directly, which fits Shorts-style feeds without cropping or quality loss.

The features are available across the Gemini app, YouTube, Flow, Google Vids, the Gemini application programming interface (API), and Vertex AI, while upscaling is limited to Flow, the API, and Vertex AI. Videos carry an imperceptible SynthID watermark, and Gemini can check whether a clip came from Google AI.

🏆 Google released a major update to their open-source MedGemma model with improved medical imaging support.

It does this by letting developers pass many CT or MRI slices, or many pathology patches, together with a text prompt so the model can reason over the whole study. The update also adds better support for longitudinal imaging, meaning comparing chest X-rays over time instead of reading a single image.

It adds anatomical localization for chest X-rays, meaning pointing to where anatomy is and measuring overlap with ground truth using intersection over union, a spatial match score. It improves medical document understanding by extracting structured fields from lab reports, and it reports higher macro F1, a balanced precision and recall score averaged across fields.
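To make the two scores above concrete, here is a minimal sketch of how intersection over union and macro F1 are usually computed. The box format `(x_min, y_min, x_max, y_max)`, the function names, and the `(tp, fp, fn)` count layout are assumptions for illustration, not MedGemma's evaluation code.

```python
def iou(box_a, box_b):
    """Intersection over union for two axis-aligned boxes.

    Each box is (x_min, y_min, x_max, y_max); this layout is an assumption.
    """
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Overlap width and height, clamped at zero when the boxes do not touch.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0


def macro_f1(per_field_counts):
    """Unweighted mean of per-field F1 scores.

    per_field_counts maps a field name to (true_pos, false_pos, false_neg).
    """
    scores = []
    for tp, fp, fn in per_field_counts.values():
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

Because macro F1 averages over fields rather than over individual predictions, a rarely seen lab field counts as much as a common one.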

Alongside imaging, Google also says the 4B model improves on core text and medical record tasks compared to the prior 4B release. On chest X-ray dictations, word error rate (WER), the share of words transcribed wrong, is 5.2% for MedASR vs 12.5% for Whisper large-v3.
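For readers who have not computed WER before, a minimal sketch follows. It uses the standard definition (word-level edit distance divided by the number of reference words); the function name and the whitespace tokenization are assumptions, and real evaluations usually normalize casing and punctuation first.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)
```

A WER of 5.2% means roughly 1 word in 19 needs a substitution, insertion, or deletion to match the reference transcript.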

🇨🇳 China’s AGIBOT tops global humanoid robot shipments in 2025 with 39% share, based on Omdia’s numbers.

Omdia, a well-known tech market research firm, has released its General-Purpose Embodied Robot Market Radar report, saying that AGIBOT holds the top global position in humanoid robot shipments.

The report gives a deep look at 2025 market trends, commercialization data, and the overall tech progress in the humanoid robot industry.

AGIBOT achieved annual shipments of more than 5,100 units. A large portion of these early deliveries went to factories and entertainment uses.

At CES 2026, Nvidia CEO Jensen Huang also featured AGIBOT G2 on stage.

We are witnessing the rise of embodied intelligence in 2026.

🛠️ DeepSeek introduces Engram: Memory lookup module for LLMs that will power next-gen models (like V4)

This new paper from DeepSeek just uncovered a new U-shaped scaling law.

It shows that N-grams still matter. Instead of dropping them in favor of neural networks, the authors hybridize the two. This clears up the dimensionality problem and removes a big source of inefficiency in modern LLMs.

The core concept of DeepSeek’s Engram is just so beautiful.

A typical AI model treats “knowing” like a workout. Even for basic facts, it flexes a bunch of compute, like someone who has to do a complicated mental trick just to spell their own name each time.

The reason is that standard models do not have a native knowledge lookup primitive. So they spend expensive conditional computation to imitate memory by recalculating answers over and over.

💡Engram changes the deal by adding conditional memory, basically an instant-access cheat sheet.

It uses N-gram embeddings, meaning digital representations of common phrases, which lets the model do an O(1) lookup. That is just 1-step retrieval, instead of rebuilding the fact through layers of neural logic.
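A rough sketch of what a hashed N-gram lookup can look like is below. This is not DeepSeek's implementation: the class name, the hashing scheme, the table size, and the placeholder embedding values are all assumptions, chosen only to show why retrieval cost does not grow with the size of the table.

```python
import hashlib


class NGramMemory:
    """Toy hashed N-gram embedding table (hypothetical, for illustration)."""

    def __init__(self, num_slots, dim, n=2):
        self.n = n
        self.num_slots = num_slots
        # Placeholder values; in a real model these rows would be learned.
        self.table = [
            [((slot * 31 + k) % 7) / 7.0 for k in range(dim)]
            for slot in range(num_slots)
        ]

    def slot_for(self, ngram):
        # Hash the token sequence to a fixed slot: one hash, one index.
        key = "\x1f".join(ngram).encode("utf-8")
        digest = hashlib.blake2b(key, digest_size=8).digest()
        return int.from_bytes(digest, "big") % self.num_slots

    def lookup(self, tokens):
        """Return one embedding per position that ends a full N-gram."""
        rows = []
        for i in range(self.n - 1, len(tokens)):
            ngram = tuple(tokens[i - self.n + 1 : i + 1])
            rows.append(self.table[self.slot_for(ngram)])
        return rows


memory = NGramMemory(num_slots=1024, dim=4, n=2)
rows = memory.lookup(["New", "York", "City"])  # bigrams: (New, York), (York, City)
```

Each position costs one hash and one array index, whether the table holds a thousand rows or a billion. Different N-grams can collide on the same slot in a scheme like this, which a real design has to manage.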

This is also a re-balance of the whole system. It solves the Sparsity Allocation problem, which is just picking the right split between neurons that do thinking and storage that does remembering.

They found a U-shaped scaling law. When the model is no longer forced to waste energy on easy stuff, static reconstruction drops, meaning it stops doing the repetitive busywork of rebuilding simple facts.

Early layers get breathing room, effective depth goes up, and deeper layers can finally concentrate on hard reasoning. That is why general reasoning, code, and math domains improve so much, because the model is not jammed up with alphabet-level repetition.

For accelerating AI development, infrastructure-aware efficiency is the big win. Deterministic addressing means the system knows where information lives using the text alone, so it can do runtime prefetching.

That makes central processing unit (CPU) random access memory (RAM) usable as the cheap, abundant backing store, instead of consuming limited graphics processing unit (GPU) memory. Local dependencies are handled by lookup, leaving attention free for global context, the big picture.
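The prefetching idea can be sketched in a few lines. Because the slot for each N-gram depends only on the input tokens, the needed rows can be listed before the forward pass and copied out of a large host-side table ahead of time. The function names, the CRC32 hash, and the dict standing in for accelerator memory are assumptions for illustration, not details from the paper.

```python
import zlib


def ngram_slots(token_ids, n, num_slots):
    """Compute the table slot for every N-gram using only the token ids."""
    slots = []
    for i in range(n - 1, len(token_ids)):
        window = token_ids[i - n + 1 : i + 1]
        key = ",".join(str(t) for t in window).encode("ascii")
        slots.append(zlib.crc32(key) % num_slots)
    return slots


def prefetch(host_table, slots):
    """Copy only the rows this input needs out of the large host-side table."""
    return {slot: host_table[slot] for slot in set(slots)}


# A large table kept in CPU RAM (a plain list stands in for it here).
host_table = [[float(row)] * 4 for row in range(1000)]
slots = ngram_slots([5, 9, 5, 9, 5], n=2, num_slots=len(host_table))
device_cache = prefetch(host_table, slots)  # only the rows this sequence touches
```

Attention cannot be prefetched this way, since which keys matter depends on activations computed at runtime. The lookup addresses are known as soon as the text is tokenized.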

👨‍🔧 Think LoRA is the standard for finetuning? This paper shows a new method that wins by a clear margin.

Result: Standard LoRA underperforms. Variants like DoRA, AdaLoRA, and MiSS do better, with DoRA hitting 46.6% average accuracy, even above full fine-tuning at 44.9%.

The paper shows several lightweight fine tuning methods beat Low Rank Adaptation (LoRA) for reinforcement learning with verifiable rewards.

Authors tried multiple “cheap to train” fine tuning tricks, and they found a few of them gave better reasoning accuracy than plain LoRA when doing RLVR training.

Reinforcement learning with verifiable rewards (RLVR) trains a model using a checker that returns only 1 or 0, so learning is slow and costly.
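As an illustration of such a checker, here is a minimal sketch of a binary reward for math answers. The `\boxed{...}` answer format, the exact-string comparison, and the function names are assumptions, not the paper's reward code.

```python
import re


def extract_final_answer(completion):
    """Return the contents of the last \\boxed{...} in the text, or None."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion, ground_truth):
    """Binary reward: 1 if the extracted answer matches exactly, else 0."""
    answer = extract_final_answer(completion)
    if answer is not None and answer == ground_truth.strip():
        return 1
    return 0
```

The reward carries no partial credit: a long, mostly correct derivation that ends in the wrong number scores the same 0 as a blank answer, which is part of why the signal is sparse.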

Parameter efficient fine tuning (PEFT) saves compute by training small extra adapter weights, while keeping the original model weights frozen.
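A quick back-of-the-envelope sketch shows where the savings come from in the LoRA case. For one weight matrix of shape `d_out x d_in`, LoRA trains two thin matrices of rank `r` instead of the full matrix. The layer size and rank below are example values, not figures from the paper.

```python
def full_finetune_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for LoRA: B is (d_out x rank), A is (rank x d_in)."""
    return rank * (d_out + d_in)


full = full_finetune_params(4096, 4096)   # 16,777,216
adapter = lora_params(4096, 4096, 16)     # 131,072
fraction = adapter / full                 # 0.0078125, about 0.78% of the layer
```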

The authors benchmark over 12 PEFT methods on DeepSeek-R1-Distill 1.5B and 7B models, using math reasoning tasks.

They find adapter designs that change the LoRA structure, like DoRA, beat basic LoRA and sometimes training all weights.
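To show what "changing the LoRA structure" means, here is a small pure-Python sketch of the two weight updates, following the DoRA formulation as commonly described: LoRA adds a low-rank delta to the frozen weight, while DoRA also learns a per-column magnitude and rescales the updated direction to it. The helper names are hypothetical, and nothing here is the paper's training code.

```python
import math


def matmul(a, b):
    """Plain nested-list matrix product."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def column_norms(w):
    """L2 norm of each column of w."""
    return [math.sqrt(sum(row[j] ** 2 for row in w)) for j in range(len(w[0]))]


def lora_weight(w0, b, a):
    """LoRA: frozen w0 plus the low-rank update b @ a."""
    delta = matmul(b, a)
    return [[w0[i][j] + delta[i][j] for j in range(len(w0[0]))] for i in range(len(w0))]


def dora_weight(w0, b, a, magnitude):
    """DoRA-style: normalize each column of (w0 + b @ a), then scale it by a
    learned magnitude. Assumes no column has zero norm."""
    v = lora_weight(w0, b, a)
    norms = column_norms(v)
    return [
        [magnitude[j] * v[i][j] / norms[j] for j in range(len(v[0]))]
        for i in range(len(v))
    ]


w0 = [[3.0, 0.0], [4.0, 2.0]]
magnitude = column_norms(w0)  # starting magnitudes taken from the frozen weight
updated = dora_weight(w0, [[1.0], [0.0]], [[1.0, 1.0]], magnitude)
```

With the magnitude initialized to the column norms of the frozen weight and a zero `b`, the DoRA weight starts out equal to `w0`, so training begins from the pretrained model just as with LoRA.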

But methods that initialize adapters from the model’s biggest weight patterns (singular value decomposition, SVD) often crash, because RLVR needs different kinds of updates.

They also find a clear floor: moderate parameter savings still work, but extreme compression leaves too little freedom for reasoning.

For teams training RLVR reasoning models, this gives a practical way to save compute without losing accuracy.

That’s a wrap for today, see you all tomorrow.