🧠 GoogleDeepMind just dropped the paper explaining some of the key factors that made Gemini DeepThink shine at this year’s IMO.

Gemini’s math IQ secrets, AI chip stalls from power crunch, falling LLM token prices, arXiv spam crackdown, and Google’s solar-powered space training plan.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (5-Nov-2025):

🧠 GoogleDeepMind just dropped the paper explaining some of the key factors that made Gemini DeepThink shine at this year’s IMO.

🛑 The arXiv finally had enough of the AI paper spam — it’s changing the rules to keep the bots out.

📡 LLM token prices are collapsing fast, and the collapse is steepest at the top end.

🛠️ Sam Altman just said a compute glut will likely happen. 😯

👨‍🔧 Satya Nadella said that the company doesn’t have enough electricity to power all its AI GPUs. He said they might end up with a pile of chips just sitting around because there’s not enough power to plug them in.

🔥 Google’s Project Suncatcher aims to train AI models in orbit using TPUs powered directly by sunlight, skipping the limits of Earth’s grid power and cooling

🧠 GoogleDeepMind just dropped the paper explaining some of the key factors that made Gemini DeepThink shine at this year’s IMO.

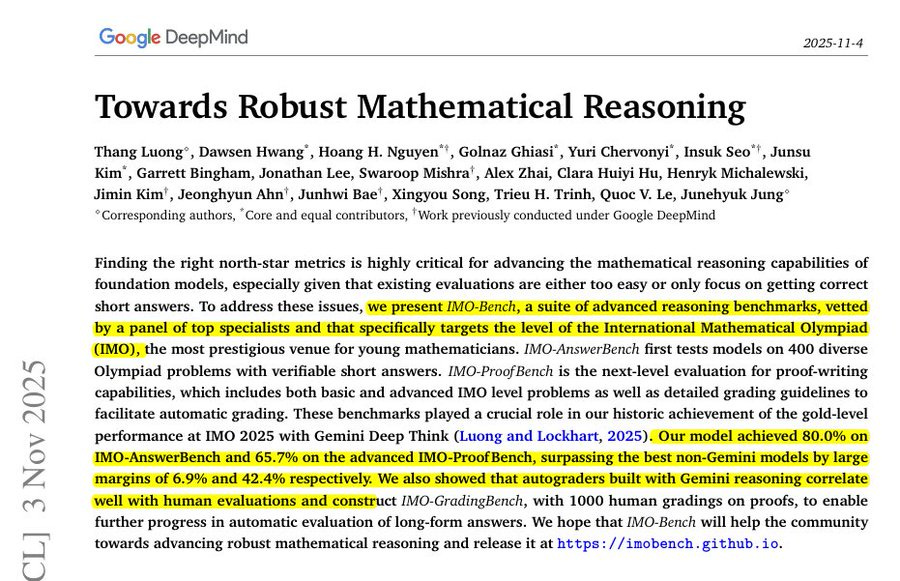

The paper introduces IMO-Bench, a suite of advanced reasoning benchmarks that played a crucial role in GDM’s IMO-gold journey and was vetted by a panel of IMO medalists and mathematicians.

The suite tests math reasoning at International Mathematical Olympiad level, not just final answers. It has 3 parts: IMO-AnswerBench for short answers, IMO-ProofBench for full proofs, and IMO-GradingBench for grading skill.

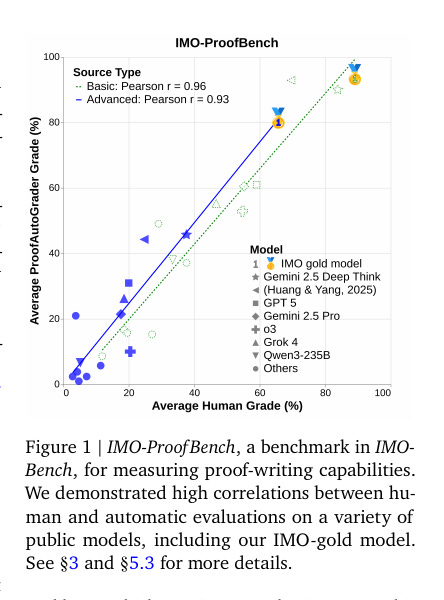

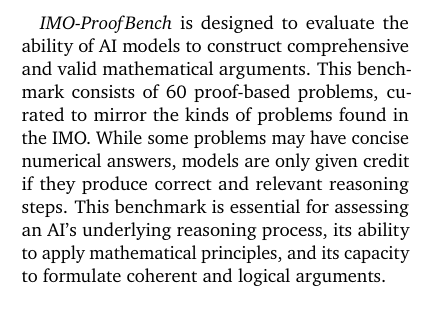

AnswerBench has 400 Olympiad-style questions with unambiguous final answers, plus “robustified” variants that paraphrase and tweak details to block memorization. A dedicated AnswerAutoGrader extracts the final answer from messy model outputs and judges meaning, not formatting. ProofBench has 60 problems split into basic and advanced sets, and it requires full step-by-step arguments scored on the standard 0–7 scale.

This figure shows that the automatic proof grader’s scores match human grades closely on IMO-level proofs.

It reports very strong correlations on both the basic set and the advanced set, which means the grader tracks human judgment well even on harder problems. It also plots different models along the line of perfect agreement, so points near the top right are models that score high with both the human graders and the autograder.
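To make "matches human grades closely" concrete, here is a minimal sketch of how agreement between two graders can be measured with a Pearson correlation. The scores below are made-up 0–7 grades for illustration, not data from the paper, and the paper's exact statistical setup may differ.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical 0-7 grades for six proofs (illustration only).
human_scores = [7, 6, 1, 0, 3, 7]
auto_scores = [7, 5, 1, 1, 3, 6]

print(round(pearson(human_scores, auto_scores), 3))
```

A value close to 1 means the autograder ranks and spaces proofs almost the same way the human graders do.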

⚙️ The Core Concepts

The work argues that many popular math sets are saturated or reward surface tricks. So it builds a suite that checks not only what answer a model gives but how it argues, and it treats proof correctness and grading reliability as first‑class metrics. It sets the bar at IMO level because those problems force multi‑step originality, not template play, which exposes brittle reasoning.

🧪 How short answers are graded

AnswerAutoGrader uses a strong model to extract a single final answer from messy outputs and judge semantic equivalence to the ground truth across numeric, algebraic, and set forms, so formatting quirks do not break grading. Against human judges it reaches 98.9% agreement on the positive class and near‑perfect alignment overall, which makes large‑scale answer evaluation practical without narrowing to only numeric responses. When problems are robustified, accuracy drops consistently across models, showing the benchmark resists recall of seen templates and better measures real skill.
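The idea of judging meaning rather than formatting can be sketched with a toy rule-based checker. This is not how AnswerAutoGrader works (it uses a strong model as the judge); the function names and the `\boxed{...}` convention here are assumptions for illustration, and the check only handles plain numbers and whitespace differences.

```python
import re
from fractions import Fraction
from typing import Optional

def extract_final_answer(output: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in a model output, if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", output)
    return matches[-1].strip() if matches else None

def to_number(text: str) -> Optional[Fraction]:
    """Parse integers, decimals, and simple a/b fractions; None if not numeric."""
    try:
        return Fraction(text.replace(" ", ""))
    except (ValueError, ZeroDivisionError):
        return None

def is_equivalent(predicted: str, truth: str) -> bool:
    """Compare by numeric value when possible, else by whitespace-free text."""
    p, t = to_number(predicted), to_number(truth)
    if p is not None and t is not None:
        return p == t
    return "".join(predicted.split()) == "".join(truth.split())

sample_output = "After simplifying we get \\boxed{ 2/4 }."
answer = extract_final_answer(sample_output)
print(answer, is_equivalent(answer, "0.5"))
```

A rule-based check like this breaks quickly on algebraic or set-valued answers, which is a plausible reason to hand the equivalence judgment to a model instead.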

🛑 arXiv finally had enough of the AI paper spam — it’s changing the rules to keep the bots out.

arXiv states in its own update that authors must now show proof of successful peer review when submitting review or position papers in computer science. This tightens enforcement of existing practice rather than creating a brand-new rule.

Coverage notes that this change arrives after moderators saw hundreds of CS review submissions each month and a rise in AI-assisted survey churn.

A separate write-up adds that the enforcement is meant to free moderators to focus on original research uploads, and that similar moves could appear in other fields if spam grows there.

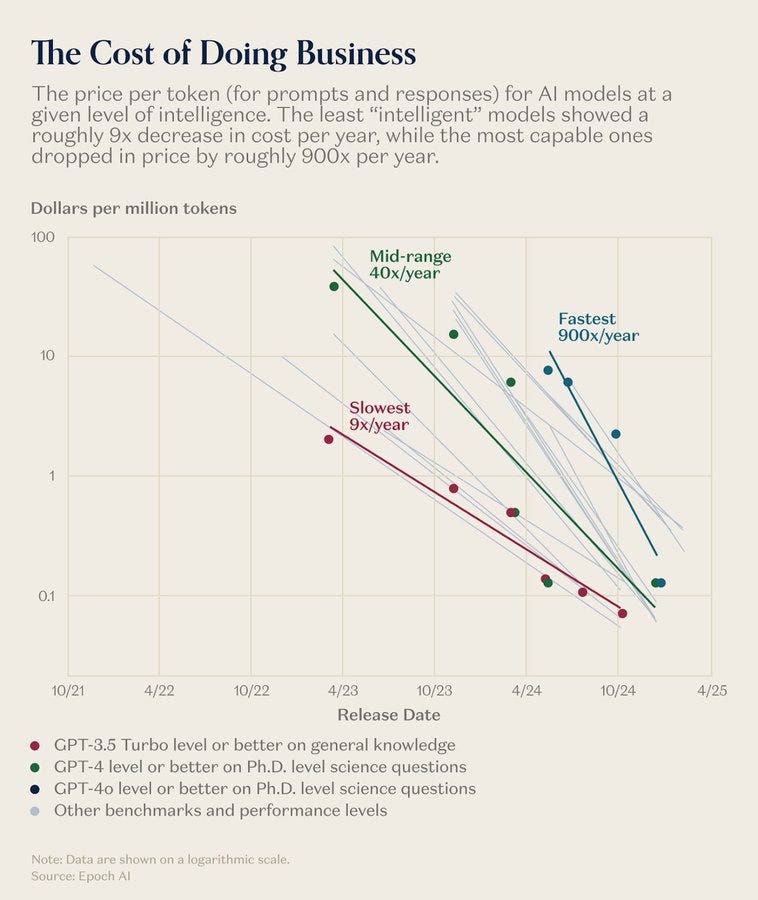

📡 LLM token prices are collapsing fast, and the collapse is steepest at the top end.

The same thing happened with “Moore’s Law, the best contemporary example of Jevons paradox. This extraordinary collapse in computing costs – a billionfold improvement – did not lead to modest, proportional increases in computer use. It triggered an explosion of applications that would have been unthinkable at earlier price points.”

😯 Sam Altman just said a compute glut will likely happen

He talks about the drivers of this. First, if cheap energy arrives at scale, existing long-term capacity and power contracts could look terrible, and some builders will get burned.

If the cost per unit of intelligence keeps dropping very fast, like 40x per year historically, demand will surge, then later you might overshoot and end up with excess capacity. In an extreme case, if models become efficient enough to run a powerful assistant locally on laptops, a lot of centralized buildout could be stranded.
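A quick back-of-the-envelope sketch of what a 40x yearly drop implies. The 40x figure is from the text above; the demand-growth numbers are hypothetical and only there to show when total spend rises (the Jevons case) versus falls (the glut case).

```python
def relative_cost(years, yearly_drop=40.0):
    """Cost per unit of intelligence relative to today, after `years`."""
    return 1.0 / (yearly_drop ** years)

def relative_spend(years, yearly_drop=40.0, yearly_demand_growth=60.0):
    """Total spend relative to today: usage growth divided by price drop.

    `yearly_demand_growth` is a hypothetical multiplier on units consumed.
    """
    return (yearly_demand_growth / yearly_drop) ** years

# After 2 years at 40x per year, a unit costs 1/1600 of today's price.
print(relative_cost(2))

# Demand growing faster than price falls: spend rises (Jevons-style).
print(relative_spend(1, yearly_demand_growth=60.0))

# Demand growing slower than price falls: spend shrinks, capacity can overshoot.
print(relative_spend(1, yearly_demand_growth=20.0))
```

The overshoot scenario is simply the point where the demand multiplier slips below the price-drop multiplier while capacity is still being built for the earlier trend.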

👨‍🔧 Satya Nadella said that the company doesn’t have enough electricity to power all its AI GPUs. He said they might end up with a pile of chips just sitting around because there’s not enough power to plug them in.


OpenAI is pushing the U.S. government to add 100 gigawatts per year of generation as a strategic asset for AI. This power gap creates stranded capital since GPUs depreciate while waiting for buildings, substations, and transmission to be ready.

Even if training stays centralized, inference demand is the big driver for electricity, so any shift of queries to efficient edge devices would change grid and data center sizing assumptions. The main problem is that power delivery and construction run on multi-year permitting and build cycles, while GPU supply ramps in quarters.

🔥 Google’s Project Suncatcher aims to train AI models in orbit using TPUs powered directly by sunlight, skipping the limits of Earth’s grid power and cooling

Orbit gives near-constant power, since panels stay in the sun almost all day with no clouds or night, so energy per square meter is higher. Google cites up to 8x over ground arrays in some orbits.

More steady power means training clusters can run flat-out without chasing grid peaks or buying pricey backup capacity. No land, substation, or water permits are needed, which cuts site time and removes limits that slow large terrestrial builds.

The idea is to run Trillium v6e TPUs inside clusters of small satellites that orbit Earth in constant sunlight. This setup avoids the main problems of data centers, where power use and cooling are the biggest bottlenecks. In orbit, solar panels can generate up to 8 times more energy and run almost all day without night interruptions.

The challenge is that electronics face intense radiation and no air for cooling in space. Google tested its TPU chips under radiation similar to low-Earth orbit. They showed no hard failures up to a 15 krad(Si) total dose, far above what a 5-year mission would see. The high-bandwidth memory was the most sensitive part, and it only began showing irregularities at around 2 krad(Si), nearly 3 times the expected 5-year dose, which is a good sign for long-term reliability.

To move data between satellites, Google built optical laser links that reached 1.6 Tbps bandwidth on the ground. They use dense wavelength-division multiplexing (DWDM), a way to send many signals on different laser colors through one channel. Satellites would fly in close clusters, only 100–200 meters apart, to keep strong signal power.

When satellites are close together, the laser signal is much stronger because signal strength rises fast as distance gets smaller. Stronger signal means each laser can carry many channels at once using DWDM, which is just stacking many colors of light in the same beam.
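A minimal sketch of why the short spacing matters, assuming received power follows a simple inverse-square law with distance (free-space beam spreading, ignoring pointing loss and optics). The 1,000 m comparison distance is a hypothetical reference, not a number from Google.

```python
import math

def relative_received_power(reference_m, actual_m):
    """Received power at `actual_m` relative to `reference_m` (inverse-square)."""
    return (reference_m / actual_m) ** 2

def gain_db(power_ratio):
    """Express a power ratio in decibels."""
    return 10.0 * math.log10(power_ratio)

# Moving from a hypothetical 1,000 m gap to a 100 m gap.
ratio = relative_received_power(1000.0, 100.0)
print(ratio, gain_db(ratio))
```

Under this assumption, every 10x cut in distance buys 100x more received power, and that extra link budget is what lets one beam carry many DWDM channels.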

With short gaps and many channels, a single optical link can hit about 10 Tbps, which is data center level speed. The motion and spacing are modeled with Hill-Clohessy-Wiltshire equations, refined using JAX-based physics models.
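For readers who have not met the Hill-Clohessy-Wiltshire equations, here is a small sketch of their standard closed-form solution in plain Python (not JAX, and not Google's refined model). It describes a satellite's position relative to a reference point on a circular orbit, with x radial, y along-track, and z cross-track. The 5,700 s orbital period and the 100 m offset are hypothetical values chosen for illustration.

```python
import math

def hcw_position(t, n, x0, y0, z0, vx0, vy0, vz0):
    """Relative position at time t under the Hill-Clohessy-Wiltshire model.

    n is the mean motion of the reference orbit in rad/s.
    x is radial, y is along-track, z is cross-track (meters, m/s).
    """
    s, c = math.sin(n * t), math.cos(n * t)
    x = (4 - 3 * c) * x0 + (s / n) * vx0 + (2 / n) * (1 - c) * vy0
    y = (6 * (s - n * t) * x0 + y0
         - (2 / n) * (1 - c) * vx0
         + ((4 * s - 3 * n * t) / n) * vy0)
    z = c * z0 + (s / n) * vz0
    return x, y, z

# Hypothetical low-Earth-orbit period of 5,700 s.
period_s = 5700.0
n = 2 * math.pi / period_s

# Start 100 m radially out; vy0 = -2*n*x0 is the no-drift condition.
x0 = 100.0
state = (x0, 0.0, 0.0, 0.0, -2 * n * x0, 0.0)

for fraction in (0.0, 0.25, 0.5, 1.0):
    print(fraction, hcw_position(fraction * period_s, n, *state))
```

With the no-drift initial velocity, the neighbor traces a closed 2:1 ellipse around the reference point (100 m radially, 200 m along-track) once per orbit, which is the kind of bounded relative motion a tight cluster needs.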

Economically, the paper argues the stack becomes competitive if launch drops to $200/kg, putting space power cost in the same ballpark as US data centers on a per kW per year basis. Two test satellites with Planet will launch in 2027 to try TPU-based compute and laser networking in orbit.

That’s a wrap for today, see you all tomorrow.