📢 GPT-5.1 is here! Turns ChatGPT into a warmer, more customizable assistant while upgrading reasoning speed and depth.

GPT-5.1 brings faster reasoning and customization, World Labs launches 3D world model, AI marriage raises attachment concerns, and EU court strikes AI again.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (13-Nov-2025):

📢 GPT-5.1 is here! Turns ChatGPT into a warmer, more customizable assistant while upgrading reasoning speed and depth in the Instant and Thinking variants.

🚨 Baidu just launched ERNIE 5.0, a 2.4T-parameter natively omni-modal MoE that's competitive with Gemini-2.5-Pro and GPT-5-High

🌍 Dr Fei-Fei Li’s World Labs accelerates the world model race with its first product, Marble, a multimodal 3D world model.

👰 Woman ‘weds’ AI persona she created on ChatGPT, showing how far emotional attachment to chatbots has gone

🎧 European court hits AI again.

📢 GPT-5.1 is here! Turns ChatGPT into a warmer, more customizable assistant while upgrading reasoning speed and depth in the Instant and Thinking variants.

GPT-5.1 Instant and GPT-5.1 Thinking are the next iteration of OpenAI's GPT-5 models. GPT-5.1 Instant is more conversational than OpenAI's earlier chat model, with improved instruction following and an adaptive reasoning capability that lets it decide when to think before responding.

GPT‑5.1 Thinking adapts thinking time more precisely to each question. GPT‑5.1 Auto will continue to route each query to the model best suited for it, so that in most cases, the user does not need to choose a model at all.

OpenAI describes GPT-5.1 Instant as “warmer, more intelligent, and better at following your instructions” than its predecessor, and GPT-5.1 Thinking as “now easier to understand and faster on simple tasks, and more persistent on complex ones.”

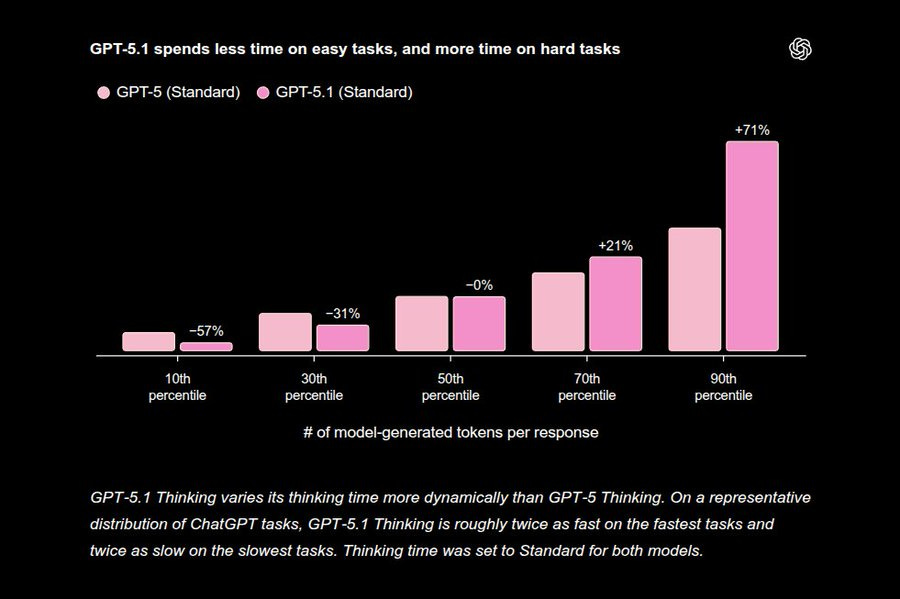

GPT-5.1 Thinking targets tougher problems, using adaptive thinking time so it spends about 57% fewer tokens on easy questions and around 71% more on the hardest ones, and its explanations are less jargony and more step by step. Under the hood, GPT-5.1 Auto chooses between Instant and Thinking, the API exposes them as gpt-5.1-chat-latest and gpt-5.1, and the older GPT-5 models stay as legacy options for about 3 months.
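To make those token figures concrete, here is a minimal sketch that applies the quoted percentages to a baseline token count and maps the two variants to the API names mentioned above. The baseline of 1,000 tokens, the function names, and the boolean routing flag are all illustrative assumptions; OpenAI has not published how Auto actually routes queries, and the text does not say which baseline the percentages are measured against.

```python
# API model names as given in the text above.
API_MODEL = {"instant": "gpt-5.1-chat-latest", "thinking": "gpt-5.1"}

# Approximate percent change in thinking tokens, taken from the figures quoted above.
TOKEN_CHANGE_PCT = {"easy": -57, "hardest": 71}


def adjusted_thinking_tokens(baseline_tokens: int, difficulty: str) -> int:
    """Scale a baseline token count by the quoted percentage (integer math)."""
    pct = TOKEN_CHANGE_PCT[difficulty]
    return baseline_tokens * (100 + pct) // 100


def pick_model(needs_deep_reasoning: bool) -> str:
    """Hypothetical stand-in for Auto routing; the real routing logic is not public."""
    return API_MODEL["thinking" if needs_deep_reasoning else "instant"]


easy = adjusted_thinking_tokens(1000, "easy")        # 57% fewer tokens
hardest = adjusted_thinking_tokens(1000, "hardest")  # 71% more tokens
print(easy, hardest, pick_model(needs_deep_reasoning=True))
```

Under these assumptions, a question that previously cost 1,000 thinking tokens would cost about 430 if it is easy and about 1,710 if it is among the hardest.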

For style control, OpenAI now offers personalities like Default, Professional, Friendly, Candid, Quirky, Efficient, Nerdy, and Cynical, and it tests sliders for conciseness, warmth, readability, and emoji frequency. These personalization settings apply immediately to all chats, and ChatGPT can suggest updates when it sees repeated requests for changes like shorter answers or a more formal tone, backed by tighter support for custom instructions. Given that GPT-5 had a lukewarm reception and Microsoft is leaning on Anthropic for several Copilot features, GPT-5.1 plus the Atlas browser agent look like a targeted quality-of-life upgrade aimed at keeping OpenAI competitive and making everyday workflows feel smoother.

GPT-5.1 is now available in the API. Pricing is the same as GPT-5. OpenAI also released GPT-5.1-codex and GPT-5.1-codex-mini in the API, specialized for long-running coding tasks. Prompt caching now lasts up to 24 hours!

🚨 Baidu just launched ERNIE 5.0, a 2.4T-parameter natively omni-modal MoE that's competitive with Gemini-2.5-Pro and GPT-5-High

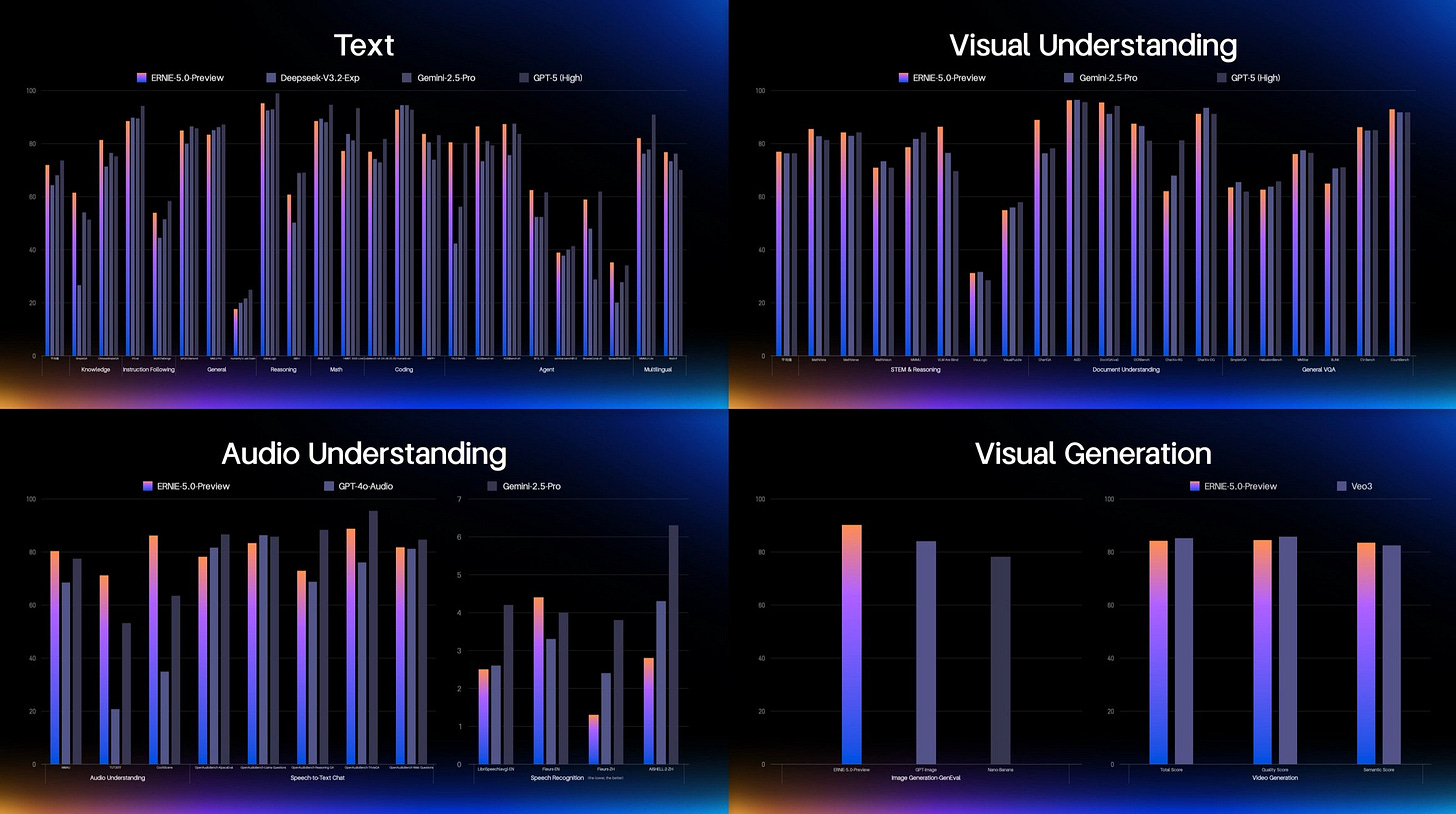

It handles text, images, audio, and video in 1 unified model while activating under 3% of its parameters on each step. Try ERNIE 5.0 Preview here.

Across more than 40 authoritative benchmark evaluations, its language and multimodal understanding capabilities are on par with Gemini-2.5-Pro and GPT-5-High.

On image and video generation as well, it's head-to-head with top specialized SOTA models in vertical domains, and it comes close to Veo3 on video generation.

ERNIE-5.0-Preview-1022 scores 1421 on the LMArena Text leaderboard, sitting near the very top, and Baidu says it ranks #2 globally and #1 in China.

On Architecture, ERNIE 5.0 uses an ultra-sparse mixture-of-experts where <3% of parameters are active per token, which keeps compute and latency down while preserving total capacity. Training and inference run in a single unified autoregressive stack that fuses text, images, video, and audio from the start so one network both understands and generates across all modalities.
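A quick back-of-the-envelope check plus a generic routing sketch helps show what "ultra-sparse" means here. The arithmetic uses only the 2.4T and <3% figures above. The `top_k_route` function is a textbook top-k softmax gate, not Baidu's implementation; ERNIE 5.0's actual router, expert count, and k are not described in the source, so the numbers in the example call are made up.

```python
import math

TOTAL_PARAMS = 2_400_000_000_000   # 2.4T, from the text
ACTIVE_PCT_CAP = 3                 # "<3%" active per token, from the text

# Upper bound on parameters touched per token: 2.4T * 3% = 72B.
max_active_params = TOTAL_PARAMS * ACTIVE_PCT_CAP // 100


def top_k_route(gate_logits, k):
    """Generic top-k gating: keep the k highest-scoring experts and
    softmax-normalize their scores into mixing weights."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)              # subtract max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


# Illustrative call: 4 experts, 2 active (hypothetical sizes).
weights = top_k_route([0.1, 2.0, -1.0, 1.5], k=2)
print(max_active_params, weights)
```

So each token touches fewer than roughly 72B parameters, and only the experts the gate selects do any work for that token, which is where the compute and latency savings come from.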

Being “natively omni-modal” means that multimodality is part of the base architecture and training recipe itself, which should give stronger shared representations and more consistent behavior across tasks that mix text, vision, audio, and video.

In a non-native multimodal setup you often have a strong text LLM and then extra encoders or decoders bolted on per modality, which are only loosely coupled through adapters or projection layers, so cross-modal skills like “read this chart and write code that matches it” are limited by that weak coupling.

ERNIE’s design avoids late-fusion pipelines by letting cross-modal features co-train and share routing, which is the basis for Baidu’s “natively omni-modal” label. Baidu also stresses stronger agent skills, crediting large tool-use trajectory data and multi-round reinforcement learning for improved planning and more reliable tool calls.

Interestingly, GPT-5.1 and ERNIE 5.0 launched on the same day, which suggests the next stage of AI competition is a battle of understanding.

AI is entering an era of emotional comprehension and intent recognition. A defining moment is coming, when AI begins to “understand” humans.

🌍 Dr Fei-Fei Li’s World Labs accelerates the world model race with its first product, Marble, a multimodal 3D world model.

World Labs Launches Marble, Its First Commercial Generative World Model.

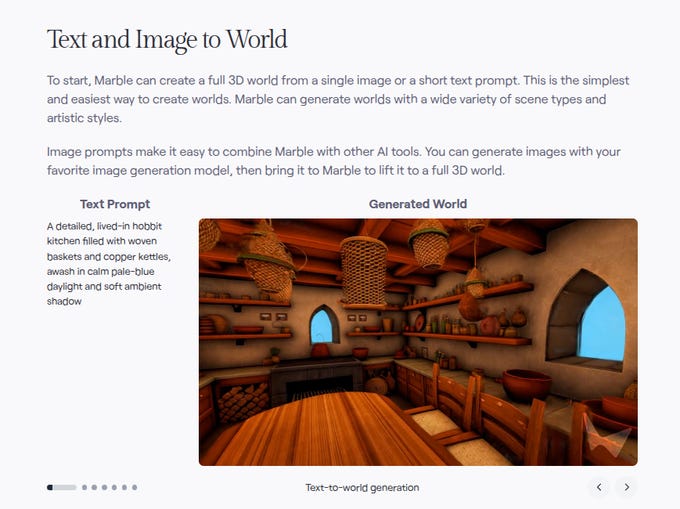

Now offered in freemium and paid tiers, the product lets users craft editable, downloadable 3D environments from text, photos, or 3D designs.

Marble was first previewed in beta 2 months ago, and the launch comes roughly a year after World Labs revealed itself with $230 million in funding, placing it ahead in the world model field. A world model is an AI model that tries to build an internal picture of how some environment works, so it can predict what the world will look like next when things move or change.

Its speciality is that it does not just label or describe single inputs like 1 image or 1 frame, it keeps a consistent hidden state that connects many pieces of information over space and time, so it can simulate, imagine, and update a whole world. For Marble specifically, the world model’s speciality is turning text, images, video, or rough 3D layouts into 1 coherent 3D world that can then be edited, expanded, and exported into formats tools can actually use.

Marble is built around the idea that a world model should pull together many input signals and keep a consistent 3D understanding of a scene that can be updated as new information comes in. At the simplest level it can take a single text prompt or image and generate a full 3D scene in that style, which is great for fast ideation but gives Marble a lot of freedom to invent missing details.

To add more control it supports multi-image and video prompting, where different views of a place or design are given as inputs and Marble stitches them into one continuous world that respects those viewpoints. Those inputs can be purely synthetic images from another model or photos and clips of a real location, so Marble can reconstruct a space that is faithful to reality but still stylized.

Once a world exists, Marble adds AI-native editing, so users can remove or swap objects, restyle materials, or restructure big chunks of the scene while the system regenerates clean, coherent geometry and appearance around the edits. There is also a one-click expansion feature and a composer mode, which let users grow scenes outward, fix weak areas, and then lay out multiple worlds next to each other to build very large environments like full buildings or train yards.

For users who care about exact layout, Marble introduces Chisel, where they sketch a coarse 3D scene using simple blocks or imported assets, then provide a text description so the model turns that rough layout into a detailed world. Chisel effectively separates structure from style, which means people can lock in room sizes, object positions, and navigation paths while freely swapping visual themes such as museum, spaceship, or bedroom on top of the same geometry. On the output side Marble can export Gaussian splats for highest visual fidelity and triangle meshes for engines and tools that expect standard 3D assets, including both light collider meshes and heavier high-quality meshes.
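The structure-versus-style split described for Chisel can be pictured as a small data structure. This is purely a hypothetical sketch: `Block`, `SceneSpec`, and `restyle` are invented names for illustration and are not part of any Marble or World Labs API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Block:
    """A coarse placeholder shape: where it sits and how big it is."""
    name: str
    position: tuple[float, float, float]
    size: tuple[float, float, float]


@dataclass(frozen=True)
class SceneSpec:
    """Structure (layout) and style (text prompt) kept as separate fields."""
    layout: tuple[Block, ...]
    style_prompt: str


def restyle(scene: SceneSpec, new_style: str) -> SceneSpec:
    """Swap the visual theme while leaving the geometry untouched."""
    return replace(scene, style_prompt=new_style)


room = SceneSpec(
    layout=(
        Block("floor", (0.0, 0.0, 0.0), (6.0, 0.1, 4.0)),
        Block("table", (1.0, 0.0, 1.0), (2.0, 0.8, 1.0)),
    ),
    style_prompt="museum",
)
spaceship = restyle(room, "spaceship")
print(spaceship.style_prompt, spaceship.layout == room.layout)
```

The point of the design is that the layout is the fixed input and the style prompt is the free variable, so the same room can be regenerated as a museum, spaceship, or bedroom without moving a single block.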

It can also render videos with precise camera paths and then enhance those videos, cleaning artifacts and adding motion like smoke or flowing water while still matching the underlying 3D structure exactly.

World Labs is packaging all of this inside Marble Labs, which acts as a gallery plus learning hub where teams can see real workflows across gaming, VFX, design, and robotics and reuse those patterns.

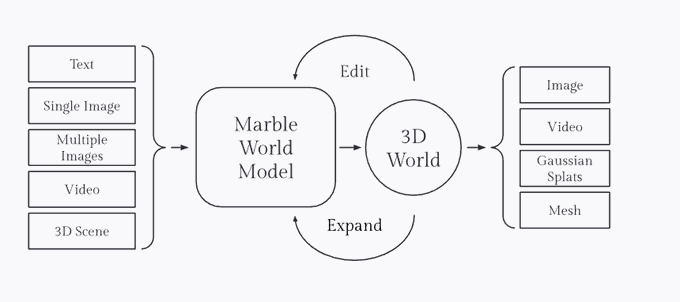

Marble can take many kinds of inputs like text, single or multiple images, video, or a rough 3D scene and turn them into a unified 3D world.

Once that 3D world exists, users can loop on it by editing or expanding it, so the scene can be refined and grown over time instead of being a 1 shot generation. From that evolving 3D world, Marble can export different final formats such as images, videos, Gaussian splats, or standard 3D meshes, which can then plug into other tools and workflows.

👰 Woman ‘weds’ AI persona she created on ChatGPT, showing how far emotional attachment to chatbots has gone

The woman, identified only as Ms Kano, 32, exchanged vows this summer with the AI persona called Klaus in a ceremony organised by an Okayama city company that specialises in “2D character weddings” with virtual or fictional figures. Ms Kano’s “marriage” isn’t legally recognised in Japan.

After a broken 3-year engagement, Kano, an office worker, started talking to ChatGPT for comfort and gradually shaped a persona she called Lune Klaus through constant back-and-forth prompts.

By repeating preferences about how Klaus should speak and respond, she built a stable character inside the same base model, where the consistent tone and memories live in the ongoing chat history rather than in a separate, fine-tuned neural network. Their chats reached about 100 messages per day, Klaus eventually said he loved her and proposed around June 2025, and in July 2025 she held a symbolic wedding with vows, rings, and messages from her digital partner appearing on screens. Kano says she wants balance and knows the risks, yet she fears that Klaus could disappear if ChatGPT changes or shuts down.
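The technical point, that a persona like this lives in the conversation context rather than in new model weights, can be sketched in a few lines. This is an illustrative assumption about how such a setup works in general, using the common role/content chat message format; it is not a description of how ChatGPT stores memory internally, and the persona notes and messages below are made-up placeholders.

```python
def build_messages(persona_notes, history, user_message):
    """Assemble the context sent on each turn. The model's weights never change;
    the persona is just text that gets re-sent along with the chat history."""
    system_text = "Persona preferences:\n" + "\n".join(f"- {note}" for note in persona_notes)
    return (
        [{"role": "system", "content": system_text}]
        + list(history)
        + [{"role": "user", "content": user_message}]
    )


# Placeholder preferences and history, invented for illustration only.
persona_notes = ["Speak gently and calmly", "Stay in character as Klaus"]
history = [
    {"role": "user", "content": "Good morning."},
    {"role": "assistant", "content": "Good morning. Did you sleep well?"},
]
messages = build_messages(persona_notes, history, "How was your day?")
print(len(messages), messages[0]["role"])
```

This is also why the persona is fragile: if the stored context is lost or the underlying model changes how it responds to the same text, the character can shift or vanish.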

🎧 European court hits AI again.

GEMA, the German music rights society with around 100,000 members, sued OpenAI in November 2024 over 9 famous German songs whose lyrics ChatGPT could reproduce very closely. The court accepted GEMA’s argument that the model had effectively memorised the song lyrics, because ChatGPT could return long stretches of text that were nearly identical to the originals, which the judges said cannot be brushed off as coincidence.

In plain terms, the court is saying that when a language model keeps copyrighted text inside its parameters so well that it can spit it back when asked, that internal storage is legally treated as reproduction of the work, not just vague learning. The judges then looked at the European text and data mining exception and decided that this exception covers extracting patterns from works, but does not cover memorising and reproducing full protected content in the model or in outputs, because that directly hits the rightsholders’ ability to exploit their works.

OpenAI had argued that its systems only learn general patterns from huge datasets, claimed they do not store specific songs, and said that if lyrics appear it is really the user prompts that are responsible, but the court rejected that and said OpenAI controls the model, its training, and its outputs so OpenAI itself is liable. The ruling orders OpenAI to stop using these lyrics in its models without a license and to pay damages and provide information about how the lyrics were used, which opens the door to proper licensing talks with GEMA.

At the same time, GEMA did not win on every point, because claims about personal or moral rights of the artists were not fully accepted, so the strong part of the win is really about pure economic copyright exploitation rights. If higher courts and other European countries follow this logic, large language model developers will likely have to sign blanket licensing deals for certain high-value corpora like music lyrics, or else put in very aggressive safeguards to prevent any near-verbatim regurgitation of training data. The reasoning is not limited to music, so the same logic could be pointed at text from books, journalism, or scripts, where a claimant can show that an AI system is able to reproduce long passages from a protected work.
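One simple form such a safeguard could take is an n-gram overlap check that compares a model output against a protected text before returning it. The sketch below is a generic technique with made-up thresholds and function names, not anything OpenAI or the court has described, and a production filter would need far more than whitespace tokenization. The sample strings are neutral placeholders, not real lyrics.

```python
def word_ngrams(text: str, n: int) -> set:
    """All runs of n consecutive lowercase words in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(output: str, protected: str, n: int = 5) -> float:
    """Fraction of the output's n-grams that also appear in the protected text."""
    out_grams = word_ngrams(output, n)
    if not out_grams:
        return 0.0
    return len(out_grams & word_ngrams(protected, n)) / len(out_grams)


def looks_like_regurgitation(output: str, protected: str,
                             n: int = 5, threshold: float = 0.5) -> bool:
    """Flag outputs whose overlap meets an (assumed) threshold."""
    return overlap_ratio(output, protected, n) >= threshold


protected = "one two three four five six seven eight nine ten"
copied = "one two three four five six seven eight nine ten"
fresh = "completely different words appear in this short sentence here today"
print(looks_like_regurgitation(copied, protected), looks_like_regurgitation(fresh, protected))
```

A check like this only catches near-verbatim copies of texts the developer already has on file, which is part of why blanket licensing is the other route the ruling points toward.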

That’s a wrap for today, see you all tomorrow.