GraphInstruct: Empowering Large Language Models with Graph Understanding and Reasoning Capability

GraphInstruct: Comprehensive benchmark with 21 classical graph reasoning tasks

Results 📊:

• Strong performance maintained on unseen graph sizes and description formats

• Lower performance on out-of-domain tasks highlights areas for future work

Original Problem 🔍:

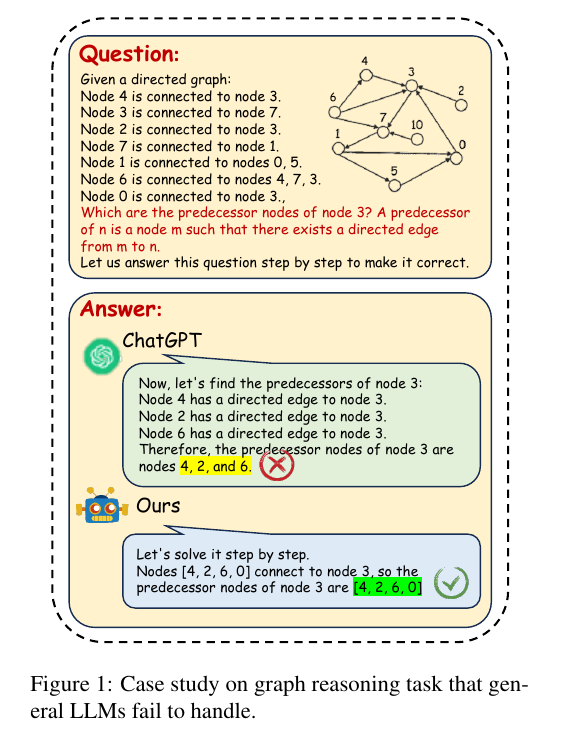

LLMs lack graph understanding capabilities, which are crucial for advancing general intelligence. Existing benchmarks for graph reasoning are limited in scope and diversity.

Solution in this Paper 🛠️:

• Diverse graph generation: Various structures, sizes, and description formats

• GraphLM: Fine-tuned Vicuna-7b using LoRA on GraphInstruct

• GraphLM+: Enhanced with step mask training strategy for improved reasoning
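To make the "diverse graph generation" bullet concrete, here is a minimal sketch of sampling one graph and rendering it in two description formats. The random-graph model, the wording of each format, and all function names are assumptions for illustration; the summary above only says that structures, sizes, and description formats vary.

```python
import random

def random_graph(num_nodes, edge_prob, seed=0):
    """Sample an undirected G(n, p)-style random graph as a sorted edge list."""
    rng = random.Random(seed)
    return [(u, v)
            for u in range(num_nodes)
            for v in range(u + 1, num_nodes)
            if rng.random() < edge_prob]

def describe_edge_list(num_nodes, edges):
    """Hypothetical format 1: describe the graph as a flat list of edges."""
    pairs = ", ".join(f"({u}, {v})" for u, v in edges)
    return f"The graph has {num_nodes} nodes (0 to {num_nodes - 1}). Edges: {pairs}."

def describe_adjacency(num_nodes, edges):
    """Hypothetical format 2: describe the same graph node by node."""
    neighbors = {n: [] for n in range(num_nodes)}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    lines = [f"Node {n} is connected to: {sorted(neighbors[n])}."
             for n in range(num_nodes)]
    return "\n".join(lines)
```

Holding one format out of training and using it only at test time is one way to probe the "unseen description formats" generalization mentioned in the results.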

Key Insights from this Paper 💡:

• Intermediate reasoning steps crucial for enhancing graph reasoning capability

• Label mask training filters redundant information while preserving graph structure

• LLMs can generalize to unseen graph sizes and description formats

• Out-of-domain task performance indicates room for improvement

💡 To enhance reasoning abilities, GraphLM+ was created using a step mask training strategy.

This involved incorporating intermediate reasoning steps as supervised signals during training, with a label mask to filter out redundant information while preserving essential graph structure data.
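A label mask of this kind is commonly implemented by overwriting the training labels of tokens that should not contribute to the loss. The sketch below shows that general mechanism only. The segment tags (`prompt`, `step_key`, `step_redundant`, `answer`) and the rule for which tokens count as redundant are hypothetical, since this summary does not specify how GraphLM+ chooses them.

```python
# -100 is the default ignore_index of PyTorch's CrossEntropyLoss,
# so positions carrying this label add nothing to the loss.
IGNORE_INDEX = -100

def build_labels(token_ids, segment_tags, supervised=("step_key", "answer")):
    """Copy token ids into labels, masking tokens whose tag is not supervised.

    segment_tags is a hypothetical per-token annotation; the tag names
    are assumptions made for this example.
    """
    if len(token_ids) != len(segment_tags):
        raise ValueError("token_ids and segment_tags must have the same length")
    return [tok if tag in supervised else IGNORE_INDEX
            for tok, tag in zip(token_ids, segment_tags)]

# Usage: only the key reasoning tokens and the final answer stay supervised.
tokens = [11, 12, 21, 22, 23, 31]
tags = ["prompt", "prompt", "step_key", "step_redundant", "step_key", "answer"]
labels = build_labels(tokens, tags)
```

The resulting `labels` list can be passed alongside the input ids to a causal language model loss that honours the ignore index.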

📊 GraphInstruct includes 21 classical graph reasoning tasks across three levels:

• Node level: Degree, PageRank, Predecessor, Neighbor, Clustering Coefficient

• Node-pair level: Common Neighbor, Jaccard, Edge, Shortest Path, Connectivity, Maximum Flow

• Graph level: DFS, BFS, Cycle, Connected Component, Diameter, Bipartite, Topological Sort, MST, Euler Path, Hamiltonian Path
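To illustrate the three levels, the sketch below computes a reference answer for one task from each: Degree (node level), Jaccard (node-pair level), and BFS (graph level). It uses standard textbook definitions on an undirected graph; the helper names and the tie-breaking rule for BFS (visit neighbors in ascending order) are assumptions and may differ from how the benchmark defines its answers.

```python
from collections import deque

def adjacency(num_nodes, edges):
    """Build neighbor sets for an undirected graph."""
    adj = {n: set() for n in range(num_nodes)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj

def degree(adj, node):
    """Node level: number of neighbors of a node."""
    return len(adj[node])

def jaccard(adj, u, v):
    """Node-pair level: shared neighbors divided by all neighbors of u or v."""
    union = adj[u] | adj[v]
    return len(adj[u] & adj[v]) / len(union) if union else 0.0

def bfs_order(adj, start):
    """Graph level: breadth-first visiting order, smallest neighbor first."""
    order, seen, queue = [], {start}, deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for nxt in sorted(adj[node]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return order

adj = adjacency(4, [(0, 1), (0, 2), (1, 2), (2, 3)])
print(degree(adj, 2))     # 3
print(bfs_order(adj, 0))  # [0, 1, 2, 3]
```

Deterministic solvers like these are one way to produce ground-truth answers and intermediate steps for automatically generated graphs.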

🤖 GraphLM was created by fine-tuning Vicuna-7b on GraphInstruct using LoRA.
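For reference, LoRA in its standard formulation (not specific to this paper) freezes the pretrained weight matrix $W_0$ and trains only a low-rank update on top of it:

$$
h = W_0 x + \frac{\alpha}{r} B A x, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)
$$

Only $A$ and $B$ receive gradients, which keeps the number of trainable parameters small relative to the full model. The rank $r$, the scaling factor $\alpha$, and the choice of adapted Vicuna-7b layers are not stated in this summary.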

Paper - "GraphInstruct: Empowering Large Language Models with Graph Understanding and Reasoning Capability"

🔬 Introduces GraphInstruct: benchmark for evaluating LLMs' graph understanding abilities

• 21 classical graph reasoning tasks

• Diverse graph generation pipelines

• Detailed reasoning steps

🧠 Develops GraphLM: LLM with enhanced graph comprehension

• Created through efficient instruction-tuning on GraphInstruct

🚀 Proposes GraphLM+: further improved graph reasoning

• Uses step mask training strategy

🔍 Key contributions:

• Positioned as the first major effort to boost LLMs' graph understanding

• GraphLM and GraphLM+ outperform the other LLMs evaluated in the paper's experiments