"Great Models Think Alike and this Undermines AI Oversight"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.04313

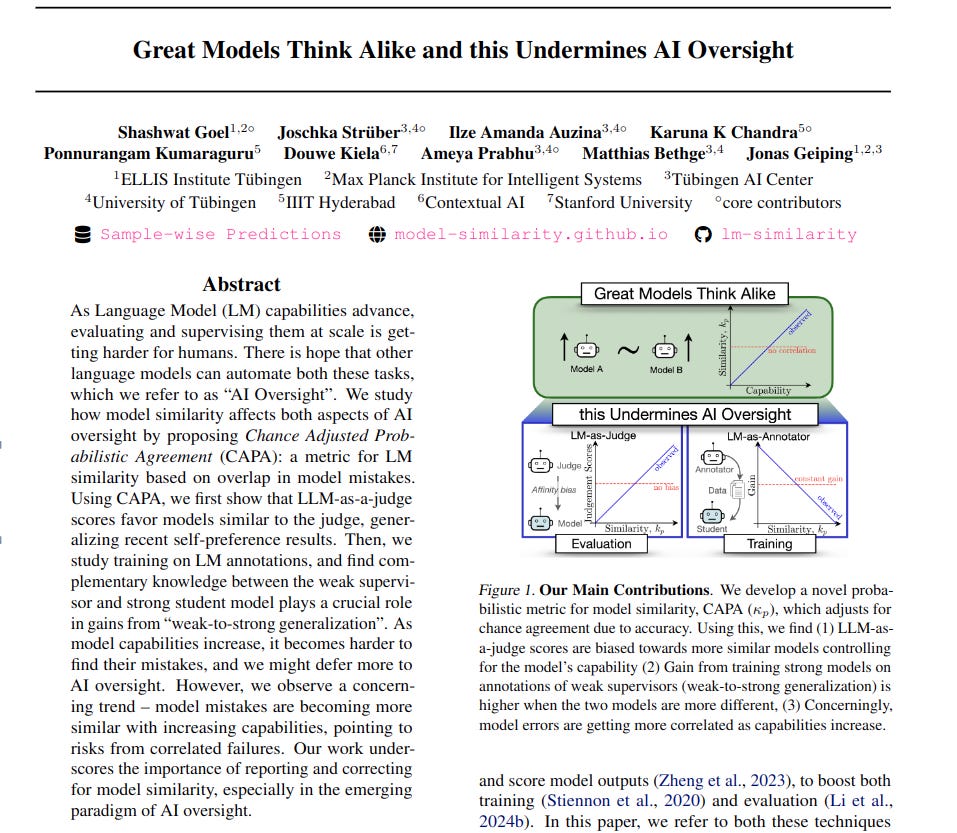

This paper addresses the challenge of evaluating and supervising increasingly capable LLMs, termed "AI Oversight". The paper argues that relying on similar LLMs for oversight can be problematic due to shared biases.

This paper proposes a new metric, Chance Adjusted Probabilistic Agreement (CAPA), to quantify the functional similarity between LLMs based on their prediction overlaps, while adjusting for chance agreements. This metric is then used to analyze bias in LLM judges and the effectiveness of weak-to-strong generalization in training.

-----

📌 CAPA offers a practical method to quantify and mitigate affinity bias in LLM evaluations. By using CAPA, we can select diverse judge models, leading to fairer and more robust benchmark results.

📌 The Chance Adjusted Probabilistic Agreement metric enables strategic weak-to-strong training. It guides the selection of weak supervisor models that are functionally different from the strong student, maximizing knowledge transfer.

📌 This paper highlights a critical challenge: increasing LLM capability might reduce model diversity. CAPA serves as an essential tool to monitor and address error correlation in evolving AI oversight systems.

----------

Methods Explored in this Paper 🔧:

→ This paper introduces CAPA, a novel metric for measuring LLM similarity. CAPA refines existing error consistency metrics by considering differences in prediction probabilities, not just correctness.

→ CAPA computes 'observed agreement' as the probability of agreement if predictions were sampled from model outputs. It calculates 'chance agreement' by considering the expected agreement of independent models given their accuracies and the number of options in multiple-choice questions.

→ CAPA is formulated as (observed agreement - chance agreement) / (1 - chance agreement). This normalized metric indicates similarity beyond chance, with 0 meaning agreement at chance levels, positive values indicating more similarity, and negative values indicating disagreement.

-----

Key Insights 💡:

→ LLM judges exhibit affinity bias, favoring models functionally similar to themselves when assigning scores, even after accounting for model capability.

→ Weak-to-strong generalization, training a strong student model on annotations from a weaker supervisor, yields greater performance gains when the supervisor and student models are less similar. This suggests complementary knowledge between dissimilar models is crucial for learning.

→ As LLM capabilities increase, model errors become more correlated as measured by CAPA. This trend raises concerns about potential correlated failures and reduced effectiveness of AI oversight using similar models.

-----

Results 📊:

→ LLM judges show a significant positive correlation (average Pearson r=0.84) between judgment scores and model similarity (CAPA).

→ Partial correlation analysis confirms a significant effect of similarity on judge scores (p < 0.05), even after controlling for model accuracy.

→ Weak-to-strong training gains are inversely correlated with CAPA similarity (r = −0.85), indicating higher gains with less similar models.