🥉Huawei releases open source LLM Pangu Pro with a new innovative architecture

Huawei open-sources Pangu Pro LLM; Claude Code hooks simplify agent alerts; Musk raises $10B for xAI infra; Perplexity launches $200 Max tier with unlimited Labs and Comet browser beta

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (2-July-2025):

🥉Huawei releases open source LLM Pangu Pro with a new innovative architecture

🏗️ Anthropic’s Claude Code introduces hooks that make it much easier to build things like agent notifications

📡 Elon Musk raises $10B of fresh capital for powering the infrastructure of xAI

🛠️ Perplexity launches $200-a-month Max: unlimited Labs, early Comet browser, priority o3-pro and Opus 4

🥉 Huawei releases an open weight model Pangu Pro 72B A16B, trained entirely on Huawei Ascend NPUs

China’s Open-Source AI Push Expands After DeepSeek and Baidu. 🔥 AND THIS TIME WITH ITS OWN HARDWARE, Huawei’s Ascend

Huawei has released an open weight model, Pangu Pro 72B A16B, with a new Mixture of Grouped Experts (MoGE) architecture engineered specifically for high efficiency on Huawei’s Ascend hardware.

⚙️ What are Huawei’s Ascend NPUs

Huawei names its AI chips Ascend and calls the cores inside them DaVinci Neural Processing Units, or NPUs. Each NPU is an ASIC built only for matrix multiply, vector and convolution workloads, so the silicon saves area that a GPU spends on graphics or shading logic.

The Ascend 910, released in 2019, set the baseline: 256 TFLOPS at FP16 and 512 TOPS at INT8 while drawing about 310 W.

🏗️ Hardware shape compared to NVIDIA

• Compute per chip: Ascend 910 delivers 256 TFLOPS FP16, while an NVIDIA A100 reaches 312 TFLOPS FP16 and an H100 SXM touches 1,979 TFLOPS FP16 via Tensor Cores with sparsity (roughly half that for dense math).

• Memory: H100 carries 80 GB to 94 GB of HBM3 at up to 3.9 TB/s. Ascend 910 uses on‑package HBM2E with lower bandwidth, though Huawei does not publish the exact figure.

• Power: Ascend 910 tops out near 310 W, while an H100 SXM draws up to 700 W.

• Scale‑out fabric: NVIDIA uses NVLink and NVSwitch, Huawei uses PCIe 4 plus custom 200 Gb/s RoCE and the HCCS interconnect to tie 8 or 16 NPUs.

Because Ascend lacks graphics and display blocks, almost all die area feeds tensor engines, so the chip can reach high throughput per watt but still trails H100 peak math.
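To put the throughput-per-watt point in numbers, here is a minimal sketch that divides the peak FP16 figures quoted above by the quoted power draw. It uses only the article's numbers; the H100 figure is the quoted peak, which NVIDIA lists with sparsity, so the dense ratio would be about half. Peak ratios like these say nothing about real utilization.

```python
# Peak FP16 throughput and power as quoted in the comparison above.
# These are headline datasheet-style numbers, not measured results.
chips = {
    "Ascend 910": {"tflops_fp16": 256, "watts": 310},
    "H100 SXM (quoted peak)": {"tflops_fp16": 1979, "watts": 700},
}


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Peak throughput divided by power draw."""
    return tflops / watts


efficiency = {
    name: tflops_per_watt(spec["tflops_fp16"], spec["watts"])
    for name, spec in chips.items()
}

for name, value in efficiency.items():
    print(f"{name}: {value:.2f} TFLOPS/W")
```

On these headline numbers the H100 leads on both raw math and math per watt, so any Ascend efficiency advantage has to come from workload fit and utilization rather than peak specs.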

🔧 Software stack differences

NVIDIA’s CUDA is a mature C++ runtime with cuDNN, NCCL and optimiser libraries that every major ML framework calls.

Ascend ships the CANN driver layer plus the MindSpore deep‑learning framework. CANN maps kernels to DaVinci instructions, MindSpore plays the role PyTorch plays on CUDA.

Most open‑source LLM repos publish CUDA first, so Huawei maintains graph converters and custom kernels to bridge PyTorch or TensorFlow models onto Ascend.

📌 The weights for Pangu Pro 72B are on Hugging Face. It is competitive with Qwen3 32B, and it was trained entirely on Huawei Ascend NPUs.

📌 With the MoGE architecture, the experts are divided into groups during expert selection, and each token is constrained to activate the same number of experts inside every group. This strategy gives natural load balance across devices.

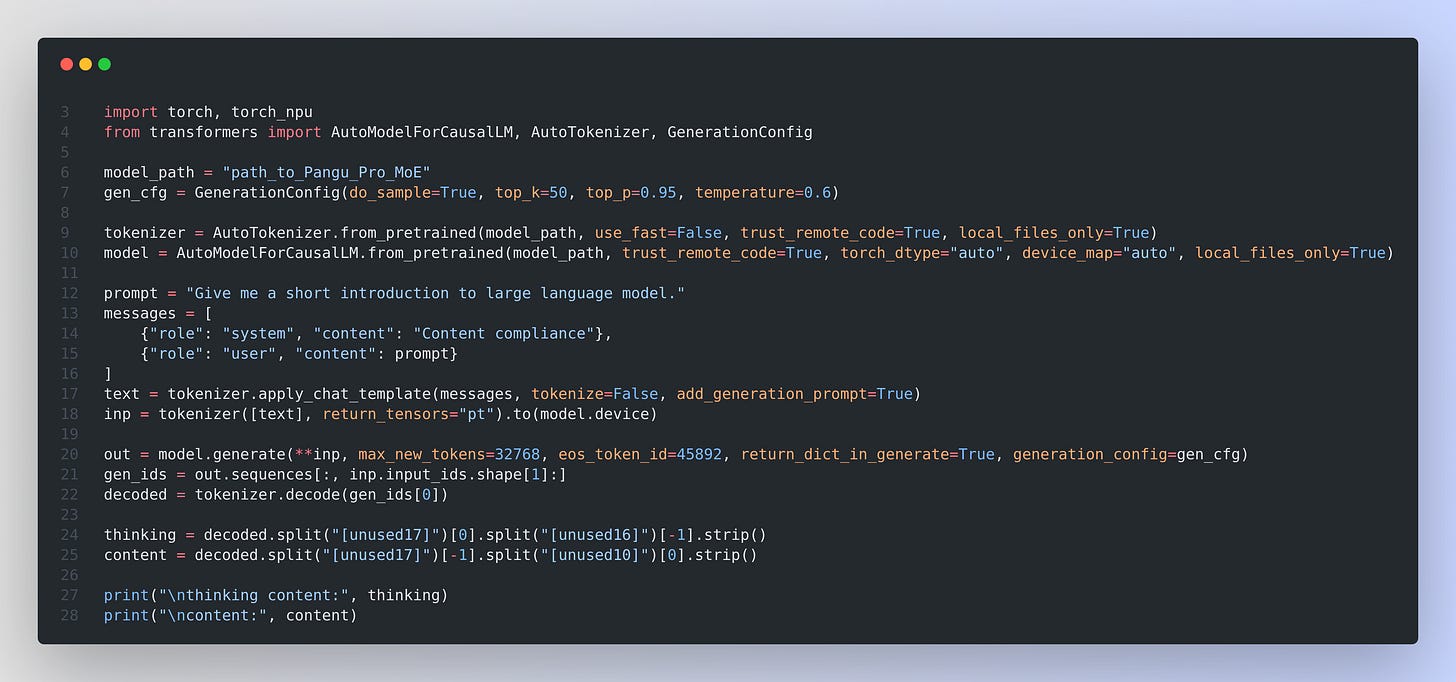

Inference examples: Code for the Ascend inference system, together with MindIE and vLLM-Ascend support, is already available. Quantized weights will be released soon. The model can run in the Transformers framework. Required versions are torch 2.1.0 or newer.

⚙️ The architecture behind it

Mixture of Grouped Experts (MoGE) sticks a global router on top of 64 experts and then slices those experts into equal groups that match the number of devices. Read the Paper.

When the router scores experts for a token it picks the top one inside every group instead of the global top 8. That rule locks the imbalance score at 0, meaning no device waits for another.

🪄 How Grouping Fixes Load Balance

Old Mixture of Experts can accidentally send most tokens to the same few specialists, so one card stalls while others idle. The paper defines an imbalance score as the gap between the busiest and the emptiest card divided by batch size, and shows Monte Carlo runs where that score is almost always above 0 for standard routing.

MoGE’s per‑group top‑1 routing makes the score zero by construction because each card always gets one expert call per token.
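The difference between the two routing rules can be sketched with a small Monte Carlo run. This is an illustration, not Huawei's implementation: the random Gaussian scores stand in for a real router, and the choice of 8 groups (one per device) is an assumption that matches 64 experts with 8 active per token. The imbalance score follows the definition above: busiest card minus emptiest card, divided by batch size.

```python
import random

NUM_EXPERTS = 64                       # from the article
NUM_GROUPS = 8                         # assumption: one group per device
TOP_K = 8                              # experts activated per token
GROUP_SIZE = NUM_EXPERTS // NUM_GROUPS


def global_top_k(scores):
    """Standard MoE routing: the K best-scoring experts anywhere."""
    ranked = sorted(range(NUM_EXPERTS), key=scores.__getitem__, reverse=True)
    return ranked[:TOP_K]


def grouped_top_1(scores):
    """MoGE-style routing: the best-scoring expert inside each group."""
    chosen = []
    for group in range(NUM_GROUPS):
        start = group * GROUP_SIZE
        members = range(start, start + GROUP_SIZE)
        chosen.append(max(members, key=scores.__getitem__))
    return chosen


def imbalance_score(router, batch_size, rng):
    """(busiest card load - emptiest card load) / batch size."""
    load = [0] * NUM_GROUPS
    for _ in range(batch_size):
        # Random scores are a stand-in for a trained router's output.
        scores = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
        for expert in router(scores):
            load[expert // GROUP_SIZE] += 1
    return (max(load) - min(load)) / batch_size


rng = random.Random(0)
print("global top-8 :", imbalance_score(global_top_k, 16, rng))
print("grouped top-1:", imbalance_score(grouped_top_1, 16, rng))
```

Grouped routing always prints 0.0 because every card receives exactly one expert call per token, while global top-8 leaves the per-card load to chance.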

🏗️ Anthropic’s Claude Code introduces hooks that make it much easier to build things like agent notifications

Anthropic’s new hooks feature lets you register shell commands that run automatically at key moments, so you can script exactly what must happen during every coding session.

The feature removes uncertainty by turning informal chat instructions into commands that always fire, so formatting, logging, or safety checks never depend on the model remembering a prompt.

Claude Code originally relied on the LLM to decide when to run tools or request confirmation. That approach left gaps because the model could forget, misinterpret intent, or vary its behavior between sessions.

Hooks solve this gap by letting users register any shell command that fires at predefined lifecycle events.

Right now there are 4 hook events: PreToolUse, PostToolUse, Notification, and Stop. Claude Code lets you attach small shell programs, called hooks, to each of these moments. A hook runs with your own user permissions, so it can log, block, or edit what Claude is about to do.

The user decides whether a command runs before a tool call, after it, on each notification, or when the assistant tries to finish, so control moves from probabilistic prompting to deterministic scripting.

A hook definition sits in a JSON settings file at either user or project scope. Each entry pairs a matcher that filters tool names with an array of shell commands.

When an event matches, Claude Code streams structured JSON about the action into the command through stdin, waits up to 60 seconds, then interprets the exit code or optional JSON output to decide how to continue.

Because hooks execute with the user’s full permissions they can read or modify anything the account can access. The documentation lists safety steps such as validating inputs, quoting variables, and skipping sensitive paths.

For devs working with multiple Model Context Protocol servers, hooks recognize tool names prefixed with mcp__, so one pattern can target an entire server or a single remote tool, keeping policy enforcement consistent across local and cloud actions.
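A quick way to picture the mcp__ naming is a pattern match over tool names. The sketch below assumes the matcher behaves like a regular expression that must match the whole tool name, and the server and tool names are hypothetical placeholders, so treat it as an illustration of the naming scheme rather than Claude Code's actual matching logic.

```python
import re


def matcher_applies(matcher: str, tool_name: str) -> bool:
    """Assumption: a matcher is a regex that must match the full tool name."""
    return re.fullmatch(matcher, tool_name) is not None


# Hypothetical tool names following the mcp__<server>__<tool> convention.
tools = [
    "Bash",
    "mcp__github__create_issue",
    "mcp__github__list_prs",
    "mcp__memory__write_note",
]

whole_server = r"mcp__github__.*"           # every tool on one server
single_tool = r"mcp__memory__write_note"    # exactly one remote tool

print([t for t in tools if matcher_applies(whole_server, t)])
print([t for t in tools if matcher_applies(single_tool, t)])
```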

The result is a coding environment where style guides, security rules, and workflow conventions run as part of the platform itself, not as suggestions embedded in prompts, giving you predictable and auditable behavior.

Example hook code

The JSON below registers one PreToolUse hook for every Bash command. Claude passes the intended command to a tiny Bash script that refuses to run if the text contains “rm -rf”. Save both snippets, then reload /hooks once.
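The same check can be sketched in Python instead of Bash. The settings fragment is written as a Python dict in the shape the hooks documentation describes, the script path is a hypothetical placeholder, and the sketch assumes the stdin payload carries the command under tool_input.command.

```python
import json
import sys

# Settings fragment: one PreToolUse hook for every Bash tool call.
# The command path is a hypothetical placeholder.
SETTINGS = {
    "hooks": {
        "PreToolUse": [
            {
                "matcher": "Bash",
                "hooks": [
                    {"type": "command", "command": "python3 /path/to/block_rm_rf.py"}
                ],
            }
        ]
    }
}

BLOCK_EXIT_CODE = 2  # exit code 2 tells Claude Code to block the tool call


def evaluate(payload: dict) -> tuple:
    """Return (exit_code, message) for one hook invocation."""
    # Assumption: the Bash command arrives under tool_input.command.
    command = payload.get("tool_input", {}).get("command", "")
    if "rm -rf" in command:
        return BLOCK_EXIT_CODE, "Blocked: command contains 'rm -rf'."
    return 0, ""


def main(stream=sys.stdin) -> int:
    """Read the hook payload from stdin and report the decision."""
    payload = json.load(stream)
    code, message = evaluate(payload)
    if message:
        print(message, file=sys.stderr)  # stderr is what the model sees on a block
    return code


# When saved as the hook script, end the file with: sys.exit(main())
print(json.dumps(SETTINGS, indent=2))
```

The printed JSON is what would go into the settings file. Keeping the decision in evaluate() makes the rule easy to test without piping real payloads through stdin.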

How the hook makes a difference

When Claude wants to execute any Bash command, your PreToolUse hook runs first. The matcher string “Bash” restricts the hook to Bash tool calls only. (docs.anthropic.com) Because the script exits with 2 when “rm -rf” appears, Claude immediately cancels the command. The script’s error text is routed back to the model, so Claude sees that the action was blocked and can propose a safer alternative. The exit-code behaviour is documented in the hook output rules. (docs.anthropic.com)

Without the hook, the same destructive command would reach the normal permission dialog. If the user clicked “Yes, don’t ask again”, Bash would receive full control and could erase files. The separate permission system is described under Identity and Access Management, but it only asks once, whereas the hook enforces the rule on every attempt.

Hooks can do more than blocking. The quick-start example logs every Bash command to ~/.claude/bash-command-log.txt, proving that hooks can capture data silently. They can also run formatters after file edits, send desktop notifications, or inject custom policy checks, as shown in tutorials and community posts.

This short example shows how a 7-line script, connected through a few lines of JSON, turns a risky command into a blocked event every time it appears, giving you deterministic safety that cannot be skipped by manual clicks.

📡 Elon Musk raises $10B of fresh capital for powering the infrastructure of xAI

xAI has raised $10B of fresh capital, split evenly between new equity and secured debt. The raise lifts its post-money valuation to about $75B and demonstrates continuing appetite among large institutional investors to bankroll an aggressive expansion of proprietary compute for the Grok model family.

The combination of debt and equity reduces the overall cost of capital and substantially expands pools of capital available to xAI.

1. Raise structure: Morgan Stanley led the $10B package. The $5B debt slice is a mix of term loans and secured notes backed by GPU clusters plus future software contracts.

2. Use of proceeds: Almost all funds go to infrastructure. The Memphis Colossus campus opened in 2024 with 100,000 Nvidia H100 GPUs, has already doubled to 200,000, and targets 1M once land and power permits finalize. xAI bought another 1M sq ft nearby, installed 165 Tesla Megapacks, and added a SpaceX-derived ceramic-membrane water plant that recycles 13M gal daily and trims peak-load costs.

3. Valuation and burn: TipRanks pegs xAI at $75B. Sovereign backers hold an option for another $20B of equity at richer terms if revenue goals land. Bloomberg analysis cited by Tom’s Hardware puts cash burn near $1B per month, so 2025 burn could hit $13B against just $0.5B of projected revenue, which explains using cheaper debt alongside equity.

4. Strategy: Elon Musk sells xAI as a “maximum truth-seeking” foil to incumbents and is chasing compute scale to match the $40B infrastructure plan OpenAI announced in March 2025.

Synergies with other Musk ventures are evident. Tesla Megapacks provide battery buffering, and the Memphis water system’s ceramic membranes, originally developed for SpaceX, recycle 13M gal of wastewater daily for cooling, reducing both cost and environmental impact.

5. Risks: Reuters noted investor worry that Musk’s political noise might hurt the debt book, yet the tranche closed oversubscribed. Local regulators are still vetting air-quality permits for gas turbines at Memphis, so delays or extra mitigation spend remain possible.

6. Outlook: If xAI completes its planned 1M-GPU cluster by mid-2026 and lifts annual revenue to about $2B, bankers expect the optional $20B follow-on equity sale to proceed, pushing valuation well above $120B and cementing xAI as a top-tier model provider. Failure to narrow the burn gap or secure GPU supply could force xAI to refinance its secured notes at less favorable terms when step-ups begin in 2027.

🛠️ Perplexity launches $200-a-month Max: unlimited Labs, early Comet browser, priority o3-pro and Opus 4

Perplexity Max targets heavy search and analysis users who hit rate limits on the $20 Pro plan. It unlocks unlimited spreadsheet and report generation in Labs, first access to experimental features, and front-of-queue slots for every new frontier model Perplexity plugs in.

The firm now sells three consumer tiers: Free, Pro at $20, and Max at $200. Business buyers pay $40 per seat for Enterprise Pro, with an Enterprise Max promised soon at a higher price.

Perplexity Max is designed for:

• Professionals who need unlimited access to comprehensive analysis tools

• Content creators and writers who require extensive research capabilities

• Business strategists conducting competitive intelligence and market research

• Academic researchers working on complex, multi-faceted projects

Max mirrors OpenAI’s Pro tier cost, signaling a shift to hyper-premium pricing across AI tools. Google, Anthropic, and Cursor introduced similar offerings, validating willingness among power users to pay 10× for speed and headroom.

Perplexity booked about $34 million revenue last year, largely from Pro subscriptions, yet burned roughly $65 million on cloud and model API fees. Internal figures peg current ARR near $80 million, still far below the valuation it seeks in a possible $500 million raise.

With Google’s AI Mode and ChatGPT’s integrated search crowding the space, Max revenue could finance more model contracts and keep Perplexity competitive without owning its own LLM stack.

That’s a wrap for today, see you all tomorrow.