👨🔧 Inside the NVIDIA Rubin Platform: Six New Chips, One AI Supercomputer - at CES 2026

NVIDIA drops 6 Rubin chips and an AI supercomputer, Jensen dominates CES 2026, plus a guide to Claude Code 2.0 and boosting it with claude-mem.

Read time: 6 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (6-Jan-2026):

👨🔧 Inside the NVIDIA Rubin Platform: Six New Chips, One AI Supercomputer

🎯 Jensen Huang is on fire at CES 2026

🧑🎓 Tutorial to follow - “A Guide to Claude Code 2.0 and getting better at using coding agents”

📡 GitHub resource: claude-mem, to give Claude Code infinite memory

👨🔧 Inside the NVIDIA Rubin Platform: Six New Chips, One AI Supercomputer

Key takeaways from Nvidia's official blog

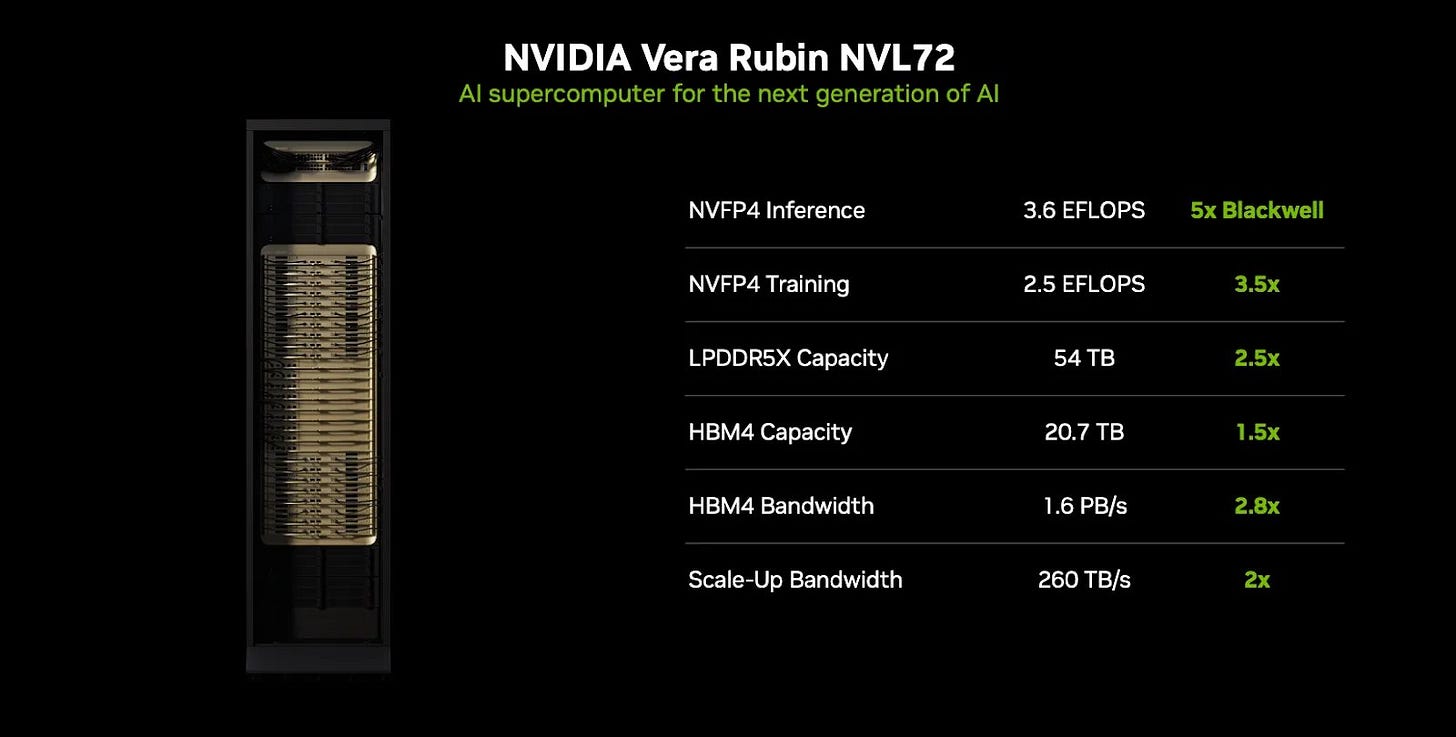

To train a 10T-parameter MoE model in 1 month, Rubin would use 75% fewer GPUs than Blackwell, or one-quarter as many. For inference, at the same power draw, Rubin can serve about 10x more tokens per second: if a 1 MW cluster delivered 1 million tokens per second before, Rubin would deliver about 10 million. On cost, the price to serve 1 million tokens could drop by about 10x with Rubin.
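To make those ratios concrete, here is a back-of-envelope sketch in Python. The 10,000-GPU Blackwell baseline and the $1.00 price per million tokens are hypothetical placeholders, not NVIDIA figures; only the 75%, 10x, and 1 million tokens per second values come from the takeaways above.

```python
# Back-of-envelope arithmetic for the headline Rubin vs Blackwell ratios.
# Baseline values marked "hypothetical" are illustrative only.

def gpus_after_reduction(baseline_gpus: int, reduction: float) -> int:
    """GPUs needed after a fractional reduction (0.75 means 75% fewer)."""
    return round(baseline_gpus * (1 - reduction))

blackwell_gpus = 10_000                      # hypothetical training cluster
rubin_gpus = gpus_after_reduction(blackwell_gpus, 0.75)

baseline_tokens_per_s = 1_000_000            # the 1 MW example from the text
rubin_tokens_per_s = baseline_tokens_per_s * 10

baseline_cost_per_mtok = 1.00                # hypothetical dollars per 1M tokens
rubin_cost_per_mtok = baseline_cost_per_mtok / 10

print(f"GPUs: {blackwell_gpus} -> {rubin_gpus}")
print(f"Tokens/s at 1 MW: {baseline_tokens_per_s} -> {rubin_tokens_per_s}")
print(f"Cost per 1M tokens: ${baseline_cost_per_mtok:.2f} -> ${rubin_cost_per_mtok:.2f}")
```

Swap in your own baseline numbers to see what the quoted ratios would mean for a cluster of a different size.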

The Vera Rubin NVL72 integrates 6 new chips so that compute, networking, and control act as one system: Rubin GPU, Vera CPU, NVLink 6 switch, ConnectX-9 SuperNIC, BlueField-4 DPU, and Spectrum-6 Ethernet.

Vera CPU is a data movement engine, not just a host. It has 88 Olympus cores, Spatial Multithreading, up to 1.2 TB/s memory bandwidth, up to 1.5 TB LPDDR5X, and 1.8 TB/s coherent NVLink-C2C to GPUs. Compared to Grace, it doubles C2C bandwidth and adds confidential computing.

Rubin GPU raises sustained throughput across compute, memory, and communication. It offers 224 SMs with 6th gen Tensor Cores, NVFP4 inference up to 50 PFLOPS, FP8 training 17.5 PFLOPS, and HBM4 with up to 288 GB and about 22 TB/s bandwidth.

NVLink 6 doubles per-GPU scale-up bandwidth to 3.6 TB/s and enables uniform all-to-all communication across 72 GPUs. It also adds SHARP in-network compute, reducing all-reduce traffic by up to 50% and improving tensor-parallel time by up to 20% for large jobs.
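Why would in-network reduction cut all-reduce traffic by roughly half? The sketch below uses a simplified textbook model, not NVIDIA's measurements: in a classic ring all-reduce each GPU sends about twice the buffer size, while with the switch doing the reduction each GPU sends its buffer once. The 1 GB buffer size is a hypothetical value.

```python
# Simplified traffic model for all-reduce across one NVL72 rack.
# This is a textbook approximation, not a benchmark of NVLink 6 or SHARP.

def ring_allreduce_bytes_sent(size_bytes: float, n_gpus: int) -> float:
    """Bytes each GPU sends in a ring all-reduce (reduce-scatter + all-gather)."""
    return 2 * (n_gpus - 1) / n_gpus * size_bytes

def in_network_allreduce_bytes_sent(size_bytes: float) -> float:
    """Bytes each GPU sends when the switch performs the reduction."""
    return size_bytes

n_gpus = 72
buffer_bytes = 1e9                      # hypothetical 1 GB gradient buffer

ring = ring_allreduce_bytes_sent(buffer_bytes, n_gpus)
in_network = in_network_allreduce_bytes_sent(buffer_bytes)
saving = 1 - in_network / ring          # fraction of sent traffic avoided

aggregate_tb_s = n_gpus * 3.6           # 72 GPUs at 3.6 TB/s each

print(f"Traffic saving vs ring: {saving:.1%}")
print(f"Aggregate scale-up bandwidth: {aggregate_tb_s:.1f} TB/s")
```

Under this model the saving approaches 50% as the GPU count grows, which lines up with the "up to 50%" figure.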

At the network edge, ConnectX-9 provides 1.6 Tb/s per GPU with programmable congestion control and endpoint isolation.

BlueField-4 offloads infrastructure work with a 64-core Grace CPU and 800 Gb/s networking, introduces ASTRA for trusted control, and powers “Inference Context Memory Storage,” enabling shared KV cache reuse that can boost tokens per second by up to 5x and power efficiency by up to 5x.

Spectrum-6 switches, part of Spectrum-X Ethernet Photonics, double per-chip bandwidth to 102.4 Tb/s and use co-packaged optics for about 5x better network power efficiency and 64x better signal integrity, tuned for bursty MoE traffic.

On the operations side, Rubin adds Mission Control, rack-scale RAS with zero-downtime self-test, and full-stack confidential computing with NRAS attestation.

Energy features target “tokens per watt.” NVL72 uses warm-water DLC at 45°C, rack-level power smoothing with about 6x more local energy buffering than Blackwell Ultra, and grid-aware DSX controls, enabling up to 30% more GPU capacity in the same power envelope.

🎯 Jensen Huang explained how AI has changed the $10 trillion software industry at CES 2026 🎯

“How you run the software, how you develop the software fundamentally changed. The entire five-layer stack of the computer industry is being reinvented. You no longer program the software, you train the software. You don’t run it on CPUs, you run it on GPUs. And whereas applications were pre-recorded, pre-compiled, and run on your device, now applications understand the context and generate every single pixel, every single token, completely from scratch every single time.

Computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence. Every single layer of that five-layer cake is now being reinvented.

Well, what that means is some ten trillion dollars or so of the last decade of computing is now being modernized to this new way of doing computing. What that means is hundreds of billions of dollars, a couple of hundred billion dollars in VC funding each year, is going into modernize and inventing this new world. And what it means is a hundred trillion dollars of industry, several percent of which is R&D budget, is shifting over to artificial intelligence.

People ask where is the money coming from? That’s where the money is coming from. The modernization to AI, the shifting of R&D budgets from classical methods to now artificial intelligence methods. Enormous amounts of investments coming into this industry, which explains why we’re so busy. And this last year was no difference. This last year was incredible. This last year... there’s a slide coming.”

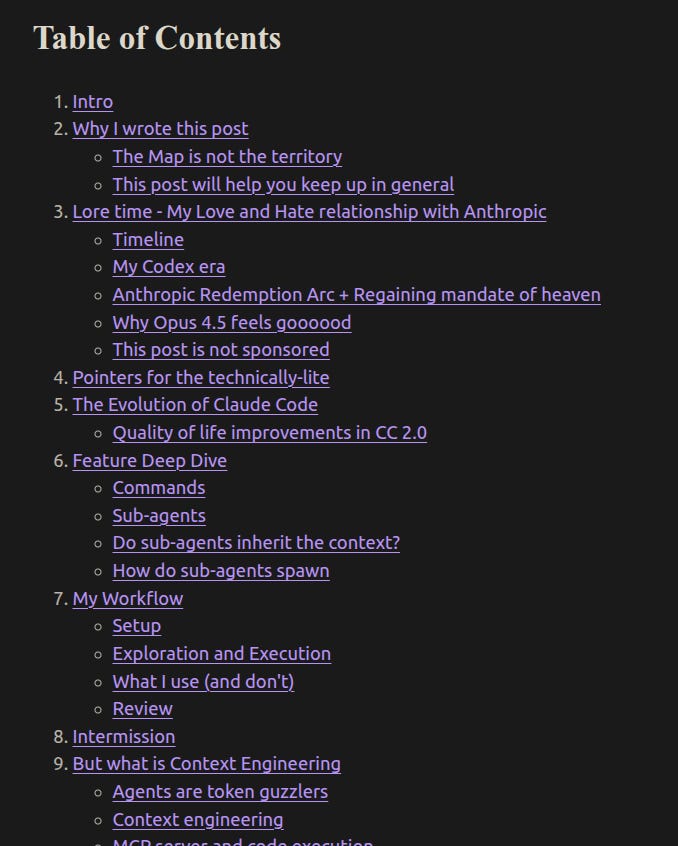

🧑🎓 Tutorial to follow - “A Guide to Claude Code 2.0 and getting better at using coding agents”

This guide shows a compact, repeatable way to use Claude Code 2.0 with sub-agents, skills, and tight feedback loops so you ship changes with fewer regressions.

You start by clarifying requirements, run /ultrathink for deeper passes, then let the agent edit while you supervise and keep diffs small. For risky work, draft on a branch, compare to your plan, iterate.

You speed review with syntax highlighting in diffs, LSP support, prompt suggestions, and history search. You watch /context, /usage, /stats, and use /rewind when experiments go off track.

You use sub-agents wisely, with Explore for fast read-only search using glob, grep, and safe shell. General-purpose and plan agents can inherit full context, while Explore starts clean to avoid noise.

You learn how sub-agents spawn through the Task tool with fields for description, prompt, subagent_type, optional model, resume, and run_in_background. Background runs help when you only need a final artifact or want to tail logs.
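To picture what such a Task call carries, here is a minimal Python sketch. The field names come from the guide as summarized above; the types, defaults, the meaning given to `resume`, and the `TaskCall` class itself are assumptions for illustration, not the tool's actual schema.

```python
from dataclasses import dataclass, asdict
from typing import Optional

@dataclass
class TaskCall:
    """Hypothetical model of the arguments passed to the Task tool."""
    description: str                      # short label for the sub-task
    prompt: str                           # full instructions for the sub-agent
    subagent_type: str                    # e.g. "Explore"
    model: Optional[str] = None           # optional model override
    resume: Optional[str] = None          # assumed: id of an earlier run to continue
    run_in_background: bool = False       # True when only the final artifact matters

    def to_payload(self) -> dict:
        """Drop unset optional fields so the payload stays compact."""
        return {k: v for k, v in asdict(self).items() if v is not None}

call = TaskCall(
    description="Locate auth code",
    prompt="List every file that touches session tokens. Read only.",
    subagent_type="Explore",
    run_in_background=True,
)
print(call.to_payload())
```

Writing the call out this way makes it easier to see which fields you are actually choosing each time you delegate work.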

You practice context engineering because agents add lots of tokens from tool outputs. You compact or restart once usage reaches roughly 50 to 60% of the context window, keep goals in todo.md and plan.md, and push fresh summaries near the tail of the context, where the most recent messages sit.
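The compaction rule of thumb can be written as a tiny check. This is a sketch under assumptions: the 200,000-token window is a hypothetical value, and `should_compact` is an illustrative helper, not part of Claude Code.

```python
def should_compact(used_tokens: int, window_tokens: int, threshold: float = 0.5) -> bool:
    """Return True once context usage reaches the chosen fraction of the window."""
    if window_tokens <= 0:
        raise ValueError("window_tokens must be positive")
    return used_tokens / window_tokens >= threshold

WINDOW = 200_000  # hypothetical context window size in tokens

for used in (60_000, 100_000, 125_000):
    action = "compact or restart" if should_compact(used, WINDOW) else "keep going"
    print(f"{used / WINDOW:.0%} used -> {action}")
```

Raise `threshold` to 0.6 if you prefer the upper end of the range the guide suggests.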

You reduce token load with code execution via MCP, where the agent writes small scripts in a sandbox instead of loading heavy tool definitions. This keeps your main prompt clean and cheaper.

You load domain rules with skills defined by SKILL.md, trigger scripts with hooks on Stop or UserPromptSubmit, and bundle sharables as plugins for teams.
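A hook is a command that Claude Code runs at a lifecycle event, with event details passed as JSON on standard input. The sketch below shows what the core of a UserPromptSubmit handler could look like. The keyword rules and reminder text are hypothetical, and the input field names (`hook_event_name`, `prompt`) are assumptions that should be checked against the current hooks documentation.

```python
import json

# Hypothetical keyword -> reminder rules for this illustration.
REMINDERS = {
    "migration": "Reminder: draft schema migrations on a branch first.",
    "delete": "Reminder: confirm backups exist before deleting data.",
}

def handle(raw_event: str) -> str:
    """Return extra context for a UserPromptSubmit event, or '' for anything else."""
    try:
        event = json.loads(raw_event)
    except json.JSONDecodeError:
        return ""                                   # malformed input: add nothing
    if event.get("hook_event_name") != "UserPromptSubmit":
        return ""
    prompt = str(event.get("prompt", "")).lower()
    hits = [text for word, text in REMINDERS.items() if word in prompt]
    return "\n".join(hits)

# In a real hook script you would read sys.stdin and print the result;
# here the event is built by hand so the example runs on its own.
sample = json.dumps({"hook_event_name": "UserPromptSubmit",
                     "prompt": "Write the migration for the users table"})
print(handle(sample))
```

Keeping the logic in a pure function like `handle` makes the hook easy to test before wiring it into your settings.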

You run a simple review loop, ask for severity-tagged findings, and only accept diffs after specific issues are fixed. You also see the Task tool schema, .claude/agents markdown files, the SKILL.md layout, and sample hook scripts.
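The review gate can be sketched in a few lines of Python. The severity labels, the `Finding` class, and the "block at high" policy are assumptions chosen for illustration; the guide only says that findings are severity-tagged and that diffs are accepted after specific issues are fixed.

```python
from dataclasses import dataclass

# Assumed severity scale, lowest to highest.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}

@dataclass
class Finding:
    severity: str
    message: str
    fixed: bool = False

def can_accept(findings: list[Finding], block_at: str = "high") -> bool:
    """Accept the diff only if every finding at or above block_at is fixed."""
    limit = SEVERITY_ORDER[block_at]
    return all(f.fixed or SEVERITY_ORDER[f.severity] < limit for f in findings)

findings = [
    Finding("low", "Variable name is unclear"),
    Finding("high", "Missing null check in parser"),
]
print(can_accept(findings))   # blocked while the high finding is open

findings[1].fixed = True
print(can_accept(findings))   # accepted once it is fixed
```

The point of the gate is that low-severity notes never hold up a merge, while anything serious has to be closed explicitly.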

📡 GitHub resource: claude-mem - A Claude Code plugin that automatically captures everything Claude does during your coding sessions, compresses it with AI (using Claude’s agent-sdk), and injects relevant context back into future sessions.

You can now give Claude Code infinite memory. Claude-Mem seamlessly preserves context across sessions by automatically capturing tool usage observations, generating semantic summaries, and making them available to future sessions.

It basically saves the full working context so Claude can pick up right where you left off without needing you to explain everything again.

Claude-Mem adds persistent memory to Claude Code by recording what happens in your coding sessions. It tracks tool usage, short notes, and quick summaries, then stores everything locally on your system. When you start another session, it automatically reloads that compressed context so Claude remembers what was done before.

The way it keeps memory efficient is pretty clever. Instead of saving long transcripts, it stores short semantic summaries. It also searches through your history before pulling in the full details, so tokens stay low even for long sessions.

A new feature called Endless Mode makes this even better. With it, sessions use up to 95% fewer tokens and allow about 20 times more tool calls before hitting the context limit. It’s already available from the beta channel.
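The two Endless Mode numbers are consistent with each other, as a quick check shows. This assumes every tool call shrinks by the same fraction, which is a simplification rather than something the project states.

```python
# If each tool call costs 95% fewer tokens, how many more calls fit in the window?
token_reduction = 0.95
remaining_fraction = 1 - token_reduction        # each call now costs 5% of before
capacity_multiplier = 1 / remaining_fraction    # calls that fit, relative to before

print(f"About {capacity_multiplier:.0f}x more tool calls")
```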

You still have full control over how it works. All the data is stored locally using SQLite, so nothing is uploaded anywhere. You can tag sensitive stuff to keep it private, and you can also tweak how much context gets injected during future runs.
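The search-first idea with local SQLite storage can be sketched as follows. This is a toy illustration using Python's built-in `sqlite3` module; the table name, columns, sample rows, and helper functions are all hypothetical and do not reflect claude-mem's actual schema or code.

```python
import sqlite3

conn = sqlite3.connect(":memory:")   # a real setup would use a file on disk
conn.execute(
    "CREATE TABLE observations ("
    "id INTEGER PRIMARY KEY, summary TEXT NOT NULL, detail TEXT NOT NULL)"
)
conn.executemany(
    "INSERT INTO observations (summary, detail) VALUES (?, ?)",
    [
        ("Fixed login redirect bug in auth middleware", "Long tool log for the redirect fix"),
        ("Added pagination to the users endpoint", "Long tool log for pagination"),
        ("Refactored auth token refresh", "Long tool log for the refresh refactor"),
    ],
)

def search_summaries(db: sqlite3.Connection, keyword: str, limit: int = 5):
    """Step 1: scan only the short summaries, newest first."""
    return db.execute(
        "SELECT id, summary FROM observations "
        "WHERE summary LIKE ? ORDER BY id DESC LIMIT ?",
        (f"%{keyword}%", limit),
    ).fetchall()

def fetch_detail(db: sqlite3.Connection, obs_id: int):
    """Step 2: load the full detail only for an entry that looks relevant."""
    row = db.execute("SELECT detail FROM observations WHERE id = ?", (obs_id,)).fetchone()
    return row[0] if row else None

hits = search_summaries(conn, "auth")
for obs_id, summary in hits:
    print(obs_id, summary)
print(fetch_detail(conn, hits[0][0]))
```

Only the summaries that match get expanded, which is how token use stays low even when the stored history is long.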

That’s a wrap for today, see you all tomorrow.