Knowledge or Reasoning? And Why Accuracy Lies for Language Models

Reasoning evals, YC’s 6-page prompts, DeepSearch stack, recursive chains, GRPO finetune boost, AI Studio update, and a direct SGLang vs vLLM tutorial.

Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (7-Jun-2025):

🥉 We need to evaluate reasoning steps separately for knowledge correctness and reasoning quality for Language Models

🚨 YC reveals how AI startups prompt LLMs: 6-page specs, XML outputs, evals as core IP.

🛠️ GitHub: Chain of Recursive Thoughts

🛠️ GitHub: Google open-sources DeepSearch stack

🗞️ Byte-Size Briefs:

Unsloth publishes GRPO fine-tuning 2× faster, 70% less VRAM for DeepSeek-R1-0528-Qwen3

Google clarifies AI Studio free tier stays, moves behind API

🧑🎓 Deep Dive Tutorial: SGLang vs. vLLM? They Perform the Same

🥉 We need to evaluate reasoning steps separately for knowledge correctness and reasoning quality for Language Models

LLMs can land on the right final answer while hiding wrong facts or sloppy reasoning along the way. This paper scores each reasoning step, exposing which sentences supply real knowledge and which ones actually push the probability of the correct answer upward.

🛑 The Problem

Developers judge models by final accuracy. But a correct final answer can hide hallucinated facts and filler logic, which blocks targeted debugging.

🧩 Proposed Framework

The answer is split into small steps. Each step is labelled with one factual snippet and the logical move built on it. Two new scores evaluate these parts separately.

📚 Knowledge Index

Every fact snippet is checked against a trusted database. Knowledge Index equals the % of steps that pass. A low score flags hallucination hotspots.
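To make the metric concrete, here is a minimal sketch of how a Knowledge Index could be computed. The `TRUSTED_FACTS` set and the exact-match check are hypothetical stand-ins for the paper's trusted database lookup, and the example facts are made up for illustration.

```python
from typing import Callable, Iterable


def knowledge_index(facts: Iterable[str], is_supported: Callable[[str], bool]) -> float:
    """Return the percentage of fact snippets that pass verification."""
    facts = list(facts)
    if not facts:
        return 0.0
    passed = sum(1 for fact in facts if is_supported(fact))
    return 100.0 * passed / len(facts)


# Hypothetical stand-in for a trusted database (assumption, not the paper's source).
TRUSTED_FACTS = {
    "metformin lowers blood glucose",
    "insulin is produced in the pancreas",
}

# One fact snippet per reasoning step (made-up example chain).
step_facts = [
    "metformin lowers blood glucose",
    "insulin is produced in the liver",      # not in the trusted set
    "insulin is produced in the pancreas",
    "metformin is an antibiotic",            # not in the trusted set
]

score = knowledge_index(step_facts, lambda fact: fact in TRUSTED_FACTS)
print(f"Knowledge Index: {score:.1f}%")
```

A real checker would need fuzzy matching or retrieval rather than exact string lookup, but the ratio itself stays this simple.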

📉 Information Gain

A smaller, frozen language model sits outside the system as a judge.

• It first reads the question and the partial chain of thought up to step i - 1. From this context it assigns a probability p_prev to the correct final answer.

• Then it reads step i and updates that probability to p_after.

Information Gain for step i is log p_after minus log p_prev.

Meaning:

• A large positive value shows the step contained reasoning that clearly pushed the answer toward the truth.

• A value near zero means the step was decorative; it did not help the model narrow its uncertainty.

• A negative value signals confusion—the step actually pulled the prediction away from the right answer.

By adding these gains across the chain, the framework highlights which sentences do real logical work and which ones are fluff.
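The per-step calculation above can be sketched in a few lines. The probabilities below are made up, and the 0.1 cut-off used to label a step is an arbitrary assumption for illustration; in the paper the probabilities come from the frozen judge model.

```python
import math


def information_gains(probs):
    """probs[0] is the judge's probability of the correct answer after reading
    only the question; probs[i] is the probability after reading step i.
    Returns log p_after - log p_prev for every step."""
    return [math.log(after) - math.log(prev) for prev, after in zip(probs, probs[1:])]


def label(gain, threshold=0.1):
    """Rough reading of a gain value (threshold is an illustrative assumption)."""
    if gain > threshold:
        return "helpful"
    if gain < -threshold:
        return "harmful"
    return "decorative"


# Made-up judge probabilities for a four-step chain of thought.
judge_probs = [0.10, 0.40, 0.41, 0.20, 0.80]

gains = information_gains(judge_probs)
for i, gain in enumerate(gains, start=1):
    print(f"step {i}: gain={gain:+.3f} ({label(gain)})")

total_gain = sum(gains)
print(f"total gain: {total_gain:.3f}")
```

Note that the sum telescopes: the total equals log of the final probability minus log of the starting probability, so the per-step values are what carry the diagnostic detail.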

So the paper’s framework adds a preprocessing step: GPT-4o breaks the chain-of-thought into ordered steps s1…st. Each step holds one factual snippet k_i and the logical move built from it. Splitting this way lets later checks point at the exact line that goes off track.

This step-level structure is the basis for the two new metrics (Knowledge Index and Information Gain).

🧪 Experiments

Two 7B backbones, Qwen-Base and math-focused DeepSeek-R1, are trained with Supervised Fine-Tuning or PPO Reinforcement Learning on five medical and five math benchmarks. The framework scores every output.

📊 Key Findings

• Supervised fine-tuning boosts medical Knowledge Index by about 6% but drops Information Gain by 39%, producing fact-rich yet verbose chains.

• PPO trims filler, restoring Information Gain and adding 12% more verified facts without more data.

• Medical accuracy follows Knowledge Index, while math accuracy follows Information Gain, so both scores are needed.

🧩 Overall Takeaway

Separating knowledge from reasoning shows each skill behaves differently. Supervised fine-tuning supplies domain facts and lifts accuracy, but it bloats chains of thought and cuts Information Gain. Reinforcement learning prunes wrong or redundant facts, raising reasoning quality and often raising factual correctness too. Treating the two dimensions independently yields clearer diagnostics and steers targeted training for each domain.

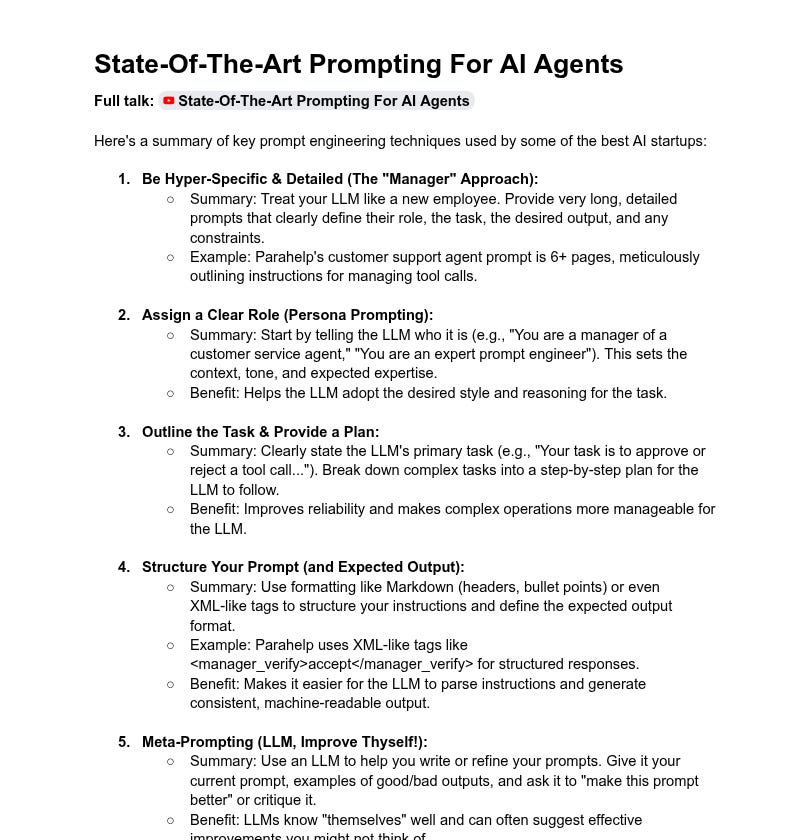

🚨 YC reveals how AI startups prompt LLMs: 6-page specs, XML outputs, evals as core IP.

Y Combinator breaks down how top AI startups prompt LLMs: 6+ page prompts, XML tags, meta-prompts, evals as core IP. Leading AI startups treat prompts like onboarding manuals.

⚙️ Key Learning

→ Top AI startups use "manager-style" hyper-specific prompts—6+ pages detailing task, role, and constraints. These aren't quick hacks; they’re structured like onboarding docs for new hires.

→ Role prompting anchors the LLM’s tone and behavior. Clear persona = better alignment with task. Example: telling the LLM it's a customer support manager calibrates its output expectations.

→ Defining a task and laying out a plan helps break complex workflows into predictable steps. LLMs handle reasoning better when guided through each sub-task explicitly.

→ Structuring output using markdown or XML-style tags improves consistency. Parahelp, for instance, uses tags like <manager_verify> to enforce response format.

→ Meta-prompting means using LLMs to refine your own prompts. Feed it your prompt, outputs, and ask it to debug or improve—LLMs self-optimize well if given context.

→ Few-shot prompting with real examples boosts accuracy. Startups like Jazzberry feed challenging bug examples to shape LLM behavior.

→ Prompt folding lets one prompt trigger generation of deeper, more specific prompts. Helps manage workflows in multi-step AI agents.

→ Escape hatches instruct LLMs to admit uncertainty. Prevents hallucination and improves trust.

→ Thinking traces (model reasoning logs) and debug info expose the model’s internal logic. Essential for troubleshooting and iteration.

→ Evals (prompt test cases) are more valuable than prompts themselves. They help benchmark prompt reliability across edge cases.

→ Use big models for prompt crafting, then distill for production on smaller, cheaper models. Matches quality with efficiency.
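Several of the points above (XML-style output tags, escape hatches, evals as test cases) can be combined into one small harness. This is only a sketch: the `accept`/`reject` values inside `<manager_verify>`, the `fake_model` function, and the eval cases are all assumptions made up for illustration, not Parahelp's actual prompt or format.

```python
import re

# Assumed tag contents: the source only names the tag, not its allowed values.
TAG = re.compile(r"<manager_verify>\s*(accept|reject)\s*</manager_verify>", re.IGNORECASE)


def parse_verdict(output: str):
    """Return 'accept', 'reject', or None when the tag is missing or malformed."""
    match = TAG.search(output)
    return match.group(1).lower() if match else None


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    if "refund" in prompt:
        return "The request follows policy.\n<manager_verify>accept</manager_verify>"
    if "delete all" in prompt:
        return "<manager_verify>reject</manager_verify>"
    # Escape hatch: the model admits uncertainty instead of inventing a verdict.
    return "I am not sure how to handle this."


# Evals: (prompt, expected verdict). None means "should decline to decide".
EVAL_CASES = [
    ("Customer asks for a refund within 30 days", "accept"),
    ("Agent wants to delete all customer records", "reject"),
    ("Ambiguous request with no policy match", None),
]


def run_evals(model, cases):
    """Run every case and count how many verdicts match the expectation."""
    results = [(prompt, expected, parse_verdict(model(prompt))) for prompt, expected in cases]
    passed = sum(1 for _, expected, got in results if expected == got)
    return passed, results


passed, results = run_evals(fake_model, EVAL_CASES)
print(f"{passed}/{len(EVAL_CASES)} eval cases passed")
```

Swapping `fake_model` for a real API call keeps the rest unchanged, which is part of why the eval set outlives any single prompt version.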

🛠️ GitHub: Chain of Recursive Thoughts

This GitHub repo shows that making a model smarter by forcing it to argue with itself actually works.

Recursive self-critique turns small models into sharper coders.

Single-pass answers from small LLMs often overlook errors and weak logic.

CoRT (Chain of Recursive Thoughts) makes the model debate itself through repeated generate, score, and select loops, sharpening reasoning without extra training.

🧠 The Core Concepts

CoRT (Chain of Recursive Thoughts) wraps any chat endpoint with three skills: self-evaluation, competitive answer generation, and iterative refinement. The model also sets its own recursion depth, so tougher prompts trigger more rounds while easy ones finish fast.

🔧 How It Works

Generate first reply.

Ask the model how many rethink rounds it needs.

Each round: create three alternatives, score all, keep the top.

Return the final survivor.
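The four steps above can be sketched as a short loop. This is not the repo's code: `toy_generate`, `toy_rounds`, and `toy_score` are hypothetical stubs standing in for model calls, and letting the current best compete against the new alternatives is an assumption about what "keep the top" means.

```python
import itertools


def recursive_think(prompt, generate, decide_rounds, score, n_alternatives=3):
    """Generate a reply, then repeatedly challenge it with alternatives."""
    best = generate(prompt, None)             # step 1: first reply
    rounds = decide_rounds(prompt)            # step 2: model picks its own depth
    for _ in range(rounds):                   # step 3: compete, score, keep the top
        candidates = [best] + [generate(prompt, best) for _ in range(n_alternatives)]
        best = max(candidates, key=lambda candidate: score(prompt, candidate))
    return best                               # step 4: the final survivor


# --- Hypothetical stubs so the sketch runs without any API key ---
_draft_ids = itertools.count(1)


def toy_generate(prompt, previous):
    """Pretend model call: returns a numbered draft."""
    return f"draft {next(_draft_ids)}"


def toy_rounds(prompt):
    """Pretend the model asked for two rethink rounds."""
    return 2


def toy_score(prompt, candidate):
    """Arbitrary scoring rule: later drafts score higher."""
    return int(candidate.split()[-1])


answer = recursive_think("Write a function that reverses a list", toy_generate, toy_rounds, toy_score)
print(answer)
```

With real model calls the cost is one generation per alternative per round plus the scoring calls, so the rethink depth is the main knob to watch.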

🖥️ Using the Repo

Run recursive-thinking-ai.py for a CLI test or launch the Web UI with start_recthink.bat. Provide an OpenRouter key, point to Mistral-7B, 24B, or any compatible model, and watch responses upgrade in real time.

📈 What You Gain

On coding tasks Mistral-24B moved from average to near GPT-4 quality, with no extra compute beyond the chosen rethink rounds. The same loop rescues explanations, step-by-step solutions, and bug-fix suggestions.

🛠️ Extend It Yourself

Change round counts, swap the scoring prompt, or plug in a custom evaluator. The skeleton stays the same, so experimentation costs only a few lines.

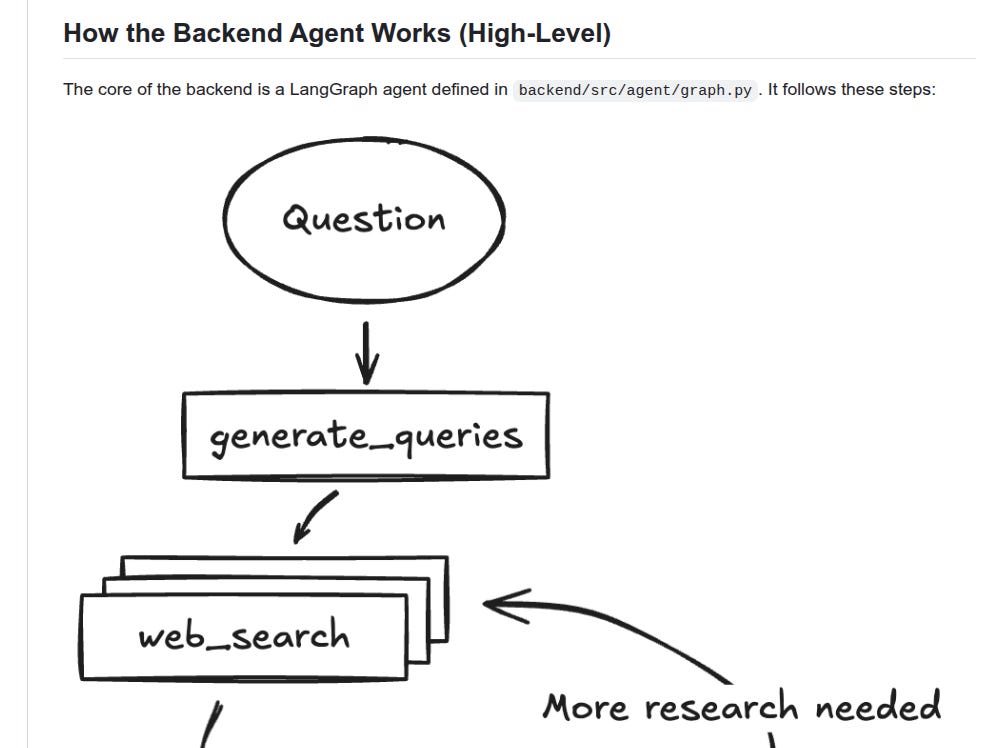

🛠️ GitHub: Google open-sources DeepSearch stack

Get started with building Fullstack Agents using Gemini 2.5 and LangGraph

📝 Overview

This project has a React frontend and a FastAPI backend built on LangGraph. The agent turns user input into search queries with Gemini, fetches web results via Google Search API, reflects on them to spot missing info, and loops until it crafts a final answer with citations.

⚙️ The Core Concepts

Agent starts by generating initial queries with a Gemini model. It calls Google Search API to get web pages. It runs a reflection step to check if results cover the topic. If gaps exist, it refines queries and repeats until confidence is high, then synthesizes a well-cited answer.
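Here is a minimal sketch of that query, search, reflect, and refine loop in plain Python. It does not use LangGraph, Gemini, or the Google Search API; every function and the `TOY_WEB` data are hypothetical stubs, and the `max_loops` cap is an assumption standing in for the agent's own stopping rule.

```python
def research(question, make_queries, search, reflect, synthesize, max_loops=3):
    """Search, check for gaps, refine queries, then write a cited answer."""
    queries = make_queries(question)
    sources = []
    for _ in range(max_loops):
        for query in queries:
            sources.extend(search(query))
        follow_ups = reflect(question, sources)   # empty list means no gaps left
        if not follow_ups:
            break
        queries = follow_ups
    return synthesize(question, sources)


# --- Hypothetical stubs standing in for Gemini and a search API ---
TOY_WEB = {
    "solar panel efficiency": [
        ("https://example.com/efficiency", "Panels convert part of incoming sunlight into power.")
    ],
    "solar panel cost": [
        ("https://example.com/cost", "Installed cost depends on region and system size.")
    ],
}


def toy_make_queries(question):
    return ["solar panel efficiency"]


def toy_search(query):
    return TOY_WEB.get(query, [])


def toy_reflect(question, sources):
    """Ask for a follow-up query while no source covers cost."""
    covered = any("cost" in url for url, _ in sources)
    return [] if covered else ["solar panel cost"]


def toy_synthesize(question, sources):
    """Join source snippets and append a numbered citation list."""
    lines = [f"{text} [{i}]" for i, (_, text) in enumerate(sources, start=1)]
    refs = [f"[{i}] {url}" for i, (url, _) in enumerate(sources, start=1)]
    return "\n".join(lines + refs)


answer = research(
    "Are solar panels worth it?", toy_make_queries, toy_search, toy_reflect, toy_synthesize
)
print(answer)
```

The reflection step is the only place that decides whether to loop again, which is what separates this pattern from a single static search.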

🧰 Deployment Details

In production, backend serves the optimized frontend build. LangGraph needs Redis for streaming real-time output and Postgres to save assistant states, threads, and task queues. Docker image builds frontend and backend together. Environment keys (Gemini and LangSmith) must be set before running docker-compose.

🔥 The Most interesting concept here is

The agent’s reflective loop, where it analyzes search outcomes, spots gaps, and refines queries, ensures deeper, more accurate research without manual prompts. So here we are moving agents from static search to adaptive reasoning.

Note, however, that it’s NOT the same as Gemini Deep Research!

🗞️ Byte-Size Briefs

⚡️Unsloth launches faster DeepSeek-R1-0528-Qwen3 fine-tuning with 70% less VRAM and 40% better multilingual output using GRPO. Their free notebook applies a new reward function that boosts response rates while making GRPO-based fine-tuning 2× faster and more memory efficient across languages or domains.

If you want to master supervised fine-tuning and preference optimization (with and without RL), I recommend experimenting with Unsloth’s numerous notebooks. I think they’re one of the best learning tools, especially since Unsloth is designed to run efficiently on consumer-grade hardware.

Rumors earlier circulated that Google AI Studio's free tier was ending. Logan from Google clarified on Reddit: that’s not happening anytime soon. The platform is shifting to be API key-based, but this does not mean all access will be paid. The free tier is moving behind the API, which already serves millions. Google aims to maintain free access to many models, depending on cost and demand. Also, Gemini Pro plan users can now send 100 queries per day, up from the previous 50.

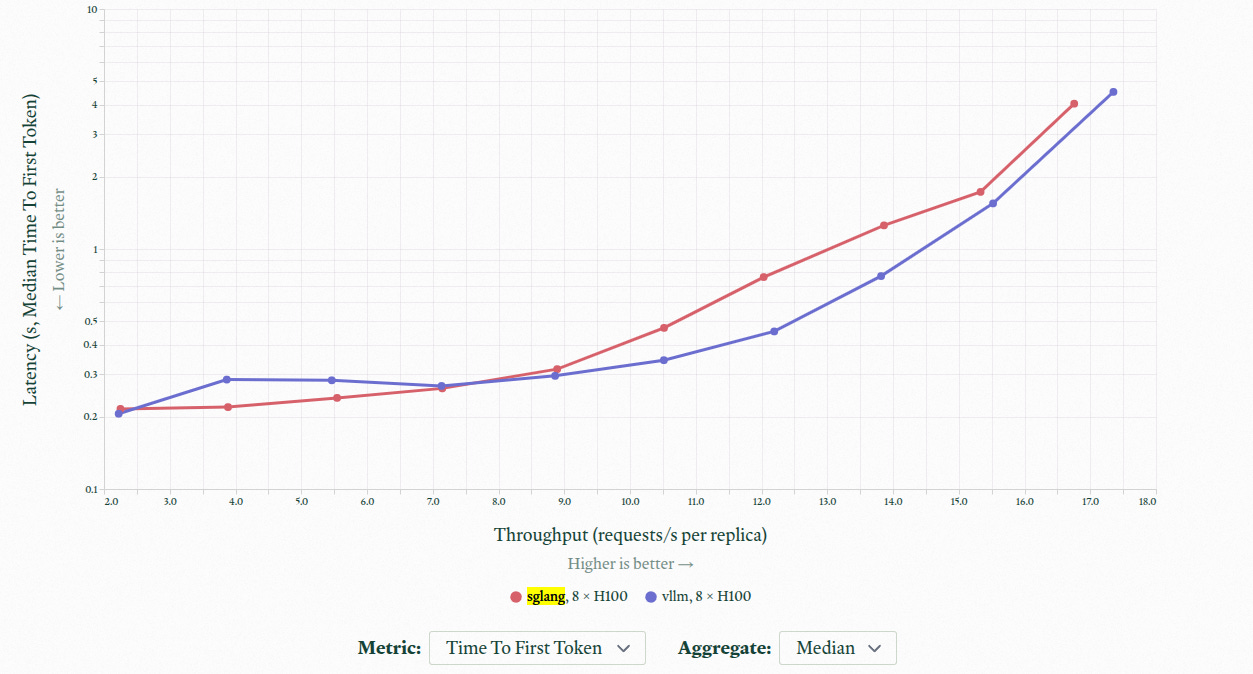

🧑🎓 Deep Dive Tutorial: SGLang vs. vLLM? They Perform the Same

If you are a tech lead, you need a clear way to choose and run open-source LLM engines for self-hosting.

This report compares vLLM, SGLang, and TensorRT-LLM across real workloads. It shows when open-weights models and engines beat proprietary APIs on cost, latency, and data control.

Quick Comparison

SGLang

Uses a structured-program frontend and RadixAttention to reuse KV caches, driving up to 6.4× higher throughput than older systems on complex tasks like JSON decoding and multi-turn chat (source: arxiv.org).

Benchmarks show up to 3.1× higher throughput than vLLM on Llama-70B workloads (source: lmsys.org).

vLLM

Implements PagedAttention to eliminate KV-cache fragmentation and share caches across requests, giving 2–4× throughput gains over systems like FasterTransformer at similar latency (source: arxiv.org).

Delivers batch throughput on par with SGLang across model sizes from 8B to 405B parameters (source: lmsys.org).

Choose SGLang for structured programs and lower tail latency, vLLM for memory efficiency and rich performance features.

Overall, however, there is no major performance difference between vLLM and SGLang across model sizes and sequence lengths. Batch inference benchmarks are neck and neck, so throughput isn’t a tiebreaker.

vLLM ships features first, while SGLang boots faster by skipping Torch compile.

📊 When working with Open-source models

Open-weights models already match proprietary ones for code completion, chat, and extraction when “smart enough” is all that matters, so engine choice, not model size, drives feasibility.

💰 Cost Edge

Batch jobs with short outputs let a single 8-GPU Llama-3-70B node push about 20k tokens per second for roughly 0.50 USD per million tokens, undercutting hosted APIs.
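A quick back-of-the-envelope check shows how throughput and node price turn into cost per million tokens. The two functions are just arithmetic; the only inputs are the 20k tokens per second and 0.50 USD figures quoted above, and the implied hourly node price is derived from them rather than taken from any price list.

```python
def cost_per_million_tokens(node_usd_per_hour: float, tokens_per_second: float) -> float:
    """USD spent to produce one million tokens at a steady throughput."""
    tokens_per_hour = tokens_per_second * 3600
    return node_usd_per_hour / tokens_per_hour * 1_000_000


def implied_node_price(usd_per_million: float, tokens_per_second: float) -> float:
    """Hourly node price that yields the given cost per million tokens."""
    return usd_per_million * tokens_per_second * 3600 / 1_000_000


# Figures quoted in the text: about 20k tokens/s at roughly 0.50 USD per million tokens.
tokens_per_second = 20_000
hourly_price = implied_node_price(0.50, tokens_per_second)
print(f"tokens per hour: {tokens_per_second * 3600:,}")
print(f"implied node price: {hourly_price:.2f} USD/hour")

# Sensitivity check: the same node at half the throughput doubles the unit cost.
half_speed_cost = cost_per_million_tokens(hourly_price, tokens_per_second / 2)
print(f"cost at half throughput: {half_speed_cost:.2f} USD per million tokens")
```

Plug in your own GPU rental price and measured throughput; the sensitivity line is a reminder that utilization, not list price, usually decides whether self-hosting wins.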

⚙️ Build Order

Start with an offline batch token factory; streaming chat needs tighter latency budgets and more tuning, so experience from batch helps avoid early infrastructure debt.

🚀 TensorRT-LLM

Hand-tuned builds can cut tail latency, but the Python wrapper is thin, flags are opaque, and each workload may demand days of expert GPU profiling.

That’s a wrap for today, see you all tomorrow.