Large Language Model Evaluation via Matrix Nuclear-Norm

Matrix Nuclear-Norm, proposed in this paper, slashes LLM evaluation time while maintaining accuracy through clever math tricks

Matrix Nuclear-Norm, proposed in this paper, slashes LLM evaluation time while maintaining accuracy through clever math tricks

Original Problem 🔍:

LLMs lack efficient evaluation metrics for assessing information compression and redundancy reduction capabilities. Existing methods like Matrix Entropy are computationally intensive for large-scale models.

Solution in this Paper 🧠:

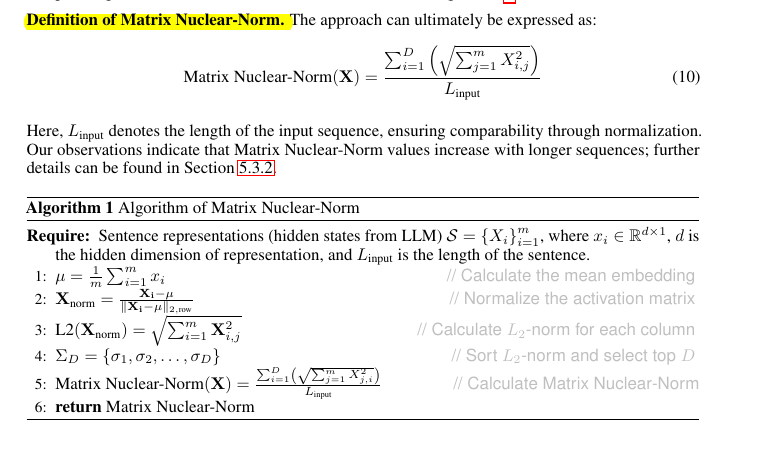

• Introduces Matrix Nuclear-Norm as a novel evaluation metric

• Utilizes L1,2-norm to approximate nuclear norm, eliminating SVD computation

• Reduces time complexity from O(n^3) to O(n^2)

• Provides convex approximation of matrix rank to capture predictive discriminability and diversity

• Normalizes by input length for comparability across different sequence lengths

Key Insights from this Paper 💡:

• Matrix Nuclear-Norm values decrease as model size increases, indicating better compression

• Consistent performance across various model families and sampling strategies

• Sensitive to input length, prompts, and sentence manipulations

• Effectively ranks models based on performance on benchmark datasets

• Balances computational efficiency with evaluation accuracy

Results 📊:

• 8-24x faster than Matrix Entropy for CEREBRAS-GPT models (111M to 6.7B parameters)

• Consistent decrease in values as model size increases across multiple datasets

• Strong correlation with other metrics like perplexity and loss

• Effectively ranks models on AlpacaEval and Chatbot Arena benchmarks

• Demonstrates robustness across different sampling strategies and model families

The Matrix Nuclear-Norm demonstrates:

Consistent decrease in values as model size increases, indicating better compression capabilities in larger models

Strong correlation with other metrics like perplexity and loss in evaluating model performance

Ability to effectively rank models based on performance on benchmark datasets

Sensitivity to factors like input length, prompts, and sentence manipulations, providing insights into model behavior