"Lifelong Sequential Knowledge Editing without Model Degradation"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2502.01636

The problem is that sequentially editing knowledge in LLMs leads to performance decline. This paper addresses this degradation in LLMs during extensive sequential knowledge updates.

This paper introduces ENCORE, a method combining Most Probable Early Stopping (MPES) and norm-constrained objective. ENCORE aims to enable long-term sequential editing without harming the original model's capabilities.

-----

📌 ENCORE's MPES offers a smart gradient descent halt. It stops editing at optimal fact probability. This prevents over-tuning to specific facts and boosts overall model generalization.

📌 Norm constraint in ENCORE directly tackles weight matrix norm explosion. This stabilizes edited layers, preventing "importance hacking" and preserving original model balance.

📌 ENCORE provides a practical, faster knowledge editing method. By combining MPES and norm control, it achieves robust sequential edits without losing downstream task performance.

----------

Methods Explored in this Paper 🔧:

→ The paper presents locate-then-edit knowledge editing as a two-step fine-tuning process.

→ The first step uses gradient descent to find target activation vectors for the matrix to be edited.

→ The second step updates the matrix using a preservation-memorization objective with a least-squares loss function.

→ Most Probable Early Stopping (MPES) is proposed to halt gradient descent when the edited fact becomes the most probable token across different contexts. MPES prevents overfitting on edited facts.

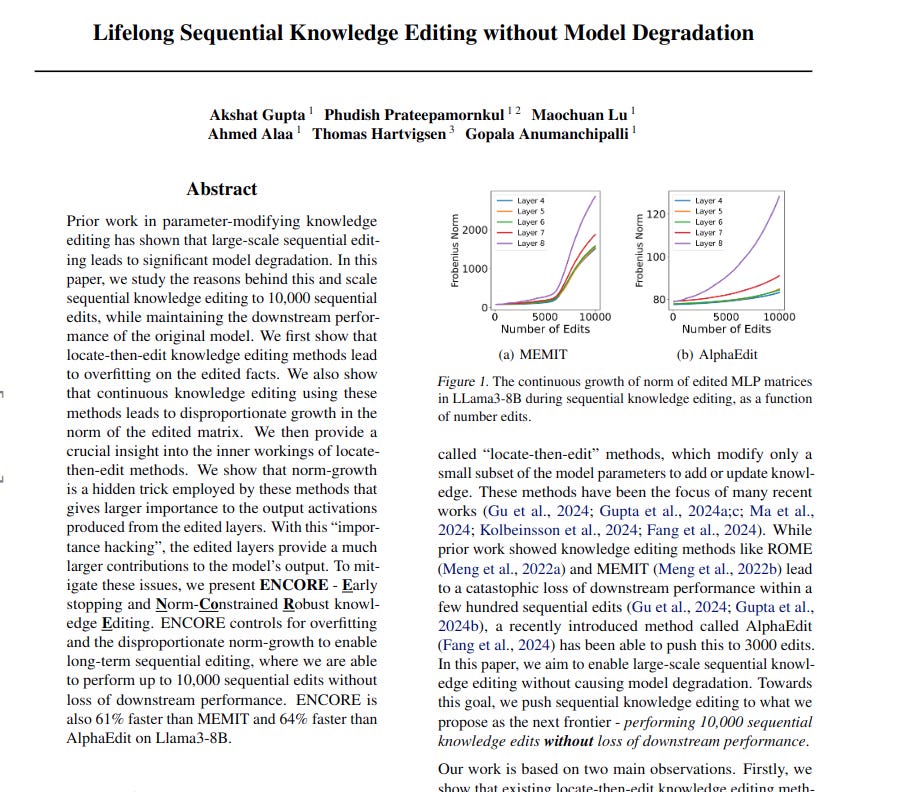

→ A Frobenius-norm constraint is added to the MEMIT objective to control the norm growth of the edited matrix during sequential edits.

→ ENCORE combines MPES with the norm-constrained objective.

-----

Key Insights 💡:

→ Locate-then-edit methods overfit on edited facts, resulting in unnaturally high probabilities for these facts compared to pre-trained knowledge.

→ Sequential knowledge editing causes a continuous and disproportionate increase in the norm of the edited weight matrix.

→ This norm growth is termed "importance hacking," where edited layers gain undue influence over the model's output due to increased activation norms.

→ Importance hacking, while enabling edit success, leads to a loss of general model abilities and downstream performance over many sequential edits.

-----

Results 📊:

→ ENCORE enables 10,000 sequential edits without downstream performance loss.

→ ENCORE is 61% faster than MEMIT and 64% faster than AlphaEdit on Llama3-8B.

→ MPES reduces editing time by 39% - 76% across methods and models.

→ With MPES, edited fact probabilities are reduced to more natural levels, closer to original fact probabilities.

→ ENCORE achieves improved editing metrics like Edit Score, Paraphrase Score, Neighborhood Score and Overall Score compared to MEMIT and AlphaEdit baselines.