LOKI: A Comprehensive Synthetic Data Detection Benchmark using Large Multimodal Models

New benchmark LOKI shows how well AI can spot other AIs' creative work.

LOKI stress-tests AI models by making them spot fake content across text, images, audio, and video.

Original Problem 🔍:

LOKI addresses the lack of comprehensive benchmarks for evaluating how well large multimodal models (LMMs) detect synthetic data across multiple modalities.

Solution in this Paper 🛠️:

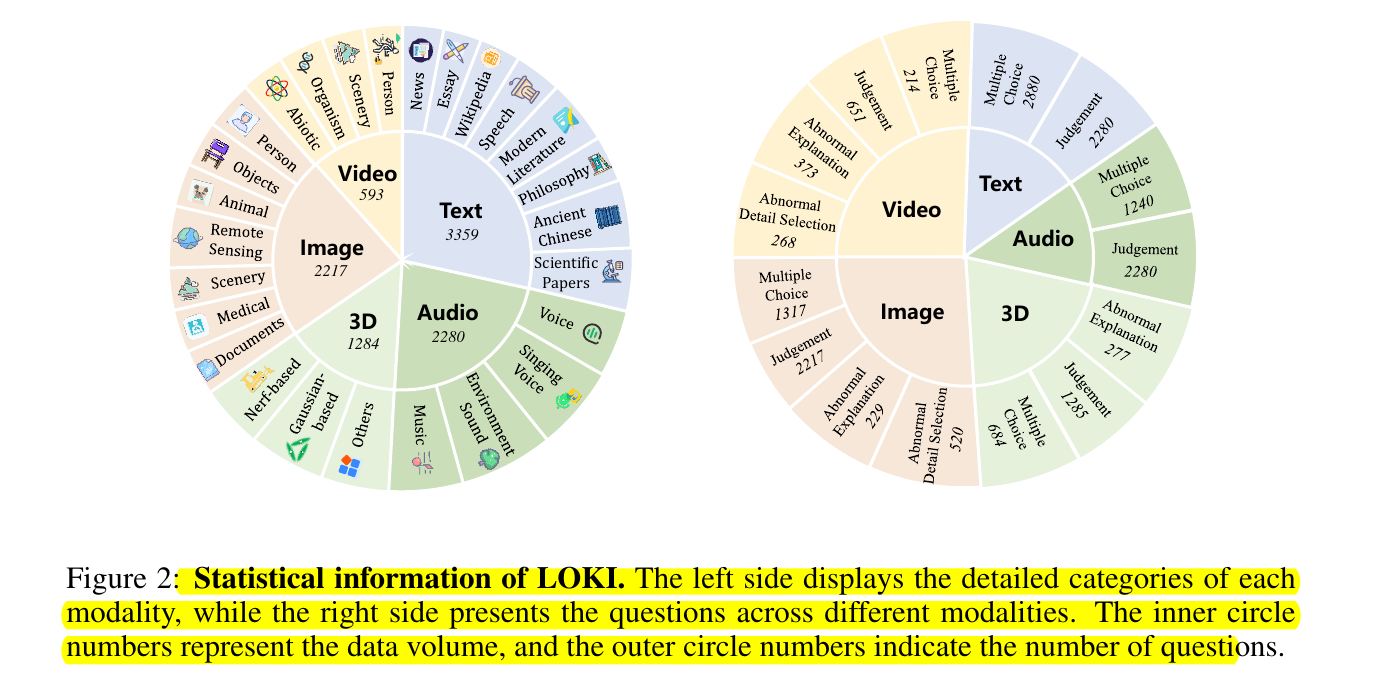

• Introduces LOKI: a multimodal benchmark for synthetic data detection

• Covers video, image, 3D, text, and audio modalities

• Includes 26 detailed subcategories and over 18k questions

• Features multi-level annotations and fine-grained anomaly explanations

• Proposes a comprehensive evaluation framework for various LMMs
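
To make the evaluation idea concrete, here is a minimal sketch of how accuracy could be tallied per modality and per task type (judgment vs. multiple-choice). The record layout, the label strings, and the function name `accuracy_by_group` are assumptions made for illustration; they are not LOKI's actual data format or evaluation code.

```python
from collections import defaultdict

# Hypothetical record layout (not LOKI's real schema):
# (modality, task_type, gold_answer, model_answer)
def accuracy_by_group(records):
    """Return {(modality, task_type): accuracy} for a list of records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for modality, task_type, gold, predicted in records:
        key = (modality, task_type)
        total[key] += 1
        # Compare answers case-insensitively, ignoring surrounding whitespace.
        if predicted.strip().lower() == gold.strip().lower():
            correct[key] += 1
    return {key: correct[key] / total[key] for key in total}

# Toy data, invented purely for this example.
records = [
    ("image", "judgment", "synthetic", "synthetic"),
    ("image", "judgment", "real", "synthetic"),
    ("audio", "multiple_choice", "B", "b"),
    ("audio", "multiple_choice", "A", "C"),
    ("text", "judgment", "real", "real"),
]
scores = accuracy_by_group(records)
for key, value in sorted(scores.items()):
    print(key, value)
```

Grouping by both modality and task type keeps the two headline numbers (judgment and multiple-choice accuracy) separate, so a model that is strong in one modality does not mask weakness in another.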

Key Insights from this Paper 💡:

• LMMs show moderate capabilities in synthetic data detection with some explainability

• Most LMMs exhibit model biases in their responses, tending to favor either "real" or "synthetic" as an answer

• LMMs lack expert domain knowledge in specialized image types

• Current LMMs show unbalanced multimodal capabilities

• Chain-of-thought prompting enhances LMMs' performance in synthetic data detection
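
One way to quantify the response bias mentioned above is sketched below, assuming "bias" means a tendency to favor one label over the other in real-vs-synthetic judgments. The function `synthetic_bias` and the label strings are hypothetical; this is an illustrative statistic, not the metric used in the paper.

```python
def synthetic_bias(gold_labels, predicted_labels):
    """Predicted share of 'synthetic' minus the true share of 'synthetic'.

    Positive values mean the model over-predicts 'synthetic';
    negative values mean it leans toward 'real'.
    """
    if not gold_labels or len(gold_labels) != len(predicted_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(gold_labels)
    predicted_rate = sum(p == "synthetic" for p in predicted_labels) / n
    true_rate = sum(g == "synthetic" for g in gold_labels) / n
    return predicted_rate - true_rate

# Toy labels, invented purely for this example.
gold = ["real", "synthetic", "real", "synthetic"]
pred = ["synthetic", "synthetic", "synthetic", "real"]
bias = synthetic_bias(gold, pred)
print(bias)
```

A value near zero does not imply high accuracy; it only indicates that the model's answers are not skewed toward one label, which is why a statistic like this would be read alongside accuracy rather than in place of it.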

Results 📊:

• GPT-4o achieves 63.9% overall accuracy in judgment tasks, 73.7% in multiple-choice

• Claude-3.5 outperforms the other models in the text modality, with >70% accuracy

• LMMs underperform in 3D and audio tasks compared to image and text

• Human performance exceeds LMMs by ~10% in judgment and multiple-choice tasks

• Expert models show limited generalization on LOKI's diverse synthetic data