LPZero: Language Model Zero-cost Proxy Search from Zero

Automated Zero-cost proxy design enhances efficiency in evaluating language model architectures.

Original Problem 🔍:

Existing Zero-cost (ZC) proxies for Neural Architecture Search rely heavily on expert knowledge and trial-and-error, which limits their effectiveness for language models.

Solution in this Paper 🛠️:

• LPZero: A framework for automatic Zero-cost (ZC) proxy design

• Models proxies as symbolic equations

• Unified proxy search space encompassing existing ZC proxies

• Genetic programming for optimal proxy composition

• Rule-based Pruning Strategy (RPS) to eliminate unpromising proxies

• Applicable to large language models (LLMs) up to 7B parameters

Key Insights from this Paper 💡:

• Automatic proxy design outperforms human-designed proxies

• Unified search space enables comprehensive exploration

• RPS significantly improves search efficiency

• LPZero effectively evaluates LLM architectures without extensive training

Results 📊:

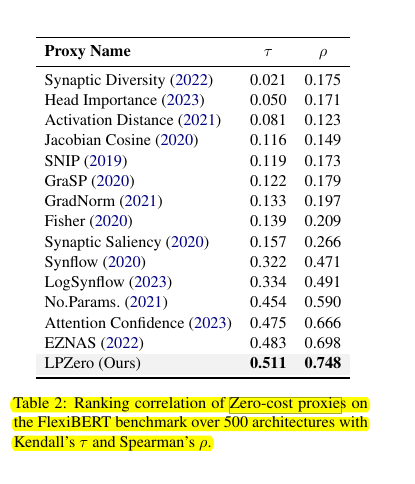

• FlexiBERT benchmark: Highest ranking consistency (Kendall's τ = 0.51, Spearman's ρ = 0.75)

• GPT-2 benchmark: Outperforms other ZC proxies (τ = 0.87, ρ = 0.98)

• LLaMA-7B: Competitive performance on commonsense reasoning tasks

• Reduces computational demands in neural architecture search
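Ranking consistency of this kind is usually measured by comparing the order a proxy assigns to a set of architectures with the order given by their trained performance. The sketch below is a minimal pure-Python illustration of Kendall's τ and Spearman's ρ. The scores and accuracies are made-up numbers, not results from the paper, and both functions assume there are no ties.

```python
from itertools import combinations

def kendall_tau(x, y):
    """Kendall's tau-a: (concordant - discordant) / number of pairs. Assumes no ties."""
    concordant = discordant = 0
    for i, j in combinations(range(len(x)), 2):
        sign = (x[i] - x[j]) * (y[i] - y[j])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    n_pairs = len(x) * (len(x) - 1) // 2
    return (concordant - discordant) / n_pairs

def ranks(values):
    """Rank of each value, 1 = smallest. Assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = rank
    return result

def spearman_rho(x, y):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n * (n^2 - 1)). Assumes no ties."""
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1 - 6 * d2 / (n * (n * n - 1))

# Made-up example: proxy scores and trained accuracies for five architectures.
proxy_scores = [0.2, 0.5, 0.1, 0.9, 0.7]
true_accuracy = [71.0, 74.5, 70.2, 78.1, 73.9]

print(kendall_tau(proxy_scores, true_accuracy))   # 0.8 (one discordant pair out of ten)
print(spearman_rho(proxy_scores, true_accuracy))  # 0.9
```

A proxy with τ and ρ close to 1 ranks untrained architectures almost exactly as full training would.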

🧠 How does LPZero address the limitations of existing Zero-cost proxies?

LPZero overcomes the reliance on expert knowledge and trial-and-error by:

• Modeling ZC proxies as symbolic equations

• Incorporating a unified proxy search space

• Using genetic programming to find optimal compositions

• Implementing a Rule-based Pruning Strategy to eliminate unpromising proxies
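To make the genetic programming step concrete, here is a toy sketch of an evolutionary search over symbolic proxies. Everything in it is an assumption made for illustration: the five-gene tuple encoding, the reduced operation sets, the scalar per-architecture statistics, the synthetic "ground truth", and the use of Kendall's τ as fitness. It is not the paper's implementation.

```python
import math
import random
from itertools import combinations

INPUTS = ["activation", "jacobs", "gradients", "head", "weight", "softmax"]
# Hypothetical, heavily reduced operation sets, chosen only for this toy example.
UNARY = {
    "identity": lambda v: v,
    "abs": abs,
    "log1p_abs": lambda v: math.log1p(abs(v)),
    "square": lambda v: v * v,
}
BINARY = {"add": lambda a, b: a + b, "sub": lambda a, b: a - b, "mul": lambda a, b: a * b}
# Allowed values for each of the five genes: (input_a, unary_a, input_b, unary_b, binary).
POOLS = [INPUTS, list(UNARY), INPUTS, list(UNARY), list(BINARY)]

def random_proxy(rng):
    in_a, in_b = rng.sample(INPUTS, 2)
    return (in_a, rng.choice(POOLS[1]), in_b, rng.choice(POOLS[3]), rng.choice(POOLS[4]))

def score(proxy, stats):
    """Evaluate the symbolic expression on one architecture's statistics."""
    in_a, un_a, in_b, un_b, bin_op = proxy
    return BINARY[bin_op](UNARY[un_a](stats[in_a]), UNARY[un_b](stats[in_b]))

def kendall_tau(x, y):
    total = 0
    for i, j in combinations(range(len(x)), 2):
        sign = (x[i] - x[j]) * (y[i] - y[j])
        total += (sign > 0) - (sign < 0)
    return total / (len(x) * (len(x) - 1) // 2)

def fitness(proxy, archs, truth):
    return kendall_tau([score(proxy, a) for a in archs], truth)

def mutate(proxy, rng):
    genes = list(proxy)
    pos = rng.randrange(len(genes))
    genes[pos] = rng.choice(POOLS[pos])
    return tuple(genes)

def crossover(p1, p2, rng):
    cut = rng.randrange(1, len(p1))
    return p1[:cut] + p2[cut:]

def search(archs, truth, rng, population=20, generations=15):
    pop = [random_proxy(rng) for _ in range(population)]
    for _ in range(generations):
        pop.sort(key=lambda p: fitness(p, archs, truth), reverse=True)
        parents = pop[: population // 2]  # elitism: the top half survives unchanged
        children = []
        while len(parents) + len(children) < population:
            p1, p2 = rng.sample(parents, 2)
            children.append(mutate(crossover(p1, p2, rng), rng))
        pop = parents + children
    return max(pop, key=lambda p: fitness(p, archs, truth))

# Synthetic data: 30 "architectures" with random scalar statistics and a hidden target.
data_rng = random.Random(0)
archs = [{name: data_rng.uniform(-2, 2) for name in INPUTS} for _ in range(30)]
truth = [a["weight"] ** 2 + abs(a["gradients"]) for a in archs]

best = search(archs, truth, random.Random(1))
print(best, fitness(best, archs, truth))
```

Because the top half of each generation is carried over unchanged, the best fitness never decreases between generations. In this toy, the only costly part is the fitness call, which is where a cheap pre-filter on candidates pays off.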

🔢 Structure of the proxy search space in LPZero:

• Encompasses existing ZC proxies

• Uses six types of inputs: Activation, Jacobs, Gradients, Head, Weight, and Softmax

• Applies unary and binary operations to these inputs

• Represents proxies as symbolic expressions

• Includes 20 unary operations and 4 binary operations

• Results in a combinatorial space of 24,000 potential ZC proxies
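One counting that reproduces the 24,000 figure is shown below. It assumes each proxy picks two distinct inputs (order ignored), applies one unary operation to each, and joins the two branches with one binary operation. That structure is an assumption made here to check the arithmetic, not a statement of the paper's exact encoding.

```python
from math import comb

n_inputs, n_unary, n_binary = 6, 20, 4

# Assumed structure: 2 distinct inputs, one unary op per input, one binary op.
n_input_pairs = comb(n_inputs, 2)                   # 15 unordered pairs of inputs
n_proxies = n_input_pairs * n_unary ** 2 * n_binary  # 15 * 400 * 4

print(n_proxies)  # 24000
```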

🔬 How does the Rule-based Pruning Strategy (RPS) contribute to LPZero's efficiency?

RPS improves efficiency by:

• Preemptively eliminating unpromising proxies

• Reducing the risk of proxy degradation

• Improving search efficiency in early iterations

• Helping to navigate the sparse proxy search space
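The idea of rule-based pruning can be sketched as a set of cheap predicates applied to a candidate's symbolic form before any scoring takes place. The two rules, the tuple encoding, and the operation names below are hypothetical examples invented for illustration. The summary does not list the rules LPZero actually uses.

```python
# Hypothetical proxy encoding: (input_a, unary_a, input_b, unary_b, binary_op)

# Assumption: these inputs may take negative values, so some operations are undefined on them.
SIGNED_INPUTS = {"gradients", "jacobs", "weight"}
DOMAIN_RESTRICTED_OPS = {"log", "sqrt"}

def branches_cancel(proxy):
    """Hypothetical rule: subtracting two identical branches always yields zero."""
    in_a, un_a, in_b, un_b, bin_op = proxy
    return bin_op == "sub" and (in_a, un_a) == (in_b, un_b)

def invalid_domain(proxy):
    """Hypothetical rule: log or sqrt of a possibly negative input gives invalid values."""
    in_a, un_a, in_b, un_b, _ = proxy
    return (in_a in SIGNED_INPUTS and un_a in DOMAIN_RESTRICTED_OPS) or (
        in_b in SIGNED_INPUTS and un_b in DOMAIN_RESTRICTED_OPS
    )

RULES = [branches_cancel, invalid_domain]

def is_promising(proxy):
    """A candidate survives only if no rule flags it."""
    return not any(rule(proxy) for rule in RULES)

def prune(candidates):
    """Drop flagged candidates before the (much costlier) ranking evaluation."""
    return [p for p in candidates if is_promising(p)]

candidates = [
    ("gradients", "abs", "weight", "square", "mul"),     # passes both rules
    ("gradients", "log", "softmax", "identity", "add"),  # log of a signed input
    ("head", "abs", "head", "abs", "sub"),               # identical branches cancel
]
kept = prune(candidates)
print(kept)  # [('gradients', 'abs', 'weight', 'square', 'mul')]
```

Since each rule inspects only the expression itself, the filter costs almost nothing compared with scoring a candidate on a set of architectures.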

🔧 How does LPZero compare to other automatic methods for proxy searching?

Compared to methods like AutoML-Zero, EZNAS, Auto-Prox, and EMQ, LPZero:

• Focuses specifically on large language models (up to 7B parameters)

• Doesn't require retraining, reducing computational costs

• Uses a novel rule-based pruning strategy

• Aims to find optimal symbolic equations for predicting LLM performance