The paper proposes, the Decoupled Clip and Dynamic sAmpling Policy Optimization (DAPO) algorithm, and fully open-source a state-of-the-art large-scale RL system

Problem and Motivation

Large Language Models often fail to maintain stable performance when fine-tuned with Reinforcement Learning methods for long chain-of-thought reasoning. DeepSeek-R1 introduced large-scale RL to boost reasoning but kept important details private, making replication difficult. DAPO tackles the same challenge with full openness. It closes the performance gap by introducing Decoupled Clip and Dynamic sAmpling Policy Optimization (DAPO). It achieves 50 points on the AIME 2024 math benchmark using the Qwen2.5-32B base model and outperforms the previous 32B DeepSeek-R1 results while requiring fewer training steps.

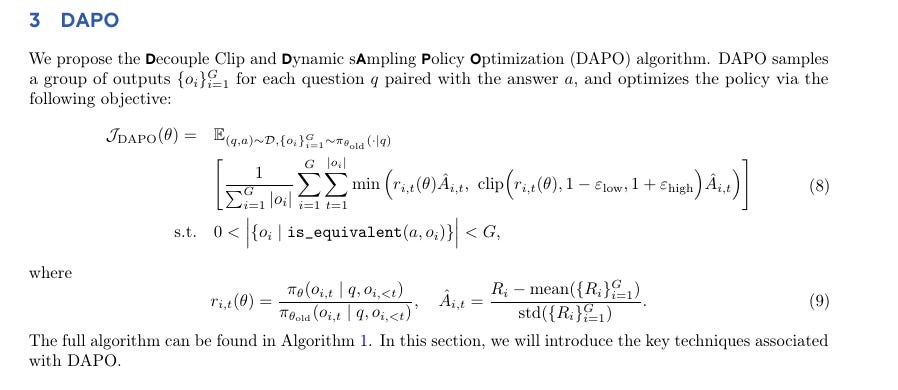

Core Algorithm

DAPO builds on GRPO (Group Relative Policy Optimization) but removes a KL divergence term that was unneeded. It also uses a rule-based reward: if the answer is correct, reward is 1; otherwise, reward is -1. The advantage function compares each sampled response against others. The policy is updated in smaller, safer steps to stabilize training.

Background on GRPO

GRPO (Group Relative Policy Optimization) is a reinforcement learning method that handles multiple responses (or “action samples”) per prompt. It calculates the advantage of each response relative to other responses sampled under the same prompt. The model then updates its parameters based on these advantage values, ensuring that high-reward responses become more probable and low-reward ones become less probable.

The KL Divergence Term

In some RL methods (e.g., PPO), a KL divergence term is added during optimization. The goal is to keep the updated model from drifting too far from a reference model. This prevents the policy from “over-updating” and becoming unstable.

Why DAPO Removes KL

DAPO inherits most of GRPO’s mechanics—sampling multiple responses, computing advantages, clipping updates—but removes the KL component. This is because DAPO targets long chain-of-thought reasoning, where the model’s output distribution often shifts significantly from the original (pretrained) distribution. Constraining it with KL divergence would hamper the model from exploring these bigger changes. DAPO lets the model adapt more freely without being forced to stay close to the original behavior.

Impact

Fewer Constraints on Exploration: Without KL, DAPO does not push the model back to a “safe zone” near the pretrained distribution.

Better for Long-Form Outputs: Long chain-of-thought solutions require tokens that might be unlikely under the original model. KL could suppress them and reduce reasoning improvements.

Maintains Stability Elsewhere: DAPO still uses clipping (through techniques like Clip-Higher) to keep updates controlled. This keeps training stable without a KL penalty.

Overall, DAPO builds on GRPO but drops the KL divergence to allow deeper updates in chain-of-thought RL, avoiding the reference-model tether and enabling more extensive exploration.

Four Key Techniques of DAPO

Clip-Higher

Traditional RL clipping sets a single clipping range around 1, for example [0.8, 1.2], to keep the new probability (p_new) close to the old probability (p_old). This prevents overly large updates.

Why standard clipping is restrictive

When the clip range is small (say 0.2), a low-probability token with p_old = 0.01 can only rise to p_new = 0.012. That may be too small. This holds back tokens that might become important but started with low probability. Those tokens stay suppressed, limiting the model’s exploration. Over time, the model overconfidently focuses on a narrow set of responses, called entropy collapse.What Clip-Higher changes

Clip-Higher separates the lower bound and upper bound. The lower bound remains near 1 - epsilon_low (for example 0.8), but the upper bound is set higher (for example 1 + epsilon_high = 1.28). This higher ceiling helps low-probability tokens climb further within a single training step.Why it matters

More exploration: Tokens once deemed improbable can now gain enough probability mass to appear more often.

Less collapse: The model avoids locking onto a few tokens. Entropy remains healthier, so the system explores more reasoning paths.

Better diversity: A richer token distribution emerges, leading to better coverage of potential solutions.

By decoupling the clip bounds, Clip-Higher prevents the model from stagnating on a narrow set of responses, allowing it to discover better reasoning paths and maintain diversity.

Dynamic Sampling

When every sampled response for a prompt is correct, the advantage for that prompt becomes zero. This stalls training for those samples. DAPO filters out these zero-signal samples by swapping them with new prompts until each batch contains prompts whose responses yield nonzero advantage. This maintains strong training signals without slowing throughput.Token-Level Policy Gradient Loss

11GRPO averages losses at the sample level. Longer responses get diluted weight, so their tokens are under-penalized or under-rewarded. DAPO aggregates over all tokens across the entire batch. This ensures each token’s contribution scales with its presence. Long high-quality reasoning gets reinforced more accurately, and long poor-quality outputs are penalized properly.

Overlong Reward Shaping

In RL, responses sometimes exceed a maximum token count set by the training system. The system chops off any excess tokens, so the response is “truncated.”

When a response is truncated, some training setups automatically assign it a “bad” reward. That negative signal may be misleading. The reasoning process might be correct but just too verbose. Penalizing it with a flat negative reward confuses the model.

Overlong Filtering

DAPO skips using these truncated samples when updating the model.

The model does not see an incorrect penalty for what could be a valid reasoning chain.

This avoids mixing “overly long” with “low-quality.”

Soft Overlong Punishment

DAPO applies a small penalty once the response length goes past a certain threshold, and the penalty grows if the output gets even longer.

The aim is to nudge the model to be concise without giving it a harsh, all-or-nothing punishment.

The model still keeps any correct logic it used so far, but sees an incentive to shorten future outputs.

This two-step method keeps training signals clear. Good reasoning is reinforced, while the model is gently encouraged to avoid excess length.

The Algorithm of DAPO

Input

πθ: The policy model we are currently training.

R: The reward function that assigns a score to each generated response.

D: The dataset (or collection of prompts) we use to query the model.

ε_low, ε_high: The two key hyperparameters that set the lower and upper clip range.

Main Loop (step = 1 to M)

Sample a batch Db from D

We pick a batch of prompts from our training set D. These are the questions (or tasks) for which the model will generate responses and receive rewards.

Update the old policy model πθ_old ← πθ

We make a copy of our current policy parameters so we can compare old probabilities to new ones during policy updates.

Sample G outputs {oi} for each question in Db using πθ_old

For every prompt q in this batch, we ask the old policy model to generate G different responses.

These multiple samples let us measure relative quality among responses for the same prompt.

Compute rewards {ri} for each output by running R

Each response gets a reward, for example 1 if the answer is correct, -1 if incorrect.

Other forms of reward can also be applied, as long as they map each response to a numeric score.

Filter out oi and add the remaining to the dynamic sampling buffer

Dynamic Sampling means we only keep prompts that do not yield all-correct or all-wrong outputs.

If all G responses for a prompt are correct, there is no training signal (the advantages become zero). If all are wrong, we often skip them for a similar reason.

We keep only prompts that have meaningful variation in correctness and store them in a buffer.

Check buffer size nb

If the buffer has fewer than N items (N is a chosen threshold), we skip the update for now.

This ensures each update sees enough training signals.

Compute advantages A_i,t for each token

For each response that survived filtering, we compare the reward of that response to the reward of other responses for the same prompt.

This advantage is token-level, meaning we assign each token’s share of credit for the overall reward.

Perform multiple gradient updates

We loop over a small number of gradient-update steps (μ).

In each update, we maximize the DAPO objective, which applies clipping (with ε_low and ε_high) at each token.

This step nudges the policy toward higher-reward responses and away from lower-reward responses.

The key difference vs. older algorithms is the decoupled clip range and the token-level averaging, plus dynamic sampling.

Output

The final trained policy πθ after M iterations.

It has learned to produce more correct or higher-reward outputs by repeatedly sampling, comparing, and updating based on these feedback signals.

Dataset and Training

The team curated DAPO-Math-17K, a math dataset with integer-answer problems to simplify rule-based scoring. Qwen2.5-32B was used as the base model. The RL training starts from that checkpoint, applies DAPO, and samples multiple responses per prompt. Each step updates the model by maximizing the difference in reward between good and bad responses.

Results

Accuracy on the AIME 2024 benchmark climbed from 30 points under naive GRPO to 50 points under DAPO. Dynamic Sampling, Clip-Higher, Overlong Reward Shaping, and Token-Level Loss each contributed to closing the gap. DAPO needed roughly half the number of training steps compared to the previous 32B DeepSeek-R1.

Observations on Reasoning Behaviors

During training, the model began to reflect on its reasoning and revise steps mid-solution, a behavior absent initially. This emergence suggests DAPO fosters exploration of more complex reasoning paths.

DAPO’s codebase and dataset are fully open-sourced, enabling reproducibility of large-scale RL training for advanced reasoning tasks. These techniques stabilize training, preserve exploration, and encourage correct long-form reasoning in LLMs.