🧪 Meta proposes a great idea - the Rule of Two for agent security, pick only 2 of 3 powers

Meta's agent security rule, AWS’s massive AI cluster, Ilya reveals OpenAI-Anthropic merger talks, a $420B AI capex wave, and IBM's agent-optimized Granite 4.0 Nano.

Read time: 9 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (3-Nov-2025):

🧪 Meta proposes a great idea - the Rule of Two for agent security, pick only 2 of 3 powers.

🏆 AWS activates Project Rainier: One of the world’s largest AI compute clusters comes online.

🔥 OpenAI’s Board Had Considered Merging With Anthropic After Sam Altman’s Ouster In 2023: Ilya Sutskever

💼 Big Tech is committing $420B in AI capex next year and OpenAI has lined up roughly $1.4T in multi-year infrastructure commitments.

👨🔧 IBM dropped Granite 4.0 Nano, a brand-new family of models built specifically with agents in mind

🧪 Meta proposes a great idea - the Rule of Two for agent security, pick only 2 of 3 powers.

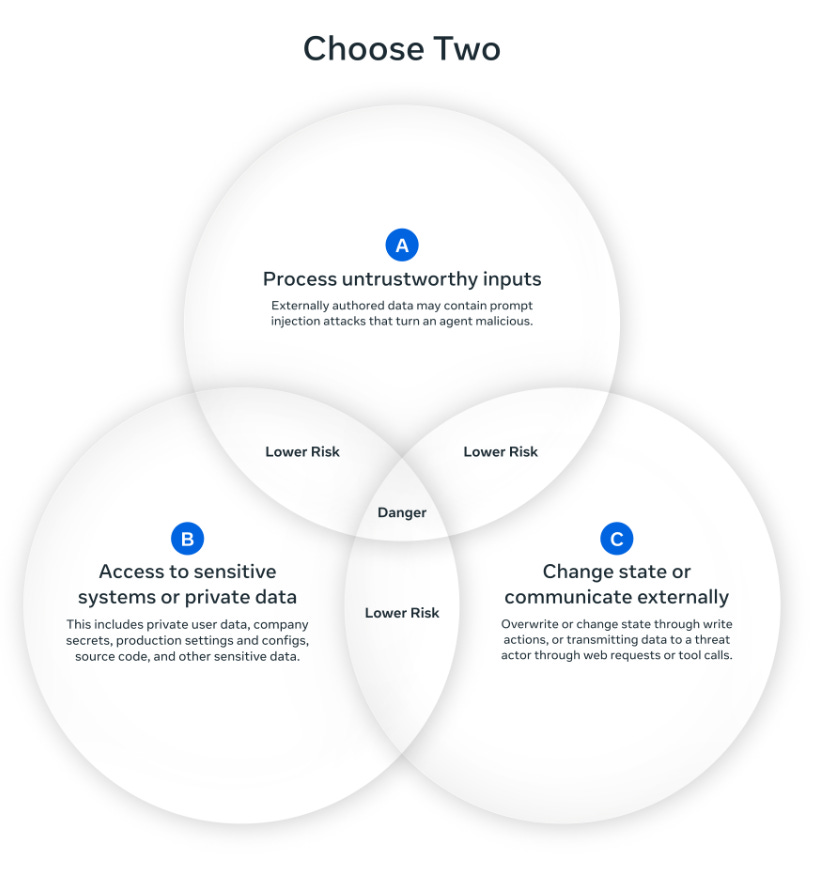

Meta’s new research says an agent should hold only 2 of 3 powers at once: (A) handling untrusted input, (B) accessing private data, or (C) changing state and talking to outside systems.

This helps stop prompt injection, where malicious text tricks the agent into breaking its rules or leaking data. If an agent needs all 3 powers, it should restart or ask a human before acting.

The idea is simple: you can’t let one session read unsafe input, see private data, and send data out all at once. For example, a travel bot can read messages and see user data but must ask before booking tickets, while a research bot can browse the web safely if it doesn’t have access to private files.

A new study tested 12 security defenses and found that adaptive attackers reached success rates above 90% against most of them, while human red-teamers reached 100%, which suggests most filters don’t hold up when tested hard against prompt injection. Prompt injection means text from outside sources can override the agent’s instructions, so once an agent reads hostile content it may leak data or take actions it was never supposed to take.

This “choose 2” framing cuts the classic exfiltration chain because an attacker cannot drive A → B → C in one sweep without hitting a deliberate checkpoint that stops the leak or the action. In other words, the agent can’t be tricked into reading bad input, grabbing private data, and sending it out in one go.

The rule forces a pause or human check before that full chain happens. A travel assistant can safely run A+B by forcing human confirmation on purchases and outbound messages so C is gated, while a research browser can run A+C inside a sandbox with no sensitive cookies so B is off, and an internal coding agent can run B+C by strictly filtering what untrusted inputs ever reach its context so A is blocked.

The attackers in the new study do not rely on one magic sentence. They iterate with gradient-style tweaks, reinforcement-learning-style trial and error, and search that generates and rates many candidates until the defense cracks. Because tool wiring is getting standardized, for example via Model Context Protocol, it becomes even more important to declare and enforce a session’s A/B/C policy at the tool boundary so new connectors do not silently grant C when A and B are already on. The practical takeaway: treat filters as best-effort and design agent sessions so at most 2 powers are available at once, with human review or policy checks whenever a flow would cross into the third.
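To make the tool-boundary idea concrete, here is a minimal Python sketch of one way a session could enforce the rule. It is an illustration only, not Meta’s implementation and not part of Model Context Protocol: the `Power`, `Session`, `use_tool`, and `approve` names are hypothetical, and each tool is assumed to declare up front which powers it needs.

```python
from dataclasses import dataclass
from enum import Flag, auto
from typing import Callable


class Power(Flag):
    """The three powers in the Rule of Two (hypothetical labels for this sketch)."""
    UNTRUSTED_INPUT = auto()   # A: handles untrusted input
    PRIVATE_DATA = auto()      # B: accesses private data
    EXTERNAL_ACTION = auto()   # C: changes state or talks to outside systems


ALL_POWERS = Power.UNTRUSTED_INPUT | Power.PRIVATE_DATA | Power.EXTERNAL_ACTION


def deny_by_default(tool_name: str) -> bool:
    """Stand-in for a human reviewer or policy check; refuses everything."""
    return False


@dataclass
class Session:
    approve: Callable[[str], bool] = deny_by_default
    active: Power = Power(0)   # powers this session has exercised so far

    def use_tool(self, tool_name: str, needs: Power) -> bool:
        """Allow the call unless it would put all three powers in one session.

        When all three would be in play, the call only goes through if the
        approval callback says yes. Once that has happened, every later call
        in the session is also gated, which is a deliberately conservative choice.
        """
        combined = self.active | needs
        if combined == ALL_POWERS and not self.approve(tool_name):
            return False
        self.active = combined
        return True


# Travel-assistant flow: A and B run freely, C is stopped at the checkpoint.
session = Session()
print(session.use_tool("read_inbox", Power.UNTRUSTED_INPUT))
print(session.use_tool("load_profile", Power.PRIVATE_DATA))
print(session.use_tool("book_flight", Power.EXTERNAL_ACTION))
```

Swapping `deny_by_default` for a callback that prompts the user turns the hard stop into the human confirmation step described above. A real deployment would also need a trustworthy way to label each tool’s powers, which this sketch simply assumes.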

🏆 AWS activates Project Rainier: One of the world’s largest AI compute clusters comes online.

AWS connected multiple US data centers into one UltraCluster so Anthropic can train larger Claude models and handle longer context and heavier workloads without slowing down. Each Trn2 UltraServer links 64 Trainium2 chips through NeuronLink inside the node, and EFA networking connects those nodes across buildings, cutting latency and keeping the cluster flexible for massive scaling.

Trainium2 is optimized for matrix and tensor math with HBM3 memory, giving it extremely high bandwidth so huge batches and long sequences can be processed without waiting for data transfer. The UltraServers act as powerful single compute units inside racks, while the UltraCluster spreads training across tens of thousands of these servers, using parallel processing to handle giant models efficiently.

AWS says Project Rainier is its largest training platform ever, delivering more than 5x the compute Anthropic used before, allowing faster model training and easier large-scale experiments. On sustainability, AWS reports a water usage effectiveness (WUE) of 0.15 L/kWh, says it matches 100% of its electricity use with renewable energy, and is adding nuclear and battery investments to keep growing while staying within its 2040 net-zero goal.

🔥 OpenAI’s Board Had Considered Merging With Anthropic After Sam Altman’s Ouster In 2023: Ilya Sutskever

Sutskever’s testimony centers on a 52-page memo alleging a pattern of lying and internal manipulation by Altman. The talks unfolded during a 5-day meltdown when nearly 700 employees threatened to quit unless Altman returned, which pushed the board toward a reversal.

Sutskever later posted that he deeply regretted his role in the ouster. Mira Murati briefly served as interim CEO in Nov-23, then left OpenAI in Sept-24 and went on to found a new company, Thinking Machines Lab.

The board’s original public rationale was that Altman was not consistently candid with directors. OpenAI’s unusual governance structure, a nonprofit board supervising a capped-profit operator, made breakdowns in CEO-board trust especially combustible.

💼 Big Tech is committing $420B in AI capex next year and OpenAI has lined up roughly $1.4T in multi-year infrastructure commitments.

He said large companies are betting on massive job replacement because that is where the money is. Some of the signals match the claim: Amazon is cutting 14,000 corporate roles, and Andy Jassy had already told staff in June that AI would reduce the corporate workforce over the next few years.

Entry-level funnels are thinning, with UK postings down 32% since ChatGPT launched and US analyses showing 35%+ fewer entry-level openings versus early 2023. The economic story is straightforward: when output per worker jumps faster than demand or new roles, firms substitute automation to protect margins, so profits improve only if headcount or labor hours fall.
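A toy calculation shows why that follows. The sketch below uses the identity that labor hours needed equal output demanded divided by output per hour. Every number in it is made up for illustration (10% demand growth, 30% productivity growth) and none comes from the reports above.

```python
def labor_hours_needed(output_demand: float, output_per_hour: float) -> float:
    """Hours of work required to meet a given level of demand."""
    return output_demand / output_per_hour


# Hypothetical baseline: 1,000 units of demand at 10 units per worker-hour.
baseline_hours = labor_hours_needed(1_000, 10)

# Hypothetical scenario: demand grows 10%, productivity grows 30%.
new_hours = labor_hours_needed(1_000 * 1.10, 10 * 1.30)

change = new_hours / baseline_hours - 1
print(f"baseline hours: {baseline_hours:.1f}")
print(f"new hours:      {new_hours:.1f}")
print(f"change:         {change:.1%}")
```

Whenever productivity grows faster than demand, the ratio falls below 1 and required labor hours shrink, which is the substitution pressure described above. If demand grew faster instead, the same identity would call for more hours.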

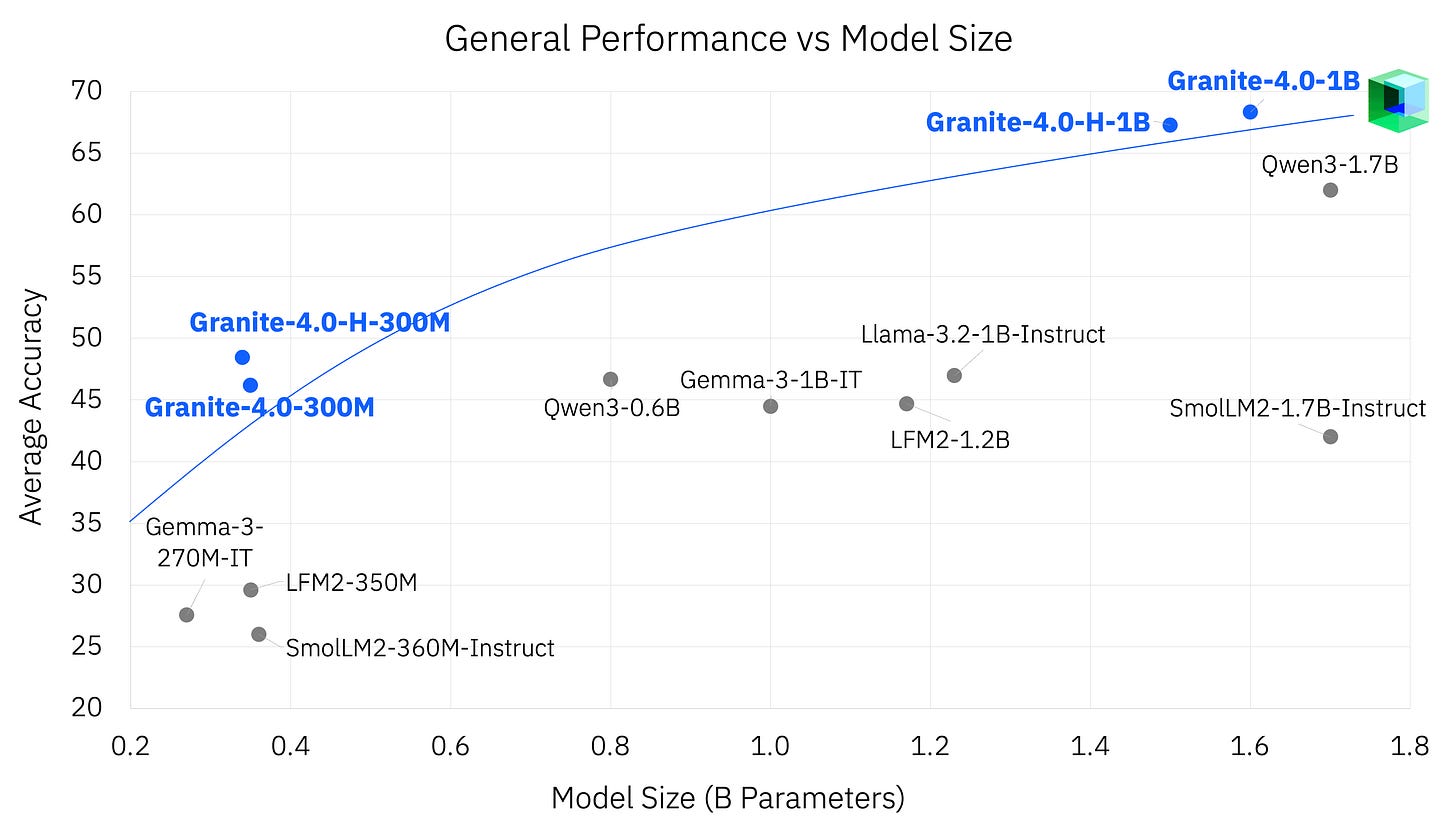

👨🔧 IBM dropped Granite 4.0 Nano, a brand-new family of models built specifically with agents in mind

These models are designed to be highly accessible: the 350M variants can run comfortably on a modern laptop CPU with 8–16GB of RAM, while the 1.5B models typically require a GPU with at least 6–8GB of VRAM for smooth performance, or sufficient system RAM and swap for CPU-only inference. This makes them well-suited for developers building applications on consumer hardware or at the edge, without relying on cloud compute.
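For a rough sense of where those hardware numbers come from, the back-of-the-envelope sketch below estimates memory for the model weights alone as parameter count times bytes per parameter. The parameter counts are taken from the model names (350M and 1.5B) and treated as approximate. The estimate deliberately ignores activations, cached state, and runtime overhead, which is one reason practical requirements like those quoted above sit well above these figures.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Approximate sizes from the model names; precisions are common choices, not IBM's stated defaults.
models = {"350M": 350e6, "1.5B": 1.5e9}
precisions = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

for model_name, params in models.items():
    for precision_name, nbytes in precisions.items():
        gb = weight_memory_gb(params, nbytes)
        print(f"{model_name} at {precision_name}: ~{gb:.2f} GB of weights")
```

At 16-bit precision this gives roughly 0.7 GB for the 350M size and 3 GB for the 1.5B size, so quantizing to 8-bit or 4-bit is the usual lever when memory is tight.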

Hybrid Mamba-2/Transformer design

70% lower memory use

2x faster inference

Better at handling long sessions and large contexts

State-of-the-art on instruction following and tool use

Edge-ready

Apache 2.0 license

The real standout here is how well these models do in instruction following and tool calling, which are essential for agentic systems. Everyone’s now pushing to make smaller models powerful enough for edge devices and multi-agent setups, and IBM just moved the bar higher.

What’s especially wild is the performance jump in the sub-400M range. Models that small usually lag way behind, but Granite 4.0 Nano’s smallest versions are surprisingly accurate. That gap between <400M and <1.8B models is closing fast, and the next wave of improvements will probably come from smarter architectural tweaks or optimization tricks.

That’s a wrap for today, see you all tomorrow.

I'd say that qualifies as a proof of life at Meta! Been a long summer, looking forward to something this Fall to put the team back on the map.

The Rule of Two is elegant in its simplicity, especially considering the 90%+ attack success rate against traditional defenses. What's interesting is how this forces developers to think about agent architecture from the ground up rather than bolting on security filters after the fact. The travel bot example is particularly useful because it shows where the human checkpoint naturally fits without breaking user experience. This feels like it could become industry standard once Model Context Protocol adoption accelerates.