🧠 Meta published the first real research on scaling reinforcement learning with LLMs

Scaling RL compute for LLMs, Baidu's new PaddleOCR-VL-0.9B for document parsing, and a Keras guide on speeding up models with quantized low precision.

Read time: 9 min


(I publish this newsletter daily: noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (17-Oct-2025):

🧠 Meta published the first real research on scaling reinforcement learning with LLMs

📢 In a new report, Goldman Sachs says Don’t fear the AI bubble, it’s about to unlock an $8 trillion opportunity

📚 Baidu just launched and open-sourced a BRILLIANT model for document parsing - PaddleOCR-VL-0.9B.

🛠️ Tutorial: Run your models faster with quantized low precision in Keras!

🧠 Meta published the first real research on scaling reinforcement learning with LLMs

A really important paper just dropped from AI at Meta.

This is probably the first real research on scaling reinforcement learning with LLMs. It makes RL training for LLMs predictable by modeling compute versus pass rate with a saturating sigmoid.

A single run of 100,000 GPU-hours closely matched the curve fitted early in training, which shows the method can forecast progress. The framework has 2 parts: a ceiling the model approaches, and an efficiency term that controls how fast it gets there.

Fitting those 2 quantities early lets small pilot runs predict large-scale training results without paying for a full run. The strongest setup, called ScaleRL, is a recipe of training and control choices.
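A minimal sketch of the "fit early, extrapolate late" idea, assuming the sigmoid form R(C) = R0 + (A - R0) / (1 + (C_mid / C)^B) with a known starting pass rate R0. The grid search over B and C_mid (with A solved in closed form), the function names, and the synthetic pilot data are our own illustration, not the paper's fitting procedure or numbers.

```python
def sigmoid_curve(c, a, b, c_mid, r0):
    """Pass rate at compute c: R0 + (A - R0) / (1 + (C_mid / c) ** B)."""
    return r0 + (a - r0) / (1.0 + (c_mid / c) ** b)


def fit_sigmoid(compute, pass_rate, r0):
    """Fit A, B, C_mid by grid search over (B, C_mid) plus least squares for A.

    For fixed B and C_mid the model is linear in the gain (A - R0),
    so that gain has a closed-form least-squares solution.
    """
    gains = [r - r0 for r in pass_rate]
    best = None
    for b_step in range(50, 301):          # B in [0.50, 3.00]
        b = b_step / 100
        for k in range(200, 501):          # C_mid in [1e2, 1e5] GPU-hours, log-spaced
            c_mid = 10 ** (k / 100)
            g = [1.0 / (1.0 + (c_mid / c) ** b) for c in compute]
            gain = sum(gi * yi for gi, yi in zip(g, gains)) / sum(gi * gi for gi in g)
            sse = sum((yi - gain * gi) ** 2 for gi, yi in zip(g, gains))
            if best is None or sse < best[0]:
                best = (sse, r0 + gain, b, c_mid)
    _, a, b, c_mid = best
    return a, b, c_mid


# Synthetic pilot data generated from known parameters (A=0.6, B=1.5, C_mid=2000).
# These are illustrative numbers, not results from the paper.
R0 = 0.1
pilot_compute = [100, 200, 400, 800, 1600, 3200]  # GPU-hours
pilot_pass = [sigmoid_curve(c, 0.6, 1.5, 2000, R0) for c in pilot_compute]

a, b, c_mid = fit_sigmoid(pilot_compute, pilot_pass, R0)
print(f"fitted A={a:.3f}, B={b:.2f}, C_mid={c_mid:.0f} GPU-hours")
print(f"forecast at 100,000 GPU-hours: {sigmoid_curve(100_000, a, b, c_mid, R0):.3f}")
```

Fitting on pilot runs up to 3,200 GPU-hours and reading the curve off at 100,000 mirrors the kind of extrapolation described above. With noisy real measurements you would want more pilot points and a proper nonlinear optimizer rather than a coarse grid.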

ScaleRL combines PipelineRL streaming updates, the CISPO loss, FP32 precision at the final (logit) layer, prompt-level loss averaging, batch-level advantage normalization, zero-variance filtering, and no-positive-resampling. The results suggest raising the ceiling first, with larger models or longer reasoning budgets, and then tuning batch size to improve efficiency.
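Three of those ingredients are easy to picture in code. Below is a minimal stdlib sketch of how we understand them: zero-variance filtering drops prompts whose sampled completions all earned the same reward (their group-relative advantage is zero, so they add no gradient signal), batch-level advantage normalization divides per-prompt-centered rewards by the standard deviation of the whole batch rather than per prompt, and no-positive-resampling retires prompts the policy already solves. The function names and the 0.9 pass-rate threshold are our assumptions, not code from the paper.

```python
import statistics


def zero_variance_filter(rewards_by_prompt):
    """Keep only prompts whose completions received different rewards.

    rewards_by_prompt: dict mapping prompt id -> list of rewards (e.g. 0/1 pass/fail).
    """
    return {p: r for p, r in rewards_by_prompt.items() if len(set(r)) > 1}


def batch_normalized_advantages(rewards_by_prompt):
    """Center rewards per prompt, then scale by the std of the whole batch."""
    centered = {}
    for prompt, rewards in rewards_by_prompt.items():
        mean = statistics.fmean(rewards)
        centered[prompt] = [r - mean for r in rewards]
    flat = [adv for advs in centered.values() for adv in advs]
    std = statistics.pstdev(flat) if len(flat) > 1 else 0.0
    std = std if std > 0 else 1.0  # avoid dividing by zero
    return {p: [adv / std for adv in advs] for p, advs in centered.items()}


def drop_solved_prompts(prompt_pool, pass_rates, threshold=0.9):
    """Hypothetical no-positive-resampling step: retire prompts at or above threshold."""
    return [p for p in prompt_pool if pass_rates.get(p, 0.0) < threshold]


batch = {
    "p1": [1, 1, 1, 1],  # always solved -> zero variance, filtered out
    "p2": [0, 0, 0, 0],  # never solved  -> zero variance, filtered out
    "p3": [1, 0, 1, 0],
    "p4": [1, 0, 0, 0],
}
advantages = batch_normalized_advantages(zero_variance_filter(batch))
print(sorted(advantages))  # ['p3', 'p4']
print(drop_solved_prompts(["p1", "p3", "p4"], {"p1": 1.0, "p3": 0.5, "p4": 0.25}))  # ['p3', 'p4']
```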

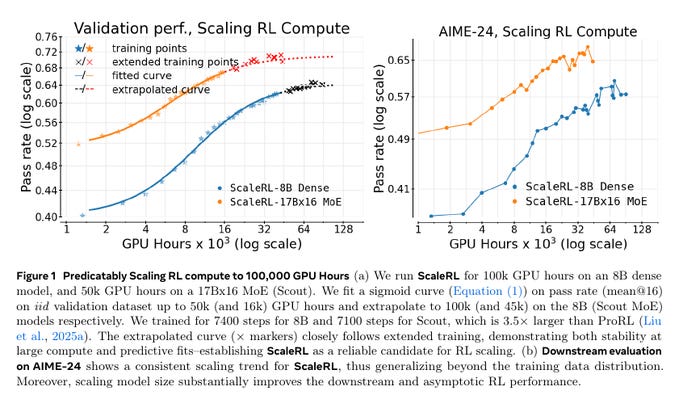

How the ScaleRL method predicts and tracks reinforcement learning performance as compute increases.

The left graph shows that as GPU hours go up, the pass rate improves along a smooth curve that rises quickly and then slows down. Both the 8B dense model and the 17B×16 mixture-of-experts model follow the same kind of curve, which means a model's progress can be predicted early without needing full-scale runs.

The right graph shows that this predictable scaling also holds on AIME-24, a held-out benchmark. That means ScaleRL's performance trend is not just tied to the training data but generalizes to unseen problems too. Together, the figures suggest that ScaleRL's behavior can be extrapolated to long runs of up to 100,000 GPU-hours, because its early scaling pattern stays stable as compute grows.

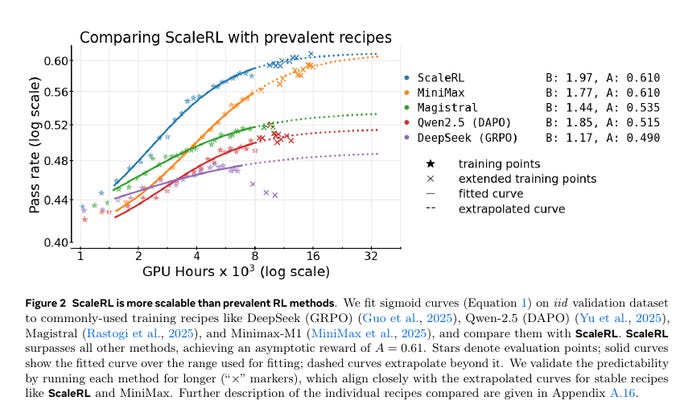

This figure compares ScaleRL with other popular reinforcement learning recipes used for training language models.

Each line shows how performance improves as more GPU hours are used. ScaleRL’s curve keeps rising higher and flattens later than the others, which means it continues to learn efficiently even with large compute.

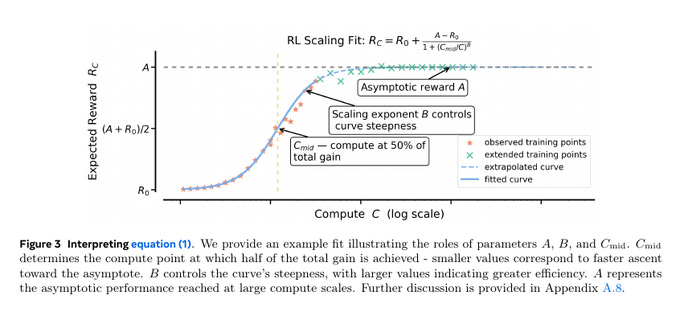

How the paper mathematically models reinforcement learning performance as compute increases.

The curve starts low, rises sharply, then levels off at a maximum value called the asymptotic reward A. The point called Cmid marks where the system reaches half of its total gain. Smaller Cmid means the model learns faster and reaches good performance sooner.
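Written out, the saturating curve described above takes the following form, as we read it from the paper. Here $R_0$ is the pass rate at the start of training, $A$ the asymptotic reward, $C_{\mathrm{mid}}$ the midpoint compute, and $B$ an exponent that sets how steeply the curve rises:

$$
R_C = R_0 + \frac{A - R_0}{1 + \left( C_{\mathrm{mid}} / C \right)^{B}}
$$

Plugging in $C = C_{\mathrm{mid}}$ makes the denominator equal to 2, so $R_C = R_0 + \tfrac{1}{2}(A - R_0)$: exactly half of the total gain. That is why a smaller $C_{\mathrm{mid}}$ means reaching good performance with less compute, while $A$ alone sets the ceiling.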

📢 In a new report, Goldman Sachs says Don’t fear the AI bubble, it’s about to unlock an $8 trillion opportunity

They argue that current AI investment levels are sustainable despite bubble concerns, even though it is not yet clear who the biggest winners will be. They value the potential capital revenue unlocked by AI-driven productivity gains at about $8 trillion, with estimates ranging from $5 trillion to $19 trillion. They add that the S&P 500’s market cap of $671.94 billion shows there’s still a lot of room for AI-driven growth.

Their reasoning:

AI is driving productivity higher, but capturing those gains takes a lot more compute as models keep scaling.

AI investment in the U.S. is still under 1% of GDP, much lower than the 2–5% reached in past major tech cycles.

The only possible hiccup, Goldman wrote, was that investors are betting heavily on companies early in the process. First movers are not always the ultimate winners in battles like this.

“The current AI market structure provides little clarity into whether today’s AI leaders will be long-run AI winners,” Goldman analysts wrote. According to Goldman Sachs analysts’ estimates, current AI-related investment in the U.S. is still less than 1% of GDP, whereas during previous technological waves (including railway expansion, the electrification surge of the 1920s, and the internet era of the late 1990s), investment typically accounted for 2% to 5% of GDP.

Goldman Sachs analysts wrote: “In infrastructure development, ‘first movers’ may underperform, as seen in examples like railways and telecommunications. In many cases, late entrants have achieved better returns by acquiring assets at lower costs.”

This scenario may repeat itself in the AI era.

They wrote: “First-mover advantages are more pronounced when complementary assets (such as semiconductors) are scarce and production is vertically integrated, meaning today’s leaders may perform well, but during periods of rapid technological change like the present, these advantages tend to diminish.”

Goldman Sachs’ baseline scenario assumes a 15% productivity uplift from gen AI and a gradual adoption curve through 2045. In industries where AI is heavily used, like tech, finance, and manufacturing, 41% of total economic output comes from capital rather than labor.

📚 Baidu just launched and open-sourced a BRILLIANT model for document parsing - PaddleOCR-VL-0.9B.

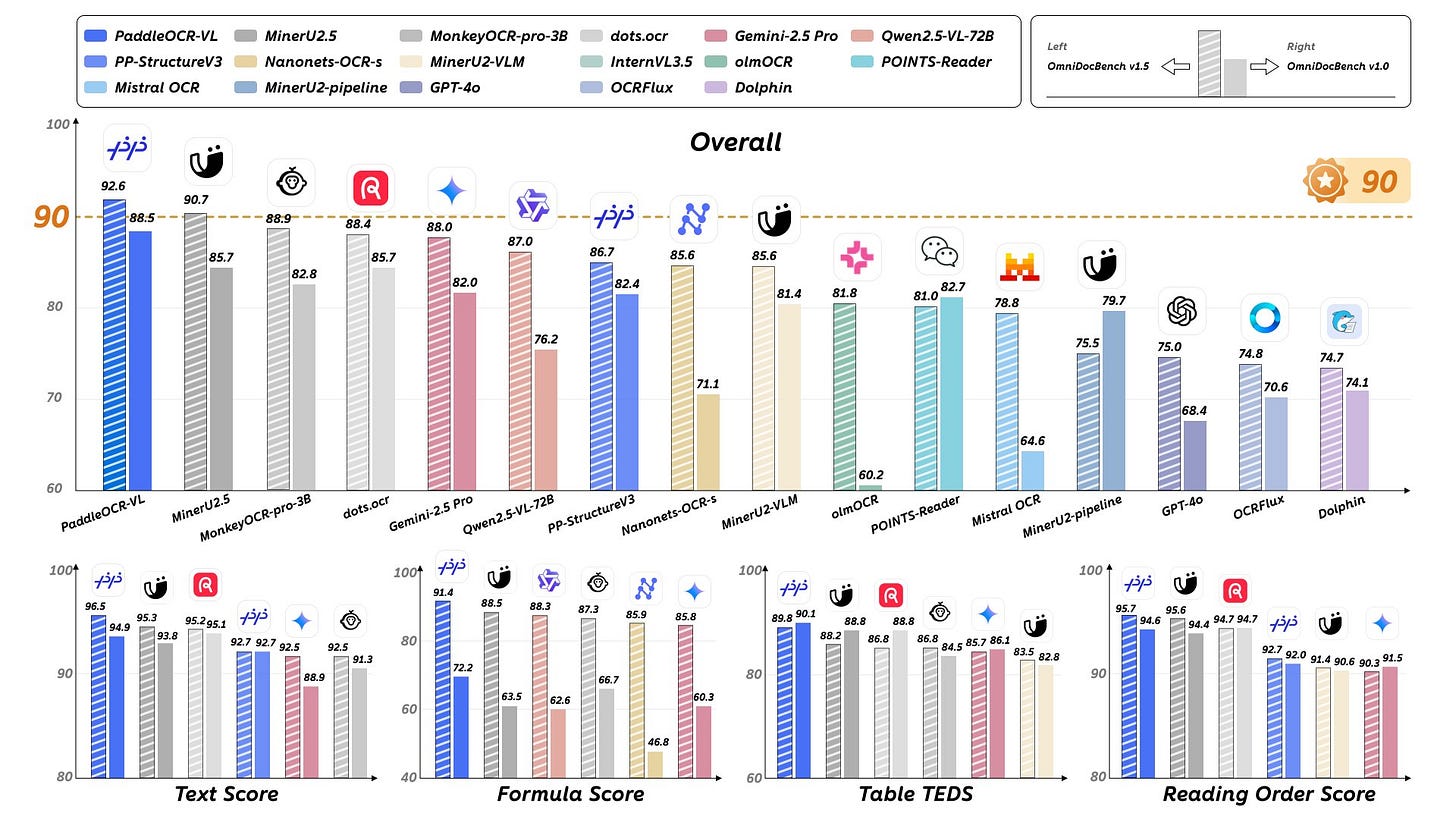

It ranks #1 on OmniDocBench v1.5 with a score of 90.67, beating GPT-4o, Gemini 2.5 Pro, and Qwen2.5-VL-72B, as well as models like InternVL 1.5, MonkeyOCR-Pro-3B, and Dots.OCR, while staying incredibly compact.

By pairing a vision encoder with the ERNIE-4.5-0.3B language model, it handles complex layouts in 109 languages with high precision.

Lightweight, highly accurate, and open source, it sets a new benchmark for multimodal document intelligence.

A NaViT-style dynamic high-resolution vision encoder pairs with ERNIE-4.5-0.3B as the decoder, which boosts recognition while keeping compute low.
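To make "dynamic high-resolution" concrete: a NaViT-style encoder splits an image into patches close to its native size and aspect ratio instead of squashing every page into a fixed square. The simplified sketch below computes such a patch grid under a token budget and compares it with a fixed 224x224 resize. The 14-pixel patch size, the 4,096-patch cap, and the function names are hypothetical values for illustration; PaddleOCR-VL's actual preprocessing may differ.

```python
import math


def native_resolution_grid(height, width, patch=14, max_patches=4096):
    """Return (rows, cols) of patches, preserving aspect ratio under a patch budget.

    patch and max_patches are hypothetical defaults, for illustration only.
    """
    # Downscale only when the native-resolution grid would exceed the budget.
    scale = min(1.0, math.sqrt(max_patches * patch * patch / (height * width)))
    rows = max(1, math.floor(height * scale / patch))
    cols = max(1, math.floor(width * scale / patch))
    return rows, cols


def fixed_square_grid(size=224, patch=14):
    """A fixed-resolution encoder resizes every page to size x size first."""
    return size // patch, size // patch


page = (2339, 1654)  # height x width in pixels, roughly an A4 page at 200 DPI
rows, cols = native_resolution_grid(*page)
print("native-resolution grid:", rows, "x", cols, "=", rows * cols, "patches")
print("fixed 224x224 grid:", fixed_square_grid())
```

The fixed grid spends the same small number of patches on every page and distorts a tall page into a square, which hurts small text; the native-resolution grid keeps the page's shape and lets the token count grow with content up to the budget.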

The small size and fast inference make it practical for batch processing on modest GPUs and for on-prem deployments where data cannot leave the site.

Overall this release is lightweight, accurate, multilingual, and open source, and it raises the bar for everyday document intelligence.

With 57K GitHub stars and deep integration into leading projects like MinerU, RAGFlow, and OmniParser, PaddleOCR has become the premier solution for developers building intelligent document applications in the AI era.

🛠️ Tutorial: Run your models faster with quantized low precision in Keras!

Keras has published its official guide, “Quantization in Keras.”

Why this matters

Most LLM inference, especially token-by-token generation, is bottlenecked by memory movement rather than math. Each weight is stored as bytes and must travel from GPU memory to the compute units on every forward pass. A weight stored as a 32-bit float takes 4 bytes, as an 8-bit integer 1 byte, and as a 4-bit integer 0.5 bytes. Fewer bytes moved means less waiting, so throughput rises and VRAM usage drops. That is what quantization does.
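The byte arithmetic above turns into a quick back-of-the-envelope estimate. This sketch assumes a hypothetical 7B-parameter model and 1,000 GB/s of memory bandwidth, and assumes batch-size-1 decoding where every weight is read once per generated token (ignoring the KV cache, activations, and compute time), so the result is only an upper bound on tokens per second.

```python
BYTES_PER_WEIGHT = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gigabytes(num_params, dtype):
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_WEIGHT[dtype] / 1e9


def max_decode_tokens_per_second(num_params, dtype, bandwidth_gb_per_s):
    """Upper bound for batch-1 decoding: each token streams all weights once.

    Ignores KV-cache reads, activations, and compute time (simplifying assumptions).
    """
    return bandwidth_gb_per_s / weight_gigabytes(num_params, dtype)


for dtype in ("float32", "bfloat16", "int8", "int4"):
    gb = weight_gigabytes(7e9, dtype)
    tps = max_decode_tokens_per_second(7e9, dtype, 1000)
    print(f"{dtype:>8}: {gb:5.1f} GB of weights, <= {tps:6.1f} tokens/s")
```

Going from float32 to int4 cuts the weight bytes by 8x, so under these assumptions the bandwidth-bound ceiling on decode speed rises by the same factor. Real speedups are smaller because dequantization, the KV cache, and kernel availability all take their share.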

What Keras gives you

Keras adds post-training quantization with a single call on a model or on any layer. You choose a mode, and Keras rewrites the graph, swaps in quantized weights, and keeps all metadata so you can run or save immediately. It works on JAX, TensorFlow, and PyTorch backends.

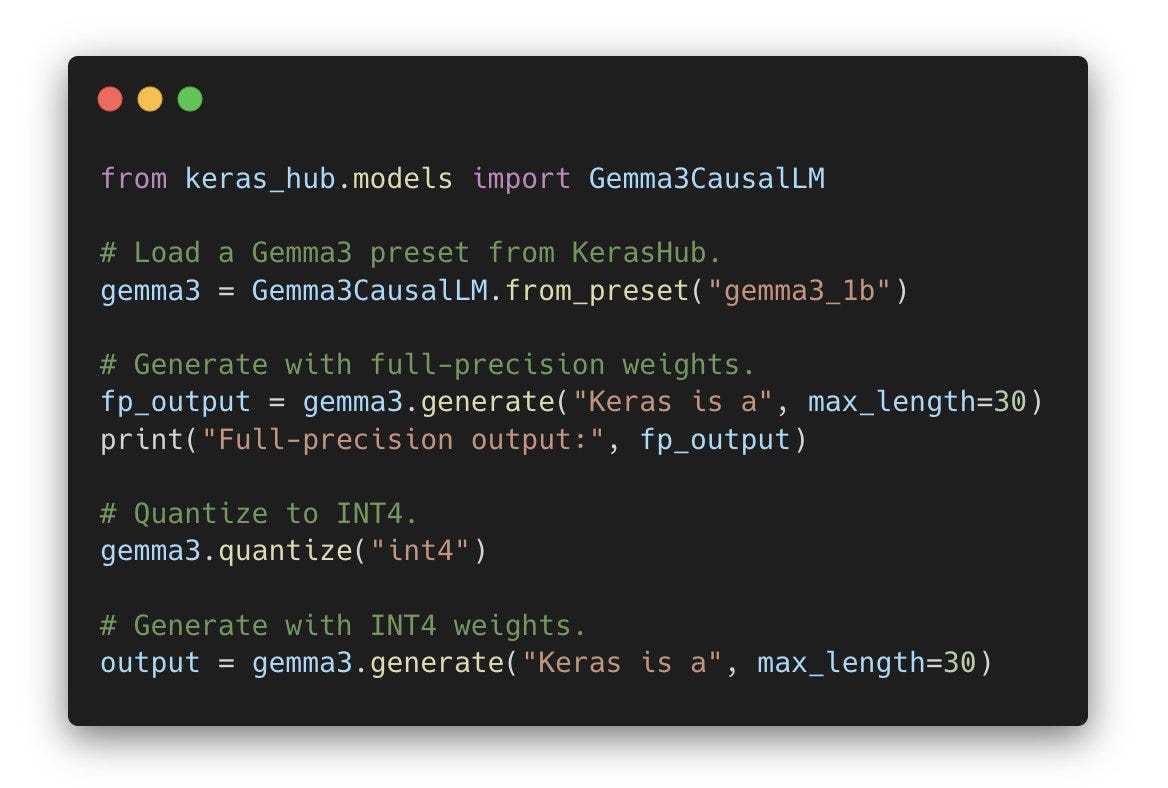

You learn post-training quantization with int8, float8, int4, and GPTQ. The tutorial explains why inference is memory-bound and how fewer bits per weight cut the bytes moved, which raises effective throughput. You call `model.quantize("<mode>")` or `layer.quantize("<mode>")`, then run or save the model.

It covers per-channel scales, AbsMax calibration for activations, and 4-bit packing for int4 weights. You learn when to quantize whole models and when to mix precisions per layer to protect sensitive blocks like residual paths or logits.
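To see what per-channel scales, AbsMax scaling, and 4-bit packing mean mechanically, here is a plain-Python sketch. It is not Keras's internal implementation, just the same ideas applied to a small weight matrix stored as nested lists: one symmetric scale per output column (the column's absolute maximum divided by the largest representable integer), and two signed 4-bit values packed into each byte. The function names are our own.

```python
def quantize_per_channel(weights, bits=8):
    """Symmetric AbsMax quantization with one scale per output channel (column)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for int8, 7 for int4
    rows, cols = len(weights), len(weights[0])
    q = [[0] * cols for _ in range(rows)]
    scales = []
    for j in range(cols):
        absmax = max(abs(weights[i][j]) for i in range(rows))
        scale = absmax / qmax if absmax > 0 else 1.0
        scales.append(scale)
        for i in range(rows):
            q[i][j] = max(-qmax, min(qmax, round(weights[i][j] / scale)))
    return q, scales


def dequantize(q, scales):
    """Recover approximate float weights: each value times its column's scale."""
    return [[v * scales[j] for j, v in enumerate(row)] for row in q]


def pack_int4(values):
    """Pack signed 4-bit ints (-8..7) two per byte, low nibble first."""
    if len(values) % 2:
        values = values + [0]  # pad to an even count
    return bytes((lo & 0x0F) | ((hi & 0x0F) << 4)
                 for lo, hi in zip(values[0::2], values[1::2]))


def unpack_int4(packed, count):
    """Inverse of pack_int4: sign-extend each nibble back to a Python int."""
    out = []
    for byte in packed:
        for nibble in (byte & 0x0F, byte >> 4):
            out.append(nibble - 16 if nibble > 7 else nibble)
    return out[:count]


w = [[0.5, -1.0], [-0.25, 2.0], [1.0, 0.1]]  # toy 3x2 weight matrix
q4, s4 = quantize_per_channel(w, bits=4)
flat = [v for row in q4 for v in row]
print(q4, s4)
print(len(pack_int4(flat)), "bytes for", len(flat), "int4 weights")
```

Per-channel scales matter because one column with a large outlier (like the 2.0 here) would otherwise force a coarse scale onto every other column; giving each output channel its own scale keeps rounding error proportional to that channel's own range.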

It also covers which layers are supported: Dense, Embedding, attention blocks, and KerasHub LLM presets. The example walks through quantizing a Gemma3 causal LM to int4 and comparing outputs before and after. The workflows run on JAX, TensorFlow, and PyTorch backends, with speedups where low-precision kernels exist.

That’s a wrap for today, see you all tomorrow.