Meta releases V-JEPA 2: 30x faster physics-aware AI model for real-world Robotics

Hollywood’s AI lawsuit, Altman’s Stargate moonshot, Cosine’s Genie, Codex-to-Grok cost cuts, BrightEdge referrals, Projects updates, Wikipedia pause and AI ad shaving weeks to hours

Read time: 9 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (12-Jun-2025):

🎢 Meta's new AI V-JEPA 2 learns real-world physics from 1M hours of video

👨🏻⚖️ Hollywood finally sues over AI model training on copyrighted characters, files 110-page lawsuit

📡Sam Altman’s $500-Billion Moonshot: Why “Stargate” Could Redefine the Physics of AI

🛠️ Stop babysitting IDEs; Get ready for the post-IDE World, with the cutting-edge Genie model from Cosine.

🗞️ Byte-Size Briefs:

OpenAI announces Codex-rewritten inference stack, 80% cheaper and faster

Manus launches free unlimited AI chat with Agent Mode upgrade

xAI unveils Grok 3.5 voice mode, reasons via first-principles physics

BrightEdge finds 90%+ AI search referrals from desktop users

OpenAI updates ChatGPT Projects: web research, voice, memory, file uploads

Wikipedia pauses AI-generated summaries after editor backlash

AI achieves 2.3M-view ad, slashes production from weeks to hours

🎢 Meta's new AI V-JEPA 2 learns real-world physics from 1M hours of video

→ Meta has released V-JEPA 2, a 1.2B parameter vision-based world model that learns physical dynamics from video. It’s trained on 1M+ hours of unlabeled video and 1M images using self-supervised learning, with no manual annotation.

The core idea: V-JEPA 2 learns physical dynamics from video, then uses its predictions to guide robots without any scene-specific fine-tuning.

→ The architecture uses a joint embedding predictive model. An encoder extracts state embeddings from raw video, and a predictor forecasts future states based on those embeddings and possible actions.

→ After initial pretraining, a second phase adds action-conditioned training using just 62 hours of robot control data. This enables the model to simulate physical outcomes of actions for downstream planning.

→ V-JEPA 2 supports zero-shot robot control, handling new environments and unseen objects. It achieves 65–80% success rates on multi-step robotic manipulation tasks with only visual goal images.

→ Compared to NVIDIA’s Cosmos model, V-JEPA 2 runs 30x faster while matching or exceeding performance on major video benchmarks like Something-Something v2, Epic-Kitchens-100, and Perception Test.

→ The model is open source, and available for commercial and research use. Licensing specifics are not stated but checkpoints and code are provided openly.

→ Meta also released 3 new physical reasoning benchmarks:

IntPhys 2: Detects physically implausible events.

MVPBench: Tests robustness using minimal visual changes.

CausalVQA: Evaluates causal, counterfactual, and planning questions.

These show that while humans perform at 85–95%, top AI models still lag far behind in physical reasoning, especially on causal and counterfactual tasks. That is what makes V-JEPA 2 landmark research: connecting AI to physical reality, instead of relying only on text-based logic, is key as AI agents and robots take on more real-world jobs. And now we can teach a robot physics by letting it binge-watch a million hours of video.

Why the V-JEPA 2 model can be a big deal for real-world AI

It uses self-supervised learning on raw video to predict the future. So it learns object interactions and physics over time without labels. It builds its own internal simulations of how objects move and interact—no need for massive hand-labelled datasets. That makes it far more compute- and data-efficient, ideal for real-world systems like robotics or self-driving cars that need reliable, real-time scene understanding.

Enables zero-shot planning and control, meaning robots or self-driving cars can predict what will happen next and plan actions in brand-new environments without extra fine-tuning.

Brings human-like intuition to machines, letting them adapt on the fly to unfamiliar settings, which is exactly what you need for real-world robotics and autonomous systems.
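To make the encoder, predictor and planning loop described above concrete, here is a minimal toy sketch in Python. Everything in it is an assumption for illustration: `encode`, `predict` and `plan` are hypothetical stand-ins, not Meta's code or API, the "dynamics" are a made-up rule (state plus action), and the search is plain random shooting, chosen for simplicity rather than because the release describes it.

```python
import random

def encode(observation):
    # Hypothetical stand-in for the video encoder: the "embedding" is just the observation.
    return tuple(observation)

def predict(state, action):
    # Hypothetical stand-in for the action-conditioned predictor (toy rule: state + action).
    return tuple(s + a for s, a in zip(state, action))

def distance(a, b):
    # Squared Euclidean distance between two embeddings.
    return sum((x - y) ** 2 for x, y in zip(a, b))

def plan(current_obs, goal_obs, horizon=3, num_candidates=200, seed=0):
    # Random-shooting planner: sample action sequences, roll each one forward
    # with the predictor, and keep the sequence whose final predicted state
    # lands closest to the embedding of the goal image.
    rng = random.Random(seed)
    state, goal = encode(current_obs), encode(goal_obs)
    best_actions, best_cost = None, float("inf")
    for _ in range(num_candidates):
        actions = [tuple(rng.uniform(-1, 1) for _ in state) for _ in range(horizon)]
        rolled = state
        for action in actions:
            rolled = predict(rolled, action)
        cost = distance(rolled, goal)
        if cost < best_cost:
            best_actions, best_cost = actions, cost
    return best_actions, best_cost

actions, cost = plan(current_obs=(0.0, 0.0), goal_obs=(1.0, 1.0))
print(f"planned {len(actions)} actions, predicted distance to goal: {cost:.3f}")
```

The point of the sketch is the shape of the loop: the goal is given only as an observation (a "goal image"), and all of the search happens in embedding space using predictions, without touching the real environment.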

👨🏻⚖️ Hollywood finally sues over AI model training on copyrighted characters, files 110-page lawsuit

→ Disney, Marvel, Lucasfilm, and Universal have filed a joint copyright lawsuit against Midjourney in California federal court. They claim Midjourney trained its AI image models on copyrighted characters without permission, violating intellectual property laws.

→ The complaint includes dozens of side-by-side examples showing AI-generated images resembling characters like Spider-Man, Darth Vader, Shrek, Yoda, Minions, and Homer Simpson. The studios argue that these generations are unauthorized derivatives of protected IP.

→ The studios previously sent Midjourney cease-and-desist notices, which they allege were ignored. This lawsuit now seeks a jury trial, monetary damages, and a court injunction to stop Midjourney from using their content further.

→ Hollywood’s move marks a major escalation compared to news media outlets, which have mostly pursued licensing deals. Studios are signaling a hard stance: no use of their content without explicit permission.

→ The legal core of the case focuses on whether Midjourney’s use of public internet data, including copyrighted media, qualifies as “fair use” under U.S. law. This mirrors other ongoing AI copyright battles involving OpenAI, Anthropic, and music companies.

But while many other top media outlets are cashing in on AI licensing, Hollywood studios are holding out. The outcome here could define fair use in AI, possibly affecting the entire ecosystem, Midjourney included.

→ The outcome of this case could set a precedent impacting AI model training practices, especially for generative tools that rely on internet-scale data scraping.

→ Midjourney hasn’t issued a public response yet. If the court rules against it, the entire generative image model space may need to rethink its training pipelines.

→ Midjourney founder David Holz has previously admitted in interviews that he wasn’t selective about the materials he used to train his model. “It’s just a big scrape of the internet,” he told Forbes in 2022. “We weren’t picky.”

📡 Sam Altman’s $500-Billion Moonshot: Why “Stargate” Could Redefine the Physics of AI

OpenAI’s Sam Altman discusses the Stargate data center project, OpenAI's product roadmap, future ambitions, humanoid robots and life as a new father.

→ Inference, not training, is the choke point. Real-world traffic shattered forecasts, forcing GPU borrowing from research and feature throttles.

→ Stargate equals regional mega-centers that blend chips, energy, and fiber into one stack. SoftBank bankrolls, Oracle builds, Microsoft supplies more but can’t meet total need.

→ Early budget math shows 500B dollars covers only the next few years of growth; Altman claims value return will match spend.

→ Efficiency gains—better silicon, algorithms, cooling—arrive yearly, yet Jevons paradox means cheaper tokens amplify usage faster than savings shrink cost.

(Jevons paradox says that improving efficiency lowers the cost per use, which then drives up demand so much that total resource consumption actually increases.)

→ Competitive edge shifts from model quality to infrastructure density: whoever owns the lowest-latency, highest-capacity fleet wins user loyalty and dev mindshare.

→ Policy now matters as much as research. White House backing streamlines permits for power and land; chip vendor diversity remains risk, but Nvidia dominance reflects product lead.

→ Job impact narrative flips: data-center builds create roles while autonomous agents and soon humanoid robots erase others. Speed of change, not inevitability, drives anxiety.

→ Long-term vision centers on AI-accelerated science. Altman pegs 2025 for agent workflows and 2026 for fresh discoveries, assuming compute keeps up.
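The Jevons paradox point above is easy to check with toy arithmetic. The numbers below are hypothetical, picked only to illustrate the effect (an 80% price cut paired with a 10x jump in usage); they are not OpenAI figures.

```python
def total_spend(cost_per_token, tokens_used):
    # Total spend is simply unit cost times volume.
    return cost_per_token * tokens_used

# Hypothetical units: cost per token drops from 10 to 2 (80% cheaper),
# while usage grows from 100 to 1,000 tokens (10x).
before = total_spend(cost_per_token=10, tokens_used=100)
after = total_spend(cost_per_token=2, tokens_used=1000)

print(f"spend before: {before}, spend after: {after}, ratio: {after / before}")
```

Each token got cheaper, yet total spend doubled because demand grew faster than the unit cost fell.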

🛠️ Stop babysitting IDEs; Get ready for the post-IDE World, with the cutting-edge Genie model from Cosine.

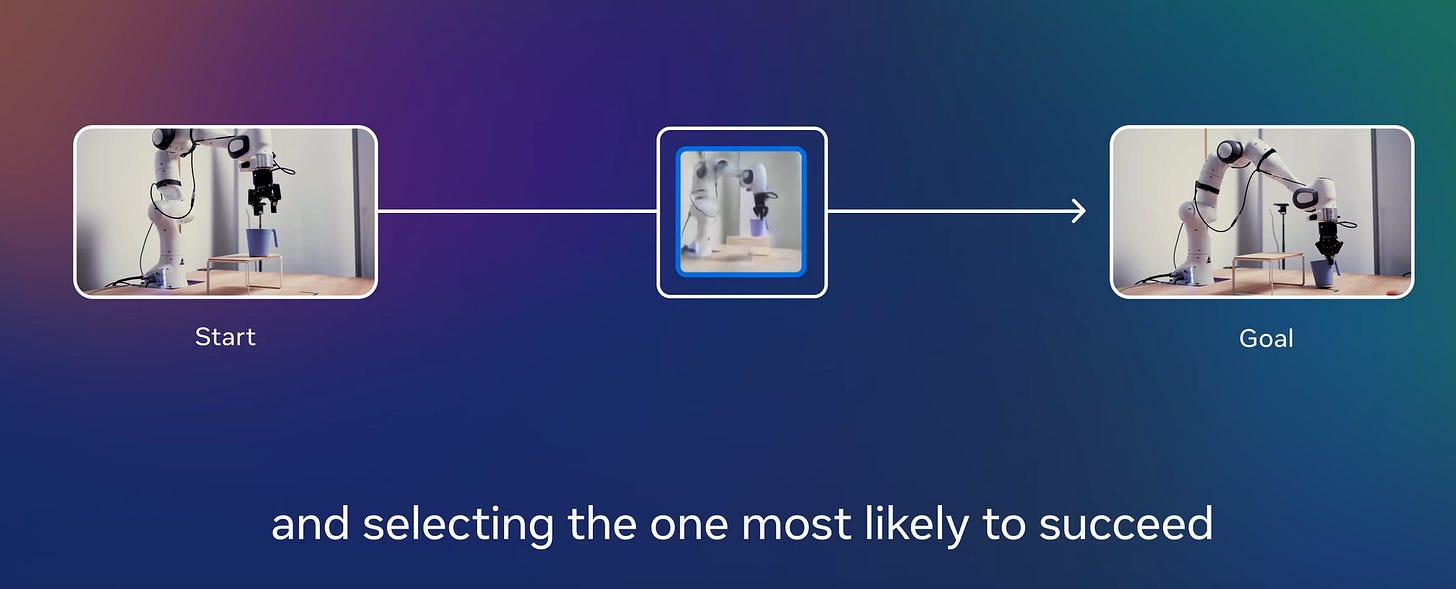

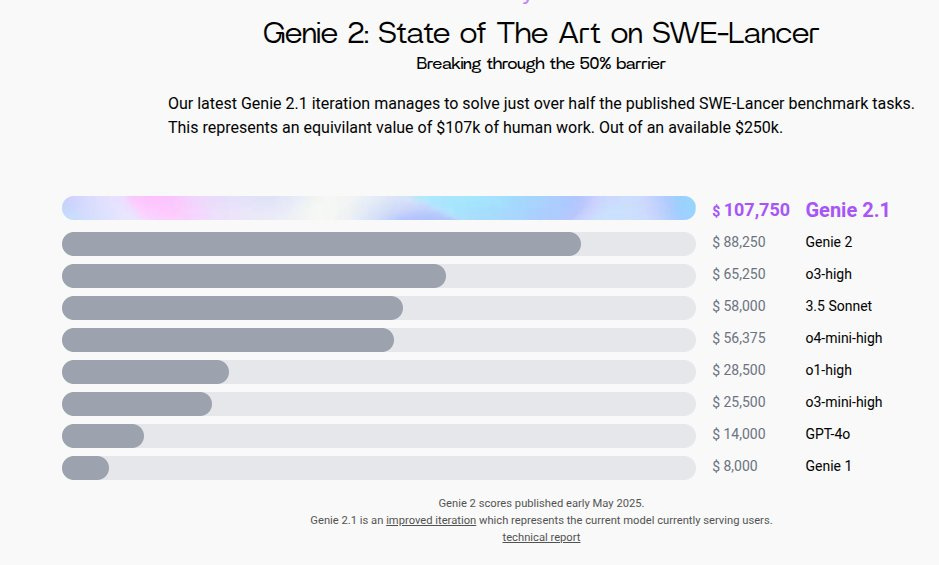

Cosine launched Genie: the dev agent that works for you, on your giant codebase, like a hired autonomous freelancer that never clocks out. Better than Codex and Cursor. Genie is the first truly native multi-agent coding product that can navigate, research, plan, and iterate across multiple tasks at the same time. On the SWE-Lancer suite, Genie 2 solved 116 of 237 issues, scoring USD 88,250 and nearly doubling older agents’ pass rates.

All of these tasks were done at the same time with the Laptop OFF!

Ex: "Add Relevance Sorting to File Search Results" -> Done.

Ex: "Implement Mobile-Friendly Table Page with useScreenSizeContext Hook" -> Done.

Ex: "Integrate CoreDNS with Traefik for ECS Service Resolution" -> Done.

An AI agent that manages other agents for complex codebases

Try it for free now 👉 go.cosine.sh/-autopm

🗞️ Byte-Size Briefs

According to a Twitter post from an OpenAI insider, OpenAI used Codex to rewrite its inference stack. Massive gains followed.

For context, OpenAI’s o3 model got both faster and 80% cheaper without any change to the model itself. OpenAI confirmed it's not a distilled or smaller version. It's the exact same architecture and weights. This led to speculation: if nothing changed in the model, what's driving the performance jump? Only two possibilities: better GPU clusters or optimized infrastructure.

Then Satoshi (an OpenAI insider) hinted at a deeper reason: OpenAI used Codex internally to automate system-level optimizations. That likely means tighter kernel-level code, smarter scheduling, less memory overhead, and refined CUDA paths. Codex, trained on programming, could recursively improve its own serving pipeline.

Manus Chat Mode launches free unlimited AI chat. It offers unlimited free conversational AI with personalized instant answers, plus a one-click upgrade to advanced Agent Mode, seamlessly enabling simple queries and complex outputs in a browser-based interface, securely streamlining workflows for developers, data scientists, and AI practitioners globally.

A Grok 3.5 release is rumored to be very close, and voice mode has launched on the web. In an interview, Elon Musk said of Grok 3.5: "it's trying to reason from first principles. The focus of Grok 3.5 is to find the fundamentals of physics and applying physics tools across all lines of reasoning"

BrightEdge just dropped a surprising stat: while AI search tools are gaining traction, mobile is barely in the picture. BrightEdge found over 90% of AI search referrals come from desktop devices. Even though mobile dominates overall web traffic, AI search hasn’t followed suit. ChatGPT, Perplexity, Bing, and Gemini all send 90%+ of referrals from desktop. Google Search is the exception, with 53% of AI referrals coming from mobile. It’s not just a statistic; it’s a strategic cue. Mobile users aren’t desktop users with smaller screens—they act differently.

OpenAI just announced that ChatGPT projects now include deep web research, voice mode, chat memory, file uploads and mobile model selection—rolling out to Plus, Pro and Team users (memory on Plus/Pro) to streamline workflows and cut context-switching.

Separately, note that a project in ChatGPT is a smart workspace that groups related chats, reference files, and custom instructions into a single context. It leverages memory and flexible tools to keep the assistant on-topic across long-running efforts. Projects are ideal for iterative work like writing, research, planning, and more.

Wikipedia paused AI-generated summaries just two days after launch, following editor backlash. The test, which began June 2, displayed “simple summaries” atop articles for 10% of opted-in mobile users, generated by Cohere Labs’ Aya model and marked “unverified.” Volunteers warned AI could harm credibility. Despite halting the trial, Wikipedia says it will continue exploring tools to make content more accessible, though human editors will still control what appears on the platform.

The world’s on warp speed. Old industry mindsets won’t keep up. This 30-second, 100% AI-generated ad drew 2.3M views. AI slashes production time from weeks to hours. And you no longer need a $100K+ budget.

That’s a wrap for today, see you all tomorrow.