Microsoft Introduces Dragon Copilot, its new healthcare AI assistant

Microsoft launches Dragon Copilot for healthcare, Google debuts a Data Science Agent in Colab, xAI’s Grok-3 outperforms GPT-4.5, and a tutorial on building an Agentic RAG with Llama_index and Mistral.

Read time: 6 min 27 seconds

📚 Browse past editions here.

(I write daily for my AI-pro audience. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (4-March-2025):

🚀 Microsoft Introduces Dragon Copilot, its new healthcare AI assistant

🔥 Google Unveils Data Science Agent in Google Colab

🗞️ Byte-Size Briefs:

Google adds live video & screen-sharing to Project Astra for Gemini Advanced.

xAI’s Grok-3 tops Chatbot Arena, surpassing OpenAI’s GPT-4.5.

🧑‍🎓 Deep Dive Tutorial: Build an Agentic RAG with Llama_index and Mistral Model

🚀 Microsoft Introduces Dragon Copilot, its new healthcare AI assistant

🎯 The Brief

Microsoft introduces Dragon Copilot, a single voice AI assistant merging Dragon Medical One dictation and DAX ambient listening. It reduces administrative load and addresses clinician fatigue by generating notes, referrals, and summaries automatically.

⚙️ The Details

Built on Microsoft Cloud for Healthcare, it integrates speech recognition, ambient note creation, and AI-powered searches in one interface. Each step cuts down repetitive tasks and accelerates documentation.

Early outcomes show an average of 5 minutes saved per patient encounter. Around 70% of clinicians report less burnout, 62% say they are less likely to leave their organization, and 93% of patients report a better experience.

Dragon Copilot supports multiple languages, templates, voice commands, and editing in a consolidated workspace. It leverages generative AI and healthcare-specific safeguards to ensure accuracy.

Availability will start in the U.S. and Canada, followed by more countries including the UK, Germany, France, and the Netherlands. Its secure architecture and partner ecosystem deliver reliable deployments without heavy integration burdens.

🔥 Google Unveils Data Science Agent in Google Colab

🎯 The Brief

Google introduces Data Science Agent in Colab, generating entire notebooks for data tasks via Gemini, now available to users 18+ in select countries and languages. It ranks 4th on DABStep, surpassing GPT 4.0-based ReAct.

⚙️ The Details

• It accepts natural language prompts in a Gemini side panel and outputs a fully executable notebook, cutting setup time. Its approach integrates import statements, data loading, and boilerplate code.

• University teams leverage it to speed research, thanks to streamlined data processing and analysis. It yields shareable outputs using standard Colab collaboration features.

• The workflow is simple. You open a blank notebook, upload data, and specify tasks like visualizing trends or building a prediction model. The Agent then delivers a functioning notebook, ready to tweak.

• It surpassed ReAct agents based on GPT 4.0, Deepseek, Claude 3.5 Haiku, and Llama 3.3 70B, reflecting multi-step reasoning gains in DABStep. It handles popular datasets like Stack Overflow Survey, Iris Species, or Glass Classification.

Note that DABStep (Data Agent Benchmark for Multi-step Reasoning) is a benchmark designed to evaluate how well AI agents perform complex, multi-step data analysis tasks. It comprises over 450 real-world data analysis challenges that go beyond simple question answering or code generation.

• And of course, Google Colab remains free to use, providing access to Google Cloud GPUs/TPUs and making heavy computations accessible.

🗞️ Byte-Size Briefs

Google is rolling out live video and screen-sharing features in Project Astra for Gemini Advanced subscribers on Android this month. This is part of the Google One AI Premium plan, enhancing AI-powered interactivity on mobile devices.

xAI's latest model, Grok-3-Preview-02-24, has ascended to the top of the Chatbot Arena leaderboard, surpassing OpenAI's GPT-4.5 by a narrow margin.

🧑‍🎓 Tutorial: Build an Agentic RAG with Llama_index and Mistral Model

The project creates an agent that smartly blends a fixed, pre-indexed knowledge base with the ability to dynamically fetch the latest research from ArXiv. It also supports downloading PDFs, making it a comprehensive tool for research assistance.

Check out the associated Google Colab, which has exhaustive explanations for each step.

The agent can both quickly answer queries from stored data and retrieve new information as needed.

How It Works Under-the-Hood

• The agent uses RAG to quickly retrieve paper details if the paper is already indexed, saving time and API calls.

• When the topic is not available, the fetch tool is invoked, which directly queries ArXiv, ensuring fresh data.

• The download tool handles file retrieval, allowing users to save PDFs for offline review.

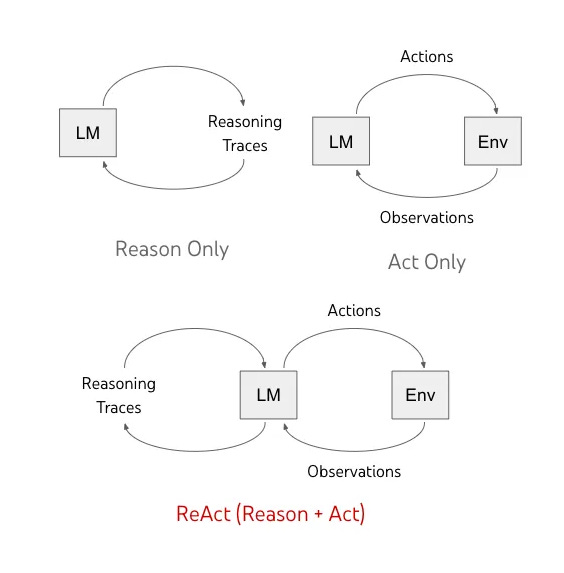

Each tool is wrapped as a Python function but exposed as a callable “tool” for the agent. The ReAct agent switches between reasoning (deciding which tool to use) and acting (executing the tool) until it forms a final answer.
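To make the "function wrapped as a tool" idea concrete, here is a minimal sketch in plain Python. It does not use the Llama_index API. The `Tool` class, the three stub functions, and the placeholder paper titles are all hypothetical stand-ins invented for illustration, not the code from the Colab.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    """A plain function plus the metadata an agent needs to choose it."""
    name: str
    description: str
    fn: Callable[[str], List[str]]

    def __call__(self, arg: str) -> List[str]:
        return self.fn(arg)


# Hypothetical stand-in for the pre-indexed knowledge base.
LOCAL_INDEX: Dict[str, List[str]] = {"rag": ["Placeholder paper on RAG"]}


def query_local_index(topic: str) -> List[str]:
    """Stub for the RAG query engine: look the topic up in the local index."""
    return LOCAL_INDEX.get(topic.lower(), [])


def fetch_from_arxiv(topic: str) -> List[str]:
    """Stub for the ArXiv fetcher: a real version would call the ArXiv API."""
    return [f"Placeholder recent paper on {topic}"]


def download_pdf(title: str) -> List[str]:
    """Stub for the PDF downloader: returns the path it would write to."""
    return [f"./papers/{title.replace(' ', '_')}.pdf"]


# The agent sees tools by name and description, not as raw functions.
TOOLS: Dict[str, Tool] = {
    tool.name: tool
    for tool in (
        Tool("rag_query", "Search the local pre-indexed papers.", query_local_index),
        Tool("arxiv_fetch", "Fetch recent papers from ArXiv.", fetch_from_arxiv),
        Tool("pdf_download", "Save a paper's PDF locally.", download_pdf),
    )
}
```

The description string matters more than it looks: it is the text an LLM would read when deciding which tool fits the request, so in a real setup it is worth writing carefully.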

The project is based on this cookbook.

What is a ReAct Agent

ReAct, short for “Reasoning and Acting,” is a groundbreaking approach that combines reasoning traces and task-specific actions within LLMs. This integration allows the model to perform dynamic and context-aware decision-making, bridging the gap between reasoning and acting, which were traditionally studied as separate topics.

The ReAct Agent here is built to alternate between “thinking” (reasoning) and “doing” (acting) in order to handle your query effectively.

1. Agent Initialization:

• The code creates the agent using a list of three tools:

A RAG query engine (which searches your local indexed papers),

An ArXiv fetcher (which retrieves new papers if needed), and

A PDF downloader (which saves paper PDFs locally).

• The agent also gets a language model (LLM) that powers its reasoning.

• With verbose=True, the agent will log its internal steps, making it easier to see how it decides what to do.

2. Reasoning Phase (“Think”):

When you give the agent a query (e.g., “I’m interested in Audio-Language Models”), the agent uses the LLM to “understand” the request.

It checks its internal memory (or context) and evaluates if the local index (via the RAG tool) contains relevant papers.

The LLM weighs whether to use a tool or directly answer. If it finds that the local index doesn’t have enough data, it plans to call the ArXiv fetcher.

3. Acting Phase (“Do”):

• Based on the reasoning, the agent “acts” by invoking one of the tools. For example:

It might call the RAG query engine tool to fetch details from the static index.

If needed, it calls the ArXiv fetcher to pull in recent research dynamically.

Later, when you ask to “Download the papers,” it uses the PDF downloader tool.

• Each tool call returns results that are then fed back into the agent’s context.
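The think/act cycle described above can be sketched as a small loop. This is an illustrative toy, not the Llama_index ReAct implementation: `stub_llm` is a hypothetical hard-coded function standing in for the real model's reasoning, and the two tools return made-up placeholder results.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical stub tools; real ones would hit a vector index and the ArXiv API.
def rag_query(topic: str) -> List[str]:
    index = {"rag": ["Indexed paper on RAG"]}
    return index.get(topic.lower(), [])


def arxiv_fetch(topic: str) -> List[str]:
    return [f"Fresh ArXiv paper on {topic}"]


TOOLS: Dict[str, Callable[[str], List[str]]] = {
    "rag_query": rag_query,
    "arxiv_fetch": arxiv_fetch,
}

Observation = Tuple[str, List[str]]  # (tool name, tool result)


def stub_llm(query: str, observations: List[Observation]) -> Tuple[str, str]:
    """Stand-in for the LLM's reasoning step.

    Returns ("act", tool_name) to request a tool call,
    or ("answer", text) to finish.
    """
    if not observations:
        return ("act", "rag_query")        # Think: check the local index first.
    last_tool, last_result = observations[-1]
    if not last_result and last_tool == "rag_query":
        return ("act", "arxiv_fetch")      # Think: index had nothing, go to ArXiv.
    return ("answer", "; ".join(last_result) or "No papers found.")


def run_react(query: str, max_steps: int = 5) -> Tuple[str, List[str]]:
    """Alternate think/act until the stub LLM answers; return answer and tool trace."""
    observations: List[Observation] = []
    trace: List[str] = []
    for _ in range(max_steps):
        kind, value = stub_llm(query, observations)
        if kind == "answer":
            return value, trace
        result = TOOLS[value](query)           # Act: run the chosen tool.
        observations.append((value, result))   # Feed the result back as context.
        trace.append(value)
    return "Stopped: step limit reached.", trace
```

Calling `run_react("Audio-Language Models")` walks through both tools because the toy index has no match, while `run_react("RAG")` stops after the first lookup. The `max_steps` cap is a design choice of this sketch to guarantee the loop terminates.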

4. Iterative and Contextual Interaction:

• The agent’s process is iterative: after each tool call, it “re-thinks” with the new information.

• This means that a follow-up command (like downloading PDFs) is processed with the context of the earlier results.

• The agent retains the conversation history so it knows which papers were mentioned before and can act accordingly.
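Here is one way to picture how retained context lets a follow-up like “Download the papers” work without naming any titles. The `ResearchSession` class and its generated paper titles are hypothetical, written only to illustrate the idea; a real agent would keep this state in its chat memory.

```python
from typing import List


class ResearchSession:
    """Keeps conversation state so follow-up commands can refer back to earlier results."""

    def __init__(self) -> None:
        self.history: List[str] = []       # every user request, in order
        self.last_papers: List[str] = []   # titles returned by the latest search

    def search(self, topic: str) -> List[str]:
        self.history.append(f"search: {topic}")
        # Hypothetical stub result; a real agent would call its tools here.
        self.last_papers = [f"{topic} paper {i}" for i in (1, 2)]
        return self.last_papers

    def download_all(self) -> List[str]:
        self.history.append("download the papers")
        # The command names no titles; they come from the remembered context.
        return [f"./papers/{t.replace(' ', '_')}.pdf" for t in self.last_papers]
```

If `download_all()` is called before any search, it returns an empty list, which mirrors what happens when an agent has no earlier results to refer to.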

In Summary:

The ReAct Agent uses a loop of reasoning (powered by the LLM) and acting (via the three tools) to handle your requests. It starts by trying to answer from its static index. If that doesn’t suffice, it fetches fresh data from ArXiv. Then, when you ask for further action (like downloading PDFs), it uses the stored context to know exactly what to download. This seamless combination makes the agent both responsive and context-aware.

That’s a wrap for today, see you all tomorrow.
