⚡ Microsoft released Phi-4, a 14B parameter language model that performs on par with GPT-4o-mini and Llama-3.3-70B

Microsoft Phi-4, Cohere R7B, ChatGPT Projects launch, NotebookLM updates, plus new findings from OpenAI and Google.

⚡In today’s Edition (13-Dec-2024):

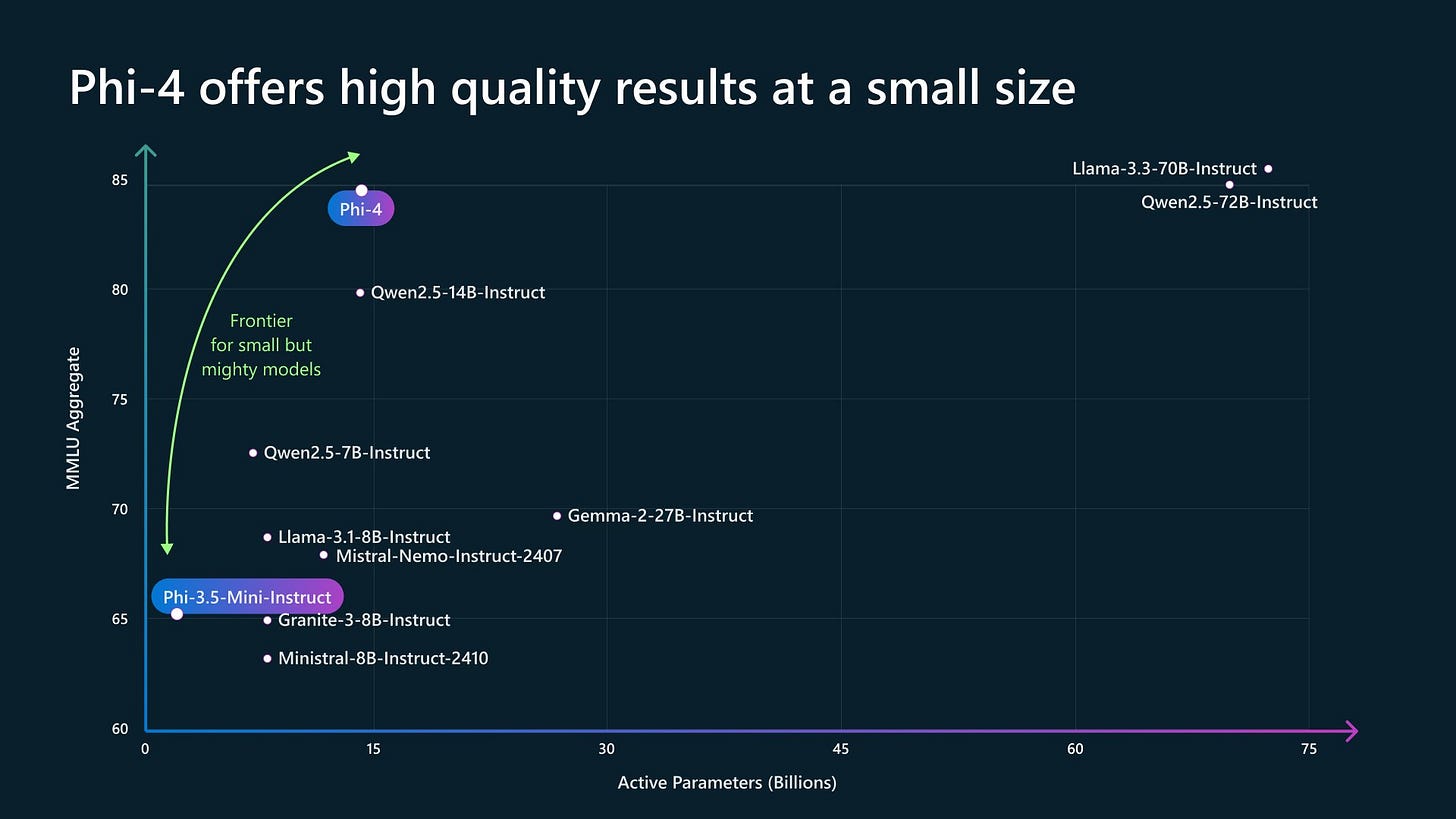

⚡ Microsoft released Phi-4, a 14B parameter language model that performs on par with GPT-4o-mini and Llama-3.3-70B

🏆 ChatGPT introduced Projects, a feature similar to Claude's Projects

🎤 Google’s NotebookLM releases major updates: a new 3-panel design, an interactive mode, and a premium subscription version

📡 Anthropic’s new research shows coding/app development and content creation are the top real-world use cases of Claude

🚀 Cohere released Command R7B, the smallest open-weights model in its R series

=================================

🗞️ Byte-Size Brief:

OpenAI's Sora dominates AI video generation, outperforming competitors across 3.7K evaluations.

Google's Gemini extends mathematical supremacy across three key benchmarks

=========================================

🧑‍🎓 Deep Dive Tutorial:

Scaling Laws – O1 Pro Architecture, Reasoning Training Infrastructure

⚡ Microsoft released Phi-4, a 14B parameter language model that performs on par with GPT-4o-mini and Llama-3.3-70B

🎯 The Brief

Microsoft releases Phi-4, a 14B parameter LLM that performs on par with GPT-4o-mini and Llama-3.3-70B in complex reasoning while being significantly smaller than many competing models, achieving 91.8% accuracy on AMC 10/12 math competition problems. However, it ships under a non-commercial research license.

⚙️ The Details

→ The model demonstrates exceptional capabilities in mathematical reasoning, achieving 91.8% accuracy on AMC 10/12 math competition problems and outperforming larger models including Gemini Pro 1.5 and Llama-3.3-70B.

→ Technical innovations driving Phi-4's performance include sophisticated synthetic data generation techniques, high-quality organic data curation, and advanced post-training methods like Pivotal Token Search (PTS) in DPO.

→ The model addresses the industry's "pre-training data wall" through innovative synthetic data generation approaches, showing particular strength in complex mathematical problem-solving and symbolic reasoning.

→ One of the key innovations in Phi-4 is Pivotal Token Search (PTS), a post-training technique used to build preference data for DPO. PTS estimates how the probability of reaching a correct answer changes as a response is generated, token by token, and identifies the "pivotal" tokens where that probability shifts sharply. Preference pairs are then built around those tokens, so the DPO signal targets the specific decisions that make or break a solution rather than entire responses.

→ Currently available on Azure AI Foundry under the Microsoft Research License Agreement, with a planned release on Hugging Face.
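To make the Pivotal Token Search idea more concrete, here is a toy sketch of the search step, assuming the recursive-subdivision approach described in the Phi-4 technical report. It is not Microsoft's implementation: `pivotal_tokens`, `p_gap`, and especially `toy_success_probability` are hypothetical names, and the toy estimator is a hard-coded stand-in for what would really be an expensive estimate obtained by sampling many completions from the model and checking them against a known answer.

```python
from typing import Callable, List, Sequence, Tuple


def pivotal_tokens(
    tokens: Sequence[str],
    p_success: Callable[[Sequence[str]], float],
    p_gap: float = 0.2,
) -> List[Tuple[int, str, float]]:
    """Return (index, token, delta) for single tokens that shift the
    estimated success probability by at least p_gap."""

    def subdivide(lo: int, hi: int) -> List[Tuple[int, int]]:
        # Stop splitting once the segment is one token long, or once the
        # whole segment barely moves the success probability.
        change = abs(p_success(tokens[:hi]) - p_success(tokens[:lo]))
        if hi - lo <= 1 or change < p_gap:
            return [(lo, hi)]
        mid = (lo + hi) // 2
        return subdivide(lo, mid) + subdivide(mid, hi)

    found = []
    for lo, hi in subdivide(0, len(tokens)):
        if hi - lo == 1:
            delta = p_success(tokens[:hi]) - p_success(tokens[:lo])
            if abs(delta) >= p_gap:
                found.append((lo, tokens[lo], delta))
    return found


def toy_success_probability(prefix: Sequence[str]) -> float:
    # Hypothetical estimator: committing to "positive" makes a correct
    # final answer much more likely in this made-up example.
    return 0.9 if "positive" in prefix else 0.5


response = ["the", "root", "is", "positive", "so", "x", "=", "3"]
for index, token, delta in pivotal_tokens(response, toy_success_probability):
    print(f"pivotal token at position {index}: {token!r} (change {delta:+.2f})")
```

In a DPO setup, the prefix before a pivotal token would serve as the prompt, with a token that raises the success probability as the preferred continuation and one that lowers it as the rejected continuation.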

⚡ The Impact

Proves smaller models can match larger ones through better training, potentially reducing computational requirements for AI deployment. However, the non-commercial license is a hindrance to wider adoption.

🏆 ChatGPT introduced Projects, a feature similar to Claude's Projects

🎯 The Brief

OpenAI launches the Projects feature in ChatGPT, enabling Plus, Pro, and Team users to upload files, organize conversations, and set custom instructions in folder-like workspaces.

⚙️ The Details

→ Projects function as smart folders with integrated capabilities for file management, conversation organization, and custom instructions. Canvas support and web search features are fully operational within project contexts.

→ Coding and development workflows especially benefit from context-specific coding assistance. You can now upload entire documentation sets or guideline files and refer to them throughout your coding session within ChatGPT.

⚡ The Impact

The feature streamlines workflow organization in ChatGPT, enabling focused, context-specific interactions across multiple use cases.

🎤 Google’s NotebookLM releases major updates: a new 3-panel design, an interactive mode, and a premium subscription version

🎯 The Brief

NotebookLM gets several major updates, including an enhanced interface, audio interactivity, and premium features, as it reaches millions of users and tens of thousands of organizations globally. The new features will roll out to users globally over the next few days.

⚙️ The Details

→ The platform introduces experimental Gemini 2.0 Flash integration alongside a redesigned interface divided into three panels: Sources for information management, Chat for AI discussions with citations, and Studio for content creation.

→ A standout feature is interactive Audio Overviews: you can now "join" an Audio Overview with your voice and engage with the AI hosts to ask a question or steer the conversation. They'll adapt on the fly to (almost) everything you ask of them. Over the last three months, people have generated more than 350 years' worth of Audio Overviews.

→ The NotebookLM Plus subscription offers 5x more Audio Overviews, notebooks, and sources per notebook. Features include customizable responses, team notebooks with analytics, and enterprise-grade security. It is available through Google Workspace or Google Cloud, with future integration into Google One AI Premium. With NotebookLM Plus you get 300 sources per notebook (up to 150 million words), up from 50.

⚡ The Impact

Wider adoption of Google’s NotebookLM has the potential to change education forever. And because you can listen to it on the move, learning new things becomes massively more efficient. You no longer need to set aside dedicated time to learn something new.

📡 Anthropic’s new research shows coding/app development and content creation are the top real-world use cases of Claude

🎯 The Brief

Anthropic just released Clio (Claude insights and observations), an analytics system monitoring real-world Claude usage. It analyzes 1 million conversations to understand user behavior by automatically identifying trends in Claude usage across the world.

⚙️ The Details

→ The system reveals dominant use cases: Web development (10.4%), content creation (9.2%), academic research (7.2%), and education (7.1%). Conversations are automatically clustered and analyzed while maintaining user privacy through strict data controls.

→ Clio implements a four-stage process: extracting facets, semantic clustering, cluster description, and building hierarchies. The system achieved a correlation of 0.71 with existing Trust and Safety classifiers.

→ Privacy protection includes anonymization, aggregation, minimum thresholds for clusters, and restricted access controls. Notably, Clio helped identify translation-based policy violations and reduce false positives in security monitoring.
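The four stages and the minimum-cluster-size threshold described above can be pictured with a toy pipeline. This is only a sketch of the overall shape, not Anthropic's implementation: the keyword lookup in `TOPICS` stands in for model-based facet extraction and semantic clustering, and every name here (`TOPICS`, `MIN_CLUSTER_SIZE`, `extract_facet`, and so on) is hypothetical.

```python
from collections import defaultdict

# Hypothetical privacy threshold: clusters smaller than this are dropped.
MIN_CLUSTER_SIZE = 2

# Hypothetical keyword -> (topic, parent topic) lookup. A real system
# would use a model to extract facets instead of keyword matching.
TOPICS = {
    "react": ("web development", "coding"),
    "css": ("web development", "coding"),
    "essay": ("essay writing", "content creation"),
}


def extract_facet(conversation: str) -> str:
    """Stage 1: reduce a conversation to a coarse topic label."""
    text = conversation.lower()
    for keyword, (topic, _parent) in TOPICS.items():
        if keyword in text:
            return topic
    return "other"


def cluster(conversations):
    """Stage 2: group conversations that share a facet."""
    clusters = defaultdict(list)
    for conversation in conversations:
        clusters[extract_facet(conversation)].append(conversation)
    return clusters


def describe(clusters):
    """Stage 3: keep only aggregate counts, and only for large clusters."""
    return {
        topic: len(members)
        for topic, members in clusters.items()
        if len(members) >= MIN_CLUSTER_SIZE
    }


def build_hierarchy(summary):
    """Stage 4: nest cluster summaries under their parent topics."""
    parents = {topic: parent for topic, parent in TOPICS.values()}
    tree = defaultdict(dict)
    for topic, count in summary.items():
        tree[parents.get(topic, "other")][topic] = count
    return dict(tree)


conversations = [
    "Fix my React hook",
    "Center a div with CSS",
    "Help me outline an essay",
    "What is the capital of France?",
]
print(build_hierarchy(describe(cluster(conversations))))
```

Note how the single essay conversation never shows up in the output: only aggregated clusters above the threshold survive, which is the intuition behind the privacy controls listed above.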

⚡ The Impact

Enables data-driven AI safety improvements while protecting user privacy, setting standards for responsible LLM deployment monitoring.

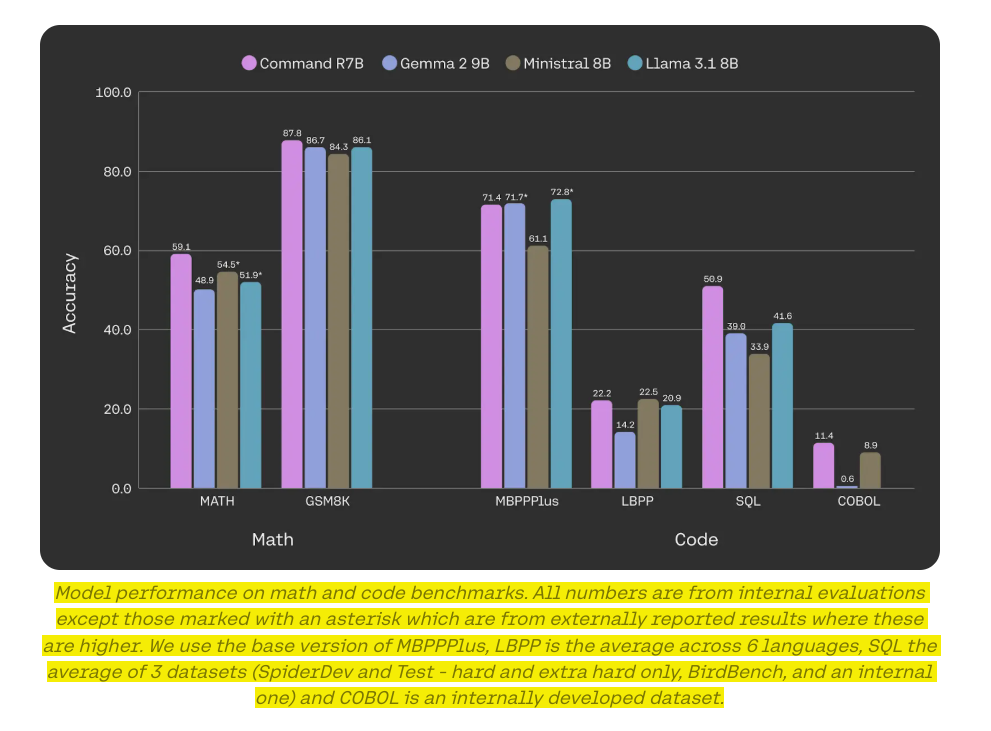

🚀 Cohere released Command R7B, the smallest open-weights model in its R series

🎯 The Brief

Cohere releases Command R7B, a compact 7B parameter LLM with a 128k context window, offering state-of-the-art performance for enterprise applications at $0.0375 per 1M input tokens and $0.15 per 1M output tokens.
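To get a feel for what those prices mean in practice, here is a small cost calculator. The two per-million-token prices come from the announcement; the function name and the example workload of 2M input and 0.5M output tokens are made up for illustration.

```python
# Prices quoted in the announcement, in US dollars per 1M tokens.
INPUT_PRICE_PER_M = 0.0375
OUTPUT_PRICE_PER_M = 0.15


def command_r7b_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost in dollars for a given token volume."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Hypothetical workload: 2M input tokens and 0.5M output tokens.
cost = command_r7b_cost(2_000_000, 500_000)
print(f"Estimated cost: ${cost:.4f}")
```

For that workload, input and output each come to about $0.075, so the total is roughly $0.15.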

⚙️ The Details

→ Command R7B ranks first on HuggingFace Open LLM Leaderboard among similar-sized open-weights models, delivering superior performance across all evaluation tasks with an average score of 31.4.

→ The model excels in enterprise use-cases, achieving 69.2% on ChatRAGBench, 74.4% on BFCL tool use, and outperforming competitors in RAG applications. Its native in-line citations significantly reduce hallucinations.

→ Technical benchmarks show strong performance in math (59.1%), code generation (56%), and multilingual tasks. The model supports efficient deployment on commodity GPUs, CPUs, and MacBooks.

→ License: Creative Commons Attribution-NonCommercial 4.0. In other words, it's a non-commercial license: users can freely use and modify the model for non-commercial purposes only, with proper attribution to Cohere and without monetization.

⚡ The Impact

Enterprise-grade performance in a lightweight model enables cost-effective AI deployment across diverse business applications.

🗞️ Byte-Size Brief

Sora beats every other AI video maker in thousands of quality comparison tests. After competing against Kling 1.5, Hailuo, and Mochi 1 in over 3,700 evaluations, OpenAI's model achieved an impressive Elo score of 1,151, establishing clear dominance in video generation quality.
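For readers unfamiliar with Elo scores, here is a minimal sketch of how a rating moves after one head-to-head comparison, using the standard Elo formula. The starting rating of 1,000 and the K-factor of 32 are assumptions for illustration; the brief above does not say which settings the evaluation used.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32):
    """Return updated ratings after one comparison.

    score_a is 1.0 if A wins, 0.0 if A loses, and 0.5 for a tie.
    """
    expected_a = expected_score(rating_a, rating_b)
    change = k * (score_a - expected_a)
    return rating_a + change, rating_b - change


# Two hypothetical video models start level; model A wins one comparison.
model_a, model_b = update_elo(1000, 1000, score_a=1.0)
print(model_a, model_b)
```

Repeat that update over thousands of pairwise votes and the ratings settle into a ranking, with a higher score meaning the model wins more often against the field.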

Gemini keeps beating every math test thrown at it. The model maintains its top position across LiveBench, FrontierMath, and now U-MATH benchmarks, showcasing consistent mathematical superiority through its specialized problem-solving architecture.

🧑‍🎓 Deep Dive Tutorial:

📚 Scaling Laws – O1 Pro Architecture, Reasoning Training Infrastructure

This very detailed blog post analyzes how AI scaling laws are evolving beyond pre-training into multiple dimensions, with inference-time scaling becoming crucial for model performance.

📚 Technical Learning Breakdown

LLM scaling laws extending beyond traditional pre-training metrics into multiple optimization dimensions

Test-time compute scaling techniques, as demonstrated by o1 Pro's performance gains in complex reasoning tasks

Synthetic data generation architecture for overcoming data bottlenecks in model training

Reinforcement Learning from AI Feedback (RLAIF) implementation, which is faster than RLHF and gives more comprehensive model improvement

Large-scale training infrastructure requirements, through case studies like Amazon's 400k Trainium2 chip deployment

Advanced reasoning capabilities through chain-of-thought architectures integrating generator, verifier, and reward models

Practical implementation details of:

Rejection sampling methods

Automated verification systems

Distributed training clusters

High-bandwidth networking optimization

Multi-model integration patterns
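As a taste of the first two items, here is a toy sketch of rejection sampling with an automated verifier: a generator proposes several candidate answers and only the ones that pass verification are kept. The noisy arithmetic "generator" is a made-up stand-in for an LLM, and all function names are hypothetical; the linked post covers how this works at real scale.

```python
import random


def generate_candidates(a: int, b: int, n: int, rng: random.Random):
    """Hypothetical noisy generator: proposes n answers to a + b,
    some of them off by one."""
    return [a + b + rng.choice([-1, 0, 0, 1]) for _ in range(n)]


def verify(a: int, b: int, answer: int) -> bool:
    """Automated verifier: checks a candidate against the true sum."""
    return answer == a + b


def rejection_sample(a: int, b: int, n: int = 8, seed: int = 0):
    """Sample n candidates and keep only those the verifier accepts."""
    rng = random.Random(seed)
    candidates = generate_candidates(a, b, n, rng)
    return [c for c in candidates if verify(a, b, c)]


accepted = rejection_sample(2, 3, n=16)
print(f"kept {len(accepted)} of 16 candidates")
```

The accepted samples are the ones that would go on to be used as synthetic training data, which is why the quality of the verifier matters as much as the quality of the generator.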