🔬 Microsoft research finds AI is not yet ready for real-world medical diagnosis

Microsoft questions real-world value of medical AI benchmarks, DeepMind drops new robotics AI, new RAG method boosts speed+accuracy, and AI cracks tough finance exam.

Read time: 12 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (26-Sept-2025):

🔬 Microsoft research finds AI is not yet ready for real-world medical diagnosis

🏆 Google DeepMind just released its new robotics AI models, Gemini Robotics 1.5 and Gemini Robotics-ER 1.5.

🛠️ New paper shows a great way to make RAG much faster and more accurate

💼 AI just passed a brutal finance exam most humans fail

🧠 The Illusion of Readiness: Stress Testing Large Frontier Models on Multimodal Medical Benchmarks

🚨 This research is bad news for medical AI models. It shows how much work is still needed before AI can be routinely integrated into mainstream medical diagnosis.

The Microsoft paper says current medical AI models may look good on standard medical benchmarks, but those scores do not mean the models can handle real medical reasoning.

The core problem is that many models pass tests by exploiting patterns in the data, not by reliably combining medical text with images. The main findings: models overuse shortcuts, break under small changes, and produce unfaithful reasoning. That makes benchmark results misleading for anyone who assumes a high score means a model is ready for real medical use.

The specific key findings from this paper 👇

Models keep strong accuracy when images are removed, even on questions that require vision, which signals shortcut use rather than real understanding.

Scores stay above the 20% guess rate without images, so text patterns alone often drive the answers.

Shuffling answer order changes predictions a lot, which exposes position and format bias rather than robust reasoning.

When a distractor is replaced with “Unknown”, many models still guess instead of abstaining when the evidence is missing.

Swapping in a lookalike image that matches a wrong option makes accuracy collapse, which shows vision is not integrated with text.

Chain of thought often sounds confident while citing features that are not present, which means the explanations are unfaithful.

Audits reveal 3 failure modes: incorrect logic with correct answers, hallucinated perception, and visual reasoning with faulty grounding.

Gains on popular visual question answering do not transfer to report generation, which is closer to real clinical work.

Clinician reviews show benchmarks measure very different skills, so a single leaderboard number misleads on readiness.

Once shortcut strategies are disrupted, true comprehension is far weaker than the headline scores suggest.

Most models still answer instead of abstaining when the image is missing, which is unsafe behavior for medical use.

The authors push for a robustness score and explicit reasoning audits, which signals current evaluations are not enough.
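The answer-shuffling stress test is easy to reproduce on your own evaluation set. Below is a minimal sketch, assuming a hypothetical `predict(question, options)` function that returns the index of the chosen option; it is not the paper's code. It reruns a question under random option orders and reports how often the model sticks with the same answer text.

```python
import random
from typing import Callable, Sequence

# Hypothetical model interface (an assumption, not an API from the paper):
# takes a question and a list of option strings, returns the chosen index.
Predictor = Callable[[str, Sequence[str]], int]


def shuffle_consistency(predict: Predictor, question: str, options: Sequence[str],
                        n_trials: int = 20, seed: int = 0) -> float:
    """Fraction of shuffled orderings in which the model picks the same
    option *text* it picked for the original ordering."""
    rng = random.Random(seed)
    baseline = options[predict(question, options)]
    same = 0
    for _ in range(n_trials):
        shuffled = list(options)
        rng.shuffle(shuffled)
        if shuffled[predict(question, shuffled)] == baseline:
            same += 1
    return same / n_trials


# Two toy "models", for illustration only.
def position_biased(question, options):
    return 0  # always picks whatever option appears first


def content_based(question, options):
    return options.index("pneumothorax")  # always picks the same answer text


opts = ["pneumonia", "pneumothorax", "effusion", "edema", "normal"]
print(shuffle_consistency(content_based, "q", opts))    # 1.0
print(shuffle_consistency(position_biased, "q", opts))  # usually well below 1.0
```

A robust model should score near 1.0; a position-biased one drifts toward the 1-in-5 chance of the original answer landing first again.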

The figure below shows that high scores on medical benchmarks can mislead: stress tests reveal that current models often rely on shallow tricks and cannot be trusted for reliable medical reasoning.

The first part highlights 3 hidden fragilities: hallucinated perception, shortcut behavior, and faulty reasoning. The second part compares benchmark accuracy with robustness scores, and while accuracy looks high, robustness drops sharply, which means models get brittle under small changes.

The heatmap shows how stress tests like removing images, shuffling answers, or replacing distractors reveal specific failure patterns in each model. The example at the bottom shows that a model can still give the right answer even without seeing the image, which is a shortcut, or it can make up a detailed explanation that mentions things not actually in the image, which is fabricated reasoning.

The next figure shows how accuracy drops when images are removed from diagnostic questions, which measures how much each model truly relies on vision. Different benchmarks react differently, which means visual understanding is inconsistent across datasets and question types.

Even without images, most models still score above the 20% guess rate, which signals they rely on text cues, memorized pairs, or co-occurrence patterns. One model even falls below chance on text-only inputs, which suggests fragile behavior rather than stable reasoning. Overall, the message is that high Image+Text scores can hide shortcut use, so vision-language robustness is weaker than the headline numbers suggest.
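To check whether text-only accuracy is meaningfully above the guess rate, an exact binomial tail probability is enough. This sketch assumes 5 answer options (hence the 20% guess rate) and uses made-up counts, not numbers from the paper; `modality_ablation` is a hypothetical helper name.

```python
from math import comb


def binomial_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the chance of getting at least
    k of n questions right by pure guessing."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def modality_ablation(correct_with_image: int, correct_text_only: int,
                      n_questions: int, n_options: int = 5) -> dict:
    chance = 1 / n_options  # 5 options -> 20% guess rate
    acc_full = correct_with_image / n_questions
    acc_text = correct_text_only / n_questions
    return {
        "chance": chance,
        "drop": acc_full - acc_text,  # how much removing the image costs
        # A tiny p-value means text-only accuracy is very unlikely to be pure
        # guessing, which hints at text shortcuts rather than visual understanding.
        "p_text_only_vs_chance": binomial_tail(correct_text_only, n_questions, chance),
    }


# Made-up counts for illustration: 200 questions, 150 right with the image,
# 90 right with the image removed.
report = modality_ablation(correct_with_image=150, correct_text_only=90, n_questions=200)
print(report)
```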

Medical AI benchmarks should guide understanding of strengths and failures, not act like a single badge of quality.

🏆 Google DeepMind just released its new robotics AI models, Gemini Robotics 1.5 and Gemini Robotics-ER 1.5.

📦 The Gemini Robotics 1.5 system pairs a planning brain with an action body, letting robots search, reason, and execute long real-world tasks. Gemini Robotics-ER 1.5 plans with tools and tracks progress, while Gemini Robotics 1.5 uses vision and language to emit motor commands.

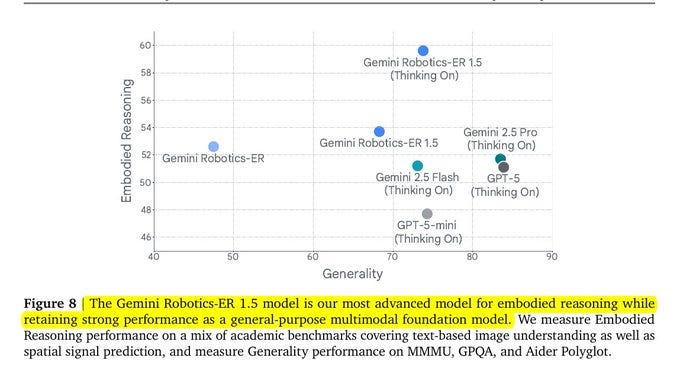

This split makes behavior readable because the planner writes its thoughts in plain text, and it also limits error buildup on long missions. On 15 embodied reasoning benchmarks, the ER model reaches state-of-the-art aggregated performance, improving over ER 1.0 and GPT-5 variants.

The executor thinks before acting, breaks long tasks into short segments, and adapts when the environment changes. Skills transfer across embodiments, so motions from ALOHA 2 carry to Apollo and bi-arm Franka without per-robot training.

💡 Gemini Robotics 1.5 features a novel architecture and a Motion Transfer (MT) mechanism. The new architecture in Gemini Robotics 1.5 is built to share one motion brain across many robots.

Traditionally, getting robots to move around a space and take action required meticulous planning and coding, and that training was often specific to one type of robot, such as robotic arms. This “motion transfer” breakthrough could help solve a major bottleneck in AI robotics development: the lack of enough training data.

Instead of learning raw motor commands tied to each robot, it learns a general motion space that describes the effect of actions on the world. With Motion Transfer, the system aligns movements from different robots, so skills learned on one robot (like opening a drawer) can work on another without retraining.

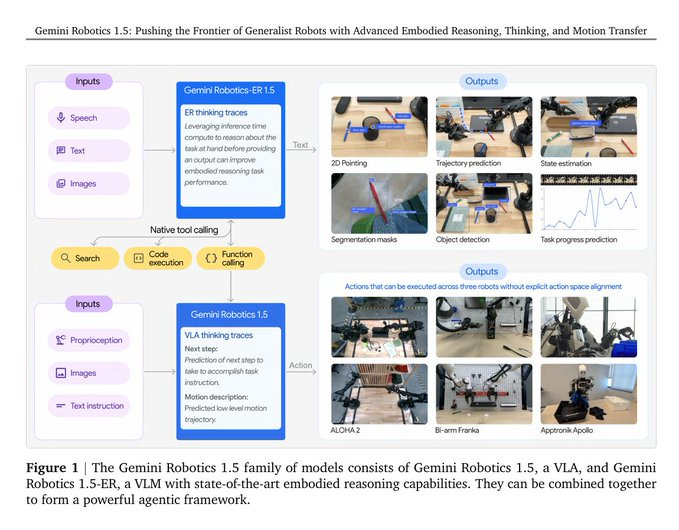

This diagram shows how the Gemini Robotics 1.5 system is structured and what each part does. At the top, Gemini Robotics-ER 1.5 handles reasoning tasks. It works with text, images, and speech as inputs, and produces outputs like pointing, predicting object paths, estimating states, detecting objects, segmentation, and tracking task progress. It can also call tools like search or code execution when needed.

The key point is that ER 1.5 focuses on understanding and reasoning about the world. At the bottom, Gemini Robotics 1.5 is the action model. It takes in body signals from the robot, images, and text instructions. Then it produces motion trajectories, meaning actual robot movements. The big highlight is that the same model can drive different robots like ALOHA, Bi-arm Franka, and Apollo humanoid, without needing separate retraining for each.

Together, these 2 models form a complete system. One side plans and reasons, while the other converts plans into physical actions. This pairing is what lets robots running Gemini Robotics 1.5 carry out long and complex tasks more reliably.
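The split between a planner and an executor can be pictured as a simple control loop. The sketch below is a toy illustration, not DeepMind's interface: `plan` and `execute` are hypothetical callables standing in for Gemini Robotics-ER 1.5 and Gemini Robotics 1.5, and progress tracking is reduced to retrying a failed step a bounded number of times.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Orchestrator:
    # Hypothetical stand-ins: `plan` plays the reasoning model,
    # `execute` plays the action model.
    plan: Callable[[str], List[str]]
    execute: Callable[[str], bool]
    max_retries: int = 2
    log: List[str] = field(default_factory=list)

    def run(self, task: str) -> bool:
        for step in self.plan(task):          # planner writes short steps in plain text
            for _ in range(self.max_retries + 1):
                ok = self.execute(step)       # executor turns one short step into motion
                self.log.append(f"{step}: {'done' if ok else 'failed'}")
                if ok:
                    break
            else:
                return False                  # step kept failing: stop and report
        return True


def toy_plan(task):
    return ["locate the drawer", "grasp the handle", "pull the drawer open"]


grasp_attempts = {"count": 0}


def flaky_execute(step):
    if step == "grasp the handle":
        grasp_attempts["count"] += 1
        return grasp_attempts["count"] >= 2   # first grasp slips, the retry succeeds
    return True


bot = Orchestrator(plan=toy_plan, execute=flaky_execute)
result = bot.run("open the drawer")
print(result)   # True
print(bot.log)
```

Because every step is a plain-text string, the log doubles as a readable trace of what the robot attempted, which mirrors the readability argument above.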

🧩 What is the new architecture behind Motion Transfer?

Normally, when you train a robot model, you tie it pretty closely to one robot’s body and motion space. For example, the arm angles of a Franka robot are not the same as the actuators of a humanoid robot. Most models learn only within that one space.

The new architecture in Gemini Robotics 1.5 is designed differently. Instead of locking into one robot’s body, it builds a shared internal representation of motion and physical effects. That means it does not store knowledge as “Franka joint 3 at angle X,” but as a more general “move gripper closer to object.”
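A classical analogy helps show what a shared motion space buys you. In the toy sketch below, the body-agnostic action is "move the gripper by (dx, dy)", and each robot decodes it into its own joint deltas with a Jacobian pseudo-inverse. This is standard inverse kinematics on made-up planar arms, not Google's learned Motion Transfer; it only illustrates why describing an action by its effect makes it portable across bodies.

```python
import numpy as np


def fk(q, lengths):
    """Planar forward kinematics: joint angles -> gripper (x, y)."""
    phi = np.cumsum(q)
    return np.array([np.sum(lengths * np.cos(phi)), np.sum(lengths * np.sin(phi))])


def jacobian(q, lengths):
    """d(x, y)/dq for a planar serial arm."""
    phi = np.cumsum(q)
    J = np.zeros((2, len(q)))
    for k in range(len(q)):
        # joint k rotates every link from k onward
        J[0, k] = -np.sum(lengths[k:] * np.sin(phi[k:]))
        J[1, k] = np.sum(lengths[k:] * np.cos(phi[k:]))
    return J


def to_joint_command(shared_action, q, lengths):
    """Decode a body-agnostic action ("move gripper by dx, dy")
    into this particular robot's joint deltas."""
    return np.linalg.pinv(jacobian(q, lengths)) @ shared_action


# One shared action, two made-up "bodies" with different joint counts.
move = np.array([0.001, -0.002])  # "move gripper slightly right and down"
robots = {
    "two_link": (np.array([0.3, 0.8]), np.array([1.0, 0.8])),
    "three_link": (np.array([0.2, 0.5, 0.4]), np.array([0.6, 0.5, 0.4])),
}
for name, (q, lengths) in robots.items():
    dq = to_joint_command(move, q, lengths)
    achieved = fk(q + dq, lengths) - fk(q, lengths)
    print(name, dq.round(5), achieved.round(5))
```

The joint commands differ in size and shape per robot, but the gripper moves by the same small displacement on both, which is the property a shared motion space is meant to capture.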

🔁 Where Motion Transfer comes in

Motion Transfer is the recipe that lets the model align movements and contact patterns from different robots. So, whether it’s ALOHA’s hands, Franka’s arms, or Apollo’s humanoid body, the model can map them onto the same “conceptual motion space.”

This way, when it learns something on Franka, like closing a drawer, it can apply that skill to ALOHA without retraining. That’s what they mean by zero-shot cross-embodiment transfer.

✨ Why this is a big deal

One model, many robots: A single checkpoint works across very different machines.

Better use of data: Training on one robot helps all others, since the model learns motion concepts, not just raw commands.

Stronger generalization: It adapts better to new tasks and environments by focusing on outcomes, not body specifics.

Scales easily: More robot data keeps improving the same model instead of splitting into separate versions.

🧭 Embodied reasoning, the spatial brain

Gemini Robotics-ER 1.5 adds strong spatial skills like pointing to exact parts, sequencing points into safe trajectories, and counting by pointing, with an average pointing accuracy of 52.6% across mixed benchmarks. It keeps broad multimodal strength while scoring higher on embodied reasoning sets that need visuo-spatial and temporal understanding, so it acts like a general model that happens to be good at robotics. These skills give robots convenient handles, since a point or short trajectory is a compact, precise instruction that maps cleanly to grasping or pathing.
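Here is a sketch of why a few points make a convenient interface: a planner emits sparse waypoints and a controller densifies them into a path the robot can follow. The `densify` helper is hypothetical, assumes all points share one coordinate frame, and does no collision or safety checking.

```python
import numpy as np


def densify(waypoints, step=0.05):
    """Linearly interpolate a short list of 2D points (e.g. positions a
    planner pointed at) into a dense path with spacing <= step."""
    pts = np.asarray(waypoints, dtype=float)
    path = [pts[0]]
    for a, b in zip(pts[:-1], pts[1:]):
        # enough sub-steps that no gap exceeds `step`
        n = max(1, int(np.ceil(np.linalg.norm(b - a) / step)))
        for t in np.linspace(0, 1, n + 1)[1:]:  # skip t=0 to avoid duplicate points
            path.append(a + t * (b - a))
    return np.array(path)


path = densify([(0.0, 0.0), (0.3, 0.0), (0.3, 0.4)], step=0.1)
print(len(path), path[-1])
```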

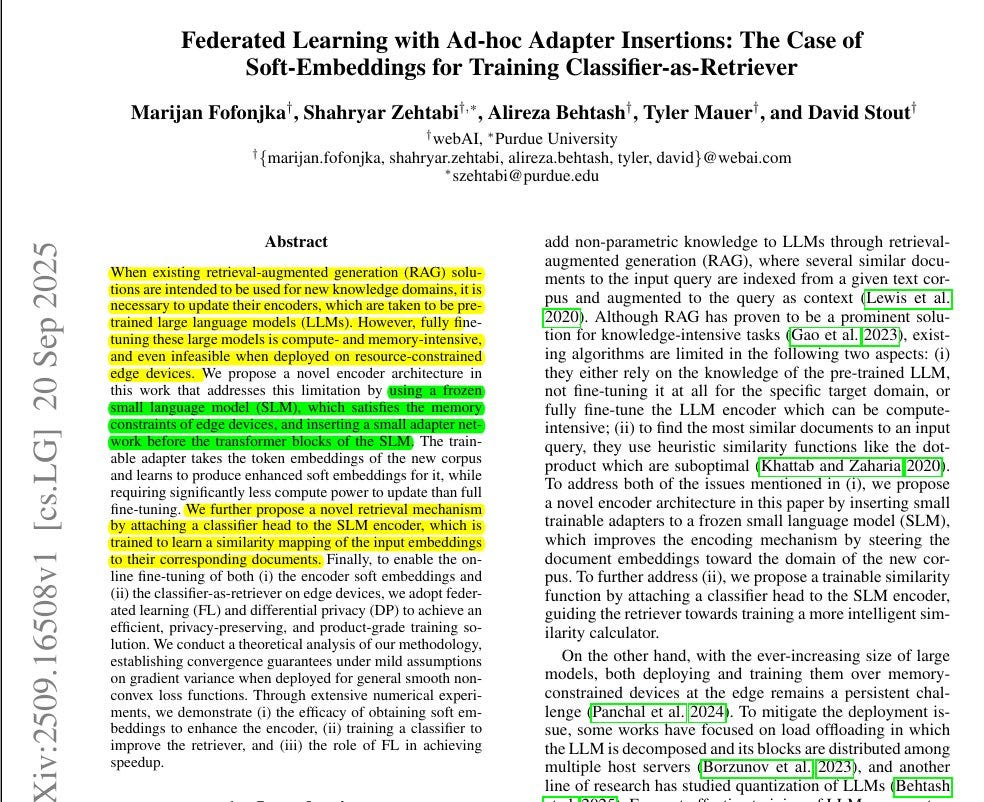

🛠️ New paper shows a great way to make RAG much faster and more accurate

New paper from webAI shows an innovative way to make RAG much faster and more accurate by freezing a small language model, then only training a tiny adapter and a classifier head.

Result: 99.95% top-1 accuracy with soft embeddings + classifier vs 12.36% for a frozen LLM + maximum inner product search (MIPS), plus a 2.62x federated learning speedup.

With federated learning (FL), the data is split across multiple clients (machines). Each client trains its local copy in parallel, then the server averages the updates. Because the heavy computation is shared, total training time goes down.
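The averaging step described above is the classic FedAvg recipe. Below is a minimal NumPy sketch on a toy linear-regression task, assuming plain size-weighted averaging; the paper's exact aggregation rule, model, and hyperparameters are not given here, and the helper names are hypothetical. The loop runs clients one after another for simplicity, whereas the speedup in a real deployment comes from running them in parallel.

```python
import numpy as np


def local_update(weights, X, y, lr=0.2, epochs=5):
    """One client's training: a few epochs of gradient descent on a
    least-squares model, using only that client's local data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w


def fedavg_round(global_w, clients):
    """Server step: average client models, weighted by local dataset size."""
    sizes = np.array([len(y) for _, y in clients], dtype=float)
    local_ws = [local_update(global_w, X, y) for X, y in clients]  # parallel in practice
    return sum(s * lw for s, lw in zip(sizes, local_ws)) / sizes.sum()


rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
clients = []
for n in (50, 80, 120):  # three clients holding different amounts of data
    X = rng.normal(size=(n, 2))
    clients.append((X, X @ true_w))

w = np.zeros(2)
for _ in range(30):
    w = fedavg_round(w, clients)
print(w.round(3))  # close to [2.0, -1.0]
```

Raw data never leaves a client; only the weight vectors travel to the server.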

🧠 The idea (how it's done)

An embedding is just a vector representation of text, and in retrieval-augmented generation (RAG) these embeddings are used to compare a query with documents.

Most retrieval systems use a fixed similarity measure: the query and document embeddings are compared with a dot product, and the top-scoring documents are chosen.
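For reference, the fixed-similarity baseline boils down to a dot product and a top-k selection. This brute-force sketch is for illustration only; production systems usually put an approximate nearest-neighbor index in front of it, and `mips_top_k` is a hypothetical name.

```python
import numpy as np


def mips_top_k(query_vec, doc_matrix, k=3):
    """Fixed-similarity retrieval: score every document by dot product with
    the query and return the indices of the k highest scores, best first."""
    scores = doc_matrix @ query_vec
    top = np.argpartition(-scores, k - 1)[:k]  # unordered top-k without a full sort
    return top[np.argsort(-scores[top])]      # order just those k


docs = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]])
query = np.array([1.0, 0.2])
print(mips_top_k(query, docs, k=2))  # [0 2]
```

Nothing in this function can learn: if the embeddings are poorly suited to the dataset, the scores are too.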

Now, if the embeddings are not tuned for the new dataset, then the dot product gives poor matches. To fix that, one could fine-tune the entire language model so that its embeddings fit the new domain. But that is heavy, slow, and not practical for small devices.

This paper freezes a small language model and adds a tiny trainable adapter that learns soft embeddings. It then bolts on a classifier head that acts as the retriever, which means retrieval is learned rather than fixed.
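The frozen-backbone idea can be sketched end to end in NumPy. Everything here is a stand-in chosen for illustration, not the paper's setup: a fixed random projection plays the frozen language model, a zero-initialized residual linear layer plays the adapter, and a softmax classifier over 4 hypothetical document IDs plays the learned retriever. Only the adapter and head receive gradient updates.

```python
import numpy as np

rng = np.random.default_rng(0)
D_IN, D_EMB, N_DOCS = 20, 16, 4

# Stand-in for the frozen language model: a fixed random projection + tanh.
FROZEN = rng.normal(scale=0.3, size=(D_IN, D_EMB))


def frozen_embed(X):
    return np.tanh(X @ FROZEN)  # never updated during training


def forward(X, A, H, b):
    E = frozen_embed(X)
    S = E + E @ A               # "soft embedding": frozen output plus adapter residual
    return E, S, S @ H + b      # classifier head scores each document ID


def train(X, y, steps=300, lr=0.1):
    A = np.zeros((D_EMB, D_EMB))  # adapter starts as a no-op
    H = np.zeros((D_EMB, N_DOCS))
    b = np.zeros(N_DOCS)
    onehot = np.eye(N_DOCS)[y]
    for _ in range(steps):
        E, S, logits = forward(X, A, H, b)
        p = np.exp(logits - logits.max(axis=1, keepdims=True))
        p /= p.sum(axis=1, keepdims=True)   # softmax over document IDs
        G = (p - onehot) / len(y)           # grad of mean cross-entropy w.r.t. logits
        dA = E.T @ (G @ H.T)                # backprop through the adapter
        dH = S.T @ G
        db = G.sum(axis=0)
        A -= lr * dA
        H -= lr * dH
        b -= lr * db
    return A, H, b


# Toy data: noisy bag-of-words vectors, each relevant to one of 4 documents.
prototypes = rng.normal(size=(N_DOCS, D_IN))
y = rng.integers(0, N_DOCS, size=200)
X = prototypes[y] + 0.3 * rng.normal(size=(200, D_IN))

frozen_before = FROZEN.copy()
A, H, b = train(X, y)
pred = forward(X, A, H, b)[2].argmax(axis=1)
print("train accuracy:", (pred == y).mean())
```

Because the backbone never changes, only `A`, `H`, and `b` would need to travel between clients, which is what keeps the federated setup cheap.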

This way, the embeddings become tuned to the new dataset without touching the heavy model. Then the retriever (the classifier head) can work much better than simple dot products.

Those tiny pieces are the only things trained across clients with federated learning and differential privacy, so the heavy model stays frozen and shared.

In this paper, “client” means a participant in the federated learning setup. A client is simply one machine (like a phone, laptop, or small server) that has access to its own local dataset.
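Differential privacy is commonly added to federated training by clipping each client's update and adding calibrated Gaussian noise, as in DP-FedAvg. The sketch below assumes that central variant (noise added once at the server); the source does not say which mechanism the paper uses, and `dp_average` is a hypothetical helper. Since only the small adapter and head parameters are exchanged, the vectors being clipped stay small.

```python
import numpy as np


def dp_average(updates, clip_norm=1.0, noise_multiplier=1.0, seed=0):
    """Clip each client update to L2 norm <= clip_norm, average them,
    then add Gaussian noise scaled to one client's maximum influence."""
    rng = np.random.default_rng(seed)
    clipped = []
    for u in updates:
        scale = min(1.0, clip_norm / (np.linalg.norm(u) + 1e-12))
        clipped.append(u * scale)  # no single client can shift the model by more than clip_norm
    mean = np.mean(clipped, axis=0)
    sigma = noise_multiplier * clip_norm / len(updates)  # sensitivity of the mean
    return mean + rng.normal(scale=sigma, size=mean.shape)


client_updates = [np.array([3.0, 4.0]), np.array([0.1, 0.0])]
print(dp_average(client_updates, clip_norm=1.0, noise_multiplier=0.0))  # clipping only
print(dp_average(client_updates, clip_norm=1.0, noise_multiplier=1.0))  # clipped + noised
```

Turning `noise_multiplier` into a concrete (ε, δ) guarantee requires a separate privacy accountant, which this sketch leaves out.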

This setup beats maximum inner product search, hits 99.95% top-1 on a spam test, and gets up to 2.62x faster training when distributed, while matching centralized accuracy.

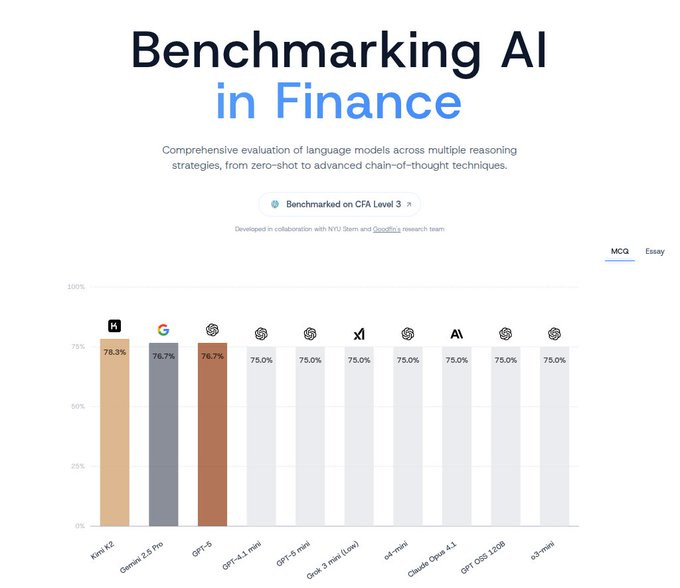

💼 AI just passed a brutal finance exam most humans fail

Frontier AI models can now pass CFA Level III exams, including essays, in minutes, based on a study that tested 23 models on portfolio and wealth planning scenarios.

Becoming a Chartered Financial Analyst (CFA) is not an easy task. Indeed, the exam has three levels, and most people spend several years studying before they can pass.

Top-scoring LLMs:

79.1% for o4-mini

77.3% for Gemini 2.5 Flash

74.9% for Claude Opus 4

Graders noted that the essay evaluation was even stricter than grading by certified humans. Level III focuses on case-based portfolio construction and client constraints, with long written responses. Earlier tests showed models could pass Levels I and II but failed the Level III essays, highlighting the challenge of written reasoning.

The experiments used safeguards against leakage, and researchers stressed that context and intent in client advice still need a human lead. In practice this points to AI drafting case answers, building audit trails, and explaining tradeoffs in plain language while a charterholder reviews, signs off, and handles compliance.

That’s a wrap for today, see you all tomorrow.