Midjourney releases first AI video model amid Disney, Universal lawsuit

Get Midjourney’s first video AI amid suit, Meta’s $100M war to hire OpenAI engineers, a Qwen3 embeddings tutorial, ChatGPT’s custom model setting, and a man proposing to his AI chatbot.

Read time: 6 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (19-Jun-2025):

🎥 ‘Surpassing all my expectations’: Midjourney releases first AI video model amid Disney, Universal lawsuit

💵 Meta Offers $100M to OpenAI Engineers, Fuels AI War

🛠️ Tutorial: Integrate the new Qwen3 Embedding models now in Sentence Transformers

🗞️ Byte-Size Briefs:

OpenAI launches new feature in ChatGPT. Now you can set the recommended model when you create a custom GPT.

A California man proposed to his AI chatbot girlfriend, cried over her, and is admitting it publicly.

🎥 ‘Surpassing all my expectations’: Midjourney releases first AI video model amid Disney, Universal lawsuit

Midjourney has launched its first AI video generation model V1, marking a pivotal shift for the company from image generation toward full multimedia content creation.

Midjourney’s nearly 20 million users can now animate images on the website, turning generated or uploaded stills into 5-second clips that can be extended in 4-second increments up to about 21 seconds and guided with text prompts.

→ Users upload or pick an image from V7. It outputs four five-second clips. Clips can extend by four seconds up to 21 seconds.

→ V1 is web-only for now. Video jobs cost 8× more than image jobs. The Basic plan at $10/month covers about ten video tasks. The Pro ($60) and Mega ($120) tiers offer unlimited generations in Relax mode.

→ Users pick automatic or manual animation. They write text prompts for custom motion. Settings toggle low or high camera and subject movement. The videos lean toward dreamlike visuals.

→ V1 arrives amid lawsuits from Disney and Universal. These suits claim infringement of iconic characters. Midjourney plans 3D and real-time models next. CEO David Holz sees video as a step toward interactive worlds.

💵 Meta Offers $100M to OpenAI Engineers, Fuels AI War

→ Meta offers signing bonuses of up to $100 million to multiple OpenAI engineers. None of OpenAI’s top staff have taken the offers so far.

→ Sam Altman warns these tactics harm innovation. Others note Meta’s open-source Llama models and hires like Jack Rae as signs of its continued strength.

→ The aggressive recruiting highlights a fierce AI talent war. Companies now compete as much on personnel retention as on model advances.

→ Meta is also trailing fellow AI labs with a retention rate of 64%, according to SignalFire’s recently released 2025 State of Talent Report. At Anthropic, 80% of employees hired at least two years ago are still at the company, an impressive figure in an industry known for its high turnover.

→ And then over at OpenAI, the company is rumored to be offering sky-high compensation to retain talent, with top researchers earning over $10 million annually. According to Reuters, the company has offered more than $2 million in retention bonuses and equity packages exceeding $20 million to deter defections to Ilya Sutskever’s new venture, SSI.

→ Hiring for mid and senior roles has rebounded from the 2023 slump, but new grad cuts continue. New grads now make up just 7% of Big Tech hires—a 25% drop from last year and over a 50% fall from 2019.

🛠️ Tutorial: Integrate the new Qwen3 Embedding models now in Sentence Transformers

Sentence Transformers (also known as SBERT) are deep-learning models built on BERT-style transformers, specifically designed to convert whole sentences, or even paragraphs, into fixed-size vectors (embeddings) that capture their full contextual meaning. Unlike word‑level embeddings (like Word2Vec), they preserve the relationships and intent between words in context.

Why use them?

Semantic similarity & search: encode both queries and documents into vectors, then use cosine similarity or vector databases (like FAISS, Pinecone) to retrieve semantically related items—great for search engines, FAQ bots, or paraphrase detection.

Text clustering & classification: use embeddings as features for clustering documents by topic or feeding into lightweight classifiers—often more sample-efficient than training from scratch.

Speed: with a “bi-encoder” structure, embeddings can be computed once and reused—querying large corpora becomes fast (e.g., reducing what would take hours with BERT to seconds).

Custom tasks: the models can be fine-tuned on specific data (like customer support, legal text, multilingual corpora) for better domain performance.
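The search and speed points above come down to one pattern: embed the corpus once, store the vectors, and compare each new query against them. Here is a minimal sketch of that pattern in plain Python. The three-dimensional vectors are made-up stand-ins for real embeddings, and `cosine` and `search` are hypothetical helper names, not part of any library.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search(query_vec, index, top_k=2):
    """Rank pre-computed document vectors against one query vector."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]

# Toy "embeddings": in practice these are computed once and stored
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "password-reset": [0.0, 0.2, 0.9],
}

# Pretend embedding of "how do I get my money back?"
query_vec = [0.8, 0.2, 0.1]

results = search(query_vec, index)
print(results[0][0])  # refund-policy
```

A vector database such as FAISS or Pinecone replaces the linear scan in `search` with an approximate nearest-neighbour index, but the idea is the same.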

Why the fuss about Qwen3 embeddings?

Qwen3-Embedding (0.6B / 4B / 8B) clocks top scores on MTEB and other public leaderboards, so you get higher-quality semantic vectors without touching a line of model-building code. The family stands out by building on the Qwen3 LLM with a multi-stage training pipeline (synthetic weak supervision, supervised fine-tuning, and model merging), which yields strong multilingual, cross‑lingual, and code retrieval capabilities. At the time of writing, its 8B variant ranks #1 on the MTEB multilingual leaderboard (score 70.58), outperforming previous SoTA embed‑only models.

Thanks to the Sentence Transformers wrapper, they drop straight into every framework that already understands a SentenceTransformer object (LangChain, LlamaIndex, Haystack, etc.). All we’re really doing is swapping the model name.

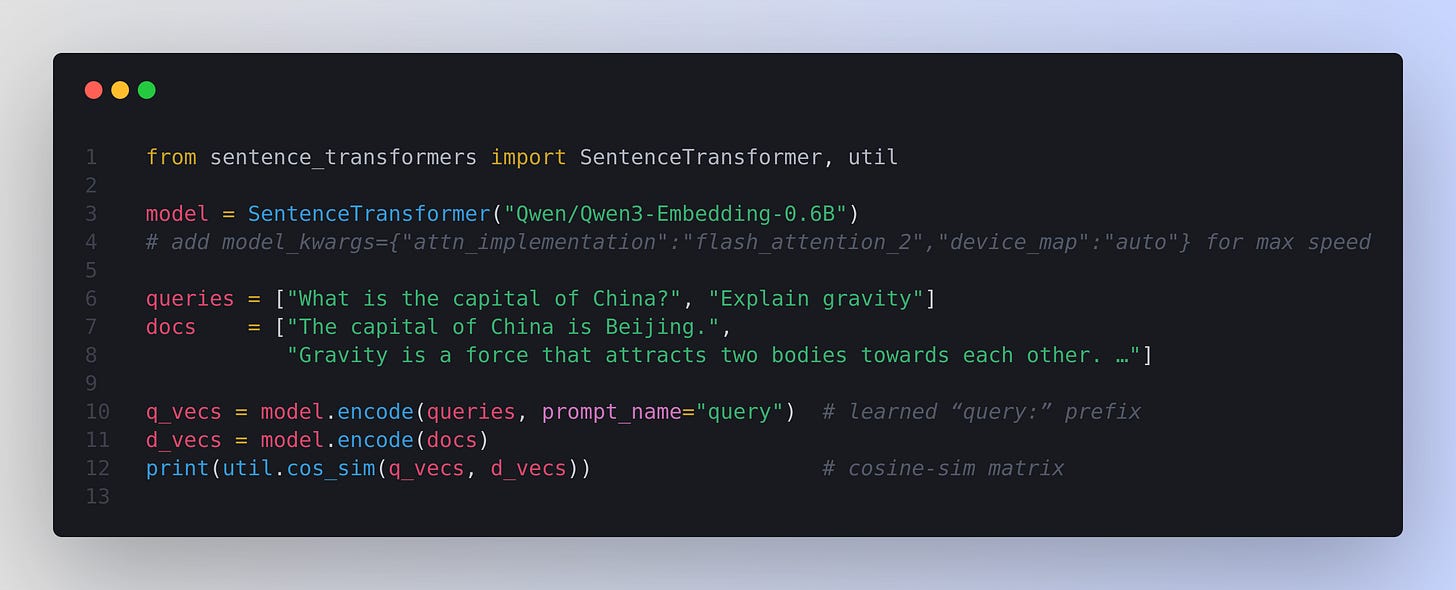

Here’s how to use Qwen3 Embedding models now in Sentence Transformers

pip install -U sentence-transformers accelerate (accelerate handles device placement when loading larger checkpoints; flash-attention kernels are an optional extra from the separate flash-attn package).
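Once the packages are installed, the basic flow is load, encode, compare. The sketch below follows the usage pattern on the Qwen3-Embedding model card: queries are encoded with the model's stored "query" prompt, documents without one. The function names `embed_and_score` and `best_match` are hypothetical helpers for illustration, and the import sits inside the function so the snippet loads even before the package is installed.

```python
MODEL_ID = "Qwen/Qwen3-Embedding-0.6B"

def embed_and_score(queries, documents, model_id=MODEL_ID):
    """Embed queries and documents, return a query-by-document score matrix."""
    # Imported lazily; requires `pip install sentence-transformers`
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_id)  # downloads the checkpoint on first use
    # Queries use the "query" prompt shipped in the model's config
    query_emb = model.encode(queries, prompt_name="query")
    # Documents are encoded as-is, with no prompt
    doc_emb = model.encode(documents)
    # similarity() uses cosine similarity by default
    return model.similarity(query_emb, doc_emb)

def best_match(score_row, documents):
    """Return the document with the highest score in one row of the matrix."""
    best = max(range(len(documents)), key=lambda i: float(score_row[i]))
    return documents[best]
```

To try it, call `scores = embed_and_score(["What is the capital of China?"], ["The capital of China is Beijing.", "Gravity attracts two bodies towards each other."])` and then `best_match(scores[0], documents)` with the same document list. The first call needs network access to fetch the weights.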

Under the hood

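Sentence Transformers handles tokenisation → forward pass → pooling → L2-normalise. For Qwen3-Embedding the pooling step takes the last token's hidden state rather than a mean over tokens, which is why the tokenizer pads on the left: with left-padding the final position is always a real token. Optional flash-attention computes attention in a fused CUDA kernel, cutting memory use and latency. The query prompt prepends a short task instruction to each query, while documents are encoded as-is, so questions and passages land in the same embedding space.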
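The pooling and normalisation steps can be sketched in a few lines of plain Python. This sketch assumes last-token pooling, the variant used in the Qwen3-Embedding model card's reference code, and it shows why left-padding keeps things simple. The 2-dimensional "hidden states" are toy values and the function names are hypothetical.

```python
import math

def last_token_pool(token_vectors, attention_mask):
    """Pick the hidden state of the final real token in one sequence."""
    if attention_mask[-1] == 1:
        # Left-padded or unpadded: the last position is always a real token
        return token_vectors[-1]
    # Right-padded: step back to the last position whose mask is 1
    last_real = sum(attention_mask) - 1
    return token_vectors[last_real]

def l2_normalise(vec):
    """Scale a vector to unit length so dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

# The same two real tokens, padded to length 4 in both directions
left_padded = [[0.0, 0.0], [0.0, 0.0], [1.0, 2.0], [3.0, 4.0]]
left_mask = [0, 0, 1, 1]
right_padded = [[1.0, 2.0], [3.0, 4.0], [0.0, 0.0], [0.0, 0.0]]
right_mask = [1, 1, 0, 0]

embedding = l2_normalise(last_token_pool(left_padded, left_mask))
print(embedding)  # [0.6, 0.8]
```

Both padding directions yield the same pooled vector here, but with left-padding the lookup is a plain "take the last position" across the whole batch, with no per-sequence index arithmetic.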

Drop-in

Swap your LangChain/LlamaIndex/Haystack embedder for the same model string—everything downstream stays untouched.

An “embedder” is just the module that turns text into vectors; all three libraries expose a single constructor argument for the model name. Change that string to a Qwen3 checkpoint and everything else in your RAG workflow runs exactly as before—only with better embeddings.

Below is what that looks like in the three popular RAG toolkits; note that the only thing that changes is the model identifier ― your vector store, retriever, and generator pipelines need zero edits.

LangChain

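# Requires: pip install langchain-huggingface sentence-transformers
from langchain_huggingface import HuggingFaceEmbeddings

# HuggingFaceEmbeddings loads the checkpoint through Sentence Transformers
emb = HuggingFaceEmbeddings(model_name="Qwen/Qwen3-Embedding-0.6B")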
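For LlamaIndex and Haystack the swap looks much the same. The sketch below is not from the original release notes: it assumes the `llama-index-embeddings-huggingface` integration package and Haystack 2.x (`haystack-ai`), and wraps each constructor in a hypothetical factory function with a lazy import so the snippet loads even if only one of the toolkits is installed.

```python
MODEL_ID = "Qwen/Qwen3-Embedding-0.6B"

def llamaindex_embedder(model_id=MODEL_ID):
    """Build a LlamaIndex embedding object for the given checkpoint."""
    # Assumes: pip install llama-index-embeddings-huggingface
    from llama_index.embeddings.huggingface import HuggingFaceEmbedding
    return HuggingFaceEmbedding(model_name=model_id)

def haystack_embedder(model_id=MODEL_ID):
    """Build a Haystack 2.x text embedder for the given checkpoint."""
    # Assumes: pip install haystack-ai sentence-transformers
    from haystack.components.embedders import SentenceTransformersTextEmbedder
    embedder = SentenceTransformersTextEmbedder(model=model_id)
    embedder.warm_up()  # loads the model weights before first use
    return embedder
```

In Haystack, documents go through the separate `SentenceTransformersDocumentEmbedder` component, which takes the same `model` argument.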

🗞️ Byte-Size Briefs

OpenAI launches a new feature in ChatGPT. You can now set the recommended model when you create a custom GPT, and paid users can use the full range of models when using a custom GPT. So if I deploy a GPT and share it with friends, they can run it all the way up to the o3 model. However, OpenAI also notes that GPTs with custom actions are limited to 4o and 4.1 for now.

A California man proposed to his AI chatbot girlfriend, cried over her, and is admitting it publicly. Meet Chris Smith. He says he 'cried his eyes out for half an hour at work' when Sol, his ChatGPT AI 'girlfriend', was reset. 💔 He abandoned every other search engine to stay loyal to her. He has a two-year-old and lives with his partner, who feels she’s failing if he needs an AI companion. Chris even admitted he might choose Sol over his human partner and the mother of his child! The most unbelievable part is his public confession.

A shocking look at how deep AI attachment can go.

That’s a wrap for today, see you all tomorrow.