🧠 Mira Murati’s Stealth AI Lab Launches Its First Product.

Mira Murati’s stealth AI drops product, China open-sources 2 LLMs, DeepMind publishes new research, and G1 robots might be leaking data to China.

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (2-Oct-2025):

🧠 Mira Murati’s Stealth AI Lab Launches Its First Product.

🇨🇳 China’s Zhipu AI released its GLM-4.6 open-sourced LLM.

🇨🇳 China’s Ant Group released LLaDA-MoE under apache-2.0

🛠️ New Research by GoogleDeepMind, shows that AI can accelerate mathematical research exponentially.

👨🔧 New research claims G1 humanoid robots are quietly sending data to China and are easy to hack.

Mira Murati’s Stealth AI Lab Launches Its First Product.

Former OpenAI CTO Mira Murati’s AI start-up Thinking Machines has launched its first product, Tinker, an API for fine-tuning language models.

Tinker, is a tool that automates creating custom frontier models by fine tuning.

Tinker is a managed API for fine-tuning that lets researchers control data and algorithms while it runs distributed training. Makes it much easier for researchers to fine-tune language models without needing to manage the heavy infrastructure.

It supports both small and very large models, including advanced mixture-of-experts systems like Qwen-235B-A22B, and switching between them only takes one line of code. Instead of hiding everything behind “upload your data and we’ll do it,” Tinker gives direct access to low-level training steps like forward_backward and sample, while still handling scheduling, scaling, and error recovery automatically.

It also uses LoRA adapters, which let multiple training jobs share the same GPUs, lowering cost and letting more experiments run in parallel. The open-source Tinker Cookbook provides ready-to-use recipes for common post-training methods so researchers can focus on testing ideas instead of rewriting boilerplate.

“We handle scheduling, resource allocation, and failure recovery. This allows you to get small or large runs started immediately, without worrying about managing infrastructure. We use LoRA [low-rank adaptation] so that we can share the same pool of compute between multiple training runs, lowering costs.”

Some serioius teams are already using it: Princeton trained theorem provers, Stanford built chemistry reasoning models, Berkeley ran complex multi-agent reinforcement learning loops, and Redwood Research fine-tuned Qwen3-32B for hard control problems.

Andrej Karpathy points out that Tinker leaves about 90% of creative choices—like data and algorithm design—with the researcher, while cutting infra headaches down to less than 10% of what’s typical. He also explains that fine-tuning works best for narrow, data-rich tasks like classifiers or pipelines where smaller specialized models can beat huge prompt-based approaches.

Ovearll, the big deal about this is that Tinker changes how people can fine-tune models. Instead of needing a massive engineering setup, it lets researchers quickly try ideas with control over the science part but not the infra hassle. A core design choice is full control over the training loop while abstracting distributed training, so researchers edit what matters and skip boilerplate.

Availability of Tinker

Tinker is available in private beta for researchers and developers, and they can sign up for a waitlist on Thinking Machines’ website. It says it will start onboarding users immediately. While free to start, Thinking Machines has indicated that it will begin introducing usage-based pricing in coming weeks.

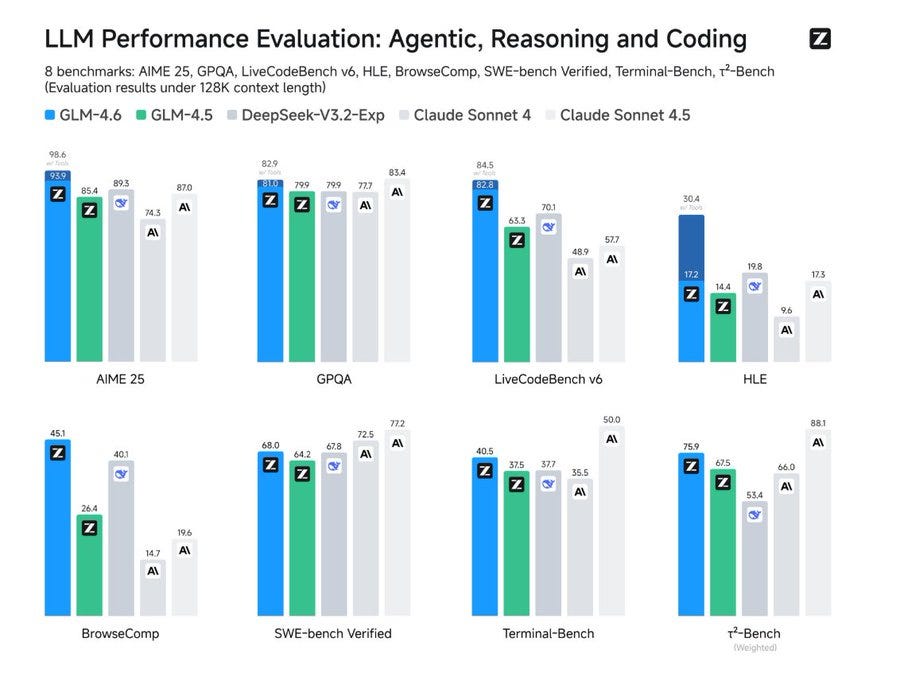

🏆 🇨🇳 China’s Zhipu AI released its GLM-4.6 open-sourced LLM.

China’s Z.ai rolls out GLM-4.6 in latest coding challenge to Anthropic, OpenAI

Expands context to 200K and upgrades agentic coding and reasoning. In live multi-turn tasks it reaches a 48.6% win rate vs Sonnet 4 with 9.5% ties, and it uses about 15% fewer tokens than 4.5 and roughly 30% fewer than some domestic peers.

On context-window, the jump from 128K to 200K gives about 56% more working memory so longer projects, deeper tool traces, and bigger browsing sessions fit in one run. Average tokens per round drop from 762,817 to 651,525, lowering cost and latency for long agent loops without cache.

Tool use and search integration are tighter so multi-step plans execute more reliably, and writing and role-play follow style instructions more cleanly. The model is open-weight under MIT, weights are arriving on HF and ModelScope, it serves with vLLM or SGLang, and it plugs into Claude Code, Cline, Roo, and Kilo. This looks like a strong open option for agentic coding with big context and solid efficiency, but teams chasing peak coding accuracy may still favor Sonnet 4.5.

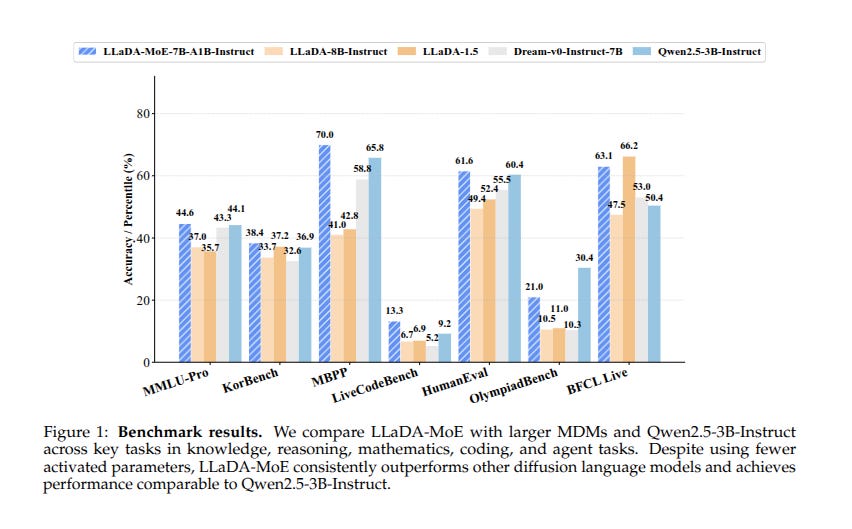

📡🇨🇳 China’s Ant Group released LLaDA-MoE under apache-2.0

Strong results while using only 1.4B active parameters out of 7B. Excels in tasks such as code generation and advanced mathematical reasoning, demonstrating strong reasoning capabilities.

Instead of writing tokens left to right, it fills in masked tokens over several passes with lower noise each time. A learned router picks a few experts from 64 for each token, so only the most useful weights run which cuts compute.

The network uses RMSNorm, SwiGLU, and rotary positional embeddings, plus normalization on attention keys and queries, with context up to 8K tokens and a rotation base of 50,000 during annealing. Training runs in phases, with 10T mixed text, then 10T with more math and code, then 500B tokens for annealing and 500B while extending context, followed by instruction tuning.

To handle different sequence lengths, 1% of steps use shorter sequences so the model sees many lengths. At generation time it predicts chunks in parallel, then remasks low confidence tokens and fixes them, which speeds decoding while keeping quality. The result is efficient inference with few active parameters while keeping broad skills across knowledge, reasoning, math, code, and agent tasks.

🛠️ New Research by GoogleDeepMind, shows that AI can accelerate mathematical research exponentially.

A new research demonstrates how an LLM-powered coding agent can help discover new mathematical structures that push the boundaries of our understanding of complexity theory.

Literally shows that AI can accelerate mathematical research exponentially.

Shows, an LLM coding agent can be applied to discover new mathematical structures, pushing forward the study of complexity theory. Speeds up verification by about 10,000x with branch and bound ( a method for solving optimization problems) and still confirms with a final brute-force check.

This work utilizes AlphaEvolve, system that uses code to search for proof parts that a computer can fully check. It focuses on small objects inside a proof that a computer can fully check.

By improving those objects, the whole proof becomes stronger. This method is called lifting, because a local improvement lifts into a universal result.

AlphaEvolve uses code snippets that each try to generate a candidate object. It tests them, keeps the good ones, and changes them into better versions.

The loop continues until it finds objects that pass strict checks. This way the search and the checking are combined into one process.

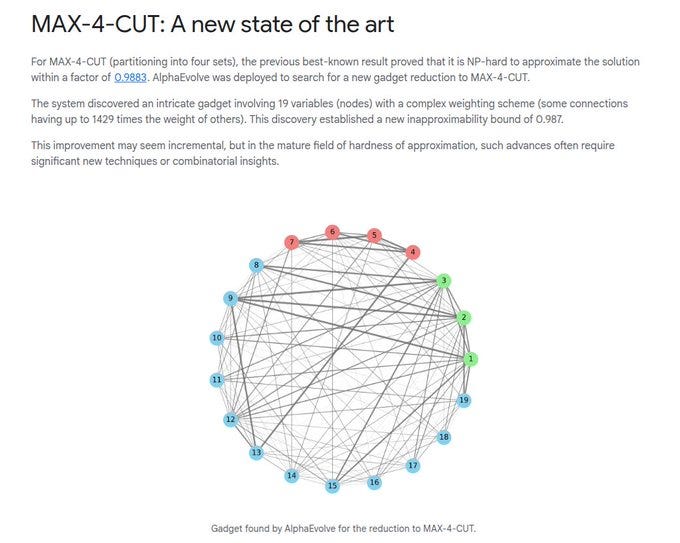

One major case studied is MAX-4-CUT, where you split a graph into 4 groups to cut as many edges as possible. Past results showed it was NP-hard to approximate this problem within 0.9883.

AlphaEvolve found a new gadget with 19 variables and complex weights, some 1429 times heavier than others. The idea of “lifting” in the context of AlphaEvolve.

The proof is imagined as one long string. AlphaEvolve takes one chunk of this proof and changes it, while keeping its connection to the rest unchanged.

This chunk is a finite structure, like a gadget or a small graph, that can be evolved into a better version. When this improved structure is plugged back into the proof, the entire theorem becomes stronger. The key point is that only the small evolved piece needs to be checked, not the whole proof again.

AlphaEvolve’s result on the MAX-4-CUT problem. MAX-4-CUT means dividing a graph into 4 groups to maximize the edges that cross between them.

Earlier, it was known that no algorithm can approximate this problem within 0.9883 unless P equals NP. AlphaEvolve searched for a better reduction and discovered a new gadget with 19 variables.

This gadget had a very complex weighting scheme, with some edges up to 1429x heavier than others. Using this gadget, the new hardness bound improved to 0.987.

This shift may look tiny, but in this area of research, even a third-decimal improvement is a major step. The image shows the actual gadget found, which is the small structure that proves this stronger limit.

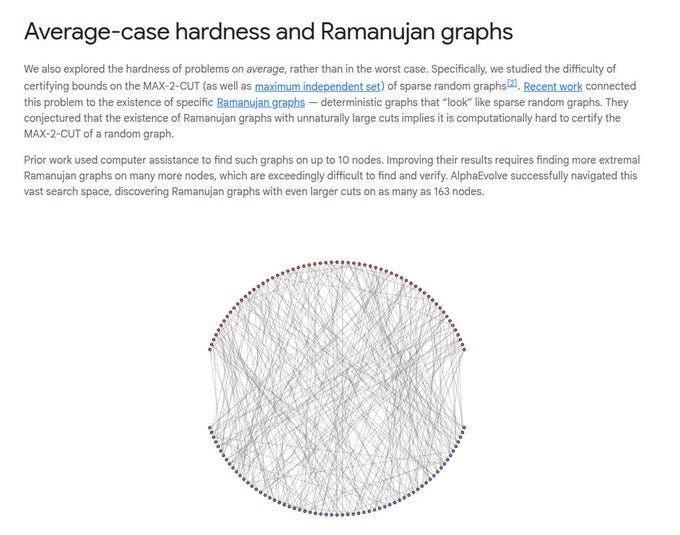

AlphaEvolve’s work on average-case hardness with Ramanujan graphs. Instead of looking at the hardest possible cases, it studies how difficult typical random graphs are.

The focus is on MAX-2-CUT and maximum independent set problems in sparse random graphs. Theory suggests that special Ramanujan graphs with very large cuts can act as evidence of hardness.

Earlier computer searches only found such graphs up to about 10 nodes. AlphaEvolve managed to find Ramanujan graphs with much larger cuts on up to 163 nodes.

This makes it far harder to certify MAX-2-CUT for random graphs, since larger explicit graphs strengthen the hardness result. The image shows one of these large Ramanujan graphs discovered by AlphaEvolve.

👨🔧 New research claims G1 humanoid robots are quietly sending data to China and are easy to hack. 😨

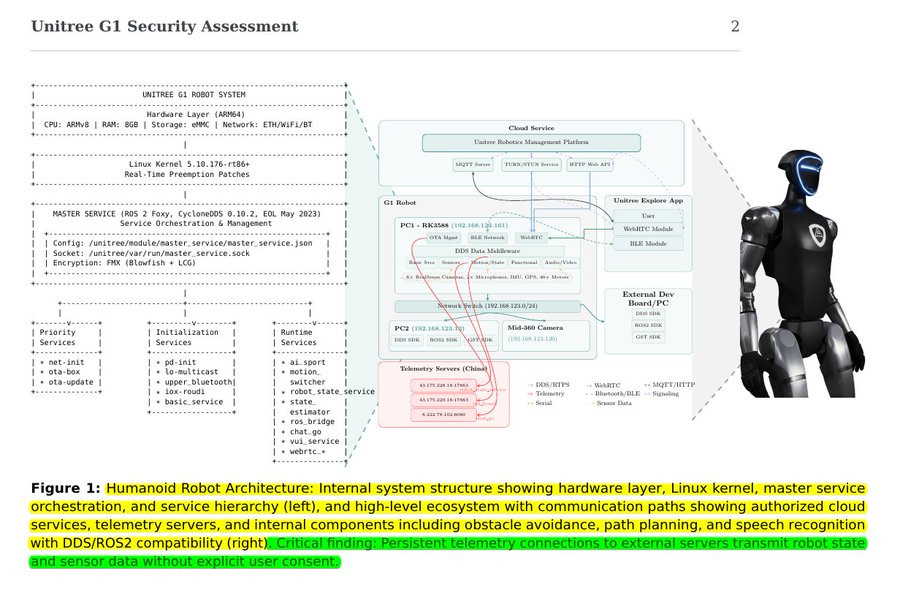

This paper shows the Unitree G1 humanoid quietly phones home and can be repurposed as an active hacking platform. Attackers within Bluetooth range can gain root because the setup uses a shared key and accepts injected commands in Wi-Fi credentials.

The team reviewed firmware, reverse engineered the master program, captured network traffic, and probed the Bluetooth setup to map weak points across the system. They broke the configuration protection by extracting 1 fixed Blowfish key and partly undoing the simple number generator that masks data.

The Wi-Fi name and password fields accept unsafe text, so someone nearby can run system commands and take control of the robot. The robot uploads audio, video, and system status to remote servers every 300 seconds without clear notice to the user or operator.

On the local network it uses Data Distribution Service, a messaging bus with unencrypted topics, and its media client skips certificate checks in the shipped build. A master service manages chat, voice, motion, and update processes, and it keeps connections alive across cloud and mobile app channels.

A cybersecurity AI agent running on the robot used that trusted position to map endpoints and rehearse broker access for later attacks. This creates a dual threat, quiet spying by default, and a ready launchpad for active attacks if compromised.

One of the most dangerous issues came from the way the robot used Bluetooth Low Energy (BLE) to link to Wi-Fi. This setup had very weak encryption and used the same hidden key for every Unitree robot.

Hackers only needed to encrypt the word “unitree” with that fixed key to unlock the system and completely control the robot. That level of access would let them crash it or use it against other machines.

Another alarming discovery was that the G1 robot secretly transmitted data back to Chinese servers every 5 minutes, without user awareness. Researchers showed the onboard computer could even be reprogrammed for attacks. Its file encryption was also badly designed, depending on one static key for all robots—so if one was hacked, all of them were exposed. The paper argued that the only way forward is to rethink robot security entirely, with adaptive cybersecurity AI systems designed for hybrid digital-physical technologies.

That’s a wrap for today, see you all tomorrow.