🏆 Mistral 3 (675B param) is launched and it beats DeepSeek 3.1

Mistral 3 beats DeepSeek, Claude boosts Anthropic productivity, AWS drops Trainium3, Transformers v5 goes modular, and Perplexity targets prompt injection in web content.

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (3-Dec-2025):

🏆 Mistral 3 (675B param) is launched and it beats DeepSeek 3.1

🤖 Anthropic just released a detailed study revealing that their engineers now rely on Claude for 60% of their daily work, resulting in a self-reported 50% productivity increase.

📡 Anthropic acquires Bun (JavaScript Runtime) to accelerate code, announces Claude Code hit $1B milestone.

📢 AWS launched Trainium3 and new Trn3 UltraServers

📦 HuggingFace pushed out Transformers v5, and it basically rebuilds all the models into clearer, modular sections.

🌐 Perplexity introduced BrowseSafe and BrowseSafe-Bench, tools built to spot hidden prompt-injection tricks that may be buried inside webpages.

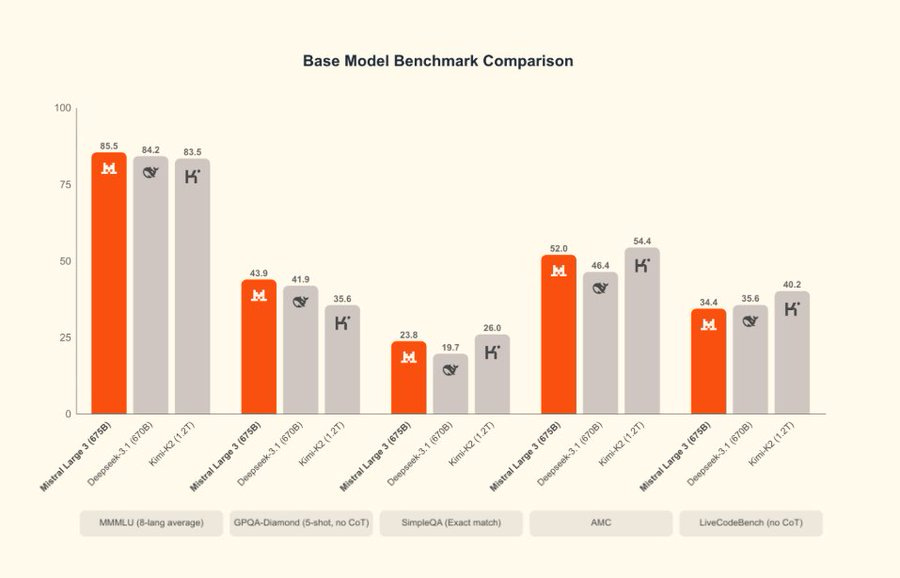

🏆 Mistral 3 (675B) is launched and it beats DeepSeek 3.1

Mistral introduces the Mistral 3 family, open-weight MoE models including its flagship Large 3

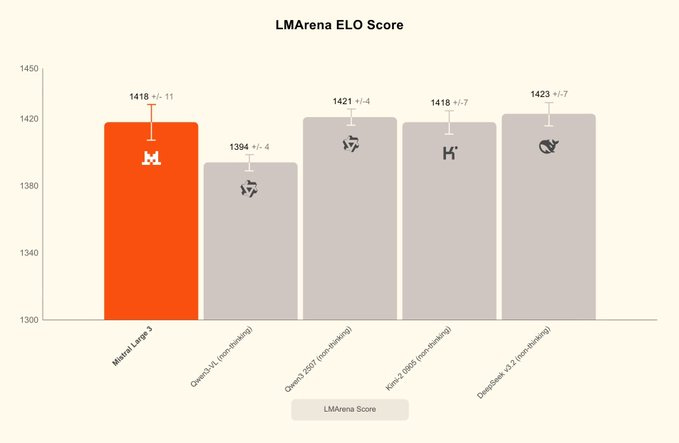

In lmarena benchmark Mistral-Large-3 lands at #6 among open models and #28 overall on the Text leaderboard. And ranks #2 in OSS non-reasoning models on LMArena.

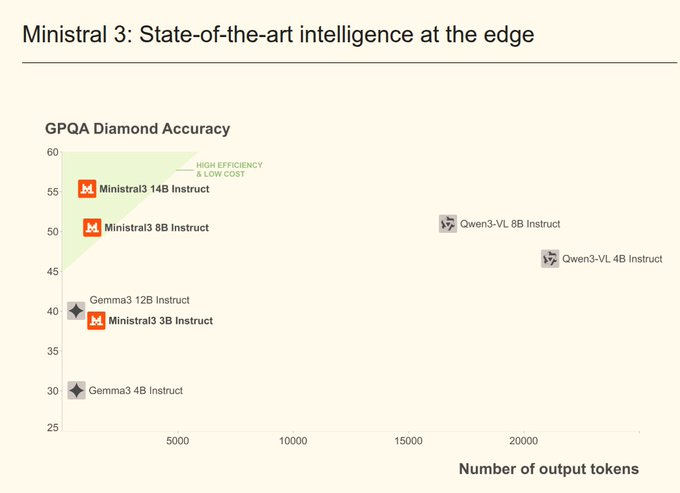

Overall, we get 1 big model Mistral Large 3 and also 3 smaller dense models called Ministral 14B, 8B, and 3B. All models are released under the Apache 2.0 license.

Mistral Large 3 is a sparse mixture of experts model with 41B active parameters and 675B total parameters, supports images and text, and is shipped in base and instruct versions, with a reasoning version promised soon.

The Ministral 14B, 8B, and 3B models are standard dense models, each shipped as base, instruct, and reasoning variants, so there are 9 Ministral models total. All the models are hosted on Mistral AI Studio, Amazon Bedrock, Azure Foundry, Hugging Face, Modal, IBM WatsonX, OpenRouter, Fireworks, Unsloth AI, and Together AI, with NVIDIA NIM and AWS SageMaker listed as coming soon.

Mistral Large 3 debuts at #2 in the OSS non-reasoning models category (#6 amongst OSS models overall) on the LMArena leaderboard.

For edge and local use cases, they release the Ministral 3 series, available in three model sizes: 3B, 8B, and 14B parameters.

All these models under the Apache 2.0 license.

You can run the models through Mistral AI Studio, Hugging Face, major cloud providers, or fine-tune them through Mistral’s training services when you need domain-specific behavior.

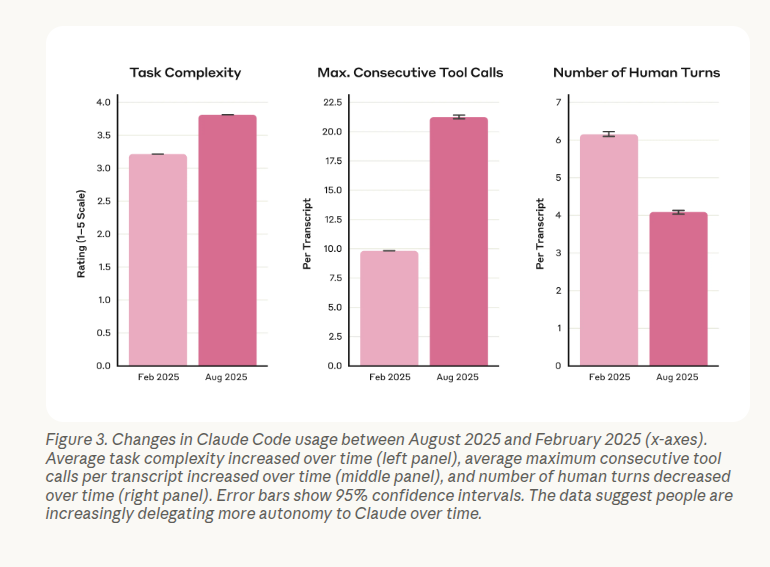

🤖 Anthropic just released a detailed study revealing that their engineers now rely on Claude for 60% of their daily work, resulting in a self-reported 50% productivity increase.

The data comes from surveying 132 technical staff and analyzing 200,000 internal coding transcripts. Engineers mainly use the tool for debugging and wrapping their heads around complex code, rather than just generating boilerplate text.

There is a clear trend toward autonomy, with the AI now handling about 20 consecutive actions—like editing files or running commands—on its own, which is double what it could do in February-25. This capability allows staff to act as “full-stack” developers, meaning a backend engineer can suddenly build a complex user interface by prompting the AI rather than learning the specific framework from scratch.

Interestingly, about 9% of the work involves fixing “papercuts,” which are minor code improvements or small tools that developers usually ignore because they aren’t worth the manual effort. However, the team expressed valid concerns about “skill atrophy,” fearing that relying on AI prevents them from deeply understanding the systems they are building.

Social dynamics are also shifting, as junior engineers are asking the AI for help instead of approaching senior colleagues, which potentially weakens human mentorship loops. Ultimately, the role is evolving from writing code to reviewing it, with humans acting more like managers of AI agents than individual contributors.

📡Anthropic is buying Bun.

By bringing Bun in house while keeping it open source and MIT licensed, Anthropic gets tight control over the runtime. “For those using Claude Code, this acquisition means faster performance, improved stability, and new capabilities.”

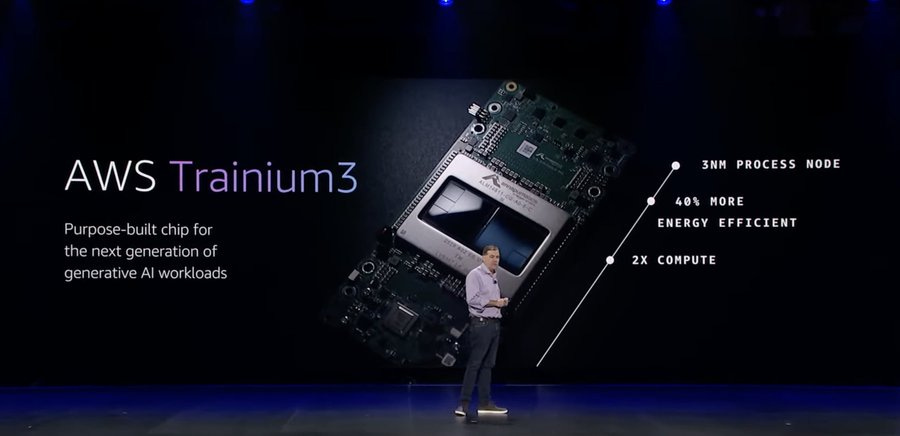

📢 AWS launched Trainium3 and new Trn3 UltraServers

AWS announced Trainium3 UltraServers now available, Enabling customers to train and deploy AI models faster at lower cost

Up to 4.4× higher performance vs Trn2

3.9× higher memory bandwidth vs Trn2

4× better performance per watt vs Trn2

144 chips/server = 362 FP8 PFLOPs, 20.7TB HBM3e, 706TB/s aggregate bandwidth

Scale to 1M+ chips in UltraClusters

3nm Trainium3, 2.52 PFLOPs FP8/chip, 144GB HBM3e, 4.9TB/s per-chip bandwidth

NeuronSwitch-v1 fabric, 2× inter-chip bandwidth vs Trn2

New first-class datatypes on Trainium3: MXFP8 and MXFP4 for dense and MoE workloads

AWS also teased Trainium4 that will interoperate with Nvidia GPUs via NVLink Fusion, with timing not announced.

Trainium3 is a 3nm chip delivering 2.52 PFLOPs FP8 per chip, 144GB HBM3e, and 4.9TB/s memory bandwidth, with new low-precision formats MXFP8 and MXFP4 for dense and MoE workloads. A Trn3 UltraServer packs 144 chips for 362 PFLOPs FP8, 20.7TB HBM3e, and 706TB/s aggregate bandwidth, connected by NeuronSwitch-v1 that doubles inter-chip bandwidth over Trn2.

EC2 UltraClusters 3.0 can scale Trn3 to hundreds of thousands of chips, targeting frontier-scale training, long-context reasoning, and real-time multimodal serving. AWS reports up to 4.4x higher performance, 3.9x higher memory bandwidth, and 4x better performance per watt versus Trn2, improving price-performance for both training and inference.

On Bedrock, Trn3 delivers up to 3x the performance of Trn2 and over 5x higher output tokens per megawatt at similar per-user latency. Developers get native PyTorch with the AWS Neuron SDK, plus lower-level hooks to tune kernels without changing model code. Early users like Anthropic and others reduced inference costs, helped by the memory-to-compute balance and improved networking.

📦HuggingFace pushed out Transformers v5, and it basically rebuilds all the models into clearer, modular sections.

PyTorch is now the single backend, dropping Flax and TensorFlow and making it easier for large pretraining or fine tuning stacks to share checkpoints. For inference, v5 bundles optimized kernels, continuous batching, and paged attention, and introduces transformers serve, an OpenAI compatible HTTP server for hitting Transformers models directly.

In production and on device, models move more easily between Transformers and engines like vLLM or ONNXRuntime, so 1 model definition works across several runtimes. Quantization becomes a first class path, with redesigned weight loading so 8bit and 4bit checkpoints work cleanly with TorchAO and bitsandbytes, which should reduce duplicated engineering work across the open source AI ecosystem.

🌐 Perplexity introduced BrowseSafe and BrowseSafe-Bench, tools built to spot hidden prompt-injection tricks that may be buried inside webpages.

Prompt injection here means attacker text inside HTML, such as comments or hidden fields, that tells the agent to ignore instructions and do something harmful.

BrowseSafe-Bench models this with 14K+ HTML pages built along 3 axes, covering attack goal, placement in the page, and wording style from explicit to stealthy.

They generate fake training examples by taking many different kinds of webpages and automatically inserting attack instructions into them.

They also create tricky “safe” examples, like policy notices or code snippets that look a bit like commands, so the model learns not to panic and mislabel normal content as malicious.

They fine-tune a Mixture-of-Experts detector on this corpus using Qwen-30B-A3B-Instruct-2507 and achieve about 0.91 F1 score, while small classifiers stay near 0.35 F1 score and generic safety LLMs drop without slow reasoning passes.

Experiments show multilingual and indirect prompts are hardest to spot, visible footers beat hidden metadata at fooling detectors, and adding benign distractor text can drag balanced accuracy from around 90%+ to roughly the low 80% range.

In production Comet treats any web-returning tool as untrusted, runs BrowseSafe on its output, sends ambiguous cases to a slower frontier model, and turns those edge examples into new synthetic training data.

Overall this work makes browser agent security feel more like a measurable system problem and less like scattered prompt tricks.

That’s a wrap for today, see you all tomorrow.

Solid roundup. The Anthropic productivity numbers are interesting, but that "skill atrophy" concern they mention is legit. When junior engineers default to Claude instead of asking seniors, you're basically trading short-term velocity for long-term team knowledge transfer. The Transformers v5 modularity push makes sense though, especially if it reduces the friction of moving models between different runtimes without rewriting everything.

these articles are great and are much appreciated