⚙️ Mistral Medium 3, Anthropic Web Search API and many more

Mistral drops a cheap SOTA LLM, Claude gets web search, Bhindi.ai brings 60+ agents in one chat, plus big moves from OpenAI, NVIDIA, Google, and Alibaba.

Read time: 8 min

📚 Browse past editions here.

(I publish this newsletter multiple times a week: noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (9-May-2025):

🥉 Mistral AI launched Medium-3 LLM, SOTA performance with 8X lower cost

📡 Anthropic launches Web Search API for Claude

🏆 Bhindi.ai: This new Agentic AI tool lets you talk to 60+ AI agents in one chat and get real work done

💼 OpenAI expands leadership with Instacart CEO

🏗️ NVIDIA open-sourced Parakeet TDT 0.6B V2, a 600M parameter automatic speech recognition (ASR) model, which can transcribe 60 minutes of audio in just 1 second

🧠 Alibaba introduced ZeroSearch, an AI that searches on its own and improves by itself

🗞️ Byte-Size Briefs:

Google adds v6e-1 TPUs to Colab with 918 BF16 TFLOPS

Gemini 2.5 Flash costs 150x more than 2.0 Flash to run Artificial Analysis evals

NVIDIA open-sources OpenCodeReasoning (OCR) models up to 32B, 30% more token efficient

OpenAI adds GitHub Repo integration to Deep Research in ChatGPT for code analysis and PR insights.

🥉 Mistral AI launched Medium-3 LLM, SOTA performance with 8X lower cost

🎯 The Brief

Mistral AI launched Mistral Medium 3, a frontier-level LLM delivering 90%+ of Claude 3.7 Sonnet's performance at 8X lower cost, with seamless hybrid/on-prem deployability and enterprise-grade customization. This unlocks serious enterprise use, especially in coding and STEM tasks, while undercutting both open and enterprise model pricing.

⚙️ The Details

→ Mistral Medium 3 targets a new middle-tier: SOTA performance, drastically lower cost, and frictionless deployment.

→ It beats models like Llama 4 Maverick and Cohere Command R+ in both performance and cost, and is priced at $0.40 per million input tokens and $2 per million output tokens.

→ Enterprise features include custom post-training, hybrid/on-prem/in-VPC deployment, and full integration into business workflows.

→ It’s optimized for real-world coding, STEM reasoning, and multimodal tasks, validated via both internal benchmarks and third-party human evals.

→ The Mistral Medium 3 API is available on Mistral La Plateforme and Amazon SageMaker, and is coming soon to IBM watsonx, NVIDIA NIM, Azure AI Foundry, and Google Cloud Vertex AI.

→ Supports deployment on setups with 4+ GPUs, making it viable for in-house runs.

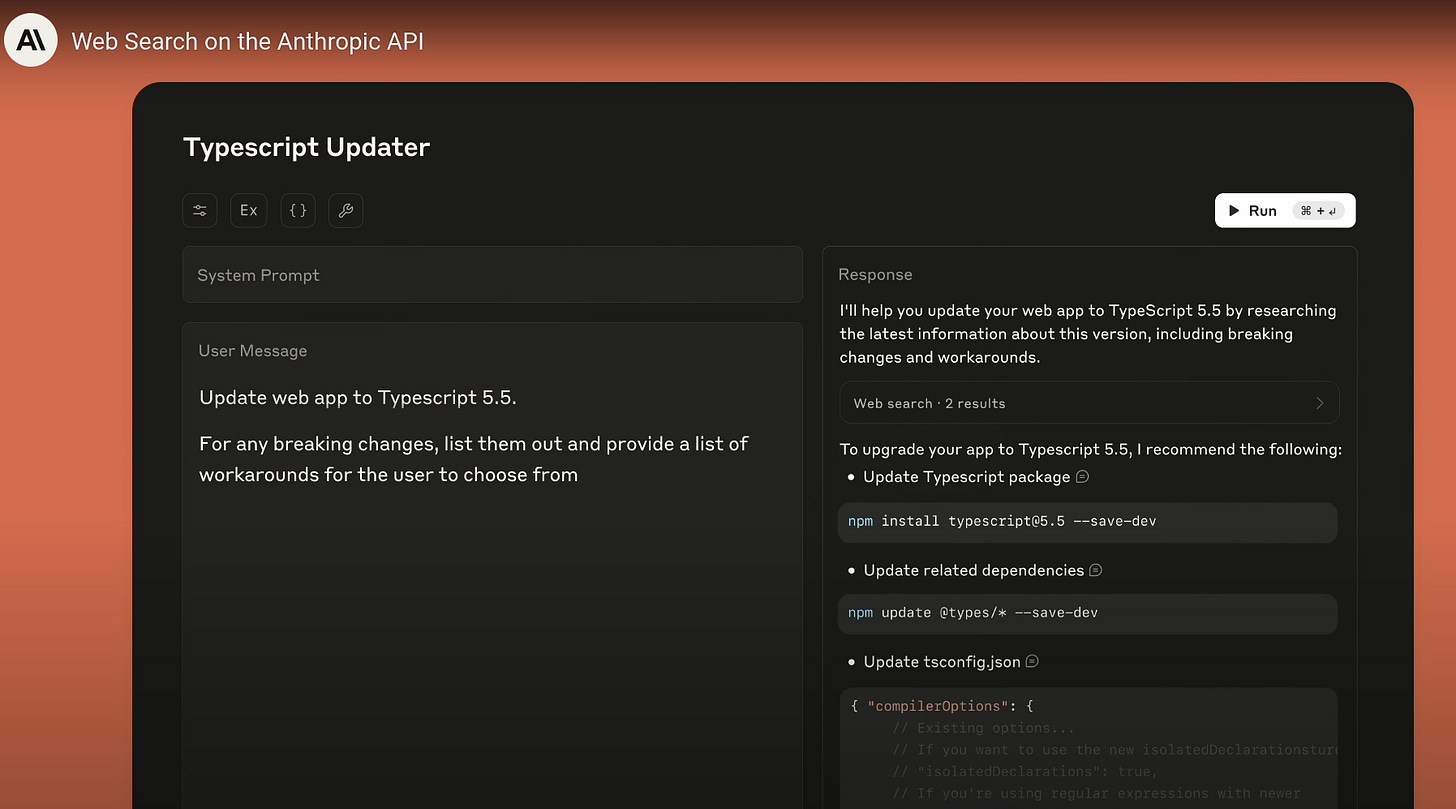
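To make the pricing concrete, here is a minimal Python sketch that estimates the bill for a workload at the listed Mistral Medium 3 rates ($0.40 per million input tokens, $2 per million output tokens). The workload sizes are hypothetical and the rates are hard-coded from the figures above, so check Mistral's current pricing before relying on them.

```python
# Listed Mistral Medium 3 API rates in USD per million tokens (hard-coded from the figures above).
INPUT_PRICE_PER_M = 0.40
OUTPUT_PRICE_PER_M = 2.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost for the given token counts."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return round(input_cost + output_cost, 6)


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens per day.
    daily = estimate_cost(10_000_000, 2_000_000)
    print(f"Daily: ${daily:.2f}, 30 days: ${daily * 30:.2f}")
```

At these rates each output token costs 5x an input token, so generation-heavy workloads are dominated by the output side of the bill.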

📡 Anthropic launches Web Search API for Claude

🎯 The Brief

Anthropic launched a Web Search API for Claude models, enabling real-time web access for current, accurate responses. It supports Claude 3.7 Sonnet, Claude 3.5 Sonnet, and Claude 3.5 Haiku and is priced at $10 per 1,000 searches, plus standard token costs. This boosts Claude's utility in high-stakes, time-sensitive domains like finance, law, and dev tooling.

⚙️ The Details

→ Developers can now enable web search in Claude API calls, letting Claude pull live data to enhance responses with up-to-date info.

How web search works: When you add the web search tool to your API request:

Claude decides when to search based on the prompt.

The API executes the searches and provides Claude with the results. This process may repeat multiple times throughout a single request.

At the end of its turn, Claude provides a final response with cited sources.
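The final response interleaves search calls, search results, and cited text. The Python sketch below walks a response that has already been parsed into a dict and collects the search queries, the cited URLs, and the number of billed searches. The block and field names (server_tool_use, web_search_tool_result, citations, usage.server_tool_use.web_search_requests) are assumed from Anthropic's web search documentation, and the sample response is hand-written and hypothetical, so verify the shape against a real API response. The fee uses the $10 per 1,000 searches rate quoted above and excludes token costs.

```python
from typing import Any

# Assumed search fee from the pricing above: $10 per 1,000 searches (tokens billed separately).
PRICE_PER_SEARCH_USD = 10 / 1000


def summarize_web_search(response: dict[str, Any]) -> dict[str, Any]:
    """Collect queries, cited URLs, and the search fee from a parsed Messages API response."""
    queries: list[str] = []
    cited_urls: list[str] = []
    for block in response.get("content", []):
        if block.get("type") == "server_tool_use" and block.get("name") == "web_search":
            # Each server-side tool call carries the query Claude generated.
            queries.append(block["input"]["query"])
        elif block.get("type") == "text":
            # Text blocks may carry citations pointing at search results (or None).
            for citation in block.get("citations") or []:
                url = citation.get("url")
                if url and url not in cited_urls:
                    cited_urls.append(url)
    searches = (
        response.get("usage", {})
        .get("server_tool_use", {})
        .get("web_search_requests", 0)
    )
    return {
        "queries": queries,
        "cited_urls": cited_urls,
        "searches": searches,
        "search_fee_usd": searches * PRICE_PER_SEARCH_USD,
    }


# Hypothetical, hand-written response used only to exercise the parser.
SAMPLE_RESPONSE = {
    "role": "assistant",
    "content": [
        {"type": "server_tool_use", "id": "srvtoolu_01", "name": "web_search",
         "input": {"query": "TypeScript 5.5 release notes"}},
        {"type": "web_search_tool_result", "tool_use_id": "srvtoolu_01", "content": []},
        {"type": "text", "text": "Example answer text.",
         "citations": [{"type": "web_search_result_location",
                        "url": "https://example.com/ts-5-5",
                        "title": "Example page", "cited_text": "..."}]},
    ],
    "usage": {"server_tool_use": {"web_search_requests": 1}},
}

if __name__ == "__main__":
    print(summarize_web_search(SAMPLE_RESPONSE))
```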

→ Claude uses reasoning to decide when to trigger web search, generates targeted queries, conducts multi-step lookups, and cites sources in answers.

→ Web search supports agentic workflows: Claude can refine its searches iteratively to handle complex requests, and the max_uses parameter caps how many searches it may run in a single request.

→ Admins get granular control via domain allow/block lists and org-level settings to govern accessible content.

→ Claude Code integrates web search for up-to-date dev workflows, API docs, and library usage, ideal for fast-moving tech stacks.

→ Early adopters like Quora's Poe and Adaptive.ai report faster, deeper, and more accurate real-time responses.

Example of how to use web search: just provide the web search tool in your API request.
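A minimal Python sketch of such a request, using only the standard library, is below. The tool type string web_search_20250305, the max_uses and allowed_domains fields, the claude-3-7-sonnet-latest model alias, and the anthropic-version header value follow Anthropic's documentation at launch; treat them as assumptions and check the current docs. The question and domain list are hypothetical.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_web_search_request(question, max_uses=5, allowed_domains=None):
    """Build a Messages API payload that lets Claude call the web search tool."""
    tool = {
        "type": "web_search_20250305",  # tool version string at launch; verify in current docs
        "name": "web_search",
        "max_uses": max_uses,  # upper bound on searches within this one request
    }
    if allowed_domains:
        # Optional allow list: only results from these domains are returned.
        tool["allowed_domains"] = list(allowed_domains)
    return {
        "model": "claude-3-7-sonnet-latest",
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": question}],
        "tools": [tool],
    }


def send(payload):
    """POST the payload to the Messages API; requires ANTHROPIC_API_KEY in the environment."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "x-api-key": os.environ["ANTHROPIC_API_KEY"],
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


if __name__ == "__main__":
    payload = build_web_search_request(
        "How do I update a web app to TypeScript 5.5?",
        max_uses=3,
        allowed_domains=["typescriptlang.org"],  # hypothetical allow list
    )
    print(json.dumps(payload, indent=2))
    # Uncomment to call the live API (billed per search plus tokens):
    # print(send(payload))
```

Keeping payload construction separate from the network call makes it easy to inspect or test the request without spending on live searches.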

🏆 Bhindi.ai: This new Agentic AI tool lets you talk to 60+ AI agents in one chat and get real work done

The concept is so powerful.

Seeing 60+ agents collaborate in one chat using multiple platforms as tools is wild, especially with real-world use cases popping up in marketing, sales, and customer service.

Connect flights, hotels, weather, maps, Slack, and trends across apps, effortlessly. Bhindi.ai handles it all with 10 agents running in perfect sync. It can fetch your Trello tasks, merge PRs, and update Slack, all in one flow.

From reading emails to pulling GitHub PRs to syncing Slack threads.

💼 OpenAI expands leadership with Instacart CEO

🎯 The Brief

OpenAI appointed Instacart CEO Fidji Simo as its new CEO of Applications to lead its product and operational teams, enabling CEO Sam Altman to focus more on research, infrastructure, and safety. This signals a serious scale-up of OpenAI’s global product and infrastructure ambitions.

⚙️ The Details

→ Fidji Simo joins as CEO of Applications, a new division merging OpenAI’s product and business ops that deliver research to users at scale.

→ She reports directly to Sam Altman, who remains CEO of OpenAI and will now concentrate on Research, Compute, and Safety Systems.

→ Simo has been on OpenAI’s nonprofit board for a year and brings leadership experience from Instacart and Facebook’s core app and ad business.

→ Her role aims to scale OpenAI’s "traditional" company functions in line with its growth across global products and infrastructure.

→ The move aligns with OpenAI’s shift toward being a vertically integrated AGI and superintelligence company with nonprofit oversight.

🏗️ NVIDIA open-sourced Parakeet TDT 0.6B V2, a 600M parameter automatic speech recognition (ASR) model, which can transcribe 60 minutes of audio in just 1 second

🎯 The Brief

NVIDIA just open-sourced Parakeet TDT 0.6B V2, a 600M-parameter automatic speech recognition (ASR) model that tops the Hugging Face Open ASR Leaderboard. It is released under CC-BY-4.0, which permits commercial use.

⚙️ The Details

→ Built on a FastConformer encoder and a TDT decoder, the model handles audio chunks of up to 24 minutes with full attention and outputs text with punctuation, capitalization, and accurate word-, character-, and segment-level timestamps.

→ It achieves RTFx 3380 at batch size 128 on the Open ASR leaderboard, but performance varies with audio duration and batch size.

→ Trained for 150K steps on 128 A100 GPUs, then fine-tuned on 500 hours of high-quality human-transcribed English data. The total training data spans 120K hours, combining human-labeled and pseudo-labeled sources, including LibriSpeech, Fisher, YTC, YODAS, and more.

→ Available via NVIDIA NeMo, optimized for GPU inference, and installable via pip install -U nemo_toolkit['asr'].

→ Runs on Linux with NVIDIA Ampere, Blackwell, Hopper, and Volta GPU architectures, and requires a minimum of 2 GB of RAM.
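RTFx is the inverse real-time factor: seconds of audio transcribed per second of processing. The short Python check below converts the reported RTFx of 3380 into throughput, which lines up with the headline "60 minutes in 1 second" claim (about 56 minutes of audio per second at batch size 128). The helper names are hypothetical, and, as noted above, real throughput varies with audio duration and batch size.

```python
RTFX = 3380  # reported Open ASR Leaderboard figure at batch size 128


def processing_time_s(audio_s: float, rtfx: float = RTFX) -> float:
    """RTFx = audio duration / processing time, so processing time = duration / RTFx."""
    return audio_s / rtfx


def audio_minutes_per_second(rtfx: float = RTFX) -> float:
    """Minutes of audio transcribed per second of processing."""
    return rtfx / 60


if __name__ == "__main__":
    print(f"{audio_minutes_per_second():.1f} min of audio per second")
    print(f"1 hour of audio: {processing_time_s(3600):.2f} s")
```

So an hour of audio takes a little over one second at that operating point; smaller batches or different clip lengths will land elsewhere.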

🧠 Alibaba introduced ZeroSearch, an AI that searches on its own and improves by itself

Alibaba brings a brilliant idea in its new research for training LLMs to search and reason. 👏

ZeroSearch removes the need for expensive search engine API calls during training by using an LLM to simulate search results. The approach avoids the high API costs and unpredictable data quality associated with training AI on live commercial search engines.

A separate paper, Absolute Zero, takes this self-sufficiency idea further. No data? No problem.

Here, AI learns to reason by inventing and solving its own Python coding challenges, with no human data needed.

Current reasoning models trained with reinforcement learning with verifiable rewards (RLVR) rely on curated datasets, which creates scalability issues and limits the potential for superintelligent systems.

Absolute Zero Reasoner (AZR) is a single model that autonomously proposes code-based tasks to maximize its learnability and improves its reasoning by solving them, entirely through self-play with no external data.

📌 AZR evolves its own learning curriculum, pushing beyond human-defined boundaries.

📌 Python's verifiability provides a solid ground for AZR's self-learning loop.

📌 AZR's self-play demonstrates strong cross-domain reasoning transfer, such as training on code improving math skills.

Methods used in the AZR paper 🔧:

→ AZR uses one model for two roles: proposing tasks and solving them.

→ It grounds reasoning in Python, creating deduction, abduction, and induction tasks from program-input-output triplets.

→ The system learns by solving these self-generated tasks, receiving rewards from a code executor.

→ Reinforcement learning optimizes both task proposal for learnability and problem-solving abilities.
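The three task types and the executor-based reward can be illustrated with a toy Python sketch. Each check runs the program, which is what makes the reward verifiable. The proposer reward below is an assumed learnability heuristic (zero when a task is always or never solved, higher when it is hard but still solvable), not a formula stated in this summary. All helper names are hypothetical, and a real system would sandbox execution rather than call exec directly.

```python
from typing import Any, Callable


def load_program(source: str) -> Callable[[Any], Any]:
    """Execute proposer-written source and return its function `f` (toy only: no sandbox)."""
    namespace: dict[str, Any] = {}
    exec(source, namespace)
    return namespace["f"]


def deduction_reward(source: str, x: Any, predicted_output: Any) -> float:
    """Deduction: given program and input, the solver predicts the output."""
    return float(load_program(source)(x) == predicted_output)


def abduction_reward(source: str, output: Any, predicted_input: Any) -> float:
    """Abduction: given program and output, any input that reproduces the output is accepted."""
    return float(load_program(source)(predicted_input) == output)


def induction_reward(examples: list[tuple[Any, Any]], predicted_source: str) -> float:
    """Induction: given input-output pairs, the solver writes a program matching all of them."""
    g = load_program(predicted_source)
    return float(all(g(x) == y for x, y in examples))


def learnability_reward(solve_rate: float) -> float:
    """Assumed proposer reward: 0 for trivial or impossible tasks, else 1 - solve rate."""
    if solve_rate <= 0.0 or solve_rate >= 1.0:
        return 0.0
    return 1.0 - solve_rate


if __name__ == "__main__":
    program = "def f(x):\n    return sorted(x)[::-1]"  # a hypothetical proposed task
    print(deduction_reward(program, [3, 1, 2], [3, 2, 1]))
    print(abduction_reward(program, [2, 1], [1, 2]))
    print(induction_reward([(1, 2), (5, 10)], "def f(x):\n    return 2 * x"))
    print(learnability_reward(0.25))
```

Abduction accepts any input that maps to the target output, since many inputs can be valid; checking by execution rather than string match is what keeps that reward honest.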

🗞️ Byte-Size Briefs

v6e-1 (Trillium) TPUs have arrived in Google Colab. They offer 2x the high-bandwidth memory of v5e-1 (32 GB vs. 16 GB) and 918 BF16 TFLOPS (nearly 3x an A100)!
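A quick sanity check on the "nearly 3x" comparison, assuming the A100's dense BF16 peak of 312 TFLOPS (a figure not given above):

```latex
\frac{918\ \text{TFLOPS}}{312\ \text{TFLOPS}} \approx 2.94
```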

Artificial Analysis reported that Google's Gemini 2.5 Flash costs 150x more than Gemini 2.0 Flash to run the Artificial Analysis Intelligence Index. The increase is driven by output tokens that are 9x more expensive and 17x higher token usage across their evals.
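A rough check, assuming output tokens dominate the evaluation cost so that the price ratio and the usage ratio simply multiply:

```latex
\frac{\text{cost}_{\text{2.5 Flash}}}{\text{cost}_{\text{2.0 Flash}}}
  \approx \underbrace{9}_{\text{output price ratio}} \times \underbrace{17}_{\text{token usage ratio}}
  = 153 \approx 150
```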

NVIDIA open-sourced its OpenCodeReasoning (OCR) models (32B, 14B, 7B) under the Apache 2.0 license (commercial use is allowed). They beat o3-mini and o1 (low) on LiveCodeBench, achieve 30% higher token efficiency thanks to the OCR dataset, and are fully compatible with major inference stacks. The 32B model is based on Qwen2.5-32B-Instruct and targets code reasoning via specialized post-training.

OpenAI adds GitHub Repo integration to Deep Research in ChatGPT for code analysis and PR insights.

That’s a wrap for today, see you all next time.