📉 MIT shows 95% of companies investing in generative AI still lack visible results

MIT flags weak ROI in genAI, OpenAI eyes data-center sales and India pricing, Firefox enables local LLMs, and AWS ships architecture updates.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (20-Aug-2025):

📉 Research from MIT shows 95% of companies investing in generative AI still lack visible results. 😯

🏗️OpenAI may sell access to its own AI data centers later, aiming to offset huge compute costs.

📡 OpenAI announced the release of ChatGPT Go, a cheaper subscription specifically for India, priced at less than $5 per month and able to be paid in local currency.

🗞️ Byte-Size Briefs

OpenAI released their Agents.md Repo.

Firefox 142 now allows browser Extensions/Add-Ons to use local AI LLMs

🧑‍🎓 Deep Dive: A really practical collection of updates for planning your AWS architecture that you might have missed.

📉 Research from MIT shows 95% of companies investing in generative AI still lack visible results. 😯

The MIT study draws on 150 executive interviews, a survey of 350 employees, and 300 public deployments.

Despite $30 to $40 billion in enterprise investment in generative AI, just 5% of pilots “are extracting millions in value,” while the majority contribute no measurable impact to profits, the report found.

The pattern is simple. Generic chat tools feel magical for individuals because they flex to any prompt, but inside a company they stall because they do not learn the company’s data, rules, or handoffs.

That “learning gap” shows up as brittle workflows, noisy outputs, and no change in P&L. Budgets are also pointed the wrong way. More than half of spend goes to sales and marketing pilots, while the biggest gains show up in back-office automation.

The wins come from removing business process outsourcing, trimming external agency work, and tightening operations. That is where repeatable tasks, clean feedback loops, and clear cost baselines make impact measurable.

Build strategy matters. Buying from focused vendors and partnering lands about 67% success. Building internally hits roughly 33%. The gap is wider in regulated sectors like financial services, where in-house stacks add risk, slow approvals, and stretch thin platform teams.

Workforce effects are already visible. Companies are not backfilling some customer support and admin roles, especially ones previously outsourced. But shadow AI is everywhere, which makes measurement messy, so leaders struggle to prove productivity and profit lift with clean telemetry.

Yesterday (19-Aug-2025), this MIT study also hit Wall Street.

📊 US tech stocks fell as fresh doubts hit the AI trade. The trigger was this MIT report, claiming 95% of organizations see zero return from generative AI right now, while only 5% of integrated pilots show material gains.

This gap happens when pilots are built before data pipelines, security reviews, workflows, and training are ready. Firms spend on compute and tools first, then find the last mile to production is slower and costlier than expected.

Comments about a possible AI bubble added fuel. When many funds crowd into the same AI names, small negative surprises create fast exits. That is why losses cluster in the most popular trades.

Sam Altman also added to this in a separate comment. He admitted to believing we’re in an AI bubble.

“Are we in a phase where investors as a whole are overexcited about AI? My opinion is yes,” Altman said during an interview.

In my opinion, this pullback looks like a healthy check. The next leg should depend on proof of real unit economics, like lower inference cost per query and measured productivity gains that flow into profit and loss.

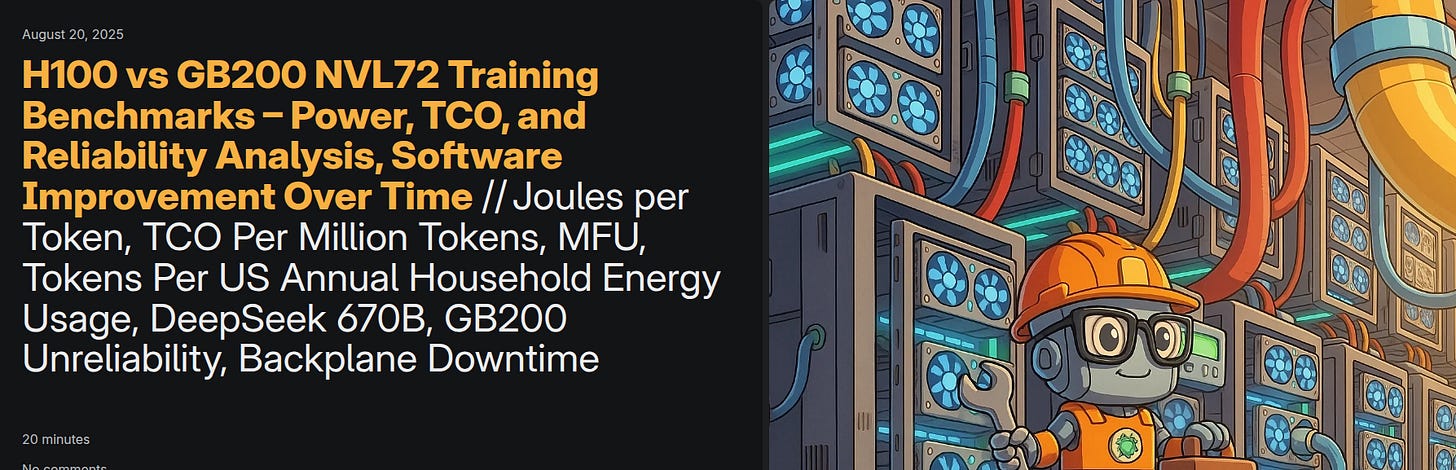

SemiAnalysis published its latest report on H100 vs GB200 NVL72 training benchmarks

GB200 NVL72 only beats H100 on value if it delivers ≥1.6x speedup while also clearing its reliability hurdles, and right now H100, H200, and Google TPUs are the only platforms consistently finishing frontier-scale training.

Procurement teams still get sold on peak flops, so this report measures model flops utilization (MFU), cost per 1M tokens, and Joules per token across 2,000+ H100s, with early GB200 NVL72 findings to ground choices.

The analysis ties real training throughput to total cost and power by reporting MFU, cost per 1M tokens, and all-in Joules per token for common model sizes, then compares those with early GB200 NVL72 numbers so buyers can weigh performance against downtime and ops reality.

💸 Total cost of ownership (TCO) snapshot

An H100 server runs about $190K, and all-in with storage and networking a hyperscaler pays $250K per server, while a GB200 NVL72 rack lists around $3.1M for the rack unit and about $3.9M all-in per rack.

Across buyer types, GB200 NVL72 capex per GPU lands at 1.6x–1.7x that of H100. Opex per GPU is only slightly higher, and that gap is mostly power, since GB200 draws 1200W per chip versus 700W for H100.

When capex and opex are folded into TCO, GB200 NVL72 sits at about 1.6x H100, so it needs ≥1.6x real speed to win on performance per TCO.
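The break-even rule above is just a ratio, and a quick sketch makes it easy to plug in your own numbers. This assumes the report's roughly 1.6x TCO figure; the function name and the sample speedups are mine, purely for illustration.

```python
def perf_per_tco_ratio(speedup: float, tco_ratio: float = 1.6) -> float:
    """GB200 NVL72 performance per TCO dollar relative to H100.

    speedup   -- measured training throughput of GB200 vs H100 (per GPU)
    tco_ratio -- GB200 TCO per GPU divided by H100 TCO per GPU (about 1.6 per the report)
    A result above 1.0 means GB200 wins on performance per TCO.
    """
    if tco_ratio <= 0:
        raise ValueError("tco_ratio must be positive")
    return speedup / tco_ratio


# Hypothetical speedups, chosen only to show both sides of the break-even point.
for speedup in (1.3, 1.6, 2.0):
    print(f"{speedup:.1f}x speedup -> {perf_per_tco_ratio(speedup):.2f}x perf per TCO")
```

Anything below 1.0 in the output means the H100 cluster is still the cheaper way to buy the same training throughput.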

⚙️ Software moved the needle

On a 128-H100 GPT-3 175B setup, BF16 MFU rose from 34% to 54% across 2024, a 57% throughput lift driven by kernel and collective upgrades.

FP8 MFU climbed from 29.5% to 39.5%, a 34% lift that came purely from software.

With a cost baseline of $1.42/hr/GPU, FP8 training cost per 1M tokens fell from $0.72 to $0.542, and the full 300B-token GPT-3 175B run dropped from $218K to $162K.
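To sanity-check these figures, here is a rough sketch of the arithmetic. It assumes the same hardware and the same $/hr/GPU before and after, so cost per token scales inversely with MFU. The function names are mine, and because the reported numbers are rounded, the results land near the report's values rather than exactly on them.

```python
def run_cost_usd(cost_per_million_tokens: float, total_tokens: float) -> float:
    """Total training cost for a run, given $ per 1M tokens and the token count."""
    return cost_per_million_tokens * total_tokens / 1_000_000


def cost_after_mfu_change(old_cost: float, old_mfu: float, new_mfu: float) -> float:
    """Estimate the new $ per 1M tokens after an MFU change.

    Assumption: identical GPUs and hourly price, so cost is proportional to 1 / MFU.
    """
    return old_cost * old_mfu / new_mfu


# FP8 numbers quoted above: $0.72 at 29.5% MFU, later 39.5% MFU, 300B-token run.
estimated = cost_after_mfu_change(0.72, 0.295, 0.395)
print(f"Estimated FP8 cost per 1M tokens: ${estimated:.3f}")
print(f"300B-token run at $0.542 per 1M: ${run_cost_usd(0.542, 300e9):,.0f}")
```

The inverse-MFU estimate is a simplification, but it shows why a pure software gain translates almost one-for-one into a lower training bill.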

🔋 Energy in plain units

Each trained token now consumes about 2.46 J on FP8 and 3.63 J on BF16, which lets a single average US household’s yearly energy budget train about 15.8B FP8 tokens.

A 300B-token GPT-3 175B run maps to roughly 19 households on FP8 and 28 households on BF16, so experiments and failed runs, not one clean pass, drive the big grid impact.
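The household comparison can be reproduced with a few lines. In this sketch I back out the implied yearly household energy budget from the FP8 figures above (2.46 J per token and about 15.8B tokens per household-year) instead of assuming an external number, so treat that constant as derived, not official.

```python
FP8_J_PER_TOKEN = 2.46
BF16_J_PER_TOKEN = 3.63
FP8_TOKENS_PER_HOUSEHOLD_YEAR = 15.8e9  # from the report's FP8 comparison

# Implied yearly household energy budget in Joules (derived, roughly 10,800 kWh).
HOUSEHOLD_JOULES_PER_YEAR = FP8_J_PER_TOKEN * FP8_TOKENS_PER_HOUSEHOLD_YEAR


def households_for_run(total_tokens: float, joules_per_token: float) -> float:
    """Number of household-years of energy consumed by one clean training pass."""
    return total_tokens * joules_per_token / HOUSEHOLD_JOULES_PER_YEAR


gpt3_tokens = 300e9
print(f"Implied household budget: {HOUSEHOLD_JOULES_PER_YEAR / 3.6e6:,.0f} kWh per year")
print(f"FP8 run:  {households_for_run(gpt3_tokens, FP8_J_PER_TOKEN):.1f} households")
print(f"BF16 run: {households_for_run(gpt3_tokens, BF16_J_PER_TOKEN):.1f} households")
```

Multiply the result by the number of experiments and restarts in a real project to get closer to the actual footprint.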

📈 Weak scaling, Llama3 405B

Scaling from 576 to 2,304 H100s keeps FP8 near 43% MFU and BF16 near 54% MFU, which matches real runs that report about 41% BF16 MFU for pretraining at large scale.

Mid-training context extension pushes sequence length to 131,072, needs context parallel across 16 nodes, and MFU slips to about 38% due to ring attention communication.

BF16 pretraining cost comes out to $1.95 per 1M tokens on 2,304 H100s, so 15T tokens costs about $29.1M, and energy per token is about 8.8 J versus GPT-3 175B at 3.6 J.

That energy means one household’s yearly budget trains about 4.4B tokens, so 15T tokens consumes roughly 3,400 households for the pretraining phase alone.

🧪 Llama3 70B scaling quirks

FP8 model flops utilization (MFU) slides from 38.1% at 64 H100s to 35.5% at 2,048 H100s, while BF16 barely moves, 54.5% to 53.7%, since only the number of replicas changes.

Llama3 405B is 5.7x larger than 70B, and at around 2K H100s the BF16 cost per 1M tokens is 5.4x higher for 405B, which lines up with dense-model flops scaling.

FP8 energy per token grows about 10% when moving from 64 to 2,048 H100s, so 15T tokens equates to 440 households at small scale versus 472 at large scale.

🔩 Tensor Parallelism choices matter

GPT-3 175B runs better with a tensor parallelism (TP) degree of 4 rather than 8 on H100 because the shard sizes are larger, so kernels do more math per byte moved, which raises arithmetic intensity and reduces communication bubbles.
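To make the "more math per byte" point concrete, here is a minimal sketch of arithmetic intensity for one sharded matrix multiply. It uses GPT-3 175B's hidden size of 12288 with a 4x feed-forward width, and assumes a Megatron-style split of the MLP up-projection along its output dimension, BF16 operands, and 2,048 tokens per micro-batch. The micro-batch size and the sharding layout are my assumptions, not numbers from the report, and the sketch ignores communication entirely.

```python
def gemm_arithmetic_intensity(m: int, k: int, n: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for C[m, n] = A[m, k] @ B[k, n], reading A and B and writing C once."""
    flops = 2 * m * k * n
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


HIDDEN = 12288      # GPT-3 175B model dimension
FFN = 4 * HIDDEN    # feed-forward width
TOKENS = 2048       # hypothetical tokens per micro-batch


def mlp_up_proj_intensity(tp: int) -> float:
    """Arithmetic intensity of the MLP up-projection shard held by one GPU."""
    return gemm_arithmetic_intensity(TOKENS, HIDDEN, FFN // tp)


for tp in (4, 8):
    print(f"TP {tp}: {mlp_up_proj_intensity(tp):.0f} FLOPs per byte")
```

Under these assumptions the TP 4 shard does more work per byte than the TP 8 shard, which is the direction the report describes. The real gap also depends on all-reduce traffic, which this toy model leaves out.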

🛠️ GB200 reliability problems

Large-scale GB200 NVL72 training has not finished frontier runs yet because the NVLink copper backplane still throws faults after burn-in, and the diagnostic and debugging tools lag what operators need for quick recovery.

Because downtime and lost engineer hours hit the denominator of performance per TCO, early GB200 NVL72 advantages get eaten unless the reliability story improves.
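One way to reason about this is to fold uptime into the break-even speedup. The sketch below assumes "goodput" means the fraction of paid cluster time spent on useful training, and that the TCO ratio is the report's roughly 1.6x. The goodput values are hypothetical placeholders, not measurements.

```python
def required_raw_speedup(tco_ratio: float, h100_goodput: float, gb200_goodput: float) -> float:
    """Raw GB200 speedup needed to match H100 on useful work per TCO dollar.

    Goodput is the fraction of paid time spent doing useful training (0 to 1).
    Lower GB200 goodput pushes the required raw speedup above the TCO ratio.
    """
    if not (0 < h100_goodput <= 1 and 0 < gb200_goodput <= 1):
        raise ValueError("goodput values must be in (0, 1]")
    return tco_ratio * h100_goodput / gb200_goodput


# Hypothetical: H100 cluster useful 95% of the time, GB200 NVL72 only 80%.
print(f"Needed raw speedup: {required_raw_speedup(1.6, 0.95, 0.80):.2f}x")
```

With equal goodput the answer collapses back to 1.6x, so every point of uptime GB200 NVL72 loses relative to H100 raises the bar it has to clear.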

🧪 Benchmarking asks for more

Nvidia’s DGX Cloud benchmarking scripts on the EOS cluster provide reference numbers, and clouds that match them can earn the Exemplar Cloud badge, which makes pricing and SLAs far less ambiguous.

The community asks Nvidia to publish more cross-provider results, expand beyond NeMo-Megatron to native PyTorch with FSDP2 and DTensor, put muscle behind TorchTitan, and speed up GB200 NVL72 diagnostics.

📚 What to take away

The practical rule from all this is simple: compare clusters by MFU, $/1M tokens, and J/token, and for GB200 NVL72 demand ≥1.6x real throughput plus better uptime before expecting a cost-efficiency win.

🏗️OpenAI may sell access to its own AI data centers later, aiming to offset huge compute costs.

Sam Altman earlier flagged spending of $1T+ in the not very distant future, and a new financing instrument is being designed. Microsoft and Oracle funded a lot before, but banks and private equity are now offering debt, with other structures being explored.

In my opinion, if they also sell capacity, OpenAI becomes a market maker for compute, which could lower margins across the cloud-compute industry while improving cost per token and reducing slow requests for its own products.

Here is what I think the possible impact on the overall GPU market could be.

By locking in giant GPU fleets then publishing clear prices and terms for slices of those fleets, OpenAI could set a reference price and spread for GPU hours, which is exactly what a market maker does in a thin market.

Because it will then control the hardware plan and the scheduling software, it removes a layer of margin stacking that exists when passing through a hyperscaler, so its all-in cost base is lower and it can post tighter prices.

A purpose built LLM cluster can run with higher load factor and fewer idle gaps than mixed cloud fleets, so the cost per GPU hour drops and the posted price can drop with it, forcing others to compress gross margin.

If it offers spot and forward contracts for compute, buyers can hedge or prebook capacity, and those forwards become a public price signal that narrows the bid-ask gap across the market.

As both a massive buyer and a seller, OpenAI can arbitrage idle capacity across time zones and workloads, and the clearing price from that arbitrage becomes the new benchmark others must match.

OpenAI’s strategic goal is lower cost per token, not maximizing resale profit, so it can live with a thin spread on compute sales that hyperscalers will struggle to match without cutting price.

Extra supply reduces the scarcity premium on top tier GPUs and high bandwidth fabric, and when that premium fades, headline cloud margins follow.

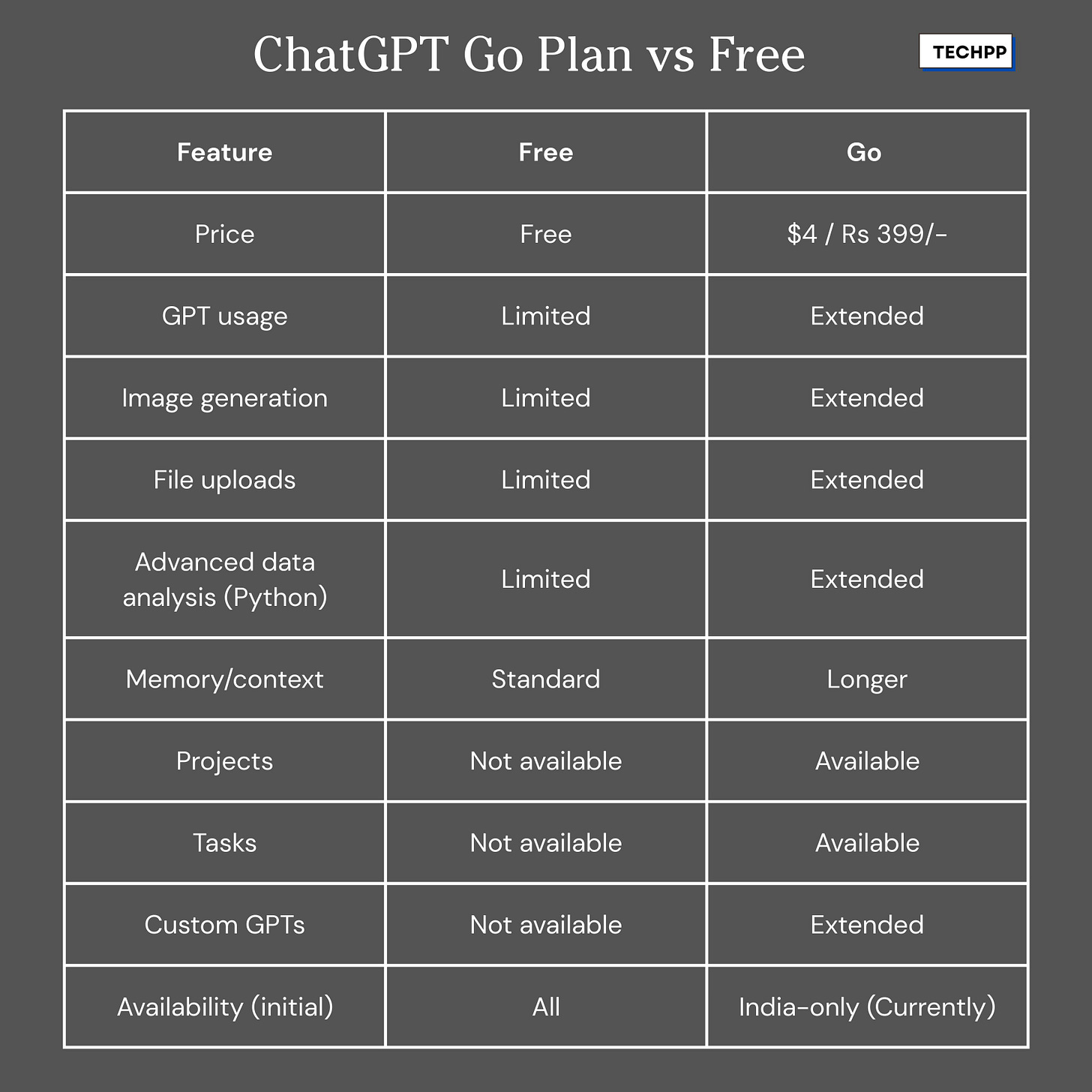

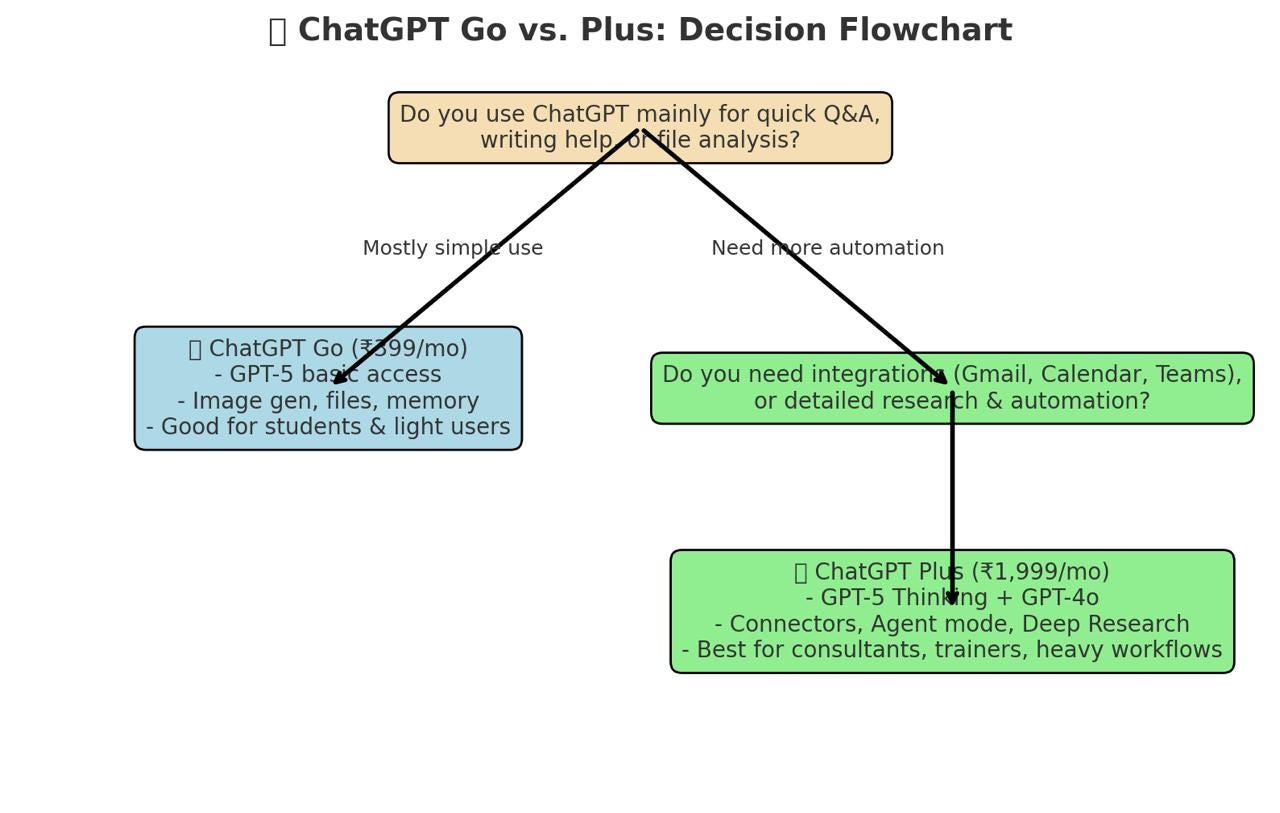

📡 OpenAI announced the release of ChatGPT Go, a cheaper subscription specifically for India, priced at less than $5 per month and able to be paid in local currency.

Price ₹399/month ($4.57/month).

More GPT-5 messages than Free.

More image generation, more file uploads and analysis of docs, sheets, and other files.

More advanced data analysis usage with Python tools.

Longer memory with a larger context window.

Access to projects, tasks, and custom GPTs.

Legacy models like 4o not included.

Sora access not included.

Connectors not included.

Pay with credit cards or UPI.

Switch from Plus or Pro at cycle end, no partial refund, Go starts next cycle.

Manage plan and invoices in Settings, Subscription.

If Go is not visible, confirm India is selected on pricing page and wait for rollout.

🗞️ Byte-Size Briefs

OpenAI released their Agents.md Repo.

It's a simple, open format for guiding coding agents.

A dedicated, predictable place to provide context and instructions to help AI coding agents work on your project. Created with AI agents in mind, so they can parse your codebase.

The same file is compatible with Cursor, Claude, OpenAI Codex, Google Jules, and Factory AI.

🦊 Firefox 142 now allows browser extensions/add-ons to run LLMs locally, for fully on-device inference. Firefox 142.0 lets extensions call wllama, which exposes WebAssembly bindings for llama.cpp.

That means an extension can load a small model file, run text generation locally, and keep data on the machine. No network round-trip, lower latency, and tighter privacy, at the cost of CPU and memory use. Expect tools like page summarizers, offline chat, and smarter autofill to work without sending text out.

Developers also get the Prioritized Task Scheduling API. Code can label work as user-blocking, user-visible, or background, so the browser runs the urgent stuff first and keeps the UI responsive.

Under the hood, the wllama backend runs in a worker with WASM SIMD, can switch to multi-threading when cross-origin isolation headers are present, and exposes low-level knobs like tokenization, sampling, and KV-cache control. Single files hit a 2GB ArrayBuffer limit, so models use GGUF and can be split into parallel-loaded chunks.

🧑‍🎓 Deep Dive: A practical collection of updates for planning your AWS architecture that you might have missed.

EC2: You can change security groups and IAM roles on running instances. You can modify, attach, and detach EBS volumes without stopping the instance. You can force stop or terminate instances without waiting for a clean shutdown. AWS performs live migrations during host maintenance, which reduces degradation events. Spot pricing is steadier and no longer a bidding exercise. AMI Block Public Access exists to keep AMIs private by default.

S3: You no longer need randomized key prefixes to avoid hotspots. ACLs are disabled by default with Object Ownership. New objects are encrypted at rest by default. Glacier is part of S3 storage classes with faster, clearer restore options.

Lambda: Timeout is up to 15 minutes. You can package functions as container images, mount EFS, allocate up to 10GB of memory, and set /tmp to up to 10GB. VPC networking for Lambda is much faster than the original model. Cold starts are better understood and easier to mitigate.

EFS: Throughput can be decoupled from capacity using Elastic Throughput or Provisioned Throughput, so you don’t pad volumes to raise IO.

EBS: Volumes created from snapshots lazy load blocks from S3, so first reads are slow unless you prewarm or enable Fast Snapshot Restore. Multi-Attach lets certain io1 volumes attach to multiple instances, which is intended for clustered filesystems.

DynamoDB: Empty string and empty binary values are allowed for non-key attributes. Contributor Insights and standard metrics make hot key patterns visible. On-demand capacity mode is a safe default for many workloads.

Cost and optimization: EC2 and EBS bill per second, so a 5-minute test is billed for 300 seconds. Savings Plans are now the preferred commitment model over EC2 Reserved Instances. Cost Anomaly Detection flags spend spikes. Compute Optimizer also covers EBS, and some Trusted Advisor cost checks integrate with it.
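A quick sketch of what per-second billing means for short tests. The hourly rate here is a made-up placeholder, and the 60-second minimum charge is my understanding of how EC2 per-second billing works for most instance types, so check the current pricing page before relying on it.

```python
def billed_cost_usd(runtime_seconds: float, hourly_rate_usd: float,
                    minimum_seconds: int = 60) -> float:
    """Cost of one instance run under per-second billing.

    minimum_seconds is an assumed minimum charge per run (60 s as far as I know).
    """
    billed_seconds = max(runtime_seconds, minimum_seconds)
    return billed_seconds * hourly_rate_usd / 3600


HOURLY_RATE = 0.10  # hypothetical on-demand price in USD per hour

five_minute_test = billed_cost_usd(5 * 60, HOURLY_RATE)
print(f"5-minute test, per-second billing: ${five_minute_test:.4f}")
print(f"Same test if billed a full hour:   ${HOURLY_RATE:.4f}")
```

For short-lived test instances, the difference between paying for 300 seconds and paying for a full hour adds up quickly across a CI fleet.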

Authentication: Use IAM roles with IAM Identity Center for human access rather than long lived IAM users. The root user can register multiple MFA devices.

Networking: Public IPv4 addresses are no longer free; they cost the same as Elastic IP addresses.

Miscellaneous: You can close member accounts from the management account’s root instead of logging in to each account. Other changes include improved us-east-1 reliability, more frequent deprecations for niche services, CloudWatch graphs that no longer show an artificially low last datapoint, and a few other quality-of-life improvements.

That’s a wrap for today, see you all tomorrow.