🧠 MIT’s report on 95% AI failures is taken wrong

MIT’s misunderstood AI ROI stat, Jetson Thor’s 7.5x jump, Karpathy’s AI coding workflow, and DeepMind’s GPU thinking framework—broken down.

Read time: 9 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (25-Aug-2025):

🧠 Many are miss-reading the famous MIT report, about the 95% fail rate of AI

🛠️ NVIDIA Launches Jetson Thor with 7.5x AI Compute Increase

🗞️ Andrej Karpathy explained in a Tweet, how he uses several AI coding tools together

🧑🎓 Deep Dive: A team at DeepMind wrote this solid piece on how you must think about GPUs.

🧠 Many are miss-reading the famous MIT report, about the 95% fail rate of AI

This is that original MIT report that said 95% of AI pilots fail and which spooked investors across US Stockmarket. But let me explain why the report is not as bad as it looks, it exposes where the value hides and why leadership missed it, it does not say AI is a bust.

The reports says, most companies are stuck, because 95% of GenAI pilots produce zero ROI, while a small 5% win by using systems that learn, plug into real workflows, and improve with use.

BUT here’s the key mis-understanding, The “95% fail” in AI-result number measures only the brittle, custom enterprise pilots only, not AI in general. To the contrary, everyday workers keep getting real value from personal AI tools.

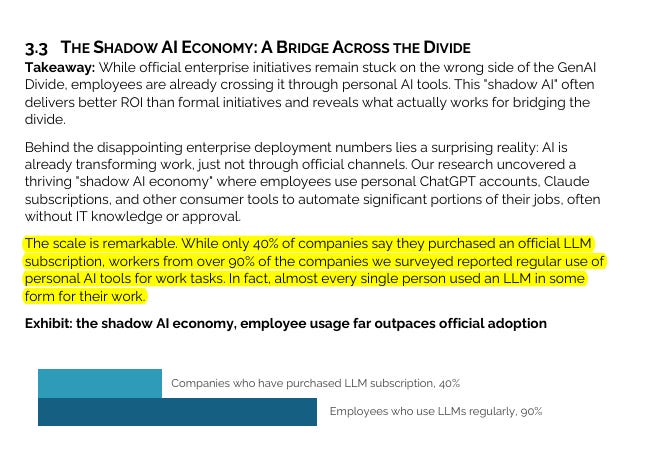

Employees at 90% of firms use personal AI tools for work, while only 40% of companies have official subscriptions, so most usage never shows up in the budget line that executives track.

Shadow AI is all the work people do with personal AI tools that never shows up in company budgets or dashboards.

In other words, the report counted slow, brittle projects inside procurement pipelines, and it missed the unofficial work that actually gets done. And , these bespoke corporate AI-pilots fails because they do not remember context, do not adapt to feedback, and require heavy setup every time, so users quietly avoid them.

People then grab flexible consumer tools that feel responsive, finish the task, and move on, which boosts productivity but never gets recorded as “AI ROI” on a program tracker. This is the “shadow economy” logic, value is created off the books, so dashboards show failure while work output shows gains.

Here is the proof in numbers, back-office automation tied to AI is already cutting external costs by $2-10M per year and reducing agency spend by 30%, which is real money that often lands in vendor and BPO lines, not in an “AI program” line.

Because those savings show up as fewer outsourced tickets or fewer creative invoices, executives who only track revenue lift or headcount might miss that impact.

There is another signal, companies that partner with external vendors get to deployment roughly 2x as often as those that try to build tools internally, which again means the value often lives in purchased services rather than an internal “AI project”.

So overall, the MIT headline focused on the weakest path, custom builds inside rigid workflows, and ignored the stronger path, bottom-up usage plus targeted buys that integrate into real processes. Markets reacted to the headline and sold AI names, but that reaction confused poor rollout strategy with poor technology fundamentals.

A cleaner read is this, official pilots struggle, yet unofficial adoption is high and already paying, which means the report is a map of misaligned execution, not proof that AI demand is collapsing.

Also in a separate research retort, GenAI has actually already delivered an ~$97 billion in consumer surplus by enhancing productivity and quality of life for millions of users.

But, it doesn’t appear in GDP because most of the benefit accrues to users rather than the companies.

Consumer surplus is the minimum cash someone would need to walk away from a tool.

In other words, Consumer surplus is the extra value people get when something feels worth more to them than what they actually pay for it. It is the hidden benefit or satisfaction you enjoy beyond the price on the receipt.

And this report says, Generative AI’s $97 billion in consumer surplus dwarfs the roughly $7 billion in U.S. revenue recorded by OpenAI, Microsoft, Anthropic and Google from their generative AI offerings last year.

GDP tracks the $7B because those dollars changed hands. The $97B is the “consumer surplus” that users say they would need to be paid to give up free and freemium versions for one month, multiplied out for a year. That surplus is value enjoyed by households, not companies, so it never shows up in standard GDP tables.

The paper tracks the consumer surplus people get from free generative-AI tools, not the revenue those tools earn. Economists run large online choice experiments. They show each participant a short scenario such as “You can keep using ChatGPT next month or accept a cash payment and lose access.”

By tweaking that cash offer up and down, they work out the smallest payment at which someone says, “Fine, take it away.”

That cash amount is the person’s private price for one month of access.

Averaging across 82 million U.S. adults who said they use generative-AI tools regularly, the price came out to $98. Multiply $98 by 82 million by 12 months and you get roughly $97 billion of annual surplus, far above the $7 billion those firms booked as sales.

The team folds this figure into a broader metric they call GDP-B, which tries to bolt the hidden benefits of free digital goods onto ordinary GDP.

🛠️ NVIDIA Launches Jetson Thor with 7.5x AI Compute Increase

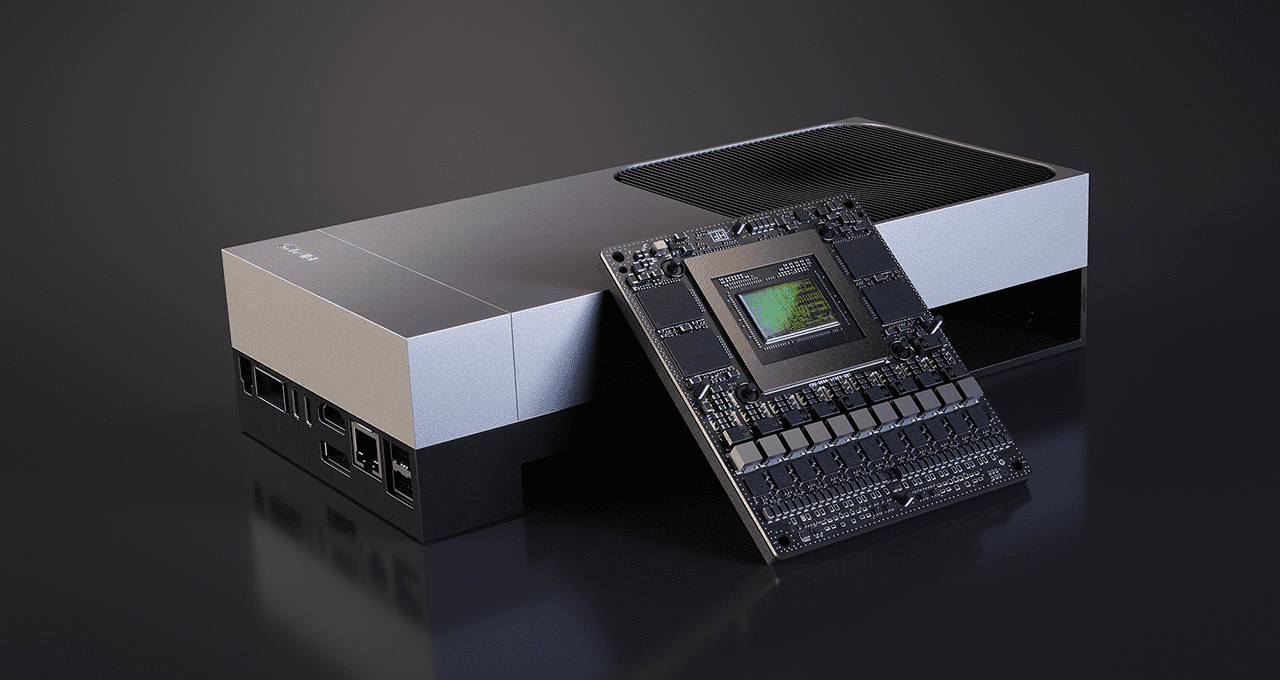

NVIDIA announced the general availability of the Jetson AGX Thor developer kit and production modules.

This will fundamentally change garage robotic builders around the world! A self contained robot platform by Nvidia. It is like the “brain” you could build into a robot, so the robot can understand and act in real time.

i.e. now, a single compact box becomes the robot’s runtime computer, handling high-speed sensor processing, the latest gen-AI models, and standard robotics middleware entirely on the edge.

Runs on Blackwell, giving a 7.5x in AI compute with 2070 FP4 teraflops.

3.5x boost in energy efficiency. 128GB of memory,

Gives real-time reasoning inference

So Jetson Thor brings server-grade AI to robots so they can think and act in real time locally.

Amazon, and Figure are already on board as early adopters.

Price tag lands at $3,499.

Robots need to process camera, lidar, audio and proprioception together with tiny delay, clouds add jitter and cost.

Jetson Thor combines a Blackwell GPU, faster CPUs and bigger memory so large transformers, vision language models and vision language action models run on device.

FP4 stores weights and activations in 4-bit floating numbers, cutting memory traffic and keeping more of the model close to compute.

Speculative decoding uses a small draft model to guess upcoming tokens, the main model checks them, which cuts time to first token and per token delay.

The stack includes Isaac for robotics, Metropolis for video agents and Holoscan for sensor streaming, so multi-sensor fusion lands in GPU memory with low latency.

Agility plans Thor for the next Digit, Boston Dynamics is integrating it into Atlas, and university labs use it to speed perception, planning and fleet tests.

This makes real-time reasoning and control practical at the edge, giving more responsive humanoids, safer factories and fewer trips to remote servers.

Robotics will be Nvidia’s next big cash machine.

🗞️ Andrej Karpathy explained in a Tweet, how he uses several AI coding tools together

Basically each of his tool is for a different job, instead of searching for 1 perfect tool, its a layered workflow.

Start from basics. Code is just precise instructions. Modern AI predicts likely next text, including code. So he first types a bit himself in the right place, then uses Cursor’s tab complete to fill the next lines. This is fast because his partial code acts as the instruction. He says this is ~75% of his use, and he toggles it on or off when it gets in the way.

When a small change is needed, he selects a specific block and asks the AI to modify only that block. This keeps the task concrete and local.

For bigger features, he opens a side tool like Claude Code. These can produce lots of code quickly, but they often lack “taste”, for example too many try or catch statements, overcomplicated structure, or repeated code. He often stops the tool early to avoid drift, then cleans the style himself.

He calls this a code post-scarcity era, meaning code is cheap to generate. If needed, an assistant can write 1,000 lines of throwaway diagnostics to find a bug, then he deletes that code after the bug is found.

If everything is stuck, he escalates to GPT-5 Pro for deep analysis. It has found subtle bugs for him and can pull in niche references.

🧑🎓 Deep Dive: A team at DeepMind wrote this solid piece on how you must think about GPUs.

Data parallel needs 2500 tokens per GPU on H100 to stay compute bound.

A GPU is many SMs, streaming multiprocessors, with Tensor Cores for matrix multiplies, caches, and high bandwidth memory HBM.

There’s a difference between communication inside a GPU node and communication outside it. Inside a node, the GPUs talk to each other through NVLink and NVSwitch. On the H100, each GPU gets about 450GB/s of bandwidth here. This is very fast and allows GPUs in the same node to share data almost seamlessly.

Outside the node, things slow down. The connection between nodes happens over InfiniBand, and the bandwidth there is only 400GB/s per node. So even though inside one node GPUs can exchange data quickly, once you need to move data across nodes, you hit a tighter limit.

This gap matters a lot for training large models. If your setup fits in a single node, communication is cheap and fast. But if the model is spread across many nodes, you have to be careful, because moving data between them can become a bottleneck.

GB200 NVL72 groups 72 GPUs into one NVLink domain and lifts node egress to 3.6TB/s, so many collectives get cheaper.

Tensor parallel works well inside a single node, usually up to 8 GPUs, because NVLink gives enough bandwidth for the constant exchange of activations. Beyond that, communication costs rise too much to keep scaling smoothly.

Expert parallel is a bit more forgiving. You can stretch it across 1 or 2 nodes, and if the feed forward layer is very wide, you can push even further. This is why Mixture of Experts models often rely heavily on this strategy.

Pipeline parallel is efficient in terms of data movement, since you’re just passing activations between stages. But the challenge here isn’t bandwidth, it’s scheduling. You need careful handling of microbatches to avoid idle bubbles, and methods like ZeRO-3 don’t mix well with it because they require repeated weight gathering.

For dense models, the best fits are Fully Sharded Data Parallel (FSDP), which shards model states across GPUs, or tensor parallel, which splits the heavy linear layers. These match the communication patterns to what the hardware can handle most effectively.

That’s a wrap for today, see you all tomorrow.