MixEval-X: Any-to-Any Evaluations from Real-World Data Mixtures

MixEval-X builds real-world multi-modal AI benchmarks by mixing existing tests with actual web queries

MixEval-X builds real-world multi-modal AI benchmarks by mixing existing tests with actual web queries

Tackles AI testing bias by mapping real user tasks to standardized evaluation metrics

Original Problem 🔍:

AI evaluations lack consistency across communities and suffer from query, grading, and generalization biases, hindering accurate model assessment and development.

Solution in this Paper 🛠️:

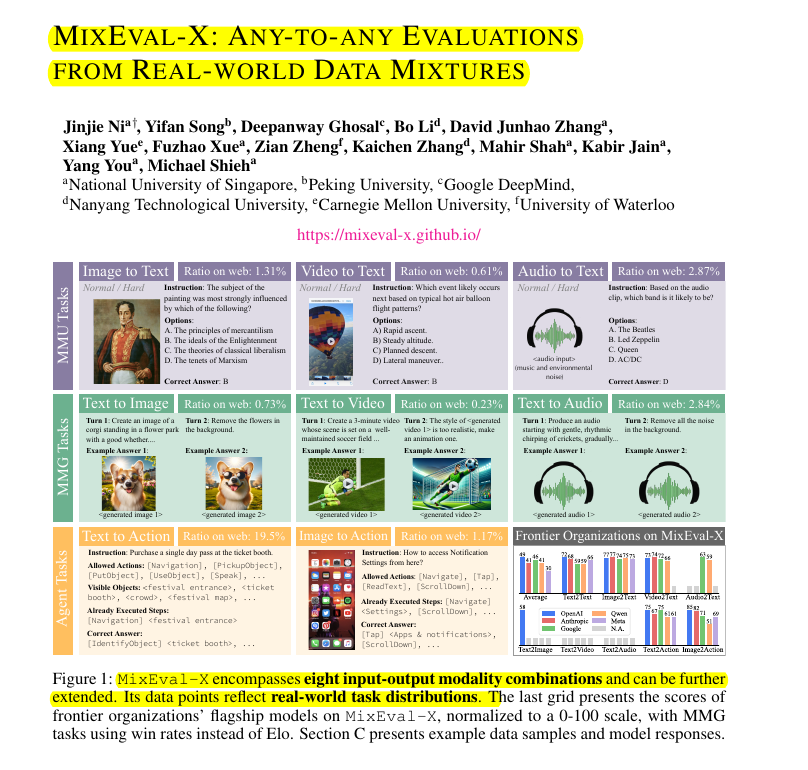

• MixEval-X: First any-to-any real-world benchmark for multi-modal evaluations

• Uses multi-modal benchmark mixture for MMU tasks

• Implements adaptation-rectification pipeline for MMG and agent tasks

• Covers 8 input-output modality combinations

• Aligns benchmark samples with real-world task distributions

• Enables periodic data refreshes to mitigate contamination

Key Insights from this Paper 💡:

• Unified evaluation standards across modalities improve AI assessment

• Real-world task distribution alignment enhances evaluation generalizability

• Dynamic benchmarking mitigates model contamination

• Efficient creation pipelines enable cost-effective, reproducible evaluations

Results 📊:

• Strong correlation with real-world user-facing evaluations (up to 0.98)

• Significantly more efficient than existing benchmarks

• Comprehensive leaderboards for reranking models and organizations

• Reveals performance gaps across different AI communities