ML Case-study Interview Question: Detecting Defective Products with Generative AI, Vision, and Multimodal Models.

Case-Study question

A large e-commerce organization faces a challenge in detecting and removing defective products that occasionally reach customers. They have millions of products moving through multiple fulfillment centers daily. They introduced an initiative (called “Project P.I.”) that uses a blend of generative AI, computer vision, and multimodal large language models to identify and isolate damaged or mislabeled items. The system relies on:

Imaging tunnels to capture product images at various stages.

An optical character recognition system to identify wrong or expired labeling.

A computer vision pipeline to detect superficial defects (torn covers, bent boxes, broken seals).

A multimodal large language model that analyzes image data and textual feedback to trigger corrective measures.

Continuous improvement feedback loops where customer returns data refines the defect detection models.

You are a Senior Data Scientist candidate. Propose how you would design and implement the solution pipeline. Address how you would incorporate machine learning, generative AI, and traditional computer vision modules. Clarify how you plan to handle edge cases, evaluate performance, scale the solution across multiple fulfillment centers, and communicate feedback to sellers. Include your approach for training the models using historical defective-product data, how you would automate real-time interception of bad items, and how you would measure success.

Detailed solution approach

Computer vision and generative AI work together to reduce the chances of defective products ever reaching customers. High-resolution image tunnels capture product images. OCR identifies expired or mismatched text. Advanced image classifiers spot visual anomalies. Multimodal large language models connect written feedback with images to confirm root causes.

Data ingestion and cleaning

Large volumes of images flow from each fulfillment center. Each image is tagged with an item identifier. Merging these images with ground-truth defect labels builds a training set. Automating data cleaning is crucial to filter out corrupted or incomplete records.
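The join-and-filter step described above can be sketched in a few lines. This is a minimal illustration, not the production pipeline: the `ImageRecord` type, the `build_training_set` helper, and the rule that an empty payload counts as corrupted are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ImageRecord:
    # Hypothetical record shape: one image plus the item identifier it was tagged with.
    item_id: Optional[str]
    image_bytes: bytes


def build_training_set(records, defect_labels):
    """Join image records to labels by item_id, dropping unusable rows.

    defect_labels maps item_id -> 1 (defective) or 0 (acceptable).
    Returns the joined rows and the number of records that were dropped.
    """
    training_set, dropped = [], 0
    for rec in records:
        # Drop records with no identifier, an empty payload, or no ground-truth label.
        if not rec.item_id or not rec.image_bytes or rec.item_id not in defect_labels:
            dropped += 1
            continue
        training_set.append((rec.item_id, rec.image_bytes, defect_labels[rec.item_id]))
    return training_set, dropped


records = [
    ImageRecord("A1", b"fake-image-bytes"),
    ImageRecord(None, b"fake-image-bytes"),  # missing identifier
    ImageRecord("A2", b""),                  # empty (corrupted) image
    ImageRecord("A3", b"fake-image-bytes"),  # no ground-truth label available
]
labels = {"A1": 1, "A2": 0}
training_set, dropped = build_training_set(records, labels)
print(len(training_set), dropped)
```

Tracking the dropped count alongside the training set makes it easier to notice when one site starts producing an unusual share of unusable records.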

Model training pipeline

Supervised learning classifies items as “acceptable” or “defective”. Generative AI and self-supervised techniques bootstrap initial models with minimal labeled examples. Transformer-based architectures help incorporate textual feedback (for instance, a note indicating a wrong product size). The system refines itself with real defect data.

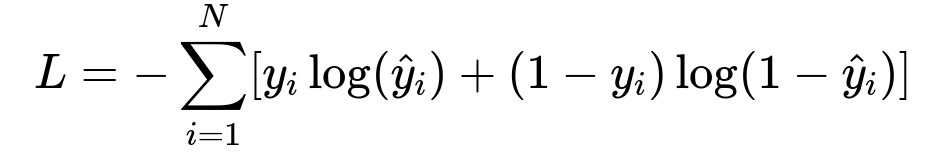

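Core classification formula

The classifier is trained with the binary cross-entropy loss:

$$
\mathcal{L} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \right]
$$

Here, $N$ represents the total number of training samples, $y_i$ is the ground-truth label (1 for defective, 0 for normal), and $\hat{y}_i$ is the model’s predicted probability that sample $i$ is defective. The goal is to minimize this loss, driving the classifier to align predictions with actual outcomes.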
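To make the loss concrete, the following sketch evaluates it on four toy samples. It assumes the loss in question is the standard binary cross-entropy, which is consistent with the `binary_cross_entropy_with_logits` call used in the Python snippet later in this write-up. The helper name and the sample values are illustrative only.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy over N samples.

    y_true holds 0/1 labels, y_pred holds predicted probabilities of "defective".
    """
    total = 0.0
    for y, p in zip(y_true, y_pred):
        # Clamp probabilities away from 0 and 1 so log() stays finite.
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


y_true = [1, 0, 1, 0]
confident = [0.9, 0.1, 0.8, 0.2]   # mostly right and fairly sure
uncertain = [0.5, 0.5, 0.5, 0.5]   # no information at all

loss_confident = binary_cross_entropy(y_true, confident)
loss_uncertain = binary_cross_entropy(y_true, uncertain)
print(round(loss_confident, 4), round(loss_uncertain, 4))
```

The uninformative predictor lands at log 2, and the loss falls as predictions move toward the correct labels, which is the behavior the training objective relies on.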

Fine-grained defect detection

Simple classification rarely suffices. Object detection or segmentation can localize tears, dents, or missing seals. For mislabeling (for example, a twin-size product labeled king-size), OCR text is compared to known correct text.
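The mislabeling check can be illustrated with a small comparison between OCR output and the catalog entry. This is a sketch under stated assumptions: the `SIZE_TERMS` vocabulary, the helper names, and the idea of comparing only a size attribute are hypothetical simplifications, and a real system would compare more fields.

```python
import re

# Illustrative vocabulary only; a real catalog would define its own attribute values.
SIZE_TERMS = ("twin", "full", "queen", "king")


def normalize(text):
    """Lowercase the text and collapse punctuation into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def find_size(text):
    """Return the first known size term found as a whole token, else None."""
    tokens = normalize(text).split()
    for term in SIZE_TERMS:
        if term in tokens:
            return term
    return None


def is_mislabeled(ocr_text, catalog_text):
    """Flag only when both sizes are readable and they disagree."""
    ocr_size = find_size(ocr_text)
    catalog_size = find_size(catalog_text)
    return ocr_size is not None and catalog_size is not None and ocr_size != catalog_size


print(is_mislabeled("KING-SIZE Sheet Set", "Sheet Set, Twin Size"))
print(is_mislabeled("Twin size sheet set", "Sheet Set, Twin Size"))
```

Returning False when either side is unreadable is a deliberate choice here: an unreadable label is a different problem from a wrong one and is better handled by a separate escalation path.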

Generative AI for root-cause analysis

A vision-language model inspects the item’s images and identifies suspicious traits, then a language model produces a succinct explanation. The system consults textual records of customer complaints, cross-references them against the images, and confirms whether each complaint was accurate.

Real-time interception

Items pass through an imaging station. A local inference system screens them. When a product is flagged as defective, an immediate action removes it from the outbound workflow. This process is asynchronous from the main pipeline but connected to a centralized orchestration system for subsequent root-cause analysis.
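The routing decision at the imaging station might look like the sketch below. The three routes, the function name, and both threshold values are assumptions chosen for illustration; actual cut-offs would be tuned on validation data for each site.

```python
from enum import Enum


class Route(Enum):
    SHIP = "ship"        # continue in the outbound workflow
    RESCAN = "rescan"    # uncertain: send through a secondary scan
    DIVERT = "divert"    # remove from the outbound workflow


def route_item(defect_probability, divert_threshold=0.9, rescan_threshold=0.5):
    """Map a classifier's defect probability to a conveyor action.

    Both thresholds are hypothetical defaults, not recommended values.
    """
    if not 0.0 <= defect_probability <= 1.0:
        raise ValueError("defect_probability must be in [0, 1]")
    if defect_probability >= divert_threshold:
        return Route.DIVERT
    if defect_probability >= rescan_threshold:
        return Route.RESCAN
    return Route.SHIP


for p in (0.95, 0.6, 0.1):
    print(p, route_item(p).value)
```

Keeping the decision a pure function of the score makes it cheap to run on a local inference box and easy to audit when a threshold changes.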

Example Python snippet

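    import torch
    import torchvision
    import torch.nn.functional as F

    # Simple forward pass in a binary classification model
    def forward_pass(model, images, labels):
        # The model is assumed to emit one raw logit per image
        outputs = model(images)
        # binary_cross_entropy_with_logits applies the sigmoid internally and
        # expects float targets with the same shape as the logits
        targets = labels.float().view_as(outputs)
        loss = F.binary_cross_entropy_with_logits(outputs, targets)
        return loss

    # Zero-shot approach with a pretrained generative model
    # for a quick textual check on the defect
    def check_defect_with_generative_model(text_prompt, image, fuse_modalities, generative_model):
        # Pseudocode for a multimodal generative model: fuse_modalities and
        # generative_model are placeholder callables supplied by the caller
        combined_input = fuse_modalities(text_prompt, image)
        result = generative_model(combined_input)
        return result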

Scaling considerations

Fulfillment centers vary in lighting, camera setups, and conveyor speeds. Model calibration is needed for each site. A distributed inference architecture handles large throughput. Continuous retraining is done with newly observed defects and feedback loops.

Metrics for success

Fewer damaged or mislabeled items reaching customers indicates success. Monitoring the defect interception rate over total items processed, along with precision and recall of defect detection, provides a clear KPI. Reduced return rates indicate improved customer experience.
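These KPIs can be computed directly from audited outcomes. The sketch below assumes each item has a binary prediction (flagged or not) and a binary ground-truth label from later inspection, and it interprets "interception rate" as true defects caught per item processed. That interpretation and the function name are assumptions.

```python
def detection_metrics(predicted, actual):
    """Compute precision, recall, and interception rate from 0/1 lists.

    predicted[i] is 1 if the item was flagged; actual[i] is 1 if it was truly defective.
    """
    tp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    fp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 0)
    fn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 1)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Assumed definition: true defects intercepted per item processed.
    interception_rate = tp / len(predicted) if predicted else 0.0
    return {"precision": precision, "recall": recall, "interception_rate": interception_rate}


predicted = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
actual    = [1, 0, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = detection_metrics(predicted, actual)
print(metrics)
```

Reporting precision and recall together matters here because the interception rate alone can rise simply because more defective stock arrived, not because detection improved.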

Communication with sellers

When mislabeling or consistent defects emerge from a specific batch, generate alerts that detail the issue. This feedback loop helps prevent repeat mistakes. Summaries from the multimodal LLM highlight any systematic errors.
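A batch-level alert rule could be as simple as counting repeated defect types per seller and batch. The event tuple layout, the `min_count` cut-off, and the message format below are all hypothetical; they only illustrate the aggregation step that would precede any LLM-written summary.

```python
from collections import Counter, defaultdict


def batch_alerts(defect_events, min_count=3):
    """Build alert messages for defect types that repeat within one batch.

    defect_events is an iterable of (seller_id, batch_id, defect_type) tuples.
    """
    counts = defaultdict(Counter)
    for seller_id, batch_id, defect_type in defect_events:
        counts[(seller_id, batch_id)][defect_type] += 1

    alerts = []
    for (seller_id, batch_id), by_type in sorted(counts.items()):
        for defect_type, n in sorted(by_type.items()):
            if n >= min_count:
                alerts.append(
                    f"Seller {seller_id}, batch {batch_id}: "
                    f"{n} items flagged for '{defect_type}'"
                )
    return alerts


events = (
    [("S1", "B7", "bent box")] * 3
    + [("S1", "B7", "torn cover")]
    + [("S2", "B1", "bent box")] * 2
)
alerts = batch_alerts(events)
print(alerts)
```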

Conclusion

An integrated pipeline combining computer vision, generative AI, and human feedback supports an automated, end-to-end strategy for catching defects before shipment. Real-time interception of defective products prevents customer dissatisfaction. Close collaboration with fulfillment operations and clear communication with sellers amplify the impact.

Potential Follow-Up Questions

1) How do you ensure the model remains robust to new or rare defect types?

Models often fail on unseen defect categories. Training with diverse examples is key. Self-supervised pretraining helps learn item representations. Unusual patterns trigger a novelty detection module, which flags items for human review and subsequent labeling. This feedback expands the training set.
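One simple way to realize a novelty detection module is to measure how far an item's embedding sits from every known class centroid. The sketch below assumes embeddings are already available as plain lists of floats. The two-dimensional vectors, helper names, and threshold are toy values for illustration, and distance-to-centroid is only one of several possible novelty scores.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def novelty_score(embedding, centroids):
    """Euclidean distance from the embedding to the nearest known centroid."""
    return min(math.dist(embedding, c) for c in centroids)


def needs_human_review(embedding, centroids, threshold):
    """Flag items whose embedding is far from everything seen in training."""
    return novelty_score(embedding, centroids) > threshold


# Toy 2-D embeddings for two known classes.
known = {
    "acceptable": [[0.0, 0.0], [0.2, 0.1]],
    "torn_cover": [[1.0, 1.0], [1.2, 0.9]],
}
centroids = [centroid(vectors) for vectors in known.values()]

print(needs_human_review([0.1, 0.1], centroids, threshold=0.5))
print(needs_human_review([3.0, 3.0], centroids, threshold=0.5))
```

Items that trip the threshold go to a reviewer, and their labels become new training examples, which is the feedback loop described above.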

2) How do you handle mislabeled products when the label is partially visible?

Partial text scanning can raise uncertainty. A probabilistic OCR approach or language modeling infers possible matches for incomplete text. The system calculates a confidence score. Items below a threshold score are escalated for manual or automated re-check. This approach balances speed and accuracy.
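The confidence-and-escalate logic can be sketched with Python's standard `difflib`. Here the score is the share of the visible fragment that appears contiguously in a candidate label. That scoring rule, the tie-handling, and the 0.8 threshold are assumptions for illustration rather than a description of any particular OCR product.

```python
from difflib import SequenceMatcher


def fragment_score(fragment, candidate):
    """Fraction of the fragment covered by its longest contiguous match in candidate."""
    a, b = fragment.lower(), candidate.lower()
    if not a:
        return 0.0
    matcher = SequenceMatcher(None, a, b, autojunk=False)
    match = matcher.find_longest_match(0, len(a), 0, len(b))
    return match.size / len(a)


def match_partial_label(fragment, candidates, threshold=0.8):
    """Return (best_candidate, score, escalate) for a partially visible label.

    Escalate when the best score is below the threshold or when two
    candidates tie for best, since the fragment cannot tell them apart.
    """
    scored = sorted(((fragment_score(fragment, c), c) for c in candidates), reverse=True)
    best_score, best = scored[0]
    ambiguous = len(scored) > 1 and scored[1][0] == best_score
    return best, best_score, (best_score < threshold or ambiguous)


candidates = ["Queen Size Sheet Set", "King Size Sheet Set"]
print(match_partial_label("een Size Sh", candidates))
print(match_partial_label("Size Sheet", candidates))
```

The second call shows why a high score alone is not enough: the fragment matches both labels perfectly, so the item is escalated despite a score of 1.0.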

3) How do you handle the risk of high false positives, which could slow down operations?

Tuning the threshold on the classifier’s confidence score mitigates this. A well-chosen threshold reduces false positives without missing many true defects. Regularly reviewing the confusion matrix shows whether precision and recall remain in an acceptable balance. If false positives spike, retrain the model or readjust the threshold.
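Threshold selection on a labeled validation set can be sketched as follows. The rule used here, picking the lowest threshold that still meets a minimum precision so that recall stays as high as possible, is one reasonable policy among several. The function name, scores, and precision target are illustrative assumptions.

```python
def pick_threshold(scores, labels, min_precision=0.9):
    """Return (threshold, precision, recall) for the lowest qualifying threshold.

    Items with score >= threshold are flagged. Recall can only fall as the
    threshold rises, so the first threshold meeting the precision target
    also gives the best recall among qualifying thresholds.
    Returns None if no threshold meets the target.
    """
    for t in sorted(set(scores)):
        flagged = [s >= t for s in scores]
        tp = sum(1 for f, y in zip(flagged, labels) if f and y == 1)
        fp = sum(1 for f, y in zip(flagged, labels) if f and y == 0)
        fn = sum(1 for f, y in zip(flagged, labels) if not f and y == 1)
        precision = tp / (tp + fp)  # at least one item is flagged at every candidate t
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        if precision >= min_precision:
            return t, precision, recall
    return None


scores = [0.95, 0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
print(pick_threshold(scores, labels, min_precision=0.9))
print(pick_threshold(scores, labels, min_precision=0.8))
```

Relaxing the precision target from 0.9 to 0.8 lowers the chosen threshold and recovers the defect that the stricter setting missed, which makes the operational trade-off explicit.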

4) What if the camera angles or lighting conditions differ across fulfillment centers?

Domain shifts occur. Transfer learning handles site-to-site variations. A base model is pretrained on a large, diverse dataset. A smaller site-specific dataset fine-tunes the final layers. Continuous monitoring identifies performance drops, prompting additional calibration for local lighting or angle differences.
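The monitoring half of this answer can be sketched as a per-site comparison against a baseline. The site names, the recall figures, and the `max_drop` tolerance are made-up values for illustration; in practice the baseline would come from the validation results recorded when the site-specific model was deployed.

```python
def sites_needing_recalibration(site_recall, baseline_recall, max_drop=0.05):
    """Return the sites whose recall has fallen more than max_drop below baseline.

    site_recall maps a site name to its recall on recently audited items.
    """
    return sorted(
        site
        for site, recall in site_recall.items()
        if baseline_recall - recall > max_drop
    )


# Hypothetical weekly audit results per fulfillment center.
weekly_recall = {"site_a": 0.91, "site_b": 0.84, "site_c": 0.93}
flagged_sites = sites_needing_recalibration(weekly_recall, baseline_recall=0.92)
print(flagged_sites)
```

A flagged site would then be a candidate for the fine-tuning step described above, using freshly labeled images from that location.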

5) How do you incorporate multimodal large language models for more complex scenarios?

Textual complaints from customers combine with images. The large language model interprets the textual context. The vision-language model extracts semantic features from images. The final system merges these outputs to determine if the text correlates with an actual defect. This approach addresses nuances like color mismatch or sizing issues.

6) How do you ensure the generative AI model’s outputs are accurate and not hallucinated?

An internal verification layer checks consistency. For instance, if the model claims “box is torn,” the vision model must confirm a visible tear. Conflict triggers a re-check or a fallback to a simpler classification pipeline. Implementation of guardrails (like factual consistency checks) preserves reliability.
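A consistency check of this kind can be expressed as a comparison between the defects named in the generated explanation and the detections reported by the vision model. The sketch assumes the claims have already been mapped to a shared label vocabulary. The label strings, the confidence floor, and the function name are hypothetical.

```python
def verify_claims(claimed_defects, detections, min_confidence=0.6):
    """Split LLM-claimed defects into confirmed and unsupported.

    claimed_defects: defect labels extracted from the generated explanation.
    detections: mapping of defect label -> vision-model confidence.
    """
    confirmed, unsupported = [], []
    for claim in claimed_defects:
        if detections.get(claim, 0.0) >= min_confidence:
            confirmed.append(claim)
        else:
            unsupported.append(claim)
    return {
        "confirmed": confirmed,
        "unsupported": unsupported,
        # Any unsupported claim triggers a re-check or the simpler fallback pipeline.
        "needs_recheck": bool(unsupported),
    }


result = verify_claims(
    ["torn box", "broken seal"],
    {"torn box": 0.91, "broken seal": 0.2},
)
print(result)
```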

7) How do you evaluate whether this project meaningfully reduces returns?

Compare return volume and defect-related complaints before and after deployment. Track the proportion of defects caught by the new pipeline. Decreasing return rates and fewer defect complaints mean it is working. A deeper analysis of trends in negative feedback or shipping anomalies measures long-term gains.
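A before-and-after comparison of return rates can be checked with a two-proportion z-test, sketched below with the standard library only. The order and return counts are invented for illustration. The test also assumes the two periods are otherwise comparable, which seasonality or catalog changes can violate, so a controlled rollout across sites would give stronger evidence.

```python
import math


def return_rate_z_test(returns_before, orders_before, returns_after, orders_after):
    """One-sided two-proportion z-test for a decrease in return rate.

    Returns (rate_before, rate_after, z, p_value), where p_value is the
    probability of a z this large or larger if the true rates were equal.
    """
    rate_before = returns_before / orders_before
    rate_after = returns_after / orders_after
    pooled = (returns_before + returns_after) / (orders_before + orders_after)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / orders_before + 1 / orders_after))
    z = (rate_before - rate_after) / std_err
    # Upper-tail probability of the standard normal: 1 - Phi(z).
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return rate_before, rate_after, z, p_value


# Hypothetical counts: 5.0% returns before deployment, 4.0% after.
rate_before, rate_after, z, p_value = return_rate_z_test(500, 10_000, 400, 10_000)
print(rate_before, rate_after, round(z, 2), p_value < 0.001)
```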

8) How do you deal with privacy and data security concerns around product images?

Blur or obfuscate any sensitive textual data not relevant to classification. Secure all images and logs in controlled-access storage. Use encryption both at rest and in transit. Implement role-based access control on the image pipeline. Regular compliance audits ensure the data usage respects privacy regulations.

9) How would you refine your approach for future expansions, like international locations with different languages?

Adding multilingual OCR and domain adaptation for various packaging norms is crucial. A consistent data pipeline collects language-specific training data. Additional modules handle right-to-left scripts or other character sets. Continuous improvement cycles incorporate local quality checks and feedback loops.

10) How would you ensure minimal operational disruption?

Keep the model inference pipeline asynchronous from the main conveyor flow. Defective items get diverted without halting everything. A backup failsafe allows items flagged uncertain to move forward but get a secondary scan. This approach prevents throughput bottlenecks while maintaining strong interception rates.

All these details form a comprehensive strategy for a Senior Data Scientist interview scenario. The architecture, continuous learning loop, and practical considerations prepare you for follow-up challenges.