ML Case-study Interview Question: Accurate E-commerce Delivery Prediction via CatBoost Quantile Regression


Case-Study question

A large e-commerce retailer wants to improve its delivery-date predictions for small-parcel products shipped directly by external suppliers. They have a system that adds distinct, hand-crafted time estimates for each stage in the supply chain. They keep adding buffer time to reduce missed promises. This leads to inflated arrival dates, lowered customer satisfaction, and a dip in sales conversions. The retailer wants an end-to-end machine learning solution that gives a more accurate, reliable delivery promise for every product-page view. How would you design and implement a solution that addresses this requirement?

Detailed Solution

Problem Understanding

They have many suppliers and warehouses. Their old approach breaks down each delivery step (warehouse handling, shipping, etc.), sums those times, and inserts padding. This approach fails to capture correlations between steps and forces them to use conservative time estimates. A holistic solution is needed to reduce excessive padding while still preserving high on-time delivery rates.

Model Choice

They switch to a gradient-boosted decision tree (GBT) model built with a library such as CatBoost, which efficiently handles large tabular datasets, many categorical features like supplier identifiers, and time-based or distance-related features. They adopt quantile regression to predict directly the lead time needed to meet a chosen reliability target. Quantile regression also lets them tune the trade-off between promising fast delivery and avoiding missed promises.

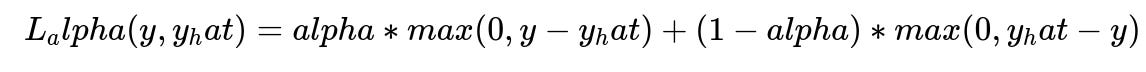

Where:

y is the actual delivery time from order placement to arrival.

y_hat is the predicted delivery time.

alpha is the chosen quantile (for example, 0.9 if they want 90% on-time delivery).

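max(0, x) returns x when x is positive and 0 otherwise (the ReLU function written in text form), so for any single prediction only one of the two terms is nonzero.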

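The alpha term sets the asymmetry between underestimates and overestimates. With alpha = 0.9, each day by which the prediction falls short of the actual delivery time (a missed promise) costs 0.9, while each day of excess padding costs only 0.1, which pushes the prediction toward the 90th percentile of delivery time.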
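A minimal NumPy sketch of the loss above, useful for checking the asymmetry numerically. The name `pinball_loss` is illustrative; when training with CatBoost itself, the built-in `Quantile:alpha=...` loss setting would normally be used instead of hand-written code.

```python
import numpy as np


def pinball_loss(y, y_hat, alpha):
    """Mean quantile (pinball) loss at quantile level alpha in (0, 1)."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    under = np.maximum(0.0, y - y_hat)  # delivery took longer than predicted (missed promise)
    over = np.maximum(0.0, y_hat - y)   # prediction was longer than needed (excess padding)
    return float(np.mean(alpha * under + (1.0 - alpha) * over))


# A 2-day underestimate vs. a 2-day overestimate at alpha = 0.9.
late = pinball_loss([5.0], [3.0], alpha=0.9)   # 0.9 * 2
early = pinball_loss([5.0], [7.0], alpha=0.9)  # (1 - 0.9) * 2
```

The late prediction costs nine times as much as the early one, which is exactly the 0.9 : 0.1 weighting in the formula.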

Feature Engineering

They feed the model with:

Supplier-specific historical performance (recent average lead time).

Distance between the supplier's warehouse and the buyer's address.

Variability in supplier lead times (for example, their standard deviation), which flags suppliers known to fluctuate.

Time-of-day and day-of-week flags, including special holidays or known closures that affect shipping flow.

Supplier identity as a categorical feature to capture unique hidden processes within each supplier.

These inputs help the model learn how different suppliers, distances, and timing patterns affect delivery times.
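The sketch below shows how a few of these features might be derived from raw order history using only the Python standard library. The record layout, the `supplier_stats` and `calendar_features` helpers, and the holiday set are hypothetical; a production pipeline would likely compute such statistics over a recent window rather than the full history.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean, pstdev

# Hypothetical order history: (supplier_id, order_timestamp, lead_time_days)
history = [
    ("S1", datetime(2024, 3, 4, 9), 3.0),
    ("S1", datetime(2024, 3, 6, 15), 5.0),
    ("S2", datetime(2024, 3, 9, 11), 4.0),
]


def supplier_stats(rows):
    """Per-supplier mean and spread of historical lead times."""
    by_supplier = defaultdict(list)
    for supplier_id, _, lead_time in rows:
        by_supplier[supplier_id].append(lead_time)
    return {
        s: {"lead_mean": mean(v), "lead_std": pstdev(v), "n_orders": len(v)}
        for s, v in by_supplier.items()
    }


def calendar_features(ts, holidays=frozenset()):
    """Time-of-day, day-of-week, and holiday flags for an order timestamp."""
    return {
        "hour": ts.hour,
        "day_of_week": ts.weekday(),        # Monday = 0
        "is_weekend": ts.weekday() >= 5,
        "is_holiday": ts.date() in holidays,  # holidays: a set of datetime.date objects
    }


stats = supplier_stats(history)
# stats["S1"] -> lead_mean 4.0, lead_std 1.0, n_orders 2
saturday = calendar_features(datetime(2024, 3, 9, 11))
# saturday["day_of_week"] == 5 and saturday["is_weekend"] is True
```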

Training Strategy

They use a wide lookback window to cover seasonality and special sales events. They apply recency weighting so that newer data has more influence. This balances the need to capture long-term patterns (like annual holidays) with short-term changes (like a recent supply chain bottleneck).
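One common way to implement recency weighting is exponential decay by order age, passed to the trainer as per-row sample weights (CatBoost's `fit` accepts a `sample_weight` argument). The `recency_weights` helper and the 90-day half-life below are assumptions to be tuned, not figures from the source.

```python
import numpy as np


def recency_weights(order_dates, as_of, half_life_days=90.0):
    """Exponential-decay weights: an order half_life_days old counts half as much as today's."""
    ages = (np.datetime64(as_of, "D") - np.asarray(order_dates, dtype="datetime64[D]")).astype(float)
    return 0.5 ** (ages / half_life_days)


dates = ["2024-06-30", "2024-04-01", "2023-07-01"]
w = recency_weights(dates, as_of="2024-06-30", half_life_days=90.0)
# Today's order gets weight 1.0, the 90-day-old order 0.5, the year-old order about 0.06.
```

A long lookback with a moderate half-life keeps last year's holiday peak in the training set while letting recent bottlenecks dominate.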

They further customize the loss function for different speed groups, allowing them to predict multiple quantiles in a single training pass. They modify the CatBoost source code to handle these specialized requirements efficiently.
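The source does not publish its modified CatBoost loss. As a rough illustration of what a multi-quantile objective involves, the sketch below sums the pinball loss over several quantile levels (one prediction column per level) and computes the per-sample derivatives a boosting library would need. The quantile levels and function names are examples, and the sign and second-derivative conventions for custom objectives differ between libraries.

```python
import numpy as np

ALPHAS = np.array([0.7, 0.8, 0.9])  # example quantile levels, one per output column


def multi_quantile_loss(y, y_hat, alphas=ALPHAS):
    """Mean pinball loss per quantile level, summed over levels.

    y: shape (n,), observed delivery times.
    y_hat: shape (n, k), one predicted column per quantile level.
    """
    diff = np.asarray(y, dtype=float)[:, None] - np.asarray(y_hat, dtype=float)  # (n, k)
    per_quantile = np.maximum(alphas * diff, (alphas - 1.0) * diff)
    return float(per_quantile.mean(axis=0).sum())


def multi_quantile_grad(y, y_hat, alphas=ALPHAS):
    """Per-sample derivative w.r.t. each prediction (subgradient 0 where y == y_hat)."""
    diff = np.asarray(y, dtype=float)[:, None] - np.asarray(y_hat, dtype=float)
    return np.where(diff > 0, -alphas, np.where(diff < 0, 1.0 - alphas, 0.0))


y = np.array([5.0, 5.0])
y_hat = np.array([[4.0, 4.0, 4.0],   # under-predicts every quantile
                  [6.0, 6.0, 6.0]])  # over-predicts every quantile
loss = multi_quantile_loss(y, y_hat)  # (0.7 + 0.3)/2 + (0.8 + 0.2)/2 + (0.9 + 0.1)/2 = 1.5
grad = multi_quantile_grad(y, y_hat)
```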

Inference and Latency Considerations

They must return a delivery estimate at page load for each product. They handle this with an optimized service that:

Caches frequently used features (for example, a supplier’s average lead time).

Minimizes network calls by carefully orchestrating the feature retrieval pipeline.

Keeps inference latency within a budget of tens of milliseconds, helped by CatBoost's optimized prediction code and the caching above.
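A minimal sketch of the caching idea, assuming a single-process service and a hypothetical `load_supplier_features` lookup: entries expire after a time-to-live so that cached supplier averages cannot go stale indefinitely. The `TTLCache` class is illustrative and not thread-safe as written; a concurrent service would need a lock or an off-the-shelf cache.

```python
import time


class TTLCache:
    """Tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]                       # fresh entry: no remote call
        value = loader(key)                     # miss or stale entry: fetch once
        self._store[key] = (now + self.ttl, value)
        return value


# Hypothetical loader standing in for a feature-store lookup.
calls = []


def load_supplier_features(supplier_id):
    calls.append(supplier_id)
    return {"lead_mean": 4.2}


cache = TTLCache(ttl_seconds=300)
cache.get_or_load("S1", load_supplier_features)
cache.get_or_load("S1", load_supplier_features)  # served from memory, no second lookup
```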

Results

They run an A/B test comparing the new model with the old system. They keep the same on-time delivery rate (fraction of orders arriving by the promised date) but cut the gap between promised and actual delivery times in half. Users see faster estimated delivery, conversions go up, and the retailer gains net revenue.

Potential Follow-Up Questions

1) How do you maintain a specific on-time delivery rate with a quantile regression approach?

Quantile regression directly predicts the lead time needed to meet a chosen probability of on-time delivery. If the business wants 90% on-time delivery, setting alpha = 0.90 in the quantile loss makes the model's prediction y_hat target the 90th percentile of delivery time. If the model is well calibrated, the actual delivery time exceeds the prediction in about 10% of cases, so roughly 90% of orders arrive on or before the promised date. Using different alpha values (like 0.85 or 0.95) provides fine-grained control over the trade-off between speed and reliability.
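The claim that alpha = 0.9 targets the 90th percentile can be checked numerically. The sketch below uses synthetic, right-skewed delivery times (not real data) and searches for the constant prediction that minimizes the mean pinball loss; roughly 90% of the synthetic orders fall at or below that minimizer.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic delivery times in days (right-skewed, as lead times usually are).
y = 1.0 + rng.gamma(shape=4.0, scale=1.5, size=1000)


def mean_pinball(y, c, alpha):
    """Mean pinball loss of predicting the constant c for every order."""
    diff = y - c
    return np.mean(np.maximum(alpha * diff, (alpha - 1.0) * diff))


alpha = 0.9
candidates = np.sort(y)
losses = np.array([mean_pinball(y, c, alpha) for c in candidates])
best = candidates[np.argmin(losses)]

coverage = np.mean(y <= best)  # share of orders arriving by this "promise", close to 0.9
```

The minimizer lands where the fraction of observations below it equals alpha, because that is where the loss's slope, (1 - alpha) times the count below minus alpha times the count above, changes sign.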

2) Why not train separate models for each quantile?

Separately trained models can produce inconsistent predictions across speed thresholds, for example a 0.8-quantile estimate that exceeds the 0.9-quantile estimate for the same order (quantile crossing). Each model must also be trained on the same large dataset, which multiplies training cost, and serving several models multiplies inference cost. A single model that predicts multiple quantiles at once (for example, 0.7, 0.8, 0.9) is simpler to maintain and serves all business segments. Modifying the CatBoost source code for a custom multi-quantile loss accommodates this in one training pass and one inference step.
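Whether predictions come from separate models or from one multi-output model (which is not automatically guaranteed to be monotone either), a cheap safeguard against quantile crossing is to sort each row of predictions, a post-processing step known as rearrangement. This is an illustrative addition rather than part of the described system; `fix_quantile_crossing` and the input values are made up.

```python
import numpy as np


def fix_quantile_crossing(preds):
    """Sort each row so predictions are non-decreasing in the quantile level.

    preds: shape (n, k), columns ordered by increasing alpha (e.g. 0.7, 0.8, 0.9).
    """
    return np.sort(np.asarray(preds, dtype=float), axis=1)


# Row 0 is crossed: the 0.8 prediction (6.5 days) exceeds the 0.9 prediction (6.0 days).
raw = np.array([[5.0, 6.5, 6.0],
                [4.0, 4.5, 5.5]])
fixed = fix_quantile_crossing(raw)
# fixed[0] becomes [5.0, 6.0, 6.5]; fixed[1] is already ordered and stays the same.
```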

3) Why is distance an important feature if the model already gets supplier and customer zip codes?

A model can, in principle, learn distance from raw zip codes given enough data. But an explicit distance feature saves training time and reduces data requirements because it already encodes a key relationship: more distance usually means more transit time. It also improves generalization when the model encounters new suppliers or rarely seen routes.
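A sketch of an explicit distance feature, assuming zip codes have already been mapped to approximate latitude and longitude (for example, zip-code centroids from a hypothetical geocoding table). The haversine formula gives great-circle distance; road or carrier-network distance would be more precise but requires routing data.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


one_degree = haversine_km(0.0, 0.0, 1.0, 0.0)  # about 111.2 km (one degree of latitude)
```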

4) How do you handle new or rarely seen suppliers?

They rely on aggregated performance metrics (like average handling time) rather than supplier IDs alone. These generic features, together with distance, let the model estimate how a new supplier will perform from whatever limited history exists. Once the new supplier accumulates enough orders, its own performance statistics outweigh the aggregated ones.
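One way to realize this hand-off from aggregate to supplier-specific statistics is additive smoothing (a simple form of empirical-Bayes shrinkage): the supplier's average is blended with a prior such as a regional average, weighted by how many orders the supplier has. This is a swapped-in illustration, not the source's stated method; `smoothed_lead_time` and `prior_strength` are hypothetical.

```python
def smoothed_lead_time(supplier_sum, supplier_count, prior_mean, prior_strength=20.0):
    """Blend a supplier's own average lead time with a prior mean.

    prior_strength acts like a number of "virtual" orders at the prior mean:
    with few real orders the prior dominates; with many, the supplier's history does.
    """
    return (supplier_sum + prior_strength * prior_mean) / (supplier_count + prior_strength)


regional_mean = 4.0  # hypothetical regional average lead time in days
new_supplier = smoothed_lead_time(0.0, 0, regional_mean)           # 4.0, pure prior
few_slow_orders = smoothed_lead_time(3 * 7.0, 3, regional_mean)    # 101/23, about 4.39
many_slow_orders = smoothed_lead_time(500 * 7.0, 500, regional_mean)  # 3580/520, about 6.88
```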

5) What special engineering is needed to meet the strict page-load latency targets?

They minimize network round trips by co-locating the prediction service with the main customer-facing service. They cache frequent lookups such as supplier average lead times. They keep a warm model instance in memory so the model does not need to be re-initialized for each request. They also use efficient concurrency and custom CatBoost inference calls to handle high traffic volumes within tight millisecond budgets.
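To check that the service actually meets its page-load budget, tail latency matters more than the average. The sketch below times repeated calls to a prediction function after a warm-up pass and reports p50 and p99 in milliseconds. `latency_percentiles_ms` and `fake_predict` are hypothetical (the latter stands in for the warm, in-memory model), and the 20 ms budget is an assumed figure.

```python
import statistics
import time


def latency_percentiles_ms(predict, requests, warmup=10):
    """Time each call to `predict` and report p50/p99 latency in milliseconds."""
    for r in requests[:warmup]:  # warm caches and any lazy initialization first
        predict(r)
    samples = []
    for r in requests:
        start = time.perf_counter()
        predict(r)
        samples.append((time.perf_counter() - start) * 1000.0)
    cuts = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {"p50": cuts[49], "p99": cuts[98]}


def fake_predict(features):
    """Stand-in for a warm model: a trivial computation."""
    return sum(features) * 0.1


latency = latency_percentiles_ms(fake_predict, [[1.0, 2.0, 3.0]] * 1000)
budget_ms = 20.0  # assumed page-load budget for the model call
within_budget = latency["p99"] <= budget_ms
```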

6) If you wanted to extend the solution to shipments requiring two-person delivery, what changes would you make?

They would add specialized features for such shipments. Two-person deliveries often follow different warehouse pathways, may use specialized carriers, and often face distinct weekend constraints. The model would incorporate new categorical variables (for example, carrier IDs for these carriers) and new numeric features (such as average performance of two-person teams). They could either train on data specific to these shipping methods or train jointly on the unified dataset with a flag that distinguishes one-person from two-person shipping.

7) How do you avoid overfitting given so many features and supplier IDs?

They use the regularization settings built into the GBT framework, such as limiting tree depth or applying shrinkage (a smaller learning rate). They confirm generalization with cross-validation, using time-ordered splits so that validation orders always come after training orders. They drop extremely sparse features or rely on aggregated representations. If a supplier has too few historical deliveries, the model leans on more general features such as distance or typical handling time in that region.
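A minimal sketch of one aggregated representation for sparse IDs: suppliers seen fewer than `min_count` times are merged into a shared bucket, so the model cannot memorize a handful of orders. The `bucket_rare_categories` helper, the threshold, and the `__RARE__` token are assumptions; in practice the counts would come from the training window only, and the same mapping would be reused at inference.

```python
from collections import Counter


def bucket_rare_categories(values, min_count=50, other="__RARE__"):
    """Replace categories seen fewer than min_count times with a shared token."""
    counts = Counter(values)
    return [v if counts[v] >= min_count else other for v in values]


supplier_ids = ["S1"] * 120 + ["S2"] * 60 + ["S3"] * 4 + ["S4"] * 1
bucketed = bucket_rare_categories(supplier_ids, min_count=50)
# S1 and S2 keep their identity; the 5 orders from S3 and S4 share one "__RARE__" category.
```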

8) Could neural networks outperform CatBoost in this scenario?

Neural networks sometimes excel with large unstructured inputs. Here, the data is structured, and GBTs often handle tabular data better, especially with numerous categorical features. They also need extremely fast inference, and GBTs are simpler to optimize for low-latency serving. However, they might explore transformer-based models to capture time-series patterns and more intricate relationships if future performance gains justify the complexity.

9) How do you measure final success beyond just the on-time delivery rate?

They monitor:

The gap between promised and actual delivery (should be minimal).

The fraction of deliveries meeting or beating the promise.

Sales conversions (improvements indicate that shorter promise windows attract more purchases).

Customer satisfaction or net promoter score (high timeliness and reliability foster return customers).

Together, these metrics show whether faster promises are eroding customer trust and whether improved conversion translates into profitable, repeat sales.
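The first two metrics can be computed directly from logged promises and outcomes, as in the sketch below (conversions and satisfaction scores come from separate business systems). The function name and metric definitions are illustrative; `mean_slack_on_time` measures how much padding remained on orders that met their promise, and it returns NaN (with a NumPy warning) if no order met its promise.

```python
import numpy as np


def promise_metrics(promised_days, actual_days):
    """Reliability and tightness of delivery promises."""
    promised = np.asarray(promised_days, dtype=float)
    actual = np.asarray(actual_days, dtype=float)
    on_time = actual <= promised
    return {
        "on_time_rate": float(on_time.mean()),
        # Average padding on orders that met the promise (smaller means tighter promises).
        "mean_slack_on_time": float((promised - actual)[on_time].mean()),
        # Average absolute promised-vs-actual gap across all orders.
        "mean_abs_gap": float(np.abs(promised - actual).mean()),
    }


m = promise_metrics(promised_days=[5, 5, 4, 6], actual_days=[3, 5, 5, 4])
# on_time_rate 0.75; slack on on-time orders (2 + 0 + 2) / 3; mean_abs_gap (2 + 0 + 1 + 2) / 4
```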

10) How might you handle long-term changes or shifting supplier performance?

They retrain regularly on rolling data windows and apply recency weighting to adapt to changing behavior. They also keep supply chain experts in the loop. If a major shift happens, such as a new holiday schedule or a large supply chain disruption, they feed in new data quickly so the model stays accurate. They also set up anomaly monitoring to detect large deviations in the supply chain and to trigger immediate retraining or rule-based overrides when needed.
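A simple form of the anomaly monitoring described above is a rolling z-score on each supplier's daily average lead time. The `LeadTimeMonitor` class, the 28-day window, the 7-day minimum history, and the threshold of 3 standard deviations are assumed values; what happens after an alert (retraining or a rule-based override) is left to the surrounding system.

```python
from collections import deque
from statistics import mean, pstdev


class LeadTimeMonitor:
    """Flags a supplier's daily average lead time when it drifts far from recent history."""

    def __init__(self, window=28, z_threshold=3.0, min_history=7):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def observe(self, daily_mean_lead_time):
        alert = False
        if len(self.history) >= self.min_history:  # need some history before judging
            mu, sigma = mean(self.history), pstdev(self.history)
            if sigma > 0 and abs(daily_mean_lead_time - mu) / sigma > self.z_threshold:
                alert = True
        self.history.append(daily_mean_lead_time)
        return alert


monitor = LeadTimeMonitor()
normal_days = [4.0, 4.2, 3.9, 4.1, 4.0, 3.8, 4.2, 4.1]
alerts = [monitor.observe(x) for x in normal_days]  # no alerts on ordinary days
spike_alert = monitor.observe(9.0)                 # a sudden jump to 9 days is flagged
```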

11) Could you integrate external data sources to further refine predictions?

They might add external shipping partner data, weather patterns, real-time traffic, or macroeconomic indicators if these provide consistent signals. The cost–benefit ratio is key. If external data is reliable and easily integrated, it can shrink predicted delivery windows further. However, if the data is noisy or expensive to fetch, it might not boost accuracy enough to justify the added complexity and latency overhead.

12) How would you test the system before a full production rollout?

They run offline tests on historical data: train on a past window and validate on subsequent orders. They check coverage (on-time fraction) and average promise accuracy. They do a controlled A/B test in production with a subset of visitors to confirm real-world behavior. They carefully measure if the new approach reduces the promised vs actual gap, maintains or improves reliability, and increases conversions. Only after stable and favorable results do they expand to the entire user base.
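The offline test can be organized as a rolling backtest, where every fold trains only on orders placed before its validation period. The sketch below generates those date windows; the `rolling_backtest_windows` helper, the one-year lookback, and the four-week validation period are assumptions. Each fold would then be scored on on-time fraction and the promised-versus-actual gap.

```python
from datetime import date, timedelta


def rolling_backtest_windows(start, end, train_days=365, test_days=28):
    """Yield (train_start, train_end, test_end) windows that always train on the past.

    Each fold trains on [train_start, train_end) and validates on [train_end, test_end),
    then the whole window slides forward by test_days.
    """
    train_end = start + timedelta(days=train_days)
    while train_end + timedelta(days=test_days) <= end:
        yield (train_end - timedelta(days=train_days), train_end,
               train_end + timedelta(days=test_days))
        train_end += timedelta(days=test_days)


folds = list(rolling_backtest_windows(date(2023, 1, 1), date(2024, 3, 1)))
for train_start, train_end, test_end in folds:
    assert train_start < train_end < test_end  # validation always follows training
```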

13) How do you ensure efficient collaboration between data scientists, engineering teams, and supply chain stakeholders?

They keep a common understanding of the supply chain logic, ensure data pipelines are well-documented, and maintain open communication. They confirm that feature definitions align with real operational processes, so the model reflects reality. They also coordinate with engineering for real-time inference needs, performance testing, and fallback strategies if the model service experiences downtime.
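A fallback strategy for model downtime can be sketched as a hard deadline around the model call: if the prediction does not return in time, or raises an error, the service shows a conservative rule-based promise instead. The `promise_with_fallback` helper, the 50 ms timeout, the pool size, and `fallback_days` are hypothetical. A timed-out call keeps running in its worker thread; it is simply no longer waited on.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

_executor = ThreadPoolExecutor(max_workers=8)


def promise_with_fallback(predict, features, fallback_days, timeout_s=0.05):
    """Return (estimate, source): the model's estimate, or a rule-based one if the model is slow or failing."""
    future = _executor.submit(predict, features)
    try:
        return future.result(timeout=timeout_s), "model"
    except FutureTimeout:
        return fallback_days, "fallback_timeout"
    except Exception:  # the model call itself raised
        return fallback_days, "fallback_error"


def slow_model(_features):
    time.sleep(0.2)  # simulates a stalled model service
    return 3.0


def broken_model(_features):
    raise RuntimeError("model service unavailable")


estimate, source = promise_with_fallback(slow_model, {}, fallback_days=7.0)
# estimate == 7.0, source == "fallback_timeout"
```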

14) Why is communication with business partners crucial in such a project?

The data team relies on business partners to surface hidden supplier constraints, shipping schedules, and holiday closures that inform better features. Together they confirm that the promised date is displayed clearly to customers and that missed promises are handled in a way that preserves trust. They also align on trade-offs (for example, occasionally missing a promise for the sake of faster average delivery promises) in light of the company’s overall strategy and brand image.

15) What pitfalls could arise if the new system is rolled out globally without regional adaptation?

Supply chains differ significantly by region. Different carriers and holidays may not align with the original model. They might face data mismatch or poor performance for regions with fewer historical samples. They must retrain or adapt the features for each market to account for local shipping nuances. Uniformly applying the same model might degrade accuracy and reliability in unfamiliar regions.