ML Case-study Interview Question: Optimized Unified Transformers for Real-Time Multilingual Gaming Chat


Case-Study question

You are tasked with building and deploying a real-time multilingual translation system for a large-scale social gaming platform with over 70 million daily users. The system must support 16 languages, translating any language pair with minimal latency (roughly 100 milliseconds) to enable fluid in-experience chat conversations. Users commonly use platform-specific slang and abbreviations (e.g., “afk,” “lol,” “obby”). The solution must integrate robust safety checks for policy violations, as well as handle resource constraints and rapid throughput. How would you design, train, and deploy this translation model at scale?

Detailed Solution

A single unified transformer-based model can handle all 16 languages in one system. It eliminates the need for a separate model per translation direction, which would mean 16 × 15 = 240 models. This approach leverages linguistic similarities across languages, reduces storage and maintenance, and lets every language pair benefit from what the model learns on the others. Platform-specific slang is addressed by feeding curated slang translations into the training data. Real-time latency near 100 milliseconds is achieved by reducing model size, applying serving optimizations (quantization, compilation, dynamic batching), and caching encoder outputs.
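As a rough illustration of why one model is attractive, the sketch below counts the translation directions and shows one common way to steer a single model toward a target language: prepending a control token to the source text. The language codes and the `<2xx>` token format are assumptions for illustration; the source does not say how the target language is signalled.

```python
from itertools import permutations

# Hypothetical placeholder codes; the source only states that there are 16 languages.
LANGUAGES = [f"lang{i:02d}" for i in range(16)]


def directed_pairs(languages):
    """Return every ordered (source, target) pair with source != target."""
    return list(permutations(languages, 2))


def tag_for_target(text, target_lang):
    """Prepend an assumed target-language control token to the source text."""
    return f"<2{target_lang}> {text}"


if __name__ == "__main__":
    print(len(directed_pairs(LANGUAGES)))  # 16 * 15 = 240 directions
    print(tag_for_target("gg, brb", "es"))
```

With a tagging scheme like this, adding a language means adding data and a token rather than training a new set of pairwise models.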

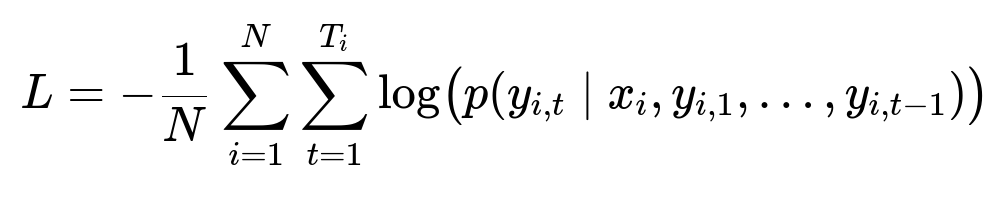

Below is one possible training objective for a neural machine translation model, using token-level cross-entropy as the loss function:

$$
\mathcal{L} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{t=1}^{T_i} \log p\left(y_{i,t} \mid x_i, y_{i,<t}\right)
$$

Here N is the number of training examples, T_i is the number of tokens in example i, and p(...) is the probability of predicting the next token y_{i,t} given the source text x_i and the previously generated tokens y_{i,<t}.
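A minimal sketch of this objective, assuming the loss is the negative log-likelihood of the reference tokens summed over each example and averaged over the N examples. The input format (a list of per-token probabilities the model assigned to the reference tokens) is a simplification for illustration; a real training loop would compute these from logits inside a deep learning framework.

```python
import math


def nmt_cross_entropy(token_probs):
    """Cross-entropy over a batch.

    token_probs[i][t] is the probability the model assigned to the
    reference token y_{i,t} given x_i and the earlier tokens.
    Returns -(1/N) * sum_i sum_t log p(y_{i,t} | x_i, y_{i,<t}).
    """
    num_examples = len(token_probs)
    total_log_prob = 0.0
    for example in token_probs:
        for p in example:
            total_log_prob += math.log(p)
    return -total_log_prob / num_examples


if __name__ == "__main__":
    # Two toy examples with 1 and 2 target tokens respectively.
    print(nmt_cross_entropy([[0.5], [0.5, 0.5]]))
```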

Pretraining uses open-source corpora plus in-platform text. Back translation extends coverage for low-resource pairs: text is translated into the other language and back again, and the round-trip output is compared with the source text to judge accuracy. Iterative back translation repeats this process, retraining on the improved synthetic pairs each round. Human-labeled in-experience chat pairs help ensure correctness. An in-house translation evaluation metric checks accuracy, fluency, and context alignment without needing a ground-truth reference. A specialized ML model labels errors as critical, major, or minor, producing a quality score for continuous improvement.
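To make the severity-based quality score concrete, here is a small sketch of how critical, major, and minor error labels could be folded into one number. The weights and the normalization by token count are assumptions chosen for illustration; the source does not describe how the in-house metric combines the labels.

```python
# Assumed penalty weights per error severity (not from the source).
SEVERITY_WEIGHTS = {"critical": 10.0, "major": 5.0, "minor": 1.0}
MAX_WEIGHT = max(SEVERITY_WEIGHTS.values())


def quality_score(error_labels, num_tokens):
    """Map a list of severity labels to a score in [0, 1].

    1.0 means no detected errors; the score drops as the weighted
    penalty per token grows and is clamped at 0.0.
    """
    penalty = sum(SEVERITY_WEIGHTS[label] for label in error_labels)
    budget = MAX_WEIGHT * max(num_tokens, 1)
    return max(0.0, 1.0 - penalty / budget)


if __name__ == "__main__":
    print(quality_score(["minor", "major"], num_tokens=6))
```

Tracking a score like this per language pair makes it easier to see which directions need more data or another back-translation round.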

Teacher-student distillation shrinks a large 1B-parameter model to a ~650M-parameter model. Quantization and model compilation further lower memory usage and speed up inference. Dynamic batching merges translation requests to minimize overhead. An embedding cache reuses encoder results across multiple target languages. The back end integrates safety checks, verifying translations for policy compliance before returning results. Recipients can see both translated and original text, allowing easy toggling.
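The embedding cache can be sketched as a small LRU cache keyed by source text, so that one encoder pass is shared by every target language requested for the same message. `EncoderCache`, `translate_to_many`, and the toy encode/decode functions are hypothetical stand-ins; a production system would cache encoder tensors and would likely key on more than the raw text.

```python
from collections import OrderedDict


class EncoderCache:
    """LRU cache of encoder outputs keyed by source text."""

    def __init__(self, encode_fn, max_entries=1024):
        self._encode_fn = encode_fn
        self._max_entries = max_entries
        self._store = OrderedDict()
        self.misses = 0  # number of real encoder calls

    def get(self, source_text):
        if source_text in self._store:
            self._store.move_to_end(source_text)  # mark as recently used
            return self._store[source_text]
        self.misses += 1
        encoding = self._encode_fn(source_text)
        self._store[source_text] = encoding
        if len(self._store) > self._max_entries:
            self._store.popitem(last=False)  # evict least recently used
        return encoding


def translate_to_many(source_text, target_langs, cache, decode_fn):
    """Encode once (or reuse the cache), then decode per target language."""
    encoding = cache.get(source_text)
    return {lang: decode_fn(encoding, lang) for lang in target_langs}


# Toy stand-ins for the real encoder and decoder.
def toy_encode(text):
    return tuple(text.lower().split())


def toy_decode(encoding, lang):
    return f"[{lang}] " + " ".join(encoding)


if __name__ == "__main__":
    cache = EncoderCache(toy_encode)
    print(translate_to_many("Hello there", ["es", "fr", "de"], cache, toy_decode))
    print(cache.misses)  # the encoder ran once for three target languages
```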

In practice, the training pipeline includes these steps:

1. Gather parallel data and domain-specific slang.
2. Conduct back translation to enrich low-resource pairs.
3. Train a large model on all language pairs.
4. Distill it to a smaller model to meet speed and memory constraints.
5. Implement inference optimizations (quantization, compilation).
6. Integrate with the chat system’s back end to handle safety checks.
7. Cache at the encoder output to serve multiple target languages quickly.
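The distillation step in the list above can be illustrated with a commonly used loss: the KL divergence between temperature-softened teacher and student distributions over the next token. This is one standard formulation, shown as an assumption; the source does not specify which distillation loss is used, and the temperature value here is arbitrary.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return kl * temperature ** 2


if __name__ == "__main__":
    # The loss is zero when the student matches the teacher and grows as they diverge.
    print(distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))
    print(distillation_loss([3.0, 0.0, 0.0], [0.0, 3.0, 0.0]))
```

In practice this term is usually combined with the ordinary cross-entropy loss on the reference translations.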

Follow-Up Questions and Answers

How do you handle slang and evolving user-specific abbreviations?

We regularly retrain or fine-tune on new slang translations gathered by human evaluators. We store trending user terms (e.g., “gg,” “afk,” “obby”) in a specialized slang dictionary, then incorporate these pairs into the parallel corpus. The model sees these examples during training, learning to map them to correct translations in all target languages.
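A minimal sketch of turning such a slang dictionary into parallel training records. The dictionary layout, the record fields, and the example translations are illustrative assumptions, not vetted platform data.

```python
# Illustrative entries only; real entries would come from human evaluators.
SLANG_DICTIONARY = {
    "gg": {"es": "bien jugado", "fr": "bien joué"},
    "afk": {"es": "lejos del teclado"},
}


def slang_to_parallel_pairs(slang_dict, source_lang="en"):
    """Flatten a {term: {lang: translation}} dictionary into training records."""
    pairs = []
    for term, translations in sorted(slang_dict.items()):
        for target_lang, translation in sorted(translations.items()):
            pairs.append(
                {
                    "src_lang": source_lang,
                    "tgt_lang": target_lang,
                    "src": term,
                    "tgt": translation,
                }
            )
    return pairs


if __name__ == "__main__":
    for record in slang_to_parallel_pairs(SLANG_DICTIONARY):
        print(record)
```

Isolated term pairs are a starting point; embedding the terms in full example sentences usually gives the model more useful context.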

What if the source language is mislabeled or contains multiple languages at once?

A unified multilingual model does not depend strictly on the source-language label, because it can infer the language from the words themselves. If the user input mixes several languages, the model still translates the whole message into the target language. Accuracy may drop, but the translated text generally remains understandable. If the input is mislabeled, the model usually still produces a reasonable translation for the same reason.

Why not just rely on an external commercial API for translation?

Commercial solutions might not handle in-platform slang or mixed languages well. They may also have higher latency and limited control over custom domain data or internal vocabulary. By building an in-house model, we optimize for our use case (real-time, large scale, slang, safety checks) and can continuously improve it with platform data.

How does the iterative back translation process improve low-resource language pairs?

We generate artificial pairs by translating existing data into the low-resource language and translating it back. We then refine the model on these synthetic pairs. Iterating helps correct mistakes in the generated translations: each pass uses a slightly better model to regenerate the synthetic data, which tends to reduce errors and improves the model’s ability to handle language pairs with scarce training examples.
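One round of this process can be sketched as a round-trip filter: translate forward, translate back, and keep the synthetic pair only if the round trip stays close to the source. The character-level similarity from `difflib`, the threshold, and the toy lookup-table translators are assumptions for illustration; retraining between rounds is omitted.

```python
from difflib import SequenceMatcher


def round_trip_filter(sentences, forward_fn, backward_fn, min_similarity=0.8):
    """Keep (source, synthetic) pairs whose round trip resembles the source."""
    kept = []
    for src in sentences:
        synthetic = forward_fn(src)
        restored = backward_fn(synthetic)
        similarity = SequenceMatcher(None, src, restored).ratio()
        if similarity >= min_similarity:
            kept.append((src, synthetic))
    return kept


# Toy stand-ins for the current model's two translation directions.
FORWARD = {"good game": "bien jugado", "see you": "nos vemos"}
BACKWARD = {"bien jugado": "good game", "nos vemos": "we see"}


if __name__ == "__main__":
    pairs = round_trip_filter(list(FORWARD), FORWARD.get, BACKWARD.get)
    print(pairs)  # only the pair that survives the round trip is kept
```

In an iterative setup, the kept pairs are added to the training data, the model is fine-tuned, and the filter is run again with the updated model.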

What are some best practices to achieve near 100ms latency at scale?

We use efficient model architectures, dynamic batching, and GPU/TPU parallelization. Model compression (distillation, quantization) reduces computational needs. We enable server-side caching of encoder embeddings. The chat application is designed to batch requests together before sending them to the inference system, reducing overhead per request.
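Dynamic batching can be sketched as grouping requests by arrival time: a batch is closed when it is full or when the oldest request in it has waited too long. The function name, the `(arrival_ms, text)` request format, and the default limits are hypothetical; production inference servers implement this with queues and timers rather than a pre-sorted list.

```python
def form_batches(requests, max_batch_size=8, max_wait_ms=5):
    """Group (arrival_ms, text) requests into batches.

    A batch is flushed when it reaches max_batch_size, or when the next
    request arrives more than max_wait_ms after the batch's first request.
    """
    batches = []
    current = []
    for arrival_ms, text in sorted(requests):
        batch_is_full = len(current) >= max_batch_size
        waited_too_long = bool(current) and arrival_ms - current[0][0] > max_wait_ms
        if current and (batch_is_full or waited_too_long):
            batches.append([t for _, t in current])
            current = []
        current.append((arrival_ms, text))
    if current:
        batches.append([t for _, t in current])
    return batches


if __name__ == "__main__":
    incoming = [(0, "a"), (1, "b"), (2, "c"), (10, "d")]
    print(form_batches(incoming, max_batch_size=2, max_wait_ms=5))
```

The wait limit is the knob that trades throughput against latency: a longer wait fills batches better but eats into the roughly 100 ms budget.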

How do you ensure real-time safety and policy checks for the translated output?

We apply the same policy filters that catch harmful or disallowed language on the final translated text. The pipeline flags suspicious words or phrases. It applies penalty logic or blocks the content if it violates terms. Model outputs go through these checks before reaching the recipient, ensuring consistent moderation across all languages.
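The ordering of translation and moderation can be sketched as a gate in front of delivery. The per-language blocklist below is a deliberately simple stand-in for the platform's real policy filters, which the source does not describe; the terms, function names, and response format are all hypothetical.

```python
# Placeholder blocklists per language; real filters would be far richer.
BLOCKLIST = {"en": {"badword"}, "es": {"palabrota"}}


def violates_policy(text, lang, blocklist=BLOCKLIST):
    """Return True if any token of the text is on the language's blocklist."""
    banned = blocklist.get(lang, set())
    tokens = (token.strip(".,!?") for token in text.lower().split())
    return any(token in banned for token in tokens)


def deliver(original, translated, target_lang):
    """Check the translated text before it reaches the recipient."""
    if violates_policy(translated, target_lang):
        return {"status": "blocked"}
    # Both texts are returned so the recipient can toggle between them.
    return {"status": "ok", "translated": translated, "original": original}


if __name__ == "__main__":
    print(deliver("hello", "hola", "es"))
    print(deliver("some message", "eso es una palabrota!", "es"))
```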

Could you extend this text translation system to voice?

The same pipeline can be used for voice if we add an automatic speech recognition (ASR) front end and text-to-speech (TTS) back end. The steps become: user speaks in one language -> ASR transcribes speech -> translation model translates -> TTS synthesizes the translation. Achieving low latency with voice requires ASR/TTS optimizations and partial streaming so we start translation before the speaker finishes. The final hurdle is preserving tone, rhythm, and emotion in TTS output.

How do you integrate user feedback to refine translations?

We can surface a feedback button for each translated message, allowing users to flag errors or offer correct translations. These new pairs feed back into the training corpus. We retrain or fine-tune with this feedback loop, steadily improving the model’s handling of domain-specific or newly emerging usage patterns.