ML Case-study Interview Question: Engine Fault Detection from Sound using Hybrid Signal Processing and Deep Learning

Case-Study question

You are given millions of engine sound recordings from vehicles, captured at idle and 2000 RPM. The Company wants an automated system to identify any engine faults, such as crankshaft issues, tappet cover anomalies, misfires, or unusual vibrations. Each sound file is roughly 7 seconds long but contains background noise (horns, chatter, etc.). Your task: Propose a method to classify the engine condition and flag potential faults without any human intervention. Present an end-to-end solution that handles noise reduction, feature extraction, and classification, using both classical signal processing and deep learning. Suggest how to tune the approach to maximize predictive performance while minimizing false alarms.

Detailed Solution

Overall approach

Use a hybrid solution that combines classical signal processing, for extracting dominant frequencies, with a deep convolutional neural network for spectrogram-based classification. Extract frequency-domain features with the short-time Fourier transform (STFT) and cepstral features with Mel Frequency Cepstral Coefficients (MFCCs). Then apply a nearest neighbors classifier to the dominant STFT frequencies, run a ResNet-based model on the MFCC spectrograms, and combine the two fault scores.

Noise removal and segment selection

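Denoise the raw audio with thresholds that preserve the engine sound while attenuating extraneous noise. Then select the 2-second window of the 7-second clip that is least contaminated by random disturbances, using zero-crossing rate (ZCR) checks over rolling windows to pick the best segment. Engine sound is dominated by low-frequency harmonics, so its ZCR is low and steady; horns, chatter, and other broadband transients raise it and make it fluctuate.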

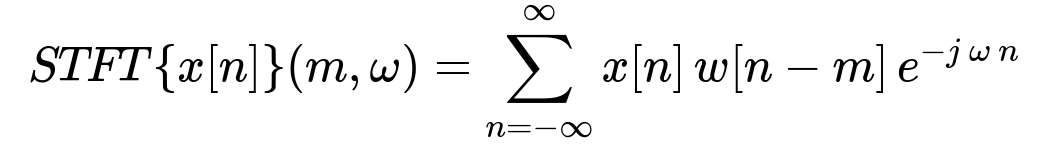
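A minimal sketch of the rolling-window selection, using NumPy only. It assumes that the "least contaminated" window is the one whose frame-level ZCR has the smallest standard deviation; the frame length, hop, and 0.25-second search step are illustrative values, and `pick_best_2sec_segment` is the hypothetical helper name from the pipeline snippet, not a library function.

```python
import numpy as np

def frame_zcr(x, frame_len=1024, hop=512):
    """Zero-crossing rate of each frame: fraction of adjacent samples that change sign."""
    n_frames = 1 + (len(x) - frame_len) // hop
    zcr = np.empty(n_frames)
    for i in range(n_frames):
        signs = np.signbit(x[i * hop : i * hop + frame_len])
        zcr[i] = np.mean(signs[1:] != signs[:-1])
    return zcr

def pick_best_2sec_segment(x, sr, win_sec=2.0, frame_len=1024, hop=512, step_sec=0.25):
    """Return the win_sec-long slice whose frame-level ZCR is steadiest.

    Assumption: "steadiest" means the smallest standard deviation of ZCR over
    the frames that lie entirely inside the candidate window.
    """
    win = int(win_sec * sr)
    if len(x) <= win:
        return x
    zcr = frame_zcr(x, frame_len, hop)
    best_start, best_score = 0, np.inf
    for start in range(0, len(x) - win + 1, int(step_sec * sr)):
        first = -(-start // hop)                     # ceil(start / hop)
        last = (start + win - frame_len) // hop + 1  # exclusive upper frame index
        score = np.std(zcr[first:last])
        if score < best_score:
            best_start, best_score = start, score
    return x[best_start : best_start + win]
```

Using the standard deviation rather than the mean favors stretches where the engine tone is stable, which matches the goal of avoiding transients; a lowest-mean-ZCR rule is a reasonable alternative to compare on real recordings.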

Short-Time Fourier Transform (STFT)

Below is the central formula for the STFT:

$$X(m, \omega) = \sum_{n=-\infty}^{\infty} x[n]\, w[n - m]\, e^{-j\omega n}$$

Here x[n] is the discrete-time audio signal, w[n] is a window function (for example, a Hann window) that, shifted to w[n - m], isolates the samples around time index m, and ω is the angular frequency in radians per sample. Sliding m across the signal yields the frequency content of each time window. The dominant frequencies reveal the engine's rotational tones and potential anomalies.
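To make the STFT sum concrete, here is a direct NumPy sketch that evaluates it on a discrete grid: frame starts m = 0, hop, 2·hop, … and frequencies ω_k = 2πk/n_fft. The Hann window, `n_fft`, `hop`, and the name `stft_magnitude` are assumptions; in practice `librosa.stft` does the same job with additional framing options.

```python
import numpy as np

def stft_magnitude(x, n_fft=1024, hop=256):
    """|X(m, w_k)| for frame starts m = i * hop and w_k = 2 * pi * k / n_fft.

    Returns shape (n_fft // 2 + 1, n_frames): rows are frequency bins and
    columns are time frames (the same layout librosa.stft uses).
    """
    w = np.hanning(n_fft)  # window w[n]; Hann is an assumed choice
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * w for i in range(n_frames)])
    # rfft computes sum_n x[n + m] w[n] e^{-j w n}. Substituting n -> n - m shows that
    # the formula's sum_n x[n] w[n - m] e^{-j w n} equals this times e^{-j w m}, a pure
    # phase factor, so the magnitudes are identical.
    spectrum = np.fft.rfft(frames, axis=1)
    return np.abs(spectrum).T
```

Bin k corresponds to k·sr/n_fft Hz, so with sr = 8000 and n_fft = 1024 a 1 kHz tone peaks at bin 128 in every frame.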

Dominant frequency clustering

Extract the main spectral peaks from the STFT of each engine recording. Compare these peaks to a labeled historical dataset of known engine conditions (both faulty and healthy engines), using a nearest neighbors classifier to match the current peaks to the closest historical signatures. If the fraction of nearest neighbors labeled faulty exceeds a threshold, flag the engine as potentially faulty.
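One possible body for the `extract_top_peaks` helper that the pipeline snippet leaves undefined, plus the thresholded neighbor vote, in plain NumPy. The `sr`, `n_fft`, and `k` arguments and the `knn_fault_score` name are assumptions; scikit-learn's `KNeighborsClassifier.predict_proba` with uniform weights gives the same vote fraction.

```python
import numpy as np

def extract_top_peaks(S, sr, n_fft, k=5):
    """Frequencies (Hz) of the k strongest local maxima of the time-averaged spectrum.

    S: magnitude STFT of shape (n_fft // 2 + 1, frames). The output is sorted by
    frequency and zero-padded to length k, so every recording yields a vector
    of the same size.
    """
    spectrum = S.mean(axis=1)
    inner = spectrum[1:-1]
    is_peak = (inner > spectrum[:-2]) & (inner > spectrum[2:])
    peak_bins = np.flatnonzero(is_peak) + 1
    strongest = peak_bins[np.argsort(spectrum[peak_bins])[::-1][:k]]
    freqs = np.sort(strongest) * sr / n_fft
    return np.pad(freqs, (0, k - len(freqs)))

def knn_fault_score(query, hist_feats, hist_labels, n_neighbors=5):
    """Fraction of the n_neighbors closest historical signatures labeled faulty (1)."""
    dists = np.linalg.norm(hist_feats - query, axis=1)
    nearest = np.argsort(dists)[:n_neighbors]
    return float(np.mean(hist_labels[nearest]))
```

A recording would be flagged when `knn_fault_score` meets the chosen threshold (0.6, say, an illustrative value). Sorting the peaks by frequency keeps the vector ordering consistent across recordings, which the Euclidean distance relies on.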

Mel Frequency Cepstral Coefficients (MFCC) spectrogram

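Convert the chosen 2-second audio window into an MFCC spectrogram, a sequence of MFCC vectors over time. It captures spectral-temporal variations closely linked to engine vibration harmonics. MFCCs are computed by passing the power spectrum of each frame through a bank of mel-spaced triangular filters, taking the logarithm of each filter's energy, and applying a discrete cosine transform (DCT) to the log energies.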
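A from-scratch NumPy sketch of the MFCC recipe (mel filterbank, then log, then DCT) makes the steps explicit. It assumes the HTK mel formula, 2595·log10(1 + f/700), and an orthonormal DCT-II; `librosa.feature.mfcc` uses a different default mel formula and dB scaling, so its values will differ. The function names are hypothetical.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft, n_mels=40):
    """Triangular filters evenly spaced on the (HTK) mel scale, shape (n_mels, n_fft//2 + 1)."""
    mel_pts = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bin_pts = np.floor((n_fft + 1) * mel_to_hz(mel_pts) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for i in range(1, n_mels + 1):
        left, center, right = bin_pts[i - 1], bin_pts[i], bin_pts[i + 1]
        for k in range(left, center):   # rising edge
            fb[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge
            fb[i - 1, k] = (right - k) / max(right - center, 1)
    return fb

def mfcc(power_spec, sr, n_fft, n_mels=40, n_mfcc=13):
    """power_spec: (n_fft//2 + 1, frames) power STFT -> (n_mfcc, frames) MFCCs."""
    mel_energy = mel_filterbank(sr, n_fft, n_mels) @ power_spec  # 1. mel filterbank
    log_mel = np.log(mel_energy + 1e-10)                         # 2. log energies
    n = np.arange(n_mels)                                        # 3. orthonormal DCT-II
    dct = np.cos(np.pi / n_mels * (n + 0.5)[None, :] * np.arange(n_mfcc)[:, None])
    dct *= np.sqrt(2.0 / n_mels)
    dct[0] /= np.sqrt(2.0)
    return dct @ log_mel
```

The DCT decorrelates neighboring mel bands, so the first few coefficients summarize the spectral envelope compactly, which is why 13 to 40 coefficients are typical.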

Convolutional neural network for classification

Feed the MFCC spectrogram into a ResNet-like architecture. Treat the 2D spectrogram as an image. Train on a labeled dataset of engine recordings, with ground-truth labels indicating healthy or faulty engines. The model learns subtle frequency and amplitude variations that indicate mechanical issues.
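A compact PyTorch sketch of the idea: a small residual network that takes the MFCC spectrogram as a one-channel image, and one training step with class-weighted cross-entropy so the rarer faulty class is not ignored. It is written from scratch rather than with torchvision's ResNet-50 to keep the residual structure visible; the width, depth, class weights, and the names `SmallResNet` and `train_step` are illustrative assumptions.

```python
import torch
import torch.nn as nn

class ResidualBlock(nn.Module):
    """conv-BN-ReLU-conv-BN plus an identity shortcut (basic ResNet block)."""
    def __init__(self, channels):
        super().__init__()
        self.body = nn.Sequential(
            nn.Conv2d(channels, channels, 3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
            nn.ReLU(inplace=True),
            nn.Conv2d(channels, channels, 3, padding=1, bias=False),
            nn.BatchNorm2d(channels),
        )
        self.relu = nn.ReLU(inplace=True)

    def forward(self, x):
        return self.relu(x + self.body(x))  # residual connection

class SmallResNet(nn.Module):
    def __init__(self, n_classes=2, width=16):
        super().__init__()
        self.stem = nn.Sequential(
            nn.Conv2d(1, width, 3, padding=1, bias=False),  # 1 input channel
            nn.BatchNorm2d(width),
            nn.ReLU(inplace=True),
        )
        self.blocks = nn.Sequential(ResidualBlock(width), ResidualBlock(width))
        self.pool = nn.AdaptiveAvgPool2d(1)  # handles any number of frames
        self.fc = nn.Linear(width, n_classes)

    def forward(self, x):  # x: (batch, 1, n_mfcc, frames)
        x = self.pool(self.blocks(self.stem(x)))
        return self.fc(torch.flatten(x, 1))

def train_step(model, optimizer, batch, labels, class_weights):
    """One optimization step; class_weights up-weights the minority (faulty) class."""
    model.train()
    loss_fn = nn.CrossEntropyLoss(weight=class_weights)
    optimizer.zero_grad()
    loss = loss_fn(model(batch), labels)
    loss.backward()
    optimizer.step()
    return loss.item()
```

Class weights roughly inversely proportional to class frequency are a common starting point; oversampling faulty recordings is an alternative way to balance the training mix.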

Combining the outputs

Take the ResNet's softmax probability of a fault and the nearest neighbors consensus (the fraction of neighbors labeled faulty) from the frequency-matching approach, and combine them as an ensemble, for example with a weighted average, to balance precision and recall. Adjust the decision threshold depending on whether the Company prefers fewer false alarms or fewer missed faults.
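A minimal sketch of one way to fuse the two outputs, assuming a fixed weighted average; the weight `w_cnn=0.7`, the default threshold, and the function names are illustrative assumptions that would be tuned on validation data.

```python
import numpy as np

def combine_scores(p_cnn, knn_fault_frac, w_cnn=0.7):
    """Weighted average of the CNN fault probability and the KNN faulty-neighbor fraction.

    w_cnn is an assumed weight; in practice it would be chosen on validation data.
    """
    p_cnn = np.asarray(p_cnn, dtype=float)
    knn_fault_frac = np.asarray(knn_fault_frac, dtype=float)
    return w_cnn * p_cnn + (1.0 - w_cnn) * knn_fault_frac

def flag_faults(combined, threshold=0.5):
    """Raise the threshold for fewer false alarms, lower it for fewer missed faults."""
    return np.asarray(combined) >= threshold
```

For example, a CNN probability of 0.9 with 4 of 5 neighbors faulty gives 0.7·0.9 + 0.3·0.8 = 0.87. A stacked alternative fits a small logistic regression on the two scores instead of fixing `w_cnn` by hand.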

Implementation snippet in Python

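```python
import librosa
import numpy as np
import torch
import torch.nn as nn
import torchvision.models as models
from sklearn.neighbors import KNeighborsClassifier

# Example pipeline. some_denoise_function, pick_best_2sec_segment and
# extract_top_peaks are hypothetical helpers, not library functions.
audio, sr = librosa.load("engine_sound.wav", sr=None)  # keep the native sample rate
clean_audio = some_denoise_function(audio)
best_segment = pick_best_2sec_segment(clean_audio, sr)

# Frequency-peak extraction from the magnitude STFT
stft_data = np.abs(librosa.stft(best_segment))  # default n_fft=2048
dominant_freqs = extract_top_peaks(stft_data, sr, n_fft=2048)  # fixed-length vector

# KNN over peak signatures. historical_peaks / historical_labels are placeholders
# for the labeled history (one row of peaks per past recording, 1 = faulty).
knn = KNeighborsClassifier(n_neighbors=5)
knn.fit(historical_peaks, historical_labels)
fault_prediction_knn = knn.predict([dominant_freqs])

# MFCC spectrogram for the CNN (librosa >= 0.10 requires the keyword y=)
mfcc_spectrogram = librosa.feature.mfcc(y=best_segment, sr=sr, n_mfcc=40)

# ImageNet-pretrained ResNet-50 (torchvision >= 0.13 weights API)
resnet = models.resnet50(weights=models.ResNet50_Weights.DEFAULT)
# Adapt to single-channel input: new first conv initialized with the summed RGB filters
rgb_weights = resnet.conv1.weight.detach().sum(dim=1, keepdim=True)
resnet.conv1 = nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3, bias=False)
with torch.no_grad():
    resnet.conv1.weight.copy_(rgb_weights)
# Two-class head (healthy / faulty); it must be fine-tuned on labeled data before use
resnet.fc = nn.Linear(resnet.fc.in_features, 2)
resnet.eval()

# Shape (batch=1, channel=1, n_mfcc=40, frames), then forward pass
input_tensor = torch.tensor(mfcc_spectrogram, dtype=torch.float32)[None, None]
with torch.no_grad():
    logits = resnet(input_tensor)
prediction_cnn = torch.softmax(logits, dim=1)  # [P(healthy), P(faulty)]
```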

The denoising, frequency extraction, KNN classification, and CNN classification steps feed a final decision step that combines both fault scores and flags suspicious engine activity.

Follow-Up Question 1

How do you justify the window selection for the 2-second audio segment?

Answer: Short segments capture stable engine signals without major fluctuations. The zero-crossing rate identifies stretches where the engine sound is steady and unaffected by gear changes or loud transient sounds, which reduces variability and improves the model's ability to detect persistent faults. Two seconds covers many complete engine cycles (at 2000 RPM, about 67 crankshaft revolutions), which is enough to expose repeating mechanical anomalies without imposing excessive computational overhead.
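The cycle count can be checked with a little arithmetic. The sketch below assumes a four-cylinder four-stroke engine, in which each cylinder fires once every two crankshaft revolutions; the cylinder count is not given in the case study, and the function name is hypothetical. It also reports the frequency resolution 1/T that a T-second analysis window provides.

```python
def engine_window_summary(rpm, window_sec=2.0, cylinders=4, strokes=4):
    """Cycle counts and spectral resolution for one analysis window.

    Assumes a piston engine in which each cylinder fires once per
    strokes / 2 crankshaft revolutions (every 2 revolutions for a four-stroke).
    """
    rev_per_sec = rpm / 60.0
    revs_per_firing_cycle = strokes / 2
    return {
        "revolutions": rev_per_sec * window_sec,
        "firing_hz": rev_per_sec * cylinders / revs_per_firing_cycle,
        "freq_resolution_hz": 1.0 / window_sec,
    }
```

At 2000 RPM this gives about 66.7 revolutions and a firing frequency of about 66.7 Hz, so the window holds roughly 133 combustion events, and its 0.5 Hz resolution is far finer than the roughly 33 Hz spacing between crankshaft orders. At an assumed idle speed of 800 RPM, the same window still covers about 27 revolutions.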

Follow-Up Question 2

Why use both a nearest neighbors approach on frequency peaks and a ResNet on MFCC spectrograms, instead of just one method?

Answer: Nearest neighbors on frequency peaks is highly interpretable: each prediction can be traced to specific historical engines with similar peaks, whose diagnoses and refurbishment actions are already known. However, it can miss subtle patterns that CNN architectures detect in the time-frequency representation. The ResNet models complex interactions among harmonics and captures these nuances. Combining the two improves robustness and confidence, because each method compensates for the other's weaknesses.

Follow-Up Question 3

How do you handle background noise that changes over time or recordings with extreme distortion?

Answer: Use adaptive noise estimation on the STFT: estimate a noise profile for each frequency bin over short intervals, then subtract or attenuate it from the main signal. Reject severely distorted recordings with a signal-to-noise ratio (SNR) threshold; if the SNR falls below the cutoff, request another recording so that inference stays reliable. This keeps detection robust under real-world conditions.
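A minimal NumPy sketch of per-bin adaptive attenuation plus SNR gating. It deliberately swaps classic stationary-noise spectral subtraction for a rolling-median transient suppressor: here the engine is the steady component, so a tracker that treats steady energy as noise would remove the engine harmonics themselves. Each bin's steady level is its median over neighboring frames, energy above a multiple of that level is clipped as disturbance, and the ratio of retained to clipped energy serves as the SNR. The context length, ratio, 10 dB cutoff, and function names are illustrative assumptions.

```python
import numpy as np

def suppress_transients(power, context=15, ratio=3.0):
    """power: (bins, frames) STFT power.

    Per bin, the steady (engine) level is a rolling median over +/- context
    frames; energy above ratio x that level is treated as disturbance and clipped.
    """
    n_bins, n_frames = power.shape
    steady = np.empty_like(power)
    for t in range(n_frames):
        lo, hi = max(0, t - context), min(n_frames, t + context + 1)
        steady[:, t] = np.median(power[:, lo:hi], axis=1)
    cleaned = np.minimum(power, ratio * steady)
    disturbance = power - cleaned
    return cleaned, disturbance

def disturbance_snr_db(cleaned, disturbance, eps=1e-12):
    """Retained (engine) energy over clipped (disturbance) energy, in dB."""
    return 10.0 * np.log10((cleaned.sum() + eps) / (disturbance.sum() + eps))

def accept_recording(power, min_snr_db=10.0):
    """Return (accepted?, cleaned power); reject and re-record below the cutoff."""
    cleaned, disturbance = suppress_transients(power)
    return disturbance_snr_db(cleaned, disturbance) >= min_snr_db, cleaned
```

Disturbances that last longer than about `context` frames pull the median up and pass through unclipped, so the context should be sized to the longest horn or chatter burst expected.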

Follow-Up Question 4

What hyperparameters or settings would you prioritize tuning?

Answer: Tune the number of neighbors K in the KNN classifier: too many neighbors over-smooth the decision, and too few make it oversensitive to individual noisy recordings. Adjust the fault-decision thresholds for both the KNN output and the CNN's probability. For the CNN, tune the learning rate, the batch size, and the mix of training data so that faulty and healthy engines are covered in balanced proportions. Choosing these settings on a held-out validation set keeps predictive accuracy high while holding false alarms to an acceptable rate.
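A minimal sketch of threshold tuning under a false-alarm budget: given validation scores (for example, the combined fault score) and labels, choose the lowest threshold whose false-positive rate on healthy engines stays within `max_fpr`, which maximizes recall subject to that constraint. The 2% default budget and the function name are assumptions; the same validation loop can be repeated over a grid of K values for the KNN.

```python
import numpy as np

def pick_threshold(scores, labels, max_fpr=0.02):
    """Lowest threshold (highest recall) whose validation false-positive rate <= max_fpr.

    labels: 1 = faulty, 0 = healthy. Returns (threshold, recall), or (None, 0.0)
    if even the highest score produces too many false alarms.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    healthy = scores[labels == 0]
    best = None
    for t in np.unique(scores)[::-1]:  # candidate thresholds, high to low
        fpr = np.mean(healthy >= t)    # FPR only grows as t decreases
        if fpr > max_fpr:
            break
        best = t
    if best is None:
        return None, 0.0
    recall = float(np.mean(scores[labels == 1] >= best))
    return best, recall
```

Because the false-positive rate can only grow as the threshold falls, the scan can stop at the first threshold that breaks the budget.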

Follow-Up Question 5

What additional features could further improve the system?

Answer: Amplitude-based features can capture loud knocks or misfires that are not purely frequency-based. Engine temperature or accelerometer vibration readings could add complementary signals. Expanding the ground-truth labels to pinpoint specific faults (e.g., tappet noise vs. crankshaft malfunction) makes predictions more specific. Recording at more RPM levels, not just idle and 2000 RPM, also captures issues that appear only under load changes.
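A few amplitude-based features that are standard in vibration and acoustic condition monitoring, sketched with NumPy: crest factor (peak over RMS) and kurtosis rise when sharp knocks appear, and the coefficient of variation of the short-time RMS envelope grows when firing pulses are missing or uneven. Whether these help on this data is an assumption to validate; the frame sizes and the function name are illustrative.

```python
import numpy as np

def amplitude_features(x, frame_len=512, hop=256):
    """Time-domain features sensitive to knocks and misfires."""
    n_frames = 1 + (len(x) - frame_len) // hop
    frame_rms = np.array([
        np.sqrt(np.mean(x[i * hop : i * hop + frame_len] ** 2)) for i in range(n_frames)
    ])
    overall_rms = np.sqrt(np.mean(x ** 2))
    centered = x - x.mean()
    kurtosis = np.mean(centered ** 4) / np.mean(centered ** 2) ** 2  # 3.0 for Gaussian
    return {
        "crest_factor": np.max(np.abs(x)) / overall_rms,  # sqrt(2) for a pure sine
        "kurtosis": kurtosis,                             # 1.5 for a pure sine
        "rms_cv": frame_rms.std() / frame_rms.mean(),     # envelope unevenness
    }
```

These features are cheap to compute on the same 2-second segment and can be appended to the peak vector for the KNN or fed to the ensemble alongside the CNN score.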