ML Case-study Interview Question: Evaluating LLMs for Code Assistance using Cosine Similarity and Iterative Validation


Case-Study question

A large technology enterprise is developing an AI-assisted system for code suggestions and conversational assistance in a multi-tenant development platform. They want a reliable way to validate and test various Large Language Models. The system must scale to thousands of user queries daily, cover multiple domains (code explanation, code generation, vulnerability checks, and beyond), and ensure that fine-tuning or prompt engineering does not degrade performance across different use cases. How would you design a comprehensive evaluation process for choosing and refining models so that they perform reliably across the entire platform? What steps would you take, and how would you handle scoring, risk mitigation, and continuous improvement?

Detailed solution

Creating a representative prompt library

Crafting a prompt library that mimics real-world usage is critical. Relying on generic or public datasets risks poor coverage of domain-specific queries. Generating a corpus reflecting real user needs (e.g., code completion requests, vulnerability queries, or code explanations) ensures accurate testing. Including correct reference answers for each prompt gives a baseline target for evaluating model outputs.
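One way to picture such a library is as a list of typed records, each pairing a prompt with its reference answer and a category label. The sketch below is illustrative only: the `PromptCase` class, its field names, the category labels, and the sample entries are all assumptions, not part of any specific platform.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptCase:
    """One hypothetical entry in the prompt library."""
    prompt_id: str
    category: str           # e.g. "code_generation", "code_explanation"
    prompt: str
    reference_answer: str   # the target answer used for scoring


def coverage_by_category(cases):
    """Count how many prompts the library holds per category."""
    return Counter(case.category for case in cases)


# Made-up sample entries, one per domain
library = [
    PromptCase("gen-001", "code_generation",
               "Write a function that reverses a string.",
               "def reverse(s):\n    return s[::-1]"),
    PromptCase("exp-001", "code_explanation",
               "Explain what `s[::-1]` does in Python.",
               "It returns a reversed copy of the sequence `s`."),
    PromptCase("vul-001", "vulnerability_check",
               "Is building SQL by concatenating user input safe?",
               "No. It allows SQL injection; use parameterized queries."),
]

print(coverage_by_category(library))
```

Checking per-category counts like this is a quick way to spot domains that the library under-represents before any model is scored.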

Establishing a baseline model performance

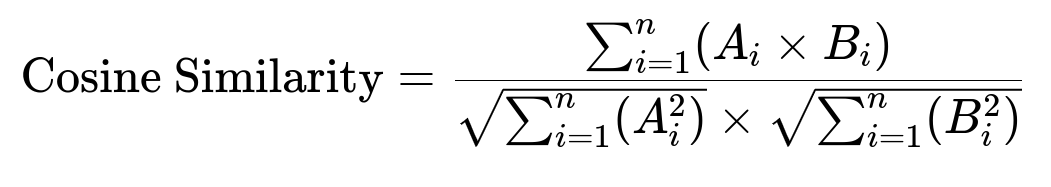

Selecting a few potential foundational models, running them against the prompt library, and comparing outputs to the target answers reveals initial performance. Scoring these responses provides a quantitative baseline. Multiple metrics can capture different aspects of quality. One key metric involves cosine similarity between vector embeddings of the generated text and the reference answer.

A is the embedding vector for the model-generated response. B is the embedding vector for the reference answer. A subscript i denotes the i-th component of the respective vector. Summations are taken over n components in the vectors.

Some organizations also use cross similarity, which measures alignment between sequences via token-level matches, or advanced methods like an LLM-based judge or consensus filtering.

Using Python for scoring

A quick example of how you might implement cosine similarity in Python:

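```python
import numpy as np


def cosine_similarity(vec_a, vec_b):
    """Return the cosine similarity between two 1-D embedding vectors."""
    dot_product = np.dot(vec_a, vec_b)
    norm_a = np.linalg.norm(vec_a)
    norm_b = np.linalg.norm(vec_b)
    if norm_a == 0 or norm_b == 0:
        # The ratio below is undefined when either vector has zero length
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot_product / (norm_a * norm_b)


# Toy 3-dimensional vectors; real embeddings have far more dimensions
reference_vec = np.array([0.2, 0.3, 0.4])
model_output_vec = np.array([0.1, 0.2, 0.5])

score = cosine_similarity(reference_vec, model_output_vec)
print("Cosine Similarity Score:", score)
```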

This approach can be extended to large sets of embeddings for automated batch scoring.
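As a rough sketch of that extension, the function below scores a whole batch at once, assuming the embeddings are stacked as two arrays of shape (num_prompts, dim) with row k of each array belonging to the same prompt. The function name and the `eps` guard are assumptions made for illustration.

```python
import numpy as np


def batch_cosine_similarity(outputs, references, eps=1e-12):
    """Row-wise cosine similarity between two (num_prompts, dim) arrays."""
    outputs = np.asarray(outputs, dtype=float)
    references = np.asarray(references, dtype=float)
    if outputs.shape != references.shape:
        raise ValueError("outputs and references must have the same shape")
    # Dot product of each output row with its matching reference row
    dots = np.sum(outputs * references, axis=1)
    norms = np.linalg.norm(outputs, axis=1) * np.linalg.norm(references, axis=1)
    # eps avoids division by zero; an all-zero embedding then scores 0.0
    return dots / np.maximum(norms, eps)


outputs = np.array([[0.1, 0.2, 0.5], [1.0, 0.0, 0.0]])
references = np.array([[0.2, 0.3, 0.4], [0.0, 1.0, 0.0]])
scores = batch_cosine_similarity(outputs, references)
print(scores)
```

Vectorizing the computation this way avoids a Python-level loop over prompts, which matters once the library grows to thousands of entries.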

Iterating via daily re-validation

Making daily improvements without a structured re-validation process risks overfitting to specific prompts. Retesting all prompts daily measures whether your modifications improved or harmed overall performance. Comparing new scores to the baseline highlights progress or regressions. Persistently low scores on certain prompt types signal the need for targeted prompt engineering or specialized fine-tuning.
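A minimal sketch of the baseline comparison might look like the following. It assumes each validation run yields (category, score) pairs; the function names, the 0.02 tolerance, and the sample numbers are illustrative assumptions.

```python
from collections import defaultdict
from statistics import mean


def mean_by_category(scores):
    """scores: iterable of (category, score) pairs -> {category: mean score}."""
    grouped = defaultdict(list)
    for category, score in scores:
        grouped[category].append(score)
    return {category: mean(values) for category, values in grouped.items()}


def find_regressions(baseline, current, tolerance=0.02):
    """Return categories whose mean score dropped by more than `tolerance`."""
    base_means = mean_by_category(baseline)
    curr_means = mean_by_category(current)
    regressions = {}
    for category, base in base_means.items():
        if category in curr_means and base - curr_means[category] > tolerance:
            regressions[category] = (base, curr_means[category])
    return regressions


# Made-up scores for two validation runs
baseline = [("code_generation", 0.80), ("code_generation", 0.90),
            ("code_explanation", 0.70)]
current = [("code_generation", 0.75), ("code_generation", 0.80),
           ("code_explanation", 0.78)]

print(find_regressions(baseline, current))
```

In this made-up run, code explanation improved while code generation regressed, which is the kind of hidden trade-off that a single overall average would mask.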

Focusing on outlier cases

Certain prompts repeatedly cause errors. Creating a smaller dataset emphasizing these difficult cases helps rapidly test prompt tweaks or model tuning. Once performance improves for these outliers, recheck the broader library to ensure no regressions elsewhere. This cycle repeats until scores for all domains reach stable, acceptable levels.
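One possible way to assemble that smaller dataset is to pick prompts that fall below a score threshold in several validation runs. The sketch below assumes a per-prompt score history is available; the threshold, the failure count, and the prompt IDs are made up for illustration.

```python
def select_outliers(score_history, threshold=0.6, min_failures=2):
    """Return IDs of prompts that scored below `threshold` in at least
    `min_failures` runs, ordered from lowest to highest mean score.

    score_history: {prompt_id: [score per validation run]}
    """
    outliers = []
    for prompt_id, scores in score_history.items():
        failures = sum(1 for s in scores if s < threshold)
        if failures >= min_failures:
            outliers.append((sum(scores) / len(scores), prompt_id))
    return [prompt_id for _, prompt_id in sorted(outliers)]


# Made-up history over three validation runs
history = {
    "gen-001": [0.91, 0.88, 0.93],
    "vul-007": [0.42, 0.55, 0.61],
    "exp-013": [0.30, 0.35, 0.28],
}

print(select_outliers(history))
```

Requiring repeated failures, rather than a single low score, keeps one-off noisy generations out of the hard-case set.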

Controlling prompt engineering

Prompt engineering helps shape a model’s responses, but blind edits risk hidden trade-offs. Tracking response changes by regularly re-scoring a broad set of prompts prevents inadvertently degrading other tasks. Daily or frequent re-validation keeps engineering efforts aligned with the platform’s overall goals.

Maintaining data privacy

Some projects do not use customer data for model training. Substituting a synthetic or anonymized prompt library matching domain needs still provides robust tests. Storing only minimal user data or using carefully curated input-output pairs avoids privacy concerns while preserving coverage.

Ensuring dynamic model selection

Multiple vendors may supply foundation models, each with unique strengths. Periodically testing all candidate models on the same prompt library surfaces the model best aligned with your objectives. Keeping your evaluation pipelines flexible allows switching or mixing models if cost, latency, or performance factors change.
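To make the trade-off concrete, candidates could be ranked by a weighted score that rewards quality and penalizes cost and latency. Everything in this sketch is an assumption: the model names, metric keys, weights, and numbers are invented, and the linear weighting is only one of many reasonable choices.

```python
def rank_models(candidates, w_quality=0.6, w_cost=0.2, w_latency=0.2):
    """Rank candidates by quality minus normalized cost and latency penalties.

    Assumes cost and latency are positive; both are scaled by the largest
    value among the candidates so that the penalties lie in (0, 1].
    """
    max_cost = max(c["cost_per_1k_requests"] for c in candidates)
    max_latency = max(c["p95_latency_s"] for c in candidates)
    ranked = []
    for c in candidates:
        score = (
            w_quality * c["mean_similarity"]
            - w_cost * c["cost_per_1k_requests"] / max_cost
            - w_latency * c["p95_latency_s"] / max_latency
        )
        ranked.append((score, c["name"]))
    return sorted(ranked, reverse=True)


# Hypothetical results from running both models on the same prompt library
candidates = [
    {"name": "model_a", "mean_similarity": 0.86,
     "cost_per_1k_requests": 12.0, "p95_latency_s": 2.4},
    {"name": "model_b", "mean_similarity": 0.82,
     "cost_per_1k_requests": 4.0, "p95_latency_s": 1.2},
]

for score, name in rank_models(candidates):
    print(f"{name}: {score:.3f}")
```

With these made-up numbers the slightly less accurate model ranks first because it is much cheaper and faster; changing the weights changes the outcome, so they should reflect the platform's actual priorities.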

Follow-up questions and in-depth answers

What if the model produces inconsistent outputs across multiple runs for the same prompt?

Stochastic outputs are natural for LLMs. Lowering the temperature or using deterministic (greedy) decoding reduces variance but can limit the variety of responses. For critical tasks, use deterministic decoding or a temperature near zero so that outputs are as reproducible as possible, keeping in mind that this does not always guarantee identical outputs across runs. For tasks where variety is beneficial, a moderate temperature is acceptable, but performance should then be measured as an average over multiple runs per prompt.
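Averaging over multiple runs can be sketched as follows, assuming each prompt has been generated and scored several times. The function names, the 0.05 spread limit, and the sample scores are assumptions for illustration.

```python
from statistics import mean, pstdev


def summarize_runs(run_scores):
    """Summarize the scores of one prompt across repeated generations."""
    return {
        "mean": mean(run_scores),
        "stdev": pstdev(run_scores),  # population standard deviation
        "min": min(run_scores),
    }


def is_unstable(run_scores, max_stdev=0.05):
    """Flag a prompt whose scores vary more than `max_stdev` across runs."""
    return pstdev(run_scores) > max_stdev


# Made-up scores from four generations of the same prompt
stable = [0.90, 0.91, 0.89, 0.90]
noisy = [0.95, 0.40, 0.88, 0.55]

print(summarize_runs(stable), is_unstable(stable))
print(summarize_runs(noisy), is_unstable(noisy))
```

Reporting the spread and the worst case alongside the mean helps separate prompts that are reliably mediocre from prompts that are occasionally very wrong.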

How do you mitigate model hallucinations in high-stakes scenarios?

Injecting more detailed instructions or context in prompts reduces the chance of spurious answers. Using specialized fine-tuning or a separate verification system (such as a rule-based validator or a second AI model performing factual checks) provides additional safeguards.
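As one small example of a rule-based validator, a suggested Python snippet can be parsed and scanned with the standard-library `ast` module before it is shown to a user. The function name and the list of banned calls are assumptions; passing these checks only rules out a few obvious problems and says nothing about whether the code is correct.

```python
import ast


def validate_python_suggestion(code, banned_calls=("eval", "exec")):
    """Return a list of problems found; an empty list means the checks passed."""
    try:
        tree = ast.parse(code)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg}"]
    problems = []
    for node in ast.walk(tree):
        # Only direct calls by name (e.g. eval(...)) are detected here
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in banned_calls:
                problems.append(f"banned call: {node.func.id}")
    return problems


print(validate_python_suggestion("def add(a, b):\n    return a + b"))
print(validate_python_suggestion("result = eval(user_input)"))
print(validate_python_suggestion("def broken(:"))
```

A validator like this is cheap enough to run on every generated response, leaving the more expensive second-model check for responses that pass the basic rules.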

How do you scale daily re-validation with thousands of prompts?

Running full-scale tests on every code commit is expensive. Implementing a two-tier system helps. A smaller subset runs frequently for quick checks. The entire prompt library runs on a scheduled basis. Parallelization across multiple GPU or CPU instances further reduces run time.
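The two-tier idea can be sketched with a stratified sample for the quick tier and a thread pool for parallel evaluation. The function names are hypothetical, and `fake_evaluate` is a stand-in for the real call that queries a model and scores its response; threads are assumed here because such calls are typically I/O-bound.

```python
import random
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def stratified_subset(cases, per_category, seed=0):
    """Sample up to `per_category` prompt IDs from each category.

    cases: list of (prompt_id, category) pairs
    """
    rng = random.Random(seed)  # fixed seed keeps the quick tier stable
    by_category = defaultdict(list)
    for prompt_id, category in cases:
        by_category[category].append(prompt_id)
    subset = []
    for category in sorted(by_category):
        ids = by_category[category]
        subset.extend(rng.sample(ids, min(per_category, len(ids))))
    return subset


def run_parallel(prompt_ids, evaluate, max_workers=8):
    """Evaluate prompts concurrently and return {prompt_id: score}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(prompt_ids, pool.map(evaluate, prompt_ids)))


def fake_evaluate(prompt_id):
    """Placeholder scorer; a real one would call the model and score it."""
    return len(prompt_id) / 10.0


cases = ([(f"gen-{i:03d}", "code_generation") for i in range(50)]
         + [(f"vul-{i:03d}", "vulnerability_check") for i in range(5)])

quick = stratified_subset(cases, per_category=3)  # frequent, cheap tier
scores = run_parallel(quick, fake_evaluate)
print(scores)
```

Sampling per category, rather than uniformly, keeps small domains such as vulnerability checks represented in the quick tier; the scheduled full run would simply pass every prompt ID to `run_parallel`.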

How do you handle potential biases in the model’s outputs?

Including a range of prompts that cover various coding styles, domains, and user demographics helps uncover bias patterns. Updating the prompt library to reflect real usage scenarios and systematically examining the model’s answers across subgroups or code contexts helps isolate bias. Iterative prompt engineering or additional data augmentation reduces bias over time.

How do you manage costs when repeatedly calling expensive LLM APIs?

Caching intermediate results and embeddings cuts down on repeated costs. Running smaller subsets for daily checks is cheaper. Negotiating volume-based pricing with vendors or adopting open-source models where feasible can also reduce expenditures. Continually monitoring usage patterns ensures you only run large-scale tests when necessary.
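A caching layer for embeddings might look like the in-memory sketch below. The class name, the model label, and `fake_embed` (a placeholder for a paid embedding call) are assumptions; a production version would likely persist entries to disk or a shared store.

```python
import hashlib


class EmbeddingCache:
    """In-memory cache keyed by a hash of the model name and the input text."""

    def __init__(self, embed_fn):
        self._embed_fn = embed_fn
        self._store = {}
        self.misses = 0  # number of times the underlying function was called

    def get(self, model_name, text):
        # Include the model name so a model change never reuses stale vectors
        raw_key = f"{model_name}\x00{text}".encode("utf-8")
        key = hashlib.sha256(raw_key).hexdigest()
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._embed_fn(text)
        return self._store[key]


def fake_embed(text):
    """Placeholder for a real (billable) embedding API call."""
    return [float(len(text)), float(text.count(" "))]


cache = EmbeddingCache(fake_embed)
cache.get("embedder-v1", "reverse a string")
cache.get("embedder-v1", "reverse a string")   # served from the cache
cache.get("embedder-v1", "explain this loop")
print("embedding calls made:", cache.misses)
```

Reference answers rarely change between validation runs, so their embeddings are natural candidates for caching; only the newly generated responses need fresh embedding calls.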

How do you ensure acceptance of AI outputs by end users?

Providing explanations alongside suggestions or a step-by-step reasoning feature builds user trust. Logging user feedback and incorporating it into iterative model improvement cycles addresses user concerns. Monitoring acceptance rates and analyzing user adjustments highlight areas needing more robust AI output or improved prompt construction.

How can you adapt this framework for code vulnerability detection use cases?

Introducing vulnerability-specific prompts with correct answers referencing known secure coding patterns or official documentation ensures the model is tested on relevant security scenarios. Weighing outputs on correctness and consistency with guidelines helps maintain a strong security posture. Gradually expanding the vulnerability dataset tests new threat patterns or compliance requirements.

How do you manage zero-shot or new tasks that were not in your original prompt library?

Adding a few provisional prompts for each new task as soon as it appears keeps coverage broad. Expanding the prompt library to incorporate domain experts’ reference answers then supplies valid performance targets. If no reference answers exist, semi-supervised methods or manual evaluations become necessary until enough labeled data accumulates.

How do you measure business impact or Return on Investment for these AI features?

Monitoring productivity metrics (like code review turnaround time or average resolution times) helps track real-world gains. Tracking user satisfaction and usage patterns of AI-driven features captures adoption and perceived benefit. A/B tests measuring differences in developer performance between AI-enabled and non-AI workflows clarify the business value.
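For the A/B comparison, one common starting point is Welch's t statistic for the difference in means between the two groups. The sketch below uses invented review turnaround times, and the function name is an assumption. The statistic alone is not a p-value; turning it into one also requires the Welch degrees of freedom and a t-distribution, which a statistics library would normally provide.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(control, treatment):
    """Welch's t statistic for the difference in means (treatment - control)."""
    standard_error = sqrt(
        variance(control) / len(control) + variance(treatment) / len(treatment)
    )
    return (mean(treatment) - mean(control)) / standard_error


# Made-up code review turnaround times in hours
control_hours = [10.0, 12.0, 11.0, 13.0, 9.0]   # non-AI workflow
assisted_hours = [8.0, 9.0, 7.0, 10.0, 8.0]     # AI-enabled workflow

t_stat = welch_t(control_hours, assisted_hours)
print(f"mean difference: {mean(assisted_hours) - mean(control_hours):.2f} hours")
print(f"Welch t statistic: {t_stat:.2f}")
```

A negative statistic here means the AI-enabled group had shorter turnaround times on average; with samples this small, the result should be treated as a prompt for a larger experiment rather than as evidence of impact.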