ML Case-study Interview Question: Tiered Recipe Recommendations: Using 2-Tower Deep Learning for Personalized Food Delivery


Case-Study question

A food-delivery business releases a set of new recipes every week. Their team wants to optimize the way customers see these recipes. They need a global model for potential customers, a specialized model for brand-new customers, and a more advanced model for repeat customers with enough purchase history. They face cold-start issues for new recipes, an exploration vs exploitation dilemma for diverse tastes, and the challenge of capturing customer preferences quickly. Propose a detailed plan to design these recommendation systems. Explain how you would handle popularity bias, coverage for vegetarian/vegan segments, explicit user feedback, and a 2-tower deep learning architecture for personalizing recipe suggestions.

Provide a thorough end-to-end data science approach. Address data ingestion, feature engineering, model training, evaluation, practical deployment, and post-launch performance tracking. Also incorporate ways to handle system constraints, such as new recipes launching every week and scaling to millions of users.

Detailed solution

Overview of the problem

The business requires multiple recommendation layers to serve different user segments. A global popularity-based ranking is not enough because it may ignore certain dietary preferences and lacks personalization. We need three models:

A global recommender that ranks recipes for new or potential customers with minimal known preferences.

A junior recommender that instantly adapts to brand-new customers who have placed their first order.

A senior recommender that uses more complex methods once the user has a larger purchase history.

Global recommender

This model surfaces the most popular or high-utility recipes across the entire platform. It ensures prospective users see a range of cuisines, dietary preferences, and best-sellers. Purely ranking by historical order counts will bias the top recipes toward the largest segment (e.g. meat-based). To address coverage, incorporate each recipe’s popularity within its own diet type or taste profile. Re-rank recipes so multiple diets are visible. This helps convey variety to new prospects.
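The re-ranking step can be sketched roughly as follows. This is a minimal sketch that assumes each recipe comes with a single diet tag and an order count. The function name and data shape are hypothetical. Each diet gets its own queue sorted by popularity within that diet. The final list then visits the diets round-robin, starting with the largest segment, so smaller diets still reach the top slots.

```python
from collections import defaultdict


def diet_aware_ranking(recipes, k):
    """recipes: list of (recipe_id, diet, order_count). Returns up to k recipe IDs."""
    by_diet = defaultdict(list)
    for recipe_id, diet, orders in recipes:
        by_diet[diet].append((orders, recipe_id))

    # Popularity within each diet: every diet gets its own queue, best-sellers first
    queues = {diet: [rid for _, rid in sorted(items, reverse=True)] for diet, items in by_diet.items()}

    # Visit diets round-robin, largest segment first, so smaller diets still reach the top slots
    diet_order = sorted(by_diet, key=lambda d: sum(o for o, _ in by_diet[d]), reverse=True)
    ranking = []
    while len(ranking) < k and any(queues.values()):
        for diet in diet_order:
            if queues[diet] and len(ranking) < k:
                ranking.append(queues[diet].pop(0))
    return ranking


catalog = [
    ("beef_tacos", "meat", 900),
    ("chicken_curry", "meat", 850),
    ("pork_ramen", "meat", 800),
    ("veg_lasagna", "vegetarian", 300),
    ("mushroom_risotto", "vegetarian", 250),
    ("tofu_bowl", "vegan", 120),
]
print(diet_aware_ranking(catalog, 4))
# ['beef_tacos', 'veg_lasagna', 'tofu_bowl', 'chicken_curry']
```

Ranking purely by order count would instead return three meat recipes followed by `veg_lasagna`, and it would omit vegan recipes entirely.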

Junior recommender

When a customer creates their first order, record which recipes they select and treat that selection as an explicit signal of preference. To generate second-box recommendations, define similarity among recipes by computing recipe embeddings from co-purchase patterns or attribute overlap. Examples include how often two recipes are ordered together, their protein source, cuisine origin, or time-to-cook. If a user picks vegetarian recipes, rank vegetarian options higher while still including some exploration. This balances the exploration vs exploitation trade-off: the aim is to capture the user’s immediate tastes without missing unexpected but potentially desirable recipes.
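The co-purchase part of this similarity can be sketched as follows. This is a minimal sketch that assumes past boxes are available as sets of recipe IDs. It uses cosine similarity over co-occurrence counts, co(i, j) / sqrt(n_i · n_j), which is one common choice and not necessarily the one the source intends. Candidates for the second box are scored by their summed similarity to the first-box picks. All names are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def cooccurrence_similarity(orders):
    """orders: list of sets of recipe IDs ordered together in one box."""
    item_counts = Counter()
    pair_counts = Counter()
    for box in orders:
        item_counts.update(box)
        for a, b in combinations(sorted(box), 2):
            pair_counts[(a, b)] += 1
    sim = {}
    for (a, b), co in pair_counts.items():
        # Cosine similarity of the two recipes' order-occurrence vectors
        s = co / math.sqrt(item_counts[a] * item_counts[b])
        sim[(a, b)] = sim[(b, a)] = s
    return sim


def second_box_scores(first_box, candidates, sim):
    """Rank candidates by summed similarity to the recipes chosen in the first box."""
    scores = {c: sum(sim.get((c, p), 0.0) for p in first_box) for c in candidates if c not in first_box}
    return sorted(scores, key=scores.get, reverse=True)


orders = [
    {"veg_lasagna", "mushroom_risotto"},
    {"veg_lasagna", "mushroom_risotto", "tofu_bowl"},
    {"beef_tacos", "chicken_curry"},
    {"veg_lasagna", "tofu_bowl"},
]
sim = cooccurrence_similarity(orders)
print(second_box_scores({"mushroom_risotto"}, ["tofu_bowl", "veg_lasagna", "beef_tacos"], sim))
# ['veg_lasagna', 'tofu_bowl', 'beef_tacos']
```

Attribute overlap (protein, cuisine, cook time) could be blended into `sim` with a weighted sum. That weighting would be a tuning choice.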

Senior recommender

After a customer has placed multiple orders, build a 2-tower model that learns user embeddings and recipe embeddings jointly. Represent each user by their historical behavior (recipes chosen, dietary tags, cuisines) and represent each recipe by its attributes (protein type, carbs, diet tags, region). The 2-tower neural network projects both users and recipes into a shared embedding space and combines them to predict affinity.

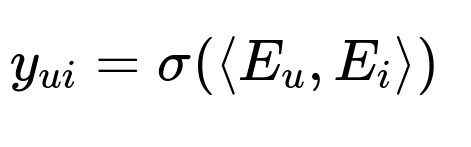

Where:

y_{ui} is the predicted affinity of user u for recipe i

E_u is the embedding of the user

E_i is the embedding of the recipe

σ(·) is the sigmoid function, which maps the dot product to a probability between 0 and 1

<E_u, E_i> is the dot product of user and recipe embeddings

The model learns these embeddings from a large dataset of user-recipe interactions. Its output is a probability score for how likely a user is to order a recipe. Re-rank the top predictions, possibly mixing in diversity or novelty to prevent monotony.
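One way to mix in diversity is Maximal Marginal Relevance (MMR). This technique is swapped in here as an example and does not come from the source. MMR greedily picks the recipe that maximizes λ · score − (1 − λ) · (maximum similarity to recipes already picked). The sketch assumes model scores and a pairwise similarity function are already available. `lam` is a hypothetical tuning knob.

```python
def mmr_rerank(scores, similarity, k, lam=0.7):
    """scores: dict recipe_id -> predicted affinity; similarity(a, b) -> value in [0, 1]."""
    selected = []
    remaining = sorted(scores)  # sorted so ties break deterministically
    while remaining and len(selected) < k:
        best = max(
            remaining,
            key=lambda c: lam * scores[c]
            - (1 - lam) * max((similarity(c, s) for s in selected), default=0.0),
        )
        selected.append(best)
        remaining.remove(best)
    return selected


scores = {"chicken_curry": 0.9, "chicken_tikka": 0.88, "veg_lasagna": 0.7}
cuisine = {"chicken_curry": "indian", "chicken_tikka": "indian", "veg_lasagna": "italian"}
same_cuisine = lambda a, b: 1.0 if cuisine[a] == cuisine[b] else 0.0
print(mmr_rerank(scores, same_cuisine, k=2))
# ['chicken_curry', 'veg_lasagna']
```

A plain top-2 would show two near-identical Indian chicken dishes. MMR trades a little predicted affinity for a more varied box.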

Implementation details

Data ingestion merges user profile data (e.g. dietary preferences, past orders) with recipe information (cuisine tags, protein type, cooking duration, newness) and stores the result in a distributed data warehouse. Transform features using one-hot encodings for categorical tags and text embeddings for recipe descriptions. Split the data into train, validation, and test sets, preferably by time so that evaluation mimics predicting future weeks. Order logs contain only positive examples, so label from implicit feedback: generate negatives by sampling recipes the user did not purchase. Train the 2-tower model with minibatch gradient descent in a framework such as TensorFlow or PyTorch, and retrain regularly on the latest data. Deploy a real-time inference service that, given a user ID, retrieves the user embedding, computes its dot product with each candidate recipe embedding, and sorts recipes by the resulting score.
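The negative-sampling step can be sketched as follows. This is a minimal sketch that assumes orders are logged as (user_id, recipe_id) pairs and that the menu on offer is known. Every observed order becomes a positive example. For each positive, a few menu recipes that the user never ordered are sampled uniformly as negatives. `num_neg` and the function name are hypothetical.

```python
import random


def build_training_triples(orders, menu, num_neg=2, seed=0):
    """orders: list of (user_id, recipe_id); menu: list of recipe IDs on offer.
    Returns (user_id, recipe_id, label) triples: 1 = ordered, 0 = sampled negative."""
    rng = random.Random(seed)
    ordered_by_user = {}
    for user, recipe in orders:
        ordered_by_user.setdefault(user, set()).add(recipe)

    triples = []
    for user, recipe in orders:
        triples.append((user, recipe, 1))
        # Negatives: menu items this user never ordered
        pool = [r for r in menu if r not in ordered_by_user[user]]
        for neg in rng.sample(pool, min(num_neg, len(pool))):
            triples.append((user, neg, 0))
    return triples


triples = build_training_triples([(1, "a"), (1, "b"), (2, "c")], ["a", "b", "c", "d", "e"])
print(len(triples))
# 9
```

Uniform sampling is the simplest option. Sampling negatives in proportion to popularity is a common alternative that makes the model work harder to separate popular recipes from truly preferred ones.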

Sample code snippet

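```python
import torch
import torch.nn as nn
import torch.optim as optim


class TwoTowerRecommender(nn.Module):
    """User and recipe towers map IDs into a shared space; affinity is their dot product."""

    def __init__(self, num_users, num_recipes, emb_dim, hidden_dim=64):
        super().__init__()
        # User tower: ID embedding followed by a small MLP
        self.user_tower = nn.Sequential(
            nn.Embedding(num_users, emb_dim),
            nn.Linear(emb_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, emb_dim),
        )
        # Recipe tower: same structure, separate weights
        self.recipe_tower = nn.Sequential(
            nn.Embedding(num_recipes, emb_dim),
            nn.Linear(emb_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, emb_dim),
        )

    def forward(self, user_ids, recipe_ids):
        u = self.user_tower(user_ids)      # E_u: (batch, emb_dim)
        r = self.recipe_tower(recipe_ids)  # E_i: (batch, emb_dim)
        # <E_u, E_i> as a raw logit; the sigmoid is applied inside the loss
        return (u * r).sum(dim=1)


model = TwoTowerRecommender(num_users=10000, num_recipes=5000, emb_dim=32)
criterion = nn.BCEWithLogitsLoss()  # sigmoid + binary cross-entropy, numerically stable
optimizer = optim.Adam(model.parameters(), lr=1e-3)

# Placeholder data: replace with real (user, recipe, ordered?) triples,
# including sampled negatives for recipes the user did not order
user_batch = torch.randint(0, 10000, (256,))
recipe_batch = torch.randint(0, 5000, (256,))
labels = torch.randint(0, 2, (256,)).float()

for epoch in range(10):
    optimizer.zero_grad()
    logits = model(user_batch, recipe_batch)
    loss = criterion(logits, labels)
    loss.backward()
    optimizer.step()

# Predicted order probability y_ui = sigmoid(<E_u, E_i>)
with torch.no_grad():
    probs = torch.sigmoid(model(user_batch, recipe_batch))
```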

During inference, rank candidate recipes by the output score. We can apply final re-ranking or business rules to ensure coverage or handle brand-new items.
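One such business rule is a coverage quota. This is a minimal sketch that assumes each candidate already has a model score and a set of diet tags. It takes the top-k recipes by score. If fewer than `min_count` of them carry `required_tag`, it swaps out the lowest-scoring untagged recipes for the best tagged recipes that missed the cut. The function name and quota parameters are hypothetical.

```python
def topk_with_quota(scores, tags, k, required_tag, min_count):
    """scores: dict recipe_id -> score; tags: dict recipe_id -> set of tags."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    top = ranked[:k]
    have = sum(required_tag in tags[r] for r in top)
    # Best-scoring tagged recipes that missed the cut, in score order
    extras = [r for r in ranked[k:] if required_tag in tags[r]]
    # Replace the lowest-scoring untagged recipes first
    for i in range(len(top) - 1, -1, -1):
        if have >= min_count or not extras:
            break
        if required_tag not in tags[top[i]]:
            top[i] = extras.pop(0)
            have += 1
    return sorted(top, key=scores.get, reverse=True)


scores = {"beef": 0.9, "chicken": 0.8, "pork": 0.7, "lamb": 0.6, "tofu": 0.5, "lentil": 0.4}
tags = {
    "beef": {"meat"}, "chicken": {"meat"}, "pork": {"meat"}, "lamb": {"meat"},
    "tofu": {"vegetarian", "vegan"}, "lentil": {"vegetarian"},
}
print(topk_with_quota(scores, tags, 4, "vegetarian", 2))
# ['beef', 'chicken', 'tofu', 'lentil']
```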

Handling user feedback

Collect explicit user choices via “this-or-that” recipe battles, and convert each reply into a preference pair (preferred recipe, rejected recipe) for the recommender to learn from. Also track implicit feedback from order logs and browsing patterns. For new recipes, rely on content-based attributes until interaction data accumulates. This addresses cold-start as long as the towers take attribute features (not only IDs) as input. In that case, user and recipe embeddings can be computed from attribute-level signals even when no user-recipe interactions exist yet.
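Turning “this-or-that” answers into a training signal can be sketched as follows. Each battle becomes a (user, preferred, rejected) pair. The sketch assumes a pairwise loss in the style of Bayesian Personalized Ranking (BPR): loss = −log σ(s_pref − s_rej). This loss is an illustrative choice and is not stated in the source. It penalizes the model whenever the rejected recipe scores close to or above the preferred one. The scores are dot products of toy embeddings, and all names are hypothetical.

```python
import math


def battles_to_pairs(battles):
    """battles: list of (user_id, recipe_a, recipe_b, choice) where choice is 'a' or 'b'."""
    pairs = []
    for user, a, b, choice in battles:
        preferred, rejected = (a, b) if choice == "a" else (b, a)
        pairs.append((user, preferred, rejected))
    return pairs


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def bpr_loss(pairs, user_emb, recipe_emb):
    """Mean of -log sigmoid(<E_u, E_pref> - <E_u, E_rej>) over all preference pairs."""
    total = 0.0
    for user, pref, rej in pairs:
        diff = dot(user_emb[user], recipe_emb[pref]) - dot(user_emb[user], recipe_emb[rej])
        total += math.log1p(math.exp(-diff))  # equals -log(sigmoid(diff))
    return total / len(pairs)


pairs = battles_to_pairs([(7, "veg_lasagna", "beef_tacos", "a")])
user_emb = {7: [1.0, 0.0]}
recipe_emb = {"veg_lasagna": [1.0, 0.0], "beef_tacos": [0.0, 1.0]}
print(round(bpr_loss(pairs, user_emb, recipe_emb), 4))
# 0.3133
```

In a real model the same loss would be minimized with respect to the tower parameters, alongside the order-based loss.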

Embedding insights

Project the high-dimensional embeddings to two dimensions with t-SNE or UMAP and plot them to see user clusters. Group them by dietary patterns or cuisine tastes. Spot unusual patterns or confirm natural segments (like vegetarians or fish lovers). Monitor embeddings over time to see if tastes drift.
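The drift check can be sketched as follows. This is a minimal sketch that assumes user embeddings are snapshotted at each retraining and keyed by user ID. It computes the cosine distance between a user's old and new vectors and flags users above a hypothetical `threshold`. One caveat applies: embedding spaces from independently initialized training runs are not aligned. These distances are only meaningful if the new run is warm-started from the previous weights, or if the two spaces are aligned first.

```python
import math


def cosine_distance(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm if norm else 1.0


def drifted_users(old_snapshot, new_snapshot, threshold=0.3):
    """Return IDs of users whose embedding moved more than `threshold` in cosine distance."""
    return sorted(
        user for user in old_snapshot.keys() & new_snapshot.keys()
        if cosine_distance(old_snapshot[user], new_snapshot[user]) > threshold
    )


old = {1: [1.0, 0.0], 2: [1.0, 0.0]}
new = {1: [0.9, 0.1], 2: [0.0, 1.0]}
print(drifted_users(old, new))
# [2]
```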

Operational scaling

Retrain the models weekly or daily. Cache real-time inferences for large campaigns. Keep track of new items weekly and populate their embeddings via content-based features. Update user embeddings after each box purchase. Store everything in a scalable data pipeline. Automate checks for performance metrics like coverage, average rating, and conversion.

Possible follow-up questions

How do you decide which recipes to present for brand-new customers with zero prior orders?

To address zero prior orders, rely on a popularity-based model that includes coverage constraints. Each diet category has a baseline popularity score. Combine these popularity scores with attributes or user registration data if available (e.g. self-declared dietary preference). This ensures the user sees items that broadly match their potential preferences.
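The steps above can be sketched as follows. This is a minimal sketch that assumes each recipe has a diet tag and an order count, and that sign-up may capture a self-declared diet. The baseline score is popularity within the recipe's own diet (its count divided by that diet's top count), so small segments are not drowned out. Recipes matching the declared diet are multiplied by a hypothetical `boost` factor.

```python
def zero_order_ranking(recipes, declared_diet=None, boost=1.5):
    """recipes: list of (recipe_id, diet, order_count). Returns recipe IDs, best first."""
    diet_max = {}
    for _, diet, orders in recipes:
        diet_max[diet] = max(diet_max.get(diet, 0), orders)

    def score(item):
        _, diet, orders = item
        # Baseline popularity relative to the best-seller of the same diet
        base = orders / diet_max[diet] if diet_max[diet] else 0.0
        return base * boost if diet == declared_diet else base

    return [recipe_id for recipe_id, _, _ in sorted(recipes, key=score, reverse=True)]


recipes = [
    ("beef_tacos", "meat", 900),
    ("chicken_curry", "meat", 600),
    ("veg_lasagna", "vegetarian", 300),
    ("mushroom_risotto", "vegetarian", 150),
]
print(zero_order_ranking(recipes, declared_diet="vegetarian"))
# ['veg_lasagna', 'beef_tacos', 'mushroom_risotto', 'chicken_curry']
```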

How do you handle exploration vs exploitation for customers who only choose one type of cuisine?

Include a small fraction of recipes that do not match the user’s past orders, ranked below the highly relevant items. This ensures some variety. Over several recommendation cycles, gather fresh signals from these exploration items. For instance, if the user tries a cuisine outside their usual range, that order updates their embedding to reflect broader tastes. If they ignore it, the system learns that this preference is unlikely.
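Reserving exploration slots can be sketched as follows. This is a minimal sketch that assumes a list of in-profile recipes ranked by model score and a pool of out-of-profile recipes. Most slots are filled by relevance, and a few randomly chosen out-of-profile recipes are placed at the end. `explore_frac` is a hypothetical tuning parameter. Bandit methods such as Thompson sampling are a more adaptive alternative to a fixed fraction.

```python
import random


def with_exploration(relevant, out_of_profile, k, explore_frac=0.2, seed=None):
    """relevant: recipe IDs ranked by model score; out_of_profile: recipes outside the usual cuisines."""
    rng = random.Random(seed)
    n_explore = min(len(out_of_profile), max(1, round(k * explore_frac)))
    exploit = [r for r in relevant if r not in out_of_profile][: k - n_explore]
    explore = rng.sample(out_of_profile, n_explore)
    # Exploration items go last so they rank below the highly relevant ones
    return exploit + explore


relevant = ["thai_curry", "pad_thai", "green_curry", "tom_yum", "massaman"]
out_of_profile = ["veg_lasagna", "beef_tacos", "sushi_bowl"]
print(with_exploration(relevant, out_of_profile, k=5, seed=0))
```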

How do you address the cold-start problem for new recipes that have no order history?

Embed them via recipe metadata such as protein type, cuisine, approximate nutritional profile, and textual descriptions. Predict similarity with older items. When a new recipe is added, initialize its embedding from those attributes. Once real orders accumulate, fine-tune. This shortens ramp-up time for brand-new items.
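Attribute-based initialization can be sketched as follows. This is a minimal sketch that assumes each recipe has a set of attribute tags and that existing recipes already have trained embeddings. It finds the `k` existing recipes with the highest Jaccard overlap of tags and averages their embeddings, weighted by that overlap. This is one simple choice, not necessarily the source's. A content tower that maps attributes directly to an embedding is the learned alternative. All names are hypothetical.

```python
def jaccard(a, b):
    return len(a & b) / len(a | b) if a | b else 0.0


def init_new_recipe_embedding(new_tags, catalog_tags, catalog_emb, k=3):
    """new_tags: set of tags; catalog_tags: id -> set of tags; catalog_emb: id -> list of floats."""
    neighbors = sorted(catalog_tags, key=lambda r: jaccard(new_tags, catalog_tags[r]), reverse=True)[:k]
    weights = [jaccard(new_tags, catalog_tags[r]) for r in neighbors]
    total = sum(weights)
    dim = len(next(iter(catalog_emb.values())))
    if total == 0:
        return [0.0] * dim  # no attribute overlap: fall back to a neutral vector
    return [sum(w * catalog_emb[r][d] for w, r in zip(weights, neighbors)) / total for d in range(dim)]


catalog_tags = {
    "veg_lasagna": {"vegetarian", "italian", "pasta"},
    "mushroom_risotto": {"vegetarian", "italian", "rice"},
    "beef_tacos": {"meat", "mexican"},
}
catalog_emb = {"veg_lasagna": [1.0, 0.0], "mushroom_risotto": [0.0, 1.0], "beef_tacos": [-1.0, -1.0]}
print(init_new_recipe_embedding({"vegetarian", "italian", "gnocchi"}, catalog_tags, catalog_emb))
# [0.5, 0.5]
```

Once real orders accumulate, this vector serves as the starting point for fine-tuning rather than a random initialization.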

How do you evaluate success?

Compute coverage by dietary categories. Compute top-k accuracy or basket match: the fraction of user-chosen recipes that appear in the top-k recommendations. Track engagement, reorder rates, and diversity metrics. Run A/B tests when rolling out new changes. Evaluate the coverage-lift for underrepresented segments. Track user satisfaction or any relevant rating signals, if available.
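Two of these metrics can be sketched as follows. This is a minimal sketch that assumes recommendations and actual box selections are logged per user. Basket match is the fraction of chosen recipes that appear in the top-k list, averaged over users. Diet coverage is the fraction of diet categories that appear in at least one user's top-k list. The names and data shapes are hypothetical.

```python
def basket_match(recommended, chosen, k):
    """recommended: user -> ranked recipe IDs; chosen: user -> set of recipe IDs actually ordered."""
    per_user = []
    for user, picks in chosen.items():
        if not picks:
            continue
        top_k = set(recommended.get(user, [])[:k])
        per_user.append(len(picks & top_k) / len(picks))
    return sum(per_user) / len(per_user) if per_user else 0.0


def diet_coverage(recommended, recipe_diet, all_diets, k):
    """Fraction of diet categories that appear in at least one user's top-k list."""
    shown = {recipe_diet[r] for recs in recommended.values() for r in recs[:k]}
    return len(shown & set(all_diets)) / len(all_diets)


recommended = {1: ["a", "b", "c"], 2: ["c", "d", "e"]}
chosen = {1: {"a", "x"}, 2: {"d", "e"}}
recipe_diet = {"a": "meat", "b": "meat", "c": "vegetarian", "d": "meat", "e": "vegan"}
all_diets = ["meat", "vegetarian", "vegan", "pescatarian"]
print(basket_match(recommended, chosen, k=3), diet_coverage(recommended, recipe_diet, all_diets, k=3))
# 0.75 0.75
```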

How do you deploy it in production at scale?

Maintain a real-time feature store with user embeddings and recipe embeddings. When a user requests recommendations, fetch the user embedding, compute its dot product with the candidate recipe embeddings, and produce a top-k list. Cache the results to reduce latency. Refresh embeddings offline on a frequent schedule. Monitor memory usage, inference times, and model performance drift. Implement a fallback if the model fails, possibly using a simpler popularity-based approach.
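The serving path can be sketched as follows. This is a minimal sketch that assumes user and recipe embeddings have already been loaded from the feature store into dictionaries. It scores every candidate with a dot product, keeps the top-k with `heapq.nlargest`, and caches results per user. It falls back to a precomputed popularity list when the user has no embedding. At catalog sizes where exhaustive scoring is too slow, an approximate nearest neighbor index would replace the brute-force loop. The class and method names are hypothetical.

```python
import heapq


class RecommendationService:
    def __init__(self, user_emb, recipe_emb, popular_fallback, k=5):
        self.user_emb = user_emb                  # user_id -> list of floats
        self.recipe_emb = recipe_emb              # recipe_id -> list of floats
        self.popular_fallback = popular_fallback  # precomputed popularity ranking
        self.k = k
        self.cache = {}

    def recommend(self, user_id):
        if user_id in self.cache:
            return self.cache[user_id]
        u = self.user_emb.get(user_id)
        if u is None:
            # Fallback for unknown users or missing embeddings
            return self.popular_fallback[: self.k]
        scores = {rid: sum(a * b for a, b in zip(u, emb)) for rid, emb in self.recipe_emb.items()}
        top = heapq.nlargest(self.k, scores, key=scores.get)
        self.cache[user_id] = top
        return top

    def refresh(self, user_emb, recipe_emb):
        """Swap in freshly computed embeddings and invalidate cached lists."""
        self.user_emb, self.recipe_emb = user_emb, recipe_emb
        self.cache.clear()


svc = RecommendationService(
    user_emb={1: [1.0, 0.0]},
    recipe_emb={"a": [0.9, 0.1], "b": [0.1, 0.9], "c": [0.5, 0.5]},
    popular_fallback=["c", "b", "a"],
    k=2,
)
print(svc.recommend(1), svc.recommend(99))
# ['a', 'c'] ['c', 'b']
```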

Why a 2-tower structure instead of a single combined model?

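A 2-tower design can handle large candidate sets because recipe embeddings are computed independently of any user. They can therefore be precomputed and indexed for efficient approximate nearest neighbor (ANN) search, which allows quick retrieval of top recommendations. Scoring with a plain dot product keeps retrieval compatible with such an index. Feeding both embeddings into a small final network can add accuracy, but that network must run once per user-recipe pair, so it is better suited to re-ranking a short candidate list. A single model that jointly encodes user-recipe pairs can be more accurate for the same reason, but it scales poorly as a retrieval stage for huge item catalogs.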

How do you handle repeated recommendations for the same recipes a user already purchased?

Track each recipe’s purchase history and apply a penalty or remove recipes already chosen. For recipes a user loves enough to reorder (for example, a user who always wants one favorite dish), implement logic that allows partial repetition. If the service’s business logic discourages repeats, the system can demote those recipes. This is usually a design choice based on product goals.
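The repeat logic can be sketched as follows. This is a minimal sketch that assumes a set of previously purchased recipe IDs and an optional set of user favorites. Already-purchased recipes have their score multiplied by a hypothetical `repeat_penalty`, and a penalty of 0 removes them entirely. Favorites are exempt so they can repeat.

```python
def demote_repeats(scores, purchased, favorites=frozenset(), repeat_penalty=0.5):
    """scores: recipe_id -> probability in (0, 1). repeat_penalty=0 removes repeats entirely."""
    adjusted = {}
    for recipe, score in scores.items():
        if recipe in purchased and recipe not in favorites:
            if repeat_penalty == 0:
                continue  # business rule: never repeat
            score *= repeat_penalty
        adjusted[recipe] = score
    return sorted(adjusted, key=adjusted.get, reverse=True)


scores = {"beef_tacos": 0.9, "veg_lasagna": 0.8, "pad_thai": 0.6, "tofu_bowl": 0.5}
print(demote_repeats(scores, purchased={"beef_tacos", "veg_lasagna"}, favorites={"veg_lasagna"}))
# ['veg_lasagna', 'pad_thai', 'tofu_bowl', 'beef_tacos']
```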

How do you update the model to reflect changing menu options every week?

Keep a weekly pipeline that ingests new recipes, updates recipe embeddings, and schedules model re-training. Archive older recipe embeddings if they may return in a seasonal rotation. Between major retraining cycles, run a smaller job to embed brand-new items from metadata. Update user preference histories after each purchase, ensuring the next recommendation cycle sees the latest behavior.

What if user tastes evolve drastically?

Set a decay factor on older user interactions. For instance, discount recipes ordered months ago so that new orders weigh more. Maintain rolling windows for user histories. Monitor embedding distances over time. If a user drastically changes diet (e.g. becomes vegetarian), the system recalculates embeddings to emphasize recent behavior.
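The decay factor can be sketched as follows. This is a minimal sketch that assumes each interaction carries an age in days and that recipe embeddings are available. Each past order is weighted by 0.5^(age / half_life), and the weighted average of the ordered recipes' embeddings serves as the user's taste profile. The 30-day half-life and the exponential form are assumptions. The optional `window_days` implements the rolling-window variant by dropping older interactions.

```python
def decayed_user_profile(interactions, recipe_emb, half_life_days=30.0, window_days=None):
    """interactions: list of (recipe_id, age_days). Returns a time-weighted average embedding."""
    dim = len(next(iter(recipe_emb.values())))
    profile = [0.0] * dim
    total = 0.0
    for recipe, age in interactions:
        if window_days is not None and age > window_days:
            continue  # rolling window: ignore interactions older than the window
        w = 0.5 ** (age / half_life_days)  # an order half_life_days old counts half as much
        total += w
        for d in range(dim):
            profile[d] += w * recipe_emb[recipe][d]
    return [x / total for x in profile] if total else profile


recipe_emb = {"beef_tacos": [1.0, 0.0], "veg_lasagna": [0.0, 1.0]}
history = [("beef_tacos", 90), ("veg_lasagna", 0)]  # meat 3 months ago, vegetarian today
print(decayed_user_profile(history, recipe_emb))
```

With a 30-day half-life, the 90-day-old order gets weight 0.125 against 1.0 for today's order, so the profile leans strongly toward the recent vegetarian choice.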