ML Case-study Interview Question: RAG Copilot for Internal Support: Automating Query Answers from Documentation


Case-Study question

A large-scale tech company has numerous internal teams that offer platform support through chat channels. They observe tens of thousands of queries in these channels each month, leading to slow responses and repetitive questions. Many answers exist in scattered documentation sources. The company wants to build an on-call copilot using a Retrieval-Augmented Generation approach to address these challenges. How would you, as a Senior Data Scientist, design a system that automates query resolution, reduces engineering response burden, protects sensitive information, and evaluates performance?

Detailed Solution

System Overview

A centralized service ingests documents from internal wiki pages, internal Q&A sites, requirement documents, and other data sources. The ingestion process chunks text into manageable parts and converts them into high-dimensional vectors using an embedding model. These embeddings go into a vector database. When a chat user asks a question, the system encodes it into an embedding, searches for the most similar document chunks, and supplies those chunks to a Large Language Model (LLM). The LLM produces an answer based on the retrieved context.

Data Ingestion Workflow

A data extraction job fetches content from each source. A Spark-based pipeline processes and cleans the text. Each document is chunked and mapped to a unique identifier. A second Spark step calls an embedding API with a User-Defined Function that converts the chunk into a vector. That vector is then stored alongside the chunk in a vector database.
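The chunking step can be sketched in plain Python. This is a minimal illustration that assumes fixed-size word windows with overlap; the function name `chunk_text`, the window sizes, and the `doc_id-index` identifier scheme are assumptions made for the example, not details from the original pipeline.

```python
from typing import Dict, List


def chunk_text(doc_id: str, text: str, max_words: int = 200, overlap: int = 40) -> List[Dict[str, str]]:
    """Split a document into overlapping word windows, each with a unique ID."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap  # how far the window advances each time
    chunks = []
    for index, start in enumerate(range(0, len(words), step)):
        window = words[start:start + max_words]
        chunks.append({"chunk_id": f"{doc_id}-{index}", "chunk": " ".join(window)})
        if start + max_words >= len(words):
            break  # this window already reached the end of the document
    return chunks
```

The overlap keeps sentences that straddle a boundary visible in both neighboring chunks, at the cost of storing some text twice.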

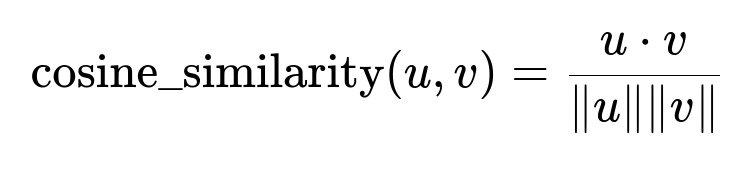

Core Similarity Formula

The retrieval step scores each chunk by cosine similarity:

$$
\text{sim}(u, v) = \frac{u \cdot v}{\|u\| \, \|v\|}
$$

Here u and v are the embedding vectors for the user's query and a document chunk, u · v is their dot product, and ||u|| and ||v|| are the magnitudes of u and v. The formula yields the similarity score between the query embedding and each document chunk embedding.
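A minimal sketch of this score, the dot product divided by the product of the two magnitudes, followed by a brute-force ranking of chunks. The helper names and the choice to return 0.0 for zero vectors are assumptions for illustration; a production vector database would use an approximate index rather than scanning every chunk.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Return (u . v) / (||u|| * ||v||)."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here; the ratio is undefined for zero vectors
    return dot / (norm_u * norm_v)


def top_k(query: Sequence[float], chunk_vectors: Dict[str, Sequence[float]], k: int = 3) -> List[Tuple[str, float]]:
    """Score every chunk against the query and return the k best (chunk_id, score) pairs."""
    scored = [(chunk_id, cosine_similarity(query, vec)) for chunk_id, vec in chunk_vectors.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```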

Vector Storage

A vector database solution handles indexing and merging. A backend microservice retrieves the best matches from this database by computing cosine similarity. Pre-curated data sources ensure that only widely accessible content is exposed.

On-Call Copilot Bot

A bot runs in the chat platform and invokes a backend knowledge service. The query is turned into an embedding, the relevant chunks are fetched from the vector database, and the LLM receives these chunks as context. The final answer is posted back into the channel with links to the source. If the answer is unsatisfactory, users can escalate to a human on-call or mark it as resolved.
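The request flow can be sketched as a single handler. The `embed`, `search`, and `generate` callables are hypothetical stand-ins for the embedding model, the vector database client, and the LLM call; the chunk schema and the automatic escalation when nothing is retrieved are also assumptions added for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence

Chunk = Dict[str, str]  # assumed schema: "text" and "url" keys


@dataclass
class BotReply:
    text: str
    sources: List[str]
    escalate: bool


def handle_question(
    question: str,
    embed: Callable[[str], Sequence[float]],
    search: Callable[[Sequence[float]], List[Chunk]],
    generate: Callable[[str, List[Chunk]], str],
) -> BotReply:
    """Embed the question, retrieve chunks, and ask the LLM for an answer."""
    chunks = search(embed(question))
    if not chunks:
        # Assumption: with nothing retrieved, hand off to a human rather than guess.
        return BotReply("No matching documentation found.", [], escalate=True)
    answer = generate(question, chunks)
    sources = [chunk["url"] for chunk in chunks]  # links posted with the answer
    return BotReply(answer, sources, escalate=False)
```

Passing the three dependencies in as callables keeps the handler testable without a live model or database.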

Cost Tracking

An audit log or a tracking ID records every call to the LLM. This allows financial oversight, especially if the embedding or model usage incurs costs. A gateway service handles these requests and appends metadata to each model call for monitoring.
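One way to picture the gateway is a thin wrapper that stamps each call with a tracking ID and records an estimated cost. Everything here is a hypothetical sketch: the class name, the in-memory log, the flat price per 1,000 tokens, and the word count used as a token proxy are assumptions, not the company's actual accounting.

```python
import time
import uuid
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AuditedGateway:
    call_model: Callable[[str], str]  # hypothetical LLM client
    price_per_1k_tokens: float        # hypothetical flat rate
    log: List[Dict] = field(default_factory=list)

    def complete(self, prompt: str, team: str) -> str:
        """Call the model and append one audit record for the call."""
        response = self.call_model(prompt)
        # Crude proxy: whitespace-separated words instead of real tokenizer counts.
        tokens = len(prompt.split()) + len(response.split())
        self.log.append({
            "tracking_id": str(uuid.uuid4()),
            "timestamp": time.time(),
            "team": team,
            "tokens": tokens,
            "estimated_cost": tokens / 1000 * self.price_per_1k_tokens,
        })
        return response

    def cost_by_team(self) -> Dict[str, float]:
        """Sum estimated cost per team across all logged calls."""
        totals: Dict[str, float] = {}
        for entry in self.log:
            totals[entry["team"]] = totals.get(entry["team"], 0.0) + entry["estimated_cost"]
        return totals
```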

Evaluations and Feedback

Users can mark the bot’s answer as resolved, partially helpful, incorrect, or not relevant. A separate Spark-based pipeline collects these ratings for performance analytics and to detect hallucinations. Another evaluation pipeline checks the quality of the documents themselves. If a document is inaccurate or unclear, the system flags it for improvement.
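As a rough sketch of how ratings could feed document flagging, the function below counts ratings per source document and flags those with a high share of "incorrect" votes. The rating labels mirror the four options above; the thresholds, the `(doc_id, rating)` event shape, and the function name are assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

RATINGS = ("resolved", "partially_helpful", "incorrect", "not_relevant")


def flag_documents(
    events: Iterable[Tuple[str, str]],
    min_votes: int = 5,
    max_incorrect_share: float = 0.3,
) -> List[str]:
    """Return doc IDs whose share of 'incorrect' ratings exceeds the threshold."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for doc_id, rating in events:
        if rating not in RATINGS:
            raise ValueError(f"unknown rating: {rating}")
        counts[doc_id][rating] += 1
    flagged = []
    for doc_id, ratings in counts.items():
        total = sum(ratings.values())
        # Require a minimum number of votes so one bad rating does not flag a document.
        if total >= min_votes and ratings["incorrect"] / total > max_incorrect_share:
            flagged.append(doc_id)
    return sorted(flagged)
```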

Code Example

```python
import pyspark.sql.functions as F

from my_embedding_library import get_embedding_udf

# Assume df has two columns: 'doc_id', 'chunk'
# get_embedding_udf returns a vector for each chunk
df_with_embeddings = df.withColumn("embeddings", get_embedding_udf(F.col("chunk")))

# Write df_with_embeddings to vector database or blob store
df_with_embeddings.write.format("parquet").save("/path/to/output")
```

This snippet shows how a Spark pipeline might produce embeddings for each chunk. A similar job merges these embeddings with an index build process in the vector database.

Practical Considerations

Accuracy requires that the LLM rely only on the retrieved chunks. The prompt includes explicit instructions to cite the retrieved context and discourages using any external data. Sensitive sources remain off-limits. An additional mode for interactive Q&A lets users refine their query with a follow-up question or escalate to a human if the bot fails.
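A prompt template along these lines is one way to express those instructions. The wording of the instructions, the numbered-source format, and the chunk keys `text` and `url` are assumptions made for the sketch.

```python
from typing import Dict, List


def build_prompt(question: str, chunks: List[Dict[str, str]]) -> str:
    """Assemble an LLM prompt from retrieved chunks, numbering each source."""
    context_lines = []
    for number, chunk in enumerate(chunks, start=1):
        context_lines.append(f"[{number}] ({chunk['url']}) {chunk['text']}")
    context = "\n".join(context_lines) if context_lines else "(no context retrieved)"
    return (
        "Answer the question using only the context below. "
        "Cite sources by their bracketed number. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Numbering the chunks gives the model a compact way to cite, and lets the bot map each citation back to a source link.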

How to Handle Follow-up Questions

Q1. What strategies reduce hallucinations when the LLM has only partial context?

One approach is to strictly instruct the LLM to answer only from the retrieved chunks. Adding sub-context sections and requiring the LLM to cite them helps reduce speculation. Another method is to apply a re-check system that compares the LLM output against a reference source for consistency. If there is a discrepancy, the answer is flagged or corrected.
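The re-check idea can be illustrated with a deliberately crude heuristic: flag any answer sentence whose words mostly do not appear in the retrieved context. This token-overlap test is a stand-in chosen for the example, not the method described above; a real system might use an entailment model or an LLM judge instead. The function name and the 0.6 threshold are assumptions.

```python
import re
from typing import List, Set


def _tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def unsupported_sentences(answer: str, context_chunks: List[str], min_overlap: float = 0.6) -> List[str]:
    """Return answer sentences with too little word overlap against the context."""
    context_tokens: Set[str] = set()
    for chunk in context_chunks:
        context_tokens |= _tokens(chunk)
    flagged = []
    # Split on whitespace that follows sentence-ending punctuation.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _tokens(sentence)
        if not words:
            continue
        overlap = len(words & context_tokens) / len(words)
        if overlap < min_overlap:
            flagged.append(sentence)
    return flagged
```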

Q2. How can the team ensure that only those with the right privileges view certain knowledge base documents?

A permissions layer filters relevant chunks during retrieval. The query includes user access tokens, and the backend checks the user’s authorization before sending the chunk to the LLM. A fine-grained access control mechanism at the vector database level is also possible, ensuring only authorized embeddings are returned.
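A minimal sketch of the retrieval-time filter, assuming each chunk carries the set of groups allowed to read it and the user's groups have already been resolved from their access token. The `Chunk` fields and group names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Chunk:
    chunk_id: str
    text: str
    allowed_groups: FrozenSet[str]  # assumed ACL metadata stored with the chunk


def filter_by_access(chunks: List[Chunk], user_groups: Set[str]) -> List[Chunk]:
    """Keep only chunks that share at least one group with the user."""
    return [chunk for chunk in chunks if chunk.allowed_groups & user_groups]
```

Filtering before the prompt is built means unauthorized text never reaches the LLM, so it cannot leak into an answer.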

Q3. How is the system updated with new documents without retraining the entire model?

A pipeline periodically runs to ingest newly added or edited documents. The embedding generation step applies the same model to the new chunks and merges them into the existing vector index. The LLM remains the same, as it only needs relevant context from the updated embeddings.
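One way to keep the periodic job cheap is to re-embed only chunks whose text changed. The sketch below uses a content hash for change detection; the in-memory `index` dict stands in for the vector database, and `embed` is a hypothetical embedding function. Neither is taken from the original system.

```python
import hashlib
from typing import Callable, Dict, List, Sequence


def upsert_changed_chunks(
    chunks: Dict[str, str],
    index: Dict[str, dict],
    embed: Callable[[str], Sequence[float]],
) -> List[str]:
    """Embed and store chunks that are new or whose text changed; return their IDs."""
    updated = []
    for chunk_id, text in chunks.items():
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        existing = index.get(chunk_id)
        if existing is not None and existing["hash"] == digest:
            continue  # unchanged text, keep the stored vector
        index[chunk_id] = {"hash": digest, "vector": embed(text), "text": text}
        updated.append(chunk_id)
    return updated
```

The same embedding model must be used for old and new chunks, otherwise their vectors are not comparable.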

Q4. How would you handle conflicting sources or multiple possible answers?

An aggregation step can rank candidate answers by confidence. The system can also present multiple relevant chunks and let the LLM merge or compare them. If conflicts persist, the user can be prompted to clarify or escalate to human support. Performance metrics can track how often contradictions happen.

Q5. What steps would you take to improve document quality if user feedback shows repeated inaccuracies?

A document evaluation pipeline can flag entries with poor user feedback. A separate app reads these flagged items and runs an LLM-based judge to extract reasons for the errors. The app then auto-generates suggestions for rewriting. A dedicated team or automated rewriting mechanism can update the documents. The pipeline re-ingests these improved documents and updates the embeddings.

Q6. How would you keep the system scalable for hundreds of thousands of queries each month?

A distributed Spark job handles large volumes of documents during ingestion. A load-balanced microservice or serverless functions respond to user queries in parallel. The vector database scales horizontally by sharding embedding indexes across nodes. Caching frequent or recent queries can reduce retrieval time for hot data. If the LLM usage spikes, container orchestration with autoscaling can spin up more replicas to handle load.
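The caching idea can be sketched as a small least-recently-used cache in front of retrieval. The class name, the whitespace and case normalization of the key, and the `retrieve` callable are assumptions for the example; a shared cache service would replace the in-process dictionary in a multi-replica deployment.

```python
from collections import OrderedDict
from typing import Callable, List


class RetrievalCache:
    """LRU cache keyed on a normalized form of the query text."""

    def __init__(self, retrieve: Callable[[str], List[str]], max_entries: int = 1024):
        self._retrieve = retrieve  # hypothetical retrieval function
        self._max_entries = max_entries
        self._entries: "OrderedDict[str, List[str]]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(query: str) -> str:
        # Lowercase and collapse whitespace so trivially different queries share an entry.
        return " ".join(query.lower().split())

    def get(self, query: str) -> List[str]:
        key = self._key(query)
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        result = self._retrieve(query)
        self._entries[key] = result
        if len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)  # evict the least recently used entry
        self.misses += 1
        return result
```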

Q7. How would you approach cost optimization for LLM calls?

Batch requests wherever possible. Cache answers to repeated or similar questions. Track usage by grouping queries under the same use case or department, then set usage limits. Reuse stored embeddings for near-duplicate queries instead of recomputing them. Explore open-source or in-house LLMs for internal data to reduce API cost overhead.

Q8. How do you validate the performance of the overall pipeline beyond user feedback?

An offline evaluation run picks representative queries and checks retrieval accuracy. Synthetic questions can also measure how well the system handles edge cases. Each retrieval output is compared against a curated “golden” answer set. Continuous integration tests can run these checks whenever the pipeline updates data sources or modifies the retrieval logic. This ensures stable performance and quick regression detection.
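A retrieval check against a golden set can be as simple as a hit rate at k: the fraction of test queries for which at least one expected chunk appears among the top k results. The metric choice, the function name, and the data shapes below are assumptions for illustration.

```python
from typing import Dict, List, Set


def hit_rate_at_k(retrieved: Dict[str, List[str]], golden: Dict[str, Set[str]], k: int = 5) -> float:
    """Fraction of golden queries with at least one expected chunk in the top k."""
    if not golden:
        raise ValueError("golden set is empty")
    hits = 0
    for query, expected_ids in golden.items():
        # Queries missing from `retrieved` count as misses.
        top = retrieved.get(query, [])[:k]
        if expected_ids & set(top):
            hits += 1
    return hits / len(golden)
```

Run in continuous integration, a drop in this number after a change to chunking or retrieval logic signals a regression before users see it.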