ML Case-study Interview Question: Neural Embeddings for Item Recommendations: Solving Cold Start via Content & Interactions.

Case-Study question

A large online marketplace faces the challenge of providing relevant Item-to-Item recommendations. Items are posted by sellers with varying content quality (title, description, category, etc.). Traditional Collaborative Filtering suffers from data sparsity and “cold start” issues when newly posted items lack interaction history. Content-based methods sometimes fail to capture user preferences because of inconsistent listing details. Propose a solution that merges interaction data and content features. Explain how you would build and deploy a neural-network-based model that can handle cold start items and scale to millions of users and items. Discuss your approach in detail, from data preprocessing to final deployment. Justify every design choice and show how you would measure success. Prepare for potential follow-up questions on algorithmic complexity, negative sampling, model architecture, and A/B testing strategies.

Detailed Solution

Overview

The marketplace has two key recommendation approaches. Collaborative Filtering relies on interaction data (item co-occurrences) but struggles with data sparsity and cannot recommend cold items. Content-based methods rely on item properties but often miss latent user interests. A blended approach is essential.

High-Level Method

Train a neural network (an encoder) that outputs a vector embedding for each item. These embeddings reflect similarities, so dot products of related items become large. Use both item content and user behavior (session data) to guide training. Newly posted items can be embedded using the content-only path, bypassing the cold start issue.

Training Data Construction

Collect user session data: each session is a sequence of item views. Generate positive pairs from consecutive items in a session. Generate negative pairs by randomly sampling items. This contrast drives the model to produce higher similarity for positive pairs and lower similarity for random pairs.
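The pair construction described above can be sketched as follows. This is a minimal illustration, not a production pipeline: the function name `build_training_triples`, the `max_tries` retry limit, and the rule of rejecting negatives that appear in the same session are all assumptions added here.

```python
import random


def build_training_triples(sessions, catalog, num_negatives=1, max_tries=10, seed=0):
    """Turn view sessions into (anchor, positive, negative) item-ID triples.

    sessions: list of lists of item IDs, in viewing order.
    catalog:  list of all item IDs from which random negatives are drawn.
    """
    rng = random.Random(seed)
    triples = []
    for session in sessions:
        seen = set(session)
        # Consecutive views in a session form the positive pairs.
        for anchor, positive in zip(session, session[1:]):
            if anchor == positive:
                continue  # ignore repeated views of the same item
            for _ in range(num_negatives):
                # Draw a random item; retry if it was viewed in this session.
                for _ in range(max_tries):
                    negative = rng.choice(catalog)
                    if negative not in seen:
                        triples.append((anchor, positive, negative))
                        break
    return triples


sessions = [["a", "b", "c"], ["d"]]
catalog = ["a", "b", "c", "d"] + [f"x{i}" for i in range(100)]
triples = build_training_triples(sessions, catalog)
```

Sessions with a single view contribute no pairs, which is one reason short sessions are often filtered before this step.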

Model Architecture

Use an encoder network that ingests item content (title text, category, other structured attributes). Merge these features in dense layers. Output a vector embedding that captures semantic aspects. During training, compute the dot product between item embeddings for positive and negative pairs.

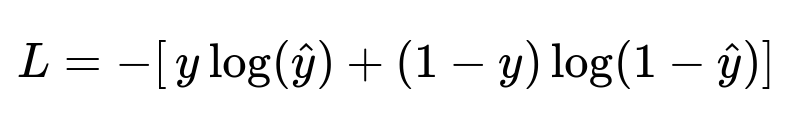

Core Loss Function

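Use binary cross-entropy to push the predicted probability toward 1 for positive pairs and toward 0 for negative pairs:

$$L = -\left[ y \log \hat{y} + (1 - y) \log (1 - \hat{y}) \right]$$

Here $L$ is the loss for a single pair, $y$ is the ground truth label (1 for positive pairs, 0 for negative), and $\hat{y}$ is the predicted probability derived from the dot product of the two item embeddings (for example, by applying a sigmoid, as `BCEWithLogitsLoss` does in the implementation further down).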
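To make the behavior of this loss concrete, here is a small numeric sketch using only the standard library. It assumes the predicted probability is the sigmoid of the raw dot product; the helper names `sigmoid` and `pair_loss` are illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def pair_loss(dot, y):
    """Binary cross-entropy for one pair, given the raw dot product and label y."""
    y_hat = sigmoid(dot)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))


# The same dot product is cheap for a positive pair and costly for a negative one.
loss_if_positive = pair_loss(3.0, 1)
loss_if_negative = pair_loss(3.0, 0)
print(loss_if_positive, loss_if_negative)
```

A large dot product on a negative pair is penalized heavily, which is what pushes unrelated items apart in the embedding space.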

Inference

To recommend similar items for a target item T, embed T via the encoder. Compare its embedding against a large item pool. Sort items by similarity scores (e.g., dot product). Return top candidates to users.
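A brute-force version of this ranking step might look like the sketch below. It assumes the pool embeddings fit in one tensor; the function name `top_k_similar` and the toy vectors are made up for illustration. At catalog scale the exact scan would typically be replaced by an approximate index.

```python
import torch


def top_k_similar(target_emb, pool_embs, k=5):
    """Return indices and scores of the k pool items with the largest dot product."""
    scores = pool_embs @ target_emb      # shape: (num_items,)
    k = min(k, pool_embs.size(0))        # guard against k larger than the pool
    top = torch.topk(scores, k)
    return top.indices.tolist(), top.values.tolist()


# Toy 2-dimensional embeddings for four pool items.
pool = torch.tensor([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]])
target = torch.tensor([1.0, 0.1])
indices, scores = top_k_similar(target, pool, k=2)
```

If the target item is itself part of the pool, it should be dropped from the result before showing recommendations.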

Practical Implementation Example in Python

```python
import torch
import torch.nn as nn
import torch.optim as optim


class ItemEncoder(nn.Module):
    """Two-layer MLP that maps an item's content features to an embedding."""

    def __init__(self, input_dim, embed_dim):
        super().__init__()
        self.linear1 = nn.Linear(input_dim, 128)
        self.relu = nn.ReLU()
        self.linear2 = nn.Linear(128, embed_dim)

    def forward(self, x):
        x = self.linear1(x)
        x = self.relu(x)
        x = self.linear2(x)
        return x


# Placeholder data so the snippet runs; replace with real feature tensors of
# shape (batch_size, input_dim) for anchor, positive, and negative items.
batch_size, input_dim = 32, 300
item_features = torch.randn(batch_size, input_dim)
pos_item_features = torch.randn(batch_size, input_dim)
neg_item_features = torch.randn(batch_size, input_dim)
num_epochs = 10

encoder = ItemEncoder(input_dim=input_dim, embed_dim=64)  # Adjust sizes as needed
optimizer = optim.Adam(encoder.parameters(), lr=0.001)
criterion = nn.BCEWithLogitsLoss()  # applies the sigmoid to the logits internally

for epoch in range(num_epochs):
    optimizer.zero_grad()

    emb_i = encoder(item_features)
    emb_p = encoder(pos_item_features)
    emb_n = encoder(neg_item_features)

    # Row-wise dot products: similarity of each anchor to its positive and negative
    dot_pos = torch.sum(emb_i * emb_p, dim=1)
    dot_neg = torch.sum(emb_i * emb_n, dim=1)

    # Labels: 1 for positive pairs, 0 for negative pairs
    labels_pos = torch.ones_like(dot_pos)
    labels_neg = torch.zeros_like(dot_neg)

    # Binary cross-entropy on the raw dot products
    loss_pos = criterion(dot_pos, labels_pos)
    loss_neg = criterion(dot_neg, labels_neg)
    loss = loss_pos + loss_neg

    loss.backward()
    optimizer.step()
```

Explanation: The encoder learns to map each item into a latent space. Positive pairs produce higher dot products, and negative pairs produce lower dot products.

Deployment Strategy

Offline Embedding Computation: Run a batch job to embed existing items, store vectors in a scalable database or key-value store.

Online Inference: When a user visits an item page or has an item history, compute or retrieve the embedding. Retrieve neighbors by approximate nearest neighbor search (e.g., Faiss, Annoy).

Cold Start Handling: For new items, run the same encoder on content. No user interactions required.
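The three pieces above can be sketched together as a small serving wrapper. This is an illustrative sketch under stated assumptions: `EmbeddingService` is a hypothetical class, the dictionary stands in for a real key-value store, and `toy_encode` is a placeholder for the trained encoder.

```python
class EmbeddingService:
    """Hypothetical serving wrapper around a precomputed embedding store."""

    def __init__(self, encode_fn):
        self.encode_fn = encode_fn  # maps item content to an embedding
        self.store = {}             # in-memory stand-in for a key-value store

    def index_batch(self, items):
        """Offline job: embed all known items and persist the vectors."""
        for item_id, content in items.items():
            self.store[item_id] = self.encode_fn(content)

    def get_embedding(self, item_id, content=None):
        """Online path: look up the vector, or encode content for a new item."""
        if item_id in self.store:
            return self.store[item_id]
        if content is None:
            raise KeyError(f"no stored embedding or content for item {item_id!r}")
        embedding = self.encode_fn(content)  # cold start: content only
        self.store[item_id] = embedding
        return embedding


def toy_encode(content):
    # Toy stand-in for the trained encoder, for illustration only.
    return [float(len(content["title"])), float(content["category_id"])]


service = EmbeddingService(toy_encode)
service.index_batch({"item-1": {"title": "red bike", "category_id": 7}})
new_vec = service.get_embedding("item-2", {"title": "blue sofa", "category_id": 3})
```

The new item gets a usable vector the moment its listing is available, without waiting for any views.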

A/B Testing

Compare the neural approach with baseline algorithms. Track engagement metrics (clicks, contact rate, watch time). A typical test might show a 10–20% uplift in key metrics when combining collaborative and content signals.
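One common way to check whether a click-through uplift is more than noise is a two-proportion z-test. The sketch below uses only the standard library; the function name and the traffic numbers are invented for illustration and are not results from any real experiment.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Compare click-through rates of control (a) and treatment (b)."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    relative_uplift = (p_b - p_a) / p_a
    return z, p_value, relative_uplift


# Made-up example: 5.00% CTR in control vs 5.75% CTR in treatment.
z, p_value, uplift = two_proportion_z_test(1000, 20000, 1150, 20000)
```

In practice the sample size and test duration would be fixed before the experiment starts, so the test is not stopped early on a lucky reading.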

How would you handle follow-up questions?

Ensuring Model Scalability

Large item catalogs demand efficient training. Batching session data, using GPUs, and implementing negative sampling strategies help. Incremental training or mini-batch updates handle new items. Model compression methods (quantization, distillation) manage memory usage.

Handling Negative Sampling

Randomly pick negatives from the item pool, ensuring they differ from the positives. For popular categories, random negatives are often too easy, so also sample harder negatives (for example, items from the same category) to teach the model subtle distinctions. Keep the negative set diverse so the model still learns to separate truly dissimilar items.
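One possible way to implement this kind of sampling is to mix uniform negatives with negatives drawn from the anchor's own category. The sketch below is an assumption about what such a sampler could look like; `sample_negative`, `hard_fraction`, and the lookup tables are hypothetical names, and the list comprehensions favor clarity over speed.

```python
import random


def sample_negative(anchor, positive, item_category, items_by_category,
                    all_items, hard_fraction=0.5, rng=random):
    """Pick one negative: same-category with probability hard_fraction, else uniform."""
    excluded = {anchor, positive}
    if rng.random() < hard_fraction:
        pool = items_by_category[item_category[anchor]]  # harder, same category
    else:
        pool = all_items                                 # easy, uniform
    candidates = [i for i in pool if i not in excluded]
    if not candidates:
        # Category too small: fall back to the whole catalog.
        candidates = [i for i in all_items if i not in excluded]
    return rng.choice(candidates)


item_category = {"a": "phones", "b": "phones", "c": "phones", "d": "sofas"}
items_by_category = {"phones": ["a", "b", "c"], "sofas": ["d"]}
all_items = ["a", "b", "c", "d"]
hard = sample_negative("a", "b", item_category, items_by_category,
                       all_items, hard_fraction=1.0, rng=random.Random(0))
```

The share of same-category negatives is a tuning knob: too high and the model may push apart items that are in fact good substitutes.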

Avoiding Overfitting

Use dropout or L2 regularization in encoder layers. Control the embedding dimension to avoid memorization. Early stopping based on validation set performance helps maintain generalization.
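These three controls could be wired up roughly as follows. The sketch assumes PyTorch; `RegularizedEncoder`, `EarlyStopper`, and the specific dropout, weight decay, and patience values are illustrative choices, not tuned settings.

```python
import torch
import torch.nn as nn
import torch.optim as optim


class RegularizedEncoder(nn.Module):
    """Encoder variant with dropout between the dense layers."""

    def __init__(self, input_dim, embed_dim, hidden_dim=128, dropout=0.2):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(input_dim, hidden_dim),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(hidden_dim, embed_dim),
        )

    def forward(self, x):
        return self.net(x)


class EarlyStopper:
    """Signal a stop after `patience` epochs without validation improvement."""

    def __init__(self, patience=3):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


encoder = RegularizedEncoder(input_dim=300, embed_dim=64)
# weight_decay adds an L2 penalty on the parameters.
optimizer = optim.Adam(encoder.parameters(), lr=1e-3, weight_decay=1e-5)
encoder.eval()  # disable dropout when embedding items for validation or serving
embeddings = encoder(torch.zeros(4, 300))
```

In a training loop, `should_stop` would be called once per epoch with the validation loss, and training would end when it returns `True`.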

Combining Collaborative Filtering and Neural Embeddings

Ensemble final recommendations by blending neural model scores with classical Collaborative Filtering. Weighted linear combinations or more advanced meta-models can produce better results than a single system.
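A weighted linear blend is the simplest version of this idea. The sketch below assumes both score sets are already normalized to a comparable range, and it makes one debatable choice explicit: items with no Collaborative Filtering score (typically cold items) keep their neural score unchanged. The name `blend_scores` and the default weight are illustrative.

```python
def blend_scores(neural_scores, cf_scores, weight=0.7):
    """Rank candidates by a linear blend of neural and CF scores.

    neural_scores, cf_scores: dicts mapping item ID to a normalized score.
    weight: share given to the neural score when both scores exist.
    """
    blended = {}
    for item_id, n_score in neural_scores.items():
        if item_id in cf_scores:
            blended[item_id] = weight * n_score + (1 - weight) * cf_scores[item_id]
        else:
            blended[item_id] = n_score  # no CF signal, e.g. a cold item
    return sorted(blended, key=blended.get, reverse=True)


neural = {"a": 0.9, "b": 0.5, "c": 0.6}
cf = {"a": 0.1, "b": 0.9}
ranking = blend_scores(neural, cf, weight=0.5)
```

The blend weight is best chosen on a validation set or through an A/B test rather than fixed by hand.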

Evaluating Content Representation

Check that item attributes (title, description, etc.) parse correctly. Experiment with embeddings from pretrained text models or image models if relevant. Evaluate domain-specific features (e.g., brand, condition) that significantly impact user preferences.

Measuring Success

Focus on user-centric metrics (click-through rates, conversions). Measure coverage of cold items. Monitor performance drift over time. Validate final candidate sets both quantitatively (A/B tests) and qualitatively (user feedback).
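Before an online test, two offline checks are often useful: recall at k against held-out interactions, and the share of cold items that ever get recommended. The sketch below is one simple way to compute them; both function names and the exact definitions are assumptions made for illustration.

```python
def recall_at_k(recommended, relevant, k):
    """Fraction of relevant items that appear in the top-k recommendations."""
    if not relevant:
        return 0.0
    hits = len(set(recommended[:k]) & set(relevant))
    return hits / len(set(relevant))


def cold_item_coverage(recommendation_lists, cold_items):
    """Share of cold items that appear in at least one recommendation list."""
    if not cold_items:
        return 0.0
    shown = set()
    for recs in recommendation_lists:
        shown.update(recs)
    return len(shown & set(cold_items)) / len(set(cold_items))


recall = recall_at_k(["a", "b", "c"], ["b", "z"], k=2)
coverage = cold_item_coverage([["a", "n1"], ["b"]], ["n1", "n2"])
```

Tracking both numbers over time also gives an early signal of performance drift between retraining runs.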

Potential Edge Cases

Extremely short sessions: Might produce noisy pairs. Add constraints to session filtering.

Misclassified categories: The encoder might embed items incorrectly if input data is wrong. Periodically clean up or re-check data pipelines.

Long-tail items: There may be items with few direct similarities. Ensure the encoder captures relevant content-based signals.
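For the short-session case, a filter of the following kind could run before pair generation. It is only a sketch: the `min_len` and `max_len` thresholds are arbitrary placeholders, and the upper bound (meant to drop bot-like sessions) is an assumption added here.

```python
def filter_sessions(sessions, min_len=3, max_len=200):
    """Drop sessions that are too short or suspiciously long.

    Immediate repeats (for example, page reloads) are collapsed first so they
    do not inflate the session length.
    """
    cleaned = []
    for session in sessions:
        deduped = [
            item for i, item in enumerate(session)
            if i == 0 or item != session[i - 1]
        ]
        if min_len <= len(deduped) <= max_len:
            cleaned.append(deduped)
    return cleaned


raw = [["a"], ["a", "a", "b"], ["a", "b", "c"]]
kept = filter_sessions(raw, min_len=2)
```

The right thresholds depend on the traffic pattern and are worth validating against downstream recommendation quality.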

Implementation Challenges

Key challenges include workflow orchestration, real-time re-ranking, approximate nearest neighbor indexing, and safe fallback logic when no strong neighbors exist. A robust pipeline combines offline and online components while keeping serving latency low.

Practical Tips

Explaining these design decisions in interviews requires clarity on each component of the pipeline. Present trade-offs in light of real data constraints. Justify hyperparameters. Show that consistent iteration and A/B testing drive the final improvements.