ML Case-study Interview Question: Reducing Gender Bias in Grammar Correction Using Data Augmentation.


Case-Study question

A company has a grammar-checking system that often miscorrects or overlooks issues when dealing with masculine, feminine, and gender-neutral singular "they" terms. The company wants to reduce bias and maintain high correction accuracy. It has a corpus of texts containing masculine references and wants to expand it to include feminine and singular "they" usage. How would you approach designing a data augmentation strategy, improving model training, evaluating bias reduction, and ensuring the system retains or improves overall grammatical error correction quality?

Detailed solution

Large datasets often lack balance between masculine, feminine, and neutral pronouns. Augmentation addresses this gap. Replacing or inserting terms that cover all gender forms is critical. Techniques must systematically transform existing texts to produce parallel data reflecting diverse usages.

Training a grammatical error correction model involves aligning erroneous sentences with corrected versions. Adding more balanced gender examples requires generating new input-output pairs. Applying the same transformation to the masculine pronouns in both the erroneous sentence and its correction yields parallel feminine or singular "they" versions as extra training samples. The model then sees a broader distribution of pronouns during training and learns to correct sentences containing them accurately.
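The pair-level transformation described above can be sketched as follows. This is a minimal illustration, not a production pipeline: the `SWAPS` table, `swap_gender`, and `augment_pair` are hypothetical names, and the regex-based swap assumes "his" is always a possessive determiner ("his book"), which will not hold for every sentence.

```python
import re

# Hypothetical, deliberately small swap table. "his" -> "her" assumes the
# possessive-determiner reading ("his book"), not "the book is his".
SWAPS = {"he": "she", "him": "her", "his": "her", "himself": "herself"}
PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", re.IGNORECASE)

def swap_gender(text):
    """Replace masculine pronouns, keeping the original capitalization."""
    def repl(match):
        word = match.group(0)
        new = SWAPS[word.lower()]
        return new.capitalize() if word[0].isupper() else new
    return PATTERN.sub(repl, text)

def augment_pair(source, target):
    """Apply the same swap to the erroneous source and its correction,
    so the grammatical error itself is left untouched."""
    return swap_gender(source), swap_gender(target)

pairs = [("He go to school with his friend.",
          "He goes to school with his friend.")]
augmented = pairs + [augment_pair(s, t) for s, t in pairs]
for src, tgt in augmented:
    print(src, "->", tgt)
```

Because the swap touches only pronouns, the original error ("go" instead of "goes") survives in the augmented source, and the augmented target still shows its correction.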

Augmenting for singular "they" requires careful handling of verb agreement. Singular "they" takes plural verb forms ("they are", not "they is") even though its antecedent is singular, so any verb that agreed with the original pronoun must be re-inflected. Matching real usage patterns avoids errors. Augmentations must insert singular "they" only where it actually replaces a singular antecedent.

Refining preexisting masculine-to-feminine augmentation involves consistent word substitution. Checking morphological variations (e.g., "actor" to "actress") ensures the new text stays grammatically valid. Tweaking context is important. Overly simplistic replacements risk nonsensical sentences.

Augmenting both masculine and feminine terms helps build a balanced training set. Inserting singular "they" is more nuanced because many corpora do not consistently annotate or handle it. Running a separate generation pass for each pronoun form gives comprehensive coverage.

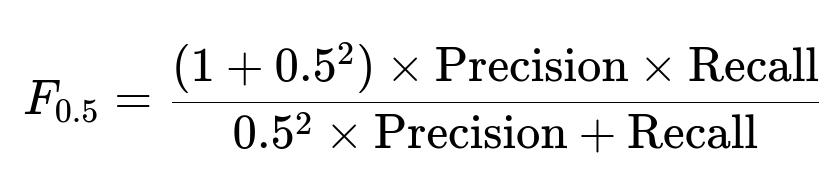

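Measuring the effect of these augmentations requires a suitable metric. Grammatical error correction is usually scored with precision, recall, and an F-measure that weights precision more heavily, such as F0.5. The general F-beta formula, with beta set to 0.5, is:

$$F_{0.5} = \frac{(1 + 0.5^2) \cdot P \cdot R}{0.5^2 \cdot P + R}$$

where P is precision and R is recall.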

Precision is the fraction of system-corrected tokens that are correct. Recall is the fraction of actual errors in the text that the system successfully corrects. F0.5 places more importance on precision, which is crucial to avoid introducing spurious corrections.
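As a worked example of these definitions, the sketch below computes precision, recall, and F0.5 from counts of true positives, false positives, and false negatives. The function name `f_beta` and the convention of returning 0.0 when a denominator is zero are assumptions made here; some scorers handle the empty case differently.

```python
def f_beta(tp, fp, fn, beta=0.5):
    """Return (precision, recall, F-beta) from edit-level counts.

    tp: proposed corrections that are right
    fp: proposed corrections that are wrong (spurious edits)
    fn: real errors the system left uncorrected
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    b2 = beta ** 2
    denom = b2 * precision + recall
    # Assumed convention: score 0.0 when nothing was correct.
    f = (1 + b2) * precision * recall / denom if denom else 0.0
    return precision, recall, f

# Toy counts: 40 correct edits, 10 spurious edits, 40 missed errors.
p, r, f = f_beta(tp=40, fp=10, fn=40)
print(f"P={p:.3f} R={r:.3f} F0.5={f:.3f}")
```

With these toy counts, precision is 0.8 and recall is 0.5, and F0.5 lands closer to precision than a balanced F1 would, which is the intended effect of the weighting.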

Evaluation for bias reduction examines how well the system handles masculine, feminine, and singular "they" usage. Samples are grouped by pronoun type, then system outputs are measured for error rates, false corrections, and missed corrections. Comparisons before and after augmentation show improvements or regressions in handling each group.
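One way to organize this grouped evaluation is sketched below. It assumes each test sentence has already been scored and tagged with a pronoun group; the record layout `(group, tp, fp, fn)`, the helper names, and the counts are illustrative, not taken from any particular scorer or experiment.

```python
from collections import defaultdict

def f05(tp, fp, fn):
    """F0.5 from edit counts; returns 0.0 when undefined (assumed convention)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = 0.25 * precision + recall
    return 1.25 * precision * recall / denom if denom else 0.0

def per_group_f05(records):
    """Sum counts per pronoun group, then score each group once."""
    totals = defaultdict(lambda: [0, 0, 0])
    for group, tp, fp, fn in records:
        totals[group][0] += tp
        totals[group][1] += fp
        totals[group][2] += fn
    return {group: f05(*counts) for group, counts in totals.items()}

def max_gap(scores):
    """Largest difference between any two groups; smaller means less disparity."""
    return max(scores.values()) - min(scores.values())

# Toy per-sentence counts, for illustration only.
records = [
    ("masculine", 5, 1, 1), ("masculine", 3, 1, 1),
    ("feminine", 6, 4, 4),
    ("singular_they", 4, 4, 6),
]
scores = per_group_f05(records)
for group, score in scores.items():
    print(f"{group}: F0.5={score:.3f}")
print(f"max gap: {max_gap(scores):.3f}")
```

Running the same report on the model before and after augmentation gives a direct comparison: the per-group scores should rise or hold steady, and the gap between groups should shrink.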

A Python snippet for generating augmented data:

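```python
import spacy

nlp = spacy.load("en_core_web_sm")

# Lowercase lookup table. "his" -> "her" assumes the possessive-determiner
# reading ("his book"), not the standalone pronoun ("the book is his").
MASCULINE_TO_FEMININE = {"he": "she", "his": "her", "him": "her", "himself": "herself"}

def augment_to_feminine(text):
    doc = nlp(text)
    pieces = []
    for token in doc:
        replacement = MASCULINE_TO_FEMININE.get(token.text.lower())
        if replacement is None:
            # Keep the token together with its original trailing whitespace.
            pieces.append(token.text_with_ws)
            continue
        # Preserve the capitalization of the original token.
        if token.text[0].isupper():
            replacement = replacement.capitalize()
        # Re-attach the trailing whitespace so punctuation stays in place.
        pieces.append(replacement + token.whitespace_)
    return "".join(pieces)

sentence = "He gave his book to him."
augmented = augment_to_feminine(sentence)
print(augmented)  # She gave her book to her.
```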

Translating such logic to handle singular "they" is trickier. Proper subject-verb agreement must match typical usage. The system sees both original and augmented examples during training. This coverage encourages the model to generalize to unseen sentences containing varied pronoun usage.
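A minimal sketch of the singular "they" case is shown below, assuming a tiny hand-written agreement table instead of a parser. The `PRONOUNS` and `AGREEMENT` tables and the function name are hypothetical, and the rule only re-inflects a listed verb that directly follows "he". A real pipeline would need dependency parsing and a morphological inflector to cover intervening adverbs and verbs outside the table, and should skip sentences it cannot transform safely.

```python
import re

# Hypothetical lookup tables; "themselves" is one of two attested reflexives.
PRONOUNS = {"he": "they", "him": "them", "his": "their", "himself": "themselves"}
# Third-person-singular verb forms mapped to the form "they" requires.
AGREEMENT = {"is": "are", "was": "were", "has": "have", "does": "do",
             "goes": "go", "wants": "want"}
# Split into word tokens and runs of non-word characters (spaces, punctuation).
TOKEN = re.compile(r"\w+(?:'\w+)?|\W+")

def match_case(new, old):
    return new.capitalize() if old[0].isupper() else new

def augment_to_singular_they(text):
    out = []
    fix_next_verb = False  # True right after a swapped subject "he"
    for tok in TOKEN.findall(text):
        lower = tok.lower()
        if not tok[0].isalnum():
            out.append(tok)
            if tok.strip():  # punctuation breaks subject-verb adjacency
                fix_next_verb = False
            continue
        if lower in PRONOUNS:
            out.append(match_case(PRONOUNS[lower], tok))
            fix_next_verb = lower == "he"
        elif fix_next_verb and lower in AGREEMENT:
            out.append(match_case(AGREEMENT[lower], tok))
            fix_next_verb = False
        else:
            out.append(tok)
            fix_next_verb = False
    return "".join(out)

print(augment_to_singular_they("He is late, and his sister was waiting for him."))
# They are late, and their sister was waiting for them.
```

Note that "was" is left unchanged because its subject is "sister", not the swapped pronoun. Restricting agreement changes to the verb governed by the replaced subject is the key design constraint, however the subject-verb link is detected.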

Deployment considerations

Trained systems must integrate seamlessly into real pipelines. Batch processing or streaming mode changes little at inference time. Data distribution shifts when real user input appears. Monitoring real-time feedback is essential. If post-deployment performance for certain pronoun types degrades, additional targeted augmentation or fine-tuning might follow.

Ensuring quality

Regular regression testing is key. Some augmented data could introduce synthetic errors. Human evaluation can catch subtle mistakes where data augmentation does not reflect real usage. Continuous iteration fine-tunes transformations or expansions to maintain precision on standard grammar tasks and avoid missing new corner cases.
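A regression check of this kind can be as simple as comparing per-slice scores between the current model and a candidate. The sketch below is one possible gate; the function name, the slice names, the tolerance, and the scores are all illustrative assumptions rather than measured results.

```python
def find_regressions(baseline, candidate, tolerance=0.005):
    """Return slices whose score dropped by more than `tolerance`.

    baseline, candidate: dicts mapping a slice name to a score such as F0.5.
    A slice missing from `candidate` is treated as a score of 0.0.
    """
    regressions = {}
    for name, old in baseline.items():
        new = candidate.get(name, 0.0)
        if old - new > tolerance:
            regressions[name] = (old, new)
    return regressions

# Toy scores, for illustration only.
baseline = {"overall": 0.62, "masculine": 0.64, "feminine": 0.58, "singular_they": 0.41}
candidate = {"overall": 0.621, "masculine": 0.60, "feminine": 0.63, "singular_they": 0.55}

failed = find_regressions(baseline, candidate)
print(failed if failed else "no regressions")
```

In this toy run the candidate improves the feminine and singular "they" slices but drops on the masculine slice, which is exactly the kind of trade-off a gate should surface before release.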

Follow-up question 1

How would you detect and measure undesirable biases in the system output when handling sentences with masculine, feminine, and singular "they" pronouns?

Answer:

Measuring biases involves examining miscorrection rates per pronoun category. Splitting a labeled test set into subsets (masculine, feminine, singular "they") and comparing error metrics reveals discrepancies. Tracking precision and recall for each category shows whether the system systematically fails on one group. Comparing numeric results before and after augmentation quantifies progress. Verifying misclassification patterns (e.g., changing "she" to "he") or repeated misgendering highlights persistent bias. Adding domain-specific pronouns or context-specific expansions refines the analysis.
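One concrete signal for misgendering is a change in pronoun category between the input and the system output. The sketch below counts pronouns per category on both sides and reports any difference. The `GROUPS` table and function names are hypothetical, and the check cannot tell singular "they" from plural "they", so flagged cases still need human review.

```python
import re
from collections import Counter

# Hypothetical pronoun-to-category table.
GROUPS = {
    "he": "masculine", "him": "masculine", "his": "masculine", "himself": "masculine",
    "she": "feminine", "her": "feminine", "hers": "feminine", "herself": "feminine",
    "they": "they", "them": "they", "their": "they", "theirs": "they",
    "themself": "they", "themselves": "they",
}

def pronoun_counts(text):
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(GROUPS[w] for w in words if w in GROUPS)

def pronoun_flips(source, output):
    """Per-category change in pronoun count; an empty dict means no flip."""
    before, after = pronoun_counts(source), pronoun_counts(output)
    return {g: after[g] - before[g]
            for g in set(before) | set(after)
            if after[g] != before[g]}

print(pronoun_flips("She forget her keys.", "He forgot his keys."))
print(pronoun_flips("They was late.", "They were late."))
```

The first call reports two feminine pronouns lost and two masculine pronouns gained, which indicates misgendering. The second returns an empty dict because the correction left the pronoun alone. Aggregating these flips per category over a test set shows whether one group is rewritten more often than the others.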

Follow-up question 2

What if the augmented data over-represents rare forms of singular "they" usage? How would you maintain a realistic data distribution?

Answer:

Over-sampling rare forms might distort real-world frequency. Reducing the augmentation ratio or weighting the training data accordingly maintains a balanced distribution. Sometimes weighting examples in the loss function ensures the model sees enough singular "they" examples without overshadowing typical usage. Real usage statistics, if available, guide the proportion of augmented sentences. Occasionally, dynamic sampling or staged training merges the dataset in controlled steps. Validating on a holdout set that reflects real distribution confirms that performance remains strong across all pronoun types.
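Controlling the augmentation ratio can be sketched as a sampling step that keeps every original example and adds only enough augmented ones to reach a target share. The function name `mix_with_ratio` and the 10% share used below are assumptions for illustration; the right share should come from real usage statistics where available.

```python
import random

def mix_with_ratio(original, augmented, target_share, seed=0):
    """Keep all original examples and sample augmented ones so that they
    make up roughly `target_share` of the final training set."""
    if not 0 <= target_share < 1:
        raise ValueError("target_share must be in [0, 1)")
    # Solve n_aug / (n_orig + n_aug) = target_share for n_aug.
    wanted = round(target_share * len(original) / (1 - target_share))
    wanted = min(wanted, len(augmented))  # cannot sample more than exist
    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    mixed = list(original) + rng.sample(list(augmented), wanted)
    rng.shuffle(mixed)
    return mixed

original = [f"orig-{i}" for i in range(90)]
augmented = [f"aug-{i}" for i in range(50)]
train = mix_with_ratio(original, augmented, target_share=0.10)
n_aug = sum(1 for example in train if example.startswith("aug-"))
print(len(train), n_aug)
```

Sampling rather than loss weighting keeps the training loop unchanged. Weighting examples in the loss is the alternative when every augmented sentence should still be seen at least once.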

Follow-up question 3

How could you adapt the augmentation approach for languages that have more than two gendered pronoun forms?

Answer:

Extra pronoun categories involve listing each relevant form and applying the same substitution logic, extended for grammatical rules specific to the language. Synthetic generation must respect morphological changes or declensions. Reviewing standard reference corpora or consulting linguistic experts clarifies correct transformations. Testing each pronoun type with multiple morphological cases ensures coverage. If the language requires agreement beyond pronouns (e.g., adjectives and nouns that change with gender), the augmentation script must handle those additional inflections.

Follow-up question 4

How would you handle verbs and adjectives that change forms based on the subject's gender in other languages?

Answer:

Identifying gender-dependent verb or adjective forms requires a morphological analyzer or part-of-speech tagger. The script modifies not only pronouns but also associated verbs or adjectives. Linguistic databases help map original forms to their correct variations. Training data and generation rules must align with the grammar structure of the target language. Parallel transformations for each grammatical aspect keep the final text coherent. Confirming correctness with human feedback is crucial, since automated tools can miss subtle morphological constraints.
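The idea of transforming agreeing words together with the pronoun can be illustrated with a toy Spanish example. This is a sketch under strong assumptions: the two lookup tables are hand-written and hypothetical, and the code swaps every listed adjective in a sentence that contains the pronoun, whereas a real system would use a morphological analyzer and a parser to change only the words that agree with the replaced subject.

```python
import re

# Hypothetical hand-written lexicon covering a few Spanish forms.
PRONOUNS = {"él": "ella"}
ADJECTIVES = {"cansado": "cansada", "contento": "contenta", "listo": "lista"}
# Split into word tokens and runs of non-word characters.
WORD = re.compile(r"\w+|\W+")

def to_feminine_es(sentence):
    """Swap the subject pronoun and listed adjectives together.

    Returns None when the sentence has no pronoun to swap, so the caller
    can skip it instead of emitting an unchanged duplicate.
    """
    tokens = WORD.findall(sentence)
    if not any(tok.lower() in PRONOUNS for tok in tokens):
        return None
    out = []
    for tok in tokens:
        new = PRONOUNS.get(tok.lower()) or ADJECTIVES.get(tok.lower())
        if new is None:
            out.append(tok)
        else:
            out.append(new.capitalize() if tok[0].isupper() else new)
    return "".join(out)

print(to_feminine_es("Él está cansado."))
# Ella está cansada.
```

Changing only the pronoun would yield "Ella está cansado.", which is itself a grammatical error. This is why pronoun-only substitution is unsafe for training data in languages with gender agreement.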