ML Case-study Interview Question: LLM-Driven Engineering Analytics for Software Team Productivity and Oversight.


Case-Study question

A large technology organization faces an urgent need to improve the productivity and oversight of its software development teams. Due to restructuring, many engineering managers now lead much bigger groups than before. They must keep track of potential bottlenecks, detect anomalies in development pipelines, and provide timely status updates to stakeholders without compromising on quality. Your challenge is to design a solution that ingests data from GitHub, Jira, and other developer tools and uses a Large Language Model (LLM) to produce data insights, incident identification, sprint analysis, and automated documentation for both technical and non-technical audiences. Propose an end-to-end plan covering data ingestion, modeling approach, user-facing features, privacy/security measures, and how you would measure success.

Detailed Solution

Data Integration and ETL

Start by creating robust data ingestion layers for GitHub pull requests, Jira tickets, and any relevant metadata from source control systems. Store all items in a structured database or data lake. Implement real-time pipelines that update as soon as a pull request merges or a Jira ticket changes status. Include metrics like commit frequency, code review times, and story point completion progress.
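As one illustration of the metrics mentioned above, here is a minimal sketch that uses time from open to merge as a rough proxy for code review time. The `created_at` and `merged_at` field names follow the shape of GitHub pull request objects, but the sample records and function names are hypothetical.

```python
from datetime import datetime
from statistics import median
from typing import Optional


def parse_ts(value: str) -> datetime:
    # Normalize a trailing "Z" so older Python versions can parse the timestamp.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def review_hours(pr: dict) -> Optional[float]:
    """Hours from PR creation to merge, or None if the PR is not merged."""
    if not pr.get("merged_at"):
        return None
    delta = parse_ts(pr["merged_at"]) - parse_ts(pr["created_at"])
    return delta.total_seconds() / 3600


def median_review_hours(prs: list) -> Optional[float]:
    """Median open-to-merge time across merged PRs, or None if none are merged."""
    durations = [h for h in map(review_hours, prs) if h is not None]
    return median(durations) if durations else None


# Hypothetical sample data: two merged PRs (6 h and 24 h) and one still open.
sample_prs = [
    {"number": 1, "created_at": "2024-01-01T09:00:00Z", "merged_at": "2024-01-01T15:00:00Z"},
    {"number": 2, "created_at": "2024-01-02T09:00:00Z", "merged_at": "2024-01-03T09:00:00Z"},
    {"number": 3, "created_at": "2024-01-03T09:00:00Z", "merged_at": None},
]
print(median_review_hours(sample_prs))
```

A median is used rather than a mean so that a single long-lived pull request does not dominate the metric.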

Large Language Model Choice

Use a foundation model that can process queries in natural language, even if the underlying data is fragmented. Fine-tune or prompt-engineer it to parse queries about sprint status, upcoming deadlines, incidents, and DevOps tickets. Feed it textual summaries of each ticket, pull request descriptions, and any relevant conversation logs so it can generate context-aware responses.

LLM-Powered Functionalities

Use the model to convert raw data into concise status updates. When an engineering manager requests a sprint analysis, let the system aggregate the issues, classify them by severity, and highlight anomalies. Incorporate an intelligent writing assistant that automatically drafts release notes or user-facing announcements. Generate these by synthesizing details from tickets and pull requests, then adapt the tone or complexity level as needed for different audiences.
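The aggregation step that precedes a sprint analysis could look roughly like the sketch below, which counts issues by severity and lists stale ones so the LLM receives a compact digest rather than raw tickets. The issue fields (`severity`, `status`, `days_in_status`) and the five-day staleness threshold are assumptions for illustration.

```python
from collections import Counter


def sprint_digest(issues: list, stale_days: int = 5) -> str:
    """Build a short text digest: issue counts per severity plus stale issue keys."""
    by_severity = Counter(issue["severity"] for issue in issues)
    # An issue is treated as stale if it is unfinished and has not changed status recently.
    stale = [
        issue["key"]
        for issue in issues
        if issue["status"] != "done" and issue["days_in_status"] >= stale_days
    ]
    lines = [f"{severity}: {count}" for severity, count in sorted(by_severity.items())]
    if stale:
        lines.append("stale: " + ", ".join(stale))
    return "\n".join(lines)


# Hypothetical sprint contents.
sample_issues = [
    {"key": "PROJ-1", "severity": "high", "status": "done", "days_in_status": 1},
    {"key": "PROJ-2", "severity": "low", "status": "in_progress", "days_in_status": 7},
    {"key": "PROJ-3", "severity": "low", "status": "todo", "days_in_status": 2},
]
print(sprint_digest(sample_issues))
```

Computing the counts in code and passing them to the model as context keeps the arithmetic out of the LLM's hands.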

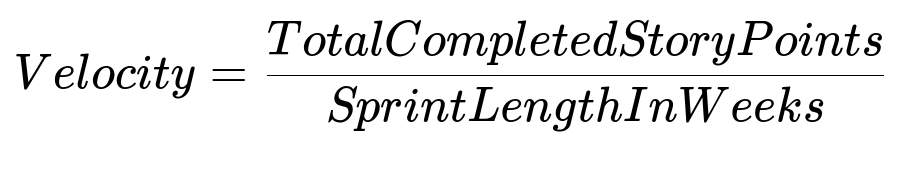

Core Formula for Sprint Velocity

This approach often includes tracking sprint velocity to measure throughput. A common velocity formula is shown below.

$$
\text{Velocity} = \frac{\text{TotalCompletedStoryPoints}}{\text{SprintLengthInWeeks}}
$$

Here TotalCompletedStoryPoints is the sum of completed story points in a single sprint, and SprintLengthInWeeks is the duration of that sprint. This helps managers estimate how much work their teams can accomplish in upcoming sprints and detect potential scope issues.
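Using the two quantities defined above, a minimal sketch of the calculation divides completed story points by sprint length in weeks. The function names and the simple averaging forecast are illustrative assumptions, not a prescribed method.

```python
from statistics import mean


def sprint_velocity(completed_story_points: list, sprint_length_weeks: float) -> float:
    """Story points completed per week for a single sprint."""
    if sprint_length_weeks <= 0:
        raise ValueError("sprint_length_weeks must be positive")
    return sum(completed_story_points) / sprint_length_weeks


def forecast_capacity(recent_velocities: list, upcoming_sprint_weeks: float) -> float:
    """Naive forecast: average recent weekly velocity times the next sprint's length."""
    if not recent_velocities:
        raise ValueError("need at least one past velocity")
    return mean(recent_velocities) * upcoming_sprint_weeks


# Hypothetical sprint: four completed stories over a two-week sprint.
velocity = sprint_velocity([3, 5, 8, 2], sprint_length_weeks=2)
print(velocity)
print(forecast_capacity([velocity, 11.0], upcoming_sprint_weeks=2))
```

Dividing by sprint length makes sprints of different durations comparable, which matters when teams do not share a cadence.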

Security and Privacy

Use an LLM infrastructure that keeps data self-contained. Deploy the model in a way that does not expose proprietary code or confidential team messages. Host it within a controlled environment that aligns with the organization’s data handling standards. Mask sensitive information before sending it to the model if needed, and ensure logs are properly encrypted.
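The masking step could be sketched as a pre-processing function applied to text before it reaches the model. The two regular expressions below are deliberately simple assumptions; a production system would likely need a broader, organization-specific set of patterns.

```python
import re

# Simplified patterns, assumed for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SECRET_RE = re.compile(r"(?i)\b(api[_-]?key|token|password)\s*[:=]\s*\S+")


def mask_sensitive(text: str) -> str:
    """Replace email addresses and key/value style secrets with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    # Keep the key name so the sentence stays readable, but drop the value.
    return SECRET_RE.sub(lambda m: f"{m.group(1)}=[REDACTED]", text)


print(mask_sensitive("Ping alice@example.com, token: abc123 expired"))
```

Regex masking catches only the patterns it was written for, so it works best as one layer alongside access controls and a self-hosted deployment.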

Implementation Considerations

Connect your ingestion pipelines to stream updates into a central data store. Use a job scheduler to process them in small batches. Ensure you have data transformation functions that standardize ticket statuses and unify them under consistent naming conventions. Train or prompt the model with real examples of sprints and tickets so it learns the organization’s domain-specific terms. Provide a user interface that lets managers type free-form questions about project status or request summary updates. For advanced analytics, combine the LLM with a data warehouse query to provide real-time metrics on throughput or identify stuck issues.
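A transformation function that standardizes ticket statuses might look like the following sketch. The mapping table and the three canonical values (`todo`, `in_progress`, `done`) are assumptions; each organization would define its own.

```python
# Hypothetical mapping from tool-specific status labels to canonical names.
CANONICAL_STATUS = {
    "to do": "todo",
    "open": "todo",
    "backlog": "todo",
    "in progress": "in_progress",
    "in review": "in_progress",
    "doing": "in_progress",
    "done": "done",
    "closed": "done",
    "resolved": "done",
    "merged": "done",
}


def normalize_status(raw: str) -> str:
    """Map a raw status label to a canonical one, or 'unknown' if unmapped."""
    # Lowercase, treat "_" and "-" as spaces, and collapse repeated whitespace.
    key = " ".join(raw.strip().lower().replace("_", " ").replace("-", " ").split())
    return CANONICAL_STATUS.get(key, "unknown")


print(normalize_status("In-Progress"))
print(normalize_status("  CLOSED "))
print(normalize_status("Blocked by legal"))
```

Returning `unknown` instead of raising lets the pipeline keep running while unmapped statuses are surfaced for review.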

Measuring Success

Track incident response time, stand-up meeting durations, documentation completeness, and release note turnaround time. Compare these metrics before and after deploying the system to quantify productivity gains. Monitor usage logs to see how often managers rely on automated updates and how quickly they can identify high-priority incidents. Track team satisfaction by gathering feedback on the accuracy and clarity of LLM-generated content.

Follow-up question 1

How would you handle the risk of inaccurate outputs or hallucinations from the LLM when generating critical updates?

Answer: Include a validation layer that cross-checks every numeric claim in critical text against the source data. For instance, if the LLM summarizes that 20 tickets are completed, verify that count with a direct database query. If the numbers differ, fall back to a more conservative template populated from the verified data. Also give managers an option to confirm or edit the generated output. Build a feedback loop that allows corrections to be fed back into the model or used to improve future prompts.
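A minimal sketch of such a validation check follows. It assumes the verified count has already been fetched from the database and is passed in as `actual_completed`; the regular expression and fallback wording are illustrative and cover only one phrasing of the claim.

```python
import re
from typing import Optional

# Matches phrasings such as "20 tickets completed" or "20 tickets were completed".
CLAIM_RE = re.compile(r"(\d+)\s+tickets?\s+(?:are\s+|were\s+)?completed", re.IGNORECASE)


def extract_completed_count(summary: str) -> Optional[int]:
    """Pull the claimed completed-ticket count out of generated text, if present."""
    match = CLAIM_RE.search(summary)
    return int(match.group(1)) if match else None


def validated_summary(llm_summary: str, actual_completed: int) -> str:
    """Return the LLM text only if its claimed count matches the verified count."""
    claimed = extract_completed_count(llm_summary)
    if claimed == actual_completed:
        return llm_summary
    # Conservative fallback: a fixed template filled from verified data only.
    return f"{actual_completed} tickets completed this sprint. (Generated from tracker data.)"


print(validated_summary("Great sprint: 20 tickets were completed.", actual_completed=20))
print(validated_summary("Great sprint: 20 tickets were completed.", actual_completed=18))
```

Note that a summary with no recognizable count also triggers the fallback, which errs on the side of caution for critical updates.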

Follow-up question 2

What strategies can ensure timely anomaly detection for site reliability engineering teams?

Answer: Include an event-driven microservice that filters commits and incident logs in near real time. Integrate metrics like error counts and build failures into your ingestion layer. Set up triggers that automatically prompt the LLM for an analysis whenever error rates exceed certain thresholds. Provide managers with immediate notifications if the system detects unusual commit activity or repeated revert commits. Use a rules-based approach alongside the LLM so clear-cut anomalies are flagged without waiting for model-based interpretation.
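The rules-based side of this design can be sketched as a pair of threshold checks that run before any model call. The thresholds and flag names are assumptions, and the revert check relies on commit subjects that begin with `Revert "`, which is the default form produced by `git revert`.

```python
def count_reverts(commit_messages: list) -> int:
    """Count commits whose subject starts with the default git revert prefix."""
    return sum(1 for message in commit_messages if message.startswith('Revert "'))


def detect_anomalies(
    error_count: int,
    request_count: int,
    commit_messages: list,
    error_threshold: float = 0.05,
    revert_threshold: int = 2,
) -> list:
    """Return a list of rule-based anomaly flags for one monitoring window."""
    flags = []
    if request_count > 0 and error_count / request_count > error_threshold:
        flags.append("high_error_rate")
    if count_reverts(commit_messages) >= revert_threshold:
        flags.append("repeated_reverts")
    return flags


# Hypothetical monitoring window.
window_commits = ['Revert "Add cache"', "Fix typo", 'Revert "Add cache v2"']
print(detect_anomalies(12, 100, window_commits))
```

Any non-empty result can notify managers immediately and, in parallel, trigger an LLM analysis of the surrounding context.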

Follow-up question 3

How would you adapt your approach if the team uses different project management tools with inconsistent data structures?

Answer: Design your ingestion logic to map each tool’s fields into a universal schema. Maintain a translator component that converts each distinct data format into a canonical ticket object. Use flexible data pipelines with well-defined intermediate data formats so you can plug in new tools with minimal changes. Test the LLM with each integrated source to confirm that the summarized context reflects the unique fields of that tool (such as priority levels, custom statuses, or time tracking entries).
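One way to sketch the translator component is a registry of per-tool functions that all return the same canonical ticket object. The Jira field paths below are loosely modeled on its REST issue payload and should be treated as assumptions; `flat_tracker` is an entirely hypothetical second tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass(frozen=True)
class CanonicalTicket:
    source: str
    key: str
    title: str
    status: str
    priority: Optional[str] = None


def from_jira(raw: dict) -> CanonicalTicket:
    # Assumed nested layout: {"key": ..., "fields": {"summary", "status", "priority"}}.
    fields = raw["fields"]
    priority = (fields.get("priority") or {}).get("name")
    return CanonicalTicket("jira", raw["key"], fields["summary"], fields["status"]["name"], priority)


def from_flat_tracker(raw: dict) -> CanonicalTicket:
    # Hypothetical tool with a flat record layout.
    return CanonicalTicket("flat_tracker", str(raw["id"]), raw["name"], raw["state"], raw.get("prio"))


TRANSLATORS: Dict[str, Callable[[dict], CanonicalTicket]] = {
    "jira": from_jira,
    "flat_tracker": from_flat_tracker,
}


def to_canonical(source: str, raw: dict) -> CanonicalTicket:
    """Dispatch a raw record to the translator registered for its source tool."""
    if source not in TRANSLATORS:
        raise ValueError(f"no translator registered for {source!r}")
    return TRANSLATORS[source](raw)


jira_raw = {"key": "PROJ-7", "fields": {"summary": "Fix login", "status": {"name": "Done"}, "priority": None}}
print(to_canonical("jira", jira_raw))
print(to_canonical("flat_tracker", {"id": 42, "name": "Fix login", "state": "closed", "prio": "P1"}))
```

Supporting a new tool then means adding one function and one registry entry, with no change to downstream consumers.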

Follow-up question 4

What measures would you take to ensure sensitive information does not leak when generating external release notes?

Answer: Implement content filters that detect references to confidential topics before final text generation. Provide high-level “public-friendly” abstract text for external notes, stripping out internal references like technical debt tasks or private chat logs. Configure the model to follow instructions that mark certain fields as restricted. Route external announcements through a final approval stage, letting product managers confirm the content. Insert explicit classification rules to block or redact certain categories of information before the LLM processes them.
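The classification rules that run before the LLM sees any data could be sketched as a label-based filter combined with a field allow-list. The restricted label names and ticket fields here are hypothetical.

```python
# Hypothetical labels that mark a ticket as unsuitable for external notes.
RESTRICTED_LABELS = {"internal", "security", "tech-debt"}


def public_release_items(tickets: list) -> list:
    """Drop restricted tickets and forward only the title of the rest."""
    public = []
    for ticket in tickets:
        if RESTRICTED_LABELS & set(ticket.get("labels", [])):
            continue  # blocked before any prompt is built
        # Allow-list: comments, assignees, and chat excerpts are never copied over.
        public.append({"title": ticket["title"]})
    return public


sample_tickets = [
    {"title": "Faster search results", "labels": ["feature"], "comments": ["ping me on chat"]},
    {"title": "Refactor legacy auth module", "labels": ["tech-debt"]},
    {"title": "Dark mode", "labels": []},
]
print(public_release_items(sample_tickets))
```

Using an allow-list of fields rather than a block-list means newly added internal fields stay out of the prompt by default.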

Follow-up question 5

How do you see this system scaling as the organization onboards more teams with greater amounts of data?

Answer: Separate ingestion, transformation, LLM inference, and front-end layers into services with horizontal scaling. Balance the load across multiple replicas of each service. Cache frequently requested analytics or text summaries. Use asynchronous job queues for data transformation steps, ensuring that spikes in usage do not stall real-time queries. Implement multi-region deployment for high availability. Keep a versioned model registry, so updated or more efficient model variants can replace older ones smoothly.
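Caching of frequently requested summaries can be illustrated with a small in-process time-to-live cache. This is a single-process sketch under assumed names; a multi-replica deployment would more likely place a shared cache service in front of the inference layer.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache values for a fixed number of seconds, recomputing after expiry."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # still fresh: skip the expensive call
        value = compute()
        self._store[key] = (now, value)
        return value


# Hypothetical usage: the lambda stands in for an expensive LLM inference call.
cache = TTLCache(ttl_seconds=60)
print(cache.get_or_compute("sprint-42-summary", lambda: "Sprint 42: 18 tickets done"))
print(cache.get_or_compute("sprint-42-summary", lambda: "never called while fresh"))
```

The time-to-live bounds how stale a summary can be, so it should be chosen relative to how quickly the underlying tickets change.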